Zero-Trust Security Revolution: Verify Everything Always

TL;DR: Graphics Processing Units (GPUs), originally designed for gaming, have become essential for AI and scientific computing due to their massive parallel processing capabilities. This transformation, enabled by NVIDIA's CUDA platform in 2006, has revolutionized everything from training ChatGPT to solving protein folding with AlphaFold, while creating a global GPU shortage.

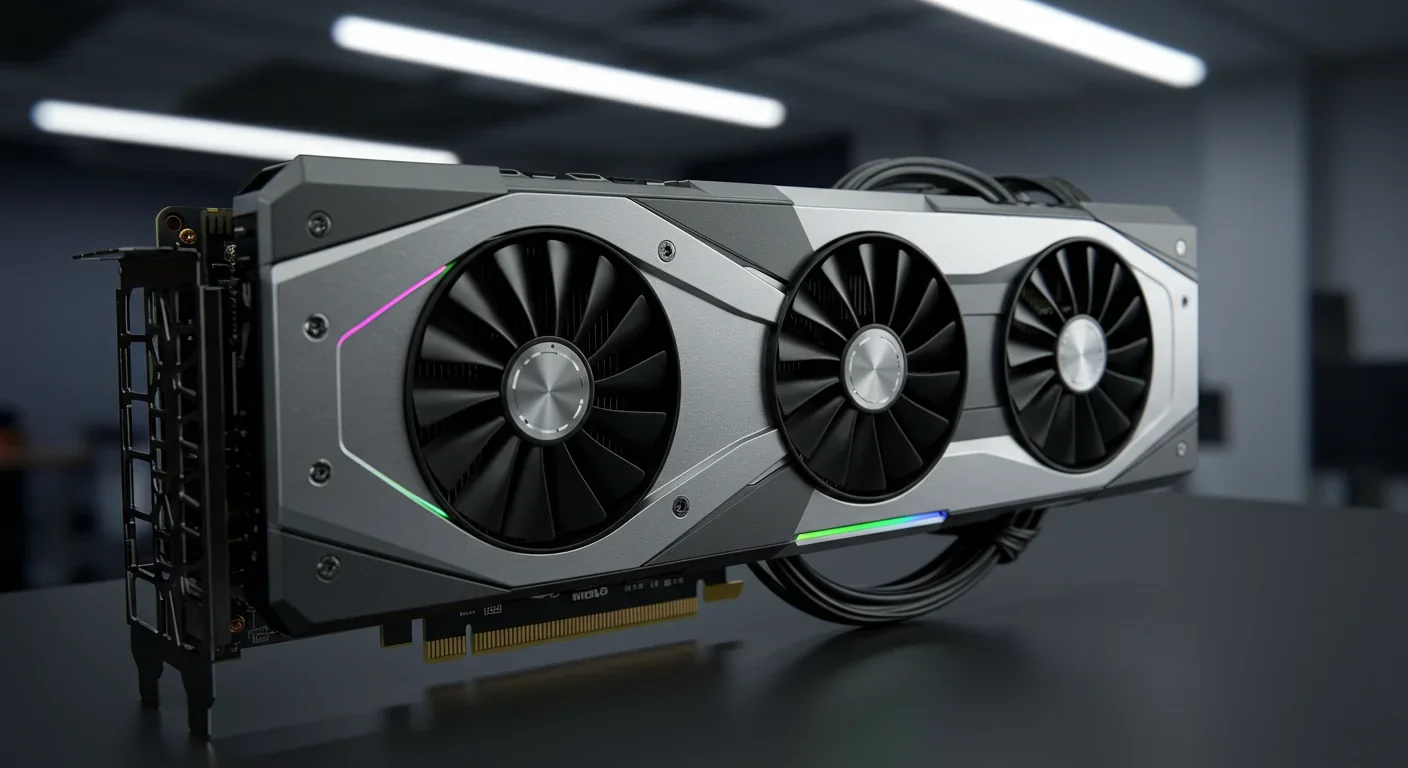

What started as a solution for rendering 3D explosions in video games has quietly become the most consequential computing breakthrough of the century. Graphics Processing Units (GPUs), once dismissed as specialized hardware for gamers and graphic designers, now power everything from ChatGPT's conversational abilities to protein folding simulations that are revolutionizing drug discovery. The transformation wasn't obvious at first—in fact, it took nearly a decade after GPUs became programmable before researchers realized they'd been sitting on the world's most powerful parallel computing architecture.

Today, the race to acquire GPUs has reshaped the global semiconductor industry, with OpenAI's Sam Altman delaying model releases due to GPU scarcity and the AI GPU market reaching $17.6 billion in 2024. But to understand how we got here requires going back to when computers were designed for fundamentally different tasks.

The fundamental difference between CPUs and GPUs isn't just about speed—it's about philosophy. Traditional CPUs are built for versatility, designed to handle any task a computer might throw at them, from word processing to database queries. They achieve this through sophisticated branch prediction, large caches, and complex instruction sets that make them excellent at sequential tasks requiring lots of decision-making.

GPUs took the opposite approach. Originally designed to render graphics, they needed to perform one specific operation: calculating the color and position of millions of pixels simultaneously. This led to a radically different architecture—one that sacrificed versatility for raw parallel processing power.

The numbers tell a dramatic story: A high-end consumer CPU has 16 cores, while NVIDIA's RTX 4090 GPU packs 16,384 CUDA cores—a thousand-fold advantage in parallel processing capability.

The numbers tell the story dramatically. A high-end consumer CPU typically has 16 cores, while NVIDIA's consumer RTX 4090 GPU packs 16,384 CUDA cores. The company's H100 data center GPU pushes that to 18,432 cores. That's not just an incremental improvement; it's a thousand-fold advantage in parallel processing capability.

But core count alone doesn't explain GPU dominance. Modern GPUs also benefit from specialized Tensor Cores designed specifically for matrix operations that are fundamental to neural networks. These cores can perform mixed-precision calculations at speeds CPUs simply can't match. Add in High Bandwidth Memory (HBM) that can feed data to thousands of cores simultaneously, and you have a machine purpose-built for the mathematical operations AI requires.

The performance difference in practice is staggering. Benchmarks show GPUs achieving 145x speedups on simple matrix operations compared to CPUs. For training a convolutional neural network, the gap widens to multiple orders of magnitude—what takes hours on a GPU could take weeks on a CPU.

The story of how GPUs became AI accelerators begins with a community of researchers who refused to accept that expensive graphics cards should only render video games. In the early 2000s, scientists noticed that GPUs were performing billions of floating-point operations per second—the same mathematical operations needed for scientific simulations, weather modeling, and financial analysis.

The problem was access. Early GPUs were designed exclusively for graphics, with programming interfaces that required framing every computation as a graphics rendering problem. Researchers had to disguise physics simulations as texture mapping operations, writing convoluted code that essentially tricked the GPU into doing scientific computing while pretending to render graphics.

"Everything changed in 2006 when NVIDIA introduced CUDA, a programming model that let developers harness GPU power directly without graphics pretense."

— NVIDIA Developer Blog

Everything changed in 2006 when NVIDIA introduced CUDA (Compute Unified Device Architecture), a programming model that let developers harness GPU power directly without graphics pretense. For the first time, scientists could write relatively straightforward code that unleashed thousands of parallel cores on their problems.

The timing proved crucial. Just as deep learning researchers were discovering that larger neural networks performed better, CUDA enabled them to train those networks in reasonable timeframes. Training that would have taken months on CPUs suddenly took weeks on GPUs. The entire field of modern AI might have stalled without this convergence of algorithmic insight and hardware accessibility.

The GPGPU (General-Purpose computing on Graphics Processing Units) movement quickly expanded beyond AI. Molecular dynamics simulations, which model how atoms and molecules move and interact, saw dramatic speedups. Climate scientists began running higher-resolution weather models. Financial firms accelerated complex risk calculations. GPUs were no longer just graphics chips—they'd become the world's most accessible supercomputers.

The alignment between GPU architecture and neural network requirements borders on serendipitous. At their core, neural networks are mathematical structures built from matrix multiplications—the same operations GPUs were optimized to perform for rendering 3D graphics.

Consider what happens when training a neural network. During each training iteration, the network processes millions of parameters through repeated matrix multiplications, computes gradients via backpropagation (more matrix math), and updates weights across the entire network. Every single parameter can be updated independently, which is the textbook definition of an embarrassingly parallel problem.

This parallelism extends to batch processing. Instead of feeding one training example at a time to a neural network, modern frameworks process hundreds or thousands simultaneously. Each example's computation is independent, meaning a GPU can calculate gradients for an entire batch in the time a CPU would handle a handful of examples.

The memory bandwidth advantage proves equally important. Neural networks constantly shuttle data between memory and processing cores. High Bandwidth Memory in modern GPUs can deliver over 3 terabytes per second to processing cores, compared to roughly 100 gigabytes per second for typical CPU memory. When you're moving billions of weights and activations, that 30x bandwidth advantage translates directly to training speed.

Recent GPU generations feature specialized Tensor Cores that perform mixed-precision matrix operations in a single clock cycle, accelerating neural network training by another 8-10x compared to standard GPU cores.

Recent GPU generations have pushed this specialization further. NVIDIA's Tensor Cores perform mixed-precision matrix operations in a single clock cycle, accelerating neural network training by another 8-10x compared to standard GPU cores. The H200 GPUs combined with optimized software have achieved record-breaking performance on large language model inference, processing queries faster than users can read the responses.

In December 2020, DeepMind's AlphaFold system accomplished something many scientists considered decades away: accurately predicting protein structures from amino acid sequences. The breakthrough solved a problem that had stymied biologists for 50 years and would have been impossible without GPU-accelerated AI.

Proteins are the molecular machines of life, but their function depends entirely on their three-dimensional shape. A protein's sequence of amino acids is easy to determine through genetic analysis, but predicting how that chain will fold into a functional structure requires modeling astronomical numbers of possible configurations and molecular interactions. Traditional experimental methods like X-ray crystallography take months or years per protein, and the human genome alone encodes over 20,000 proteins.

AlphaFold approached the problem by training deep neural networks on all known protein structures, learning patterns that govern how amino acid sequences fold. But training networks large enough to capture these patterns required processing millions of protein examples through billions of parameters—a computational task that would have been infeasible on CPUs.

"Latest-generation GPUs accelerate protein structure inference over 100x compared to previous systems, enabling predictions in minutes that would take hours on CPUs."

— NVIDIA Developer Blog

The impact has been transformative. NVIDIA reports that latest-generation GPUs accelerate protein structure inference over 100x compared to previous systems. GPU-accelerated versions of AlphaFold2 can predict structures in minutes that would take hours on CPUs. DeepMind has since predicted structures for virtually every protein known to science—over 200 million predictions that would have taken centuries of lab work.

Drug discovery has been immediate beneficiary. Pharmaceutical companies now predict how potential drug molecules will interact with target proteins before expensive lab synthesis. Cancer researchers explore how mutations change protein structures to drive disease. Synthetic biologists design novel proteins for applications from biodegradable plastics to improved vaccines.

The AlphaFold story reveals a broader pattern: GPU-enabled AI is now tackling scientific problems that seemed fundamentally intractable. Climate scientists use GPU-accelerated models to predict extreme weather with unprecedented accuracy. Materials researchers screen millions of candidate compounds for next-generation batteries. Molecular dynamics simulations that took months now run in days, accelerating everything from understanding disease mechanisms to designing more efficient industrial catalysts.

The realization that GPUs were essential for AI training triggered one of the most dramatic supply chain disruptions in technology history. In 2023, Sam Altman publicly stated that OpenAI had delayed GPT-4.5's rollout because they'd literally run out of GPUs. Major tech companies found themselves competing for the same limited supply of high-end data center GPUs, pushing wait times to months and prices far above list.

The economic implications ripple across multiple industries. NVIDIA's market capitalization surged past $3 trillion in 2024, making it one of the world's most valuable companies. The AI GPU market grew to $17.6 billion in 2024 and shows no signs of slowing. Cloud providers are building data centers exclusively for AI workloads, with GPU clusters containing tens of thousands of interconnected GPUs.

This concentration of computing power raises important questions about AI democratization. Training cutting-edge language models requires thousands of high-end GPUs running for weeks, putting them beyond reach of most organizations. The GPU shortage has created a two-tier system where well-funded companies can push AI capabilities forward while researchers and startups struggle to access necessary hardware.

The GPU shortage created a two-tier AI system: well-funded companies push capabilities forward while researchers and startups struggle to access necessary hardware.

The shortage also affected unexpected sectors. Cryptocurrency miners, who'd driven previous GPU demand spikes, competed with AI labs for the same hardware. Gaming PC enthusiasts found high-end GPUs sold out for months. Some researchers explored decentralized approaches to pool existing hardware, but the specialized requirements of AI training made this challenging.

Environmental considerations have emerged as GPUs proliferate. Data centers filled with thousands of power-hungry GPUs consume massive amounts of electricity. However, NVIDIA research suggests that GPU acceleration actually improves energy efficiency compared to CPU-based alternatives—the same AI training task consumes far less total energy when run on GPUs, despite each GPU's higher power draw.

Geopolitical dimensions add another layer of complexity. The concentration of advanced GPU manufacturing in Taiwan and dependence on complex global supply chains creates strategic vulnerabilities that nations are scrambling to address through domestic semiconductor initiatives.

While NVIDIA's CUDA ecosystem has dominated AI computing, alternative architectures are emerging that challenge GPU supremacy. Google's TPUs (Tensor Processing Units) were designed specifically for neural network operations, eschewing graphics compatibility entirely. TPUs achieve higher performance per watt for certain AI workloads by specializing even further than GPUs.

AMD has made significant investments in GPU computing, developing ROCm as an alternative to CUDA. While AMD's software ecosystem lags behind NVIDIA's, their hardware offers competitive performance at potentially lower costs. Some researchers maintain multi-GPU codebases that work across vendors, though CUDA's maturity makes it the path of least resistance.

Intel's entry into discrete GPUs specifically targets the AI market, though they face the challenge of building both competitive hardware and a software stack that can match CUDA's depth. Apple's unified memory architecture in their M-series chips offers a different approach, eliminating traditional CPU-GPU boundaries but with limited support for the CUDA-dependent tools most AI researchers use.

Specialized AI accelerators like NPUs (Neural Processing Units) are appearing in everything from smartphones to laptops, designed for efficient inference rather than training. These accelerators can run trained models locally without cloud connectivity, important for privacy and latency-sensitive applications.

The competition reflects a broader question: Will future AI computing evolve toward even more specialized hardware, or will programmable architectures like GPUs maintain their flexibility advantage? Recent research explores alternatives to matrix multiplication itself, which could fundamentally reshape what hardware AI requires.

Meanwhile, software optimizations continue advancing. Techniques like model quantization let smaller GPUs run models that previously required massive hardware. Efficient attention mechanisms reduce the memory bandwidth bottleneck. These improvements democratize AI by making more capable models accessible on consumer hardware.

CUDA's introduction in 2006 proved that innovative hardware needs equally innovative software. But CUDA was just the beginning—the subsequent evolution of AI frameworks transformed how developers harness GPU power.

Early CUDA programming required developers to manually manage memory transfers, thread synchronization, and kernel optimization. Writing efficient GPU code demanded deep understanding of hardware architecture. This complexity limited GPU computing to specialists willing to master low-level programming.

PyTorch and TensorFlow changed everything by abstracting GPU operations behind high-level Python APIs. Researchers could write network architectures in intuitive code while frameworks automatically compiled operations to optimized GPU kernels. Training a model on GPU versus CPU became as simple as calling .cuda() on a data structure.

These frameworks incorporated years of optimization work. Automated mixed-precision training lets networks use lower-precision arithmetic where possible without sacrificing accuracy. Automatic differentiation handles the complex gradient calculations required for backpropagation. Memory management strategies ensure GPUs process data efficiently.

The software ecosystem extends beyond training. TensorRT optimizes trained models specifically for inference, making deployed models run faster with less memory. Distributed training frameworks coordinate thousands of GPUs to train models too large for any single device. Monitoring tools profile GPU utilization to identify bottlenecks.

The explosive growth of AI demands has exposed limitations even in cutting-edge GPUs. Training frontier models like GPT-4 requires coordinating tens of thousands of GPUs, where communication between devices becomes a bottleneck. Memory capacity constraints force awkward partitioning of large models across multiple GPUs.

Several trends are emerging to address these challenges. Blackwell architecture GPUs integrate multiple chips into single packages with ultra-high-bandwidth interconnects, effectively creating larger logical GPUs. High Bandwidth Memory continues evolving, with HBM3 offering 50% more bandwidth than previous generations.

Specialized interconnect technologies like NVIDIA's NVLink enable GPU-to-GPU communication at speeds approaching memory bandwidth, treating multiple physical GPUs as one massive parallel processor. This disaggregated approach may prove more flexible than building ever-larger monolithic chips.

Alternative computing paradigms are also being explored. Analog computing chips promise to perform neural network operations with dramatically lower power consumption by using physical properties of materials rather than digital logic. Photonic computing could use light instead of electricity for certain operations, potentially achieving even higher speeds.

Quantum computing remains mostly theoretical for AI applications, though some researchers explore quantum algorithms for specific optimization problems. Brain-inspired neuromorphic chips take a radically different approach, implementing spiking neural networks that more closely mimic biological neurons.

More immediately, software innovations may reduce hardware requirements. Efficient sparse matrix operations could accelerate models while using less memory. Better quantization techniques might enable high-quality models on less powerful hardware. Algorithmic improvements could reduce the computational requirements for achieving specific capabilities.

The GPU revolution has created a stark divide in AI capabilities. Organizations with access to massive GPU clusters can train models that others can barely run, much less train from scratch. This concentration of computing power has important implications for who shapes AI's future.

Some researchers argue the field needs to focus more on efficient model architectures that achieve good performance without enormous GPU requirements. Techniques like model compression can reduce trained model sizes by 90% with minimal accuracy loss. Knowledge distillation lets small models learn from large ones, potentially democratizing access to capabilities.

Cloud GPU rental services have made some high-end hardware more accessible, though costs remain substantial for serious training workloads. Some universities and research institutions provide GPU access to students and researchers, but demand typically exceeds supply.

Open-source frameworks and pretrained models help level the playing field. Rather than training from scratch, researchers can fine-tune existing models for specific tasks—a process requiring far fewer GPU-hours than original training. However, this approach means most researchers build on foundations laid by well-resourced organizations.

The broader question is whether AI development will remain concentrated among entities that can afford massive GPU infrastructure, or whether innovations in algorithms and efficiency will enable wider participation. The answer will significantly shape which problems AI addresses and whose perspectives influence its development.

The GPU's journey from gaming peripheral to AI workhorse offers several insights about technological evolution. First, breakthrough applications often emerge from unexpected places—no one designing GPUs for graphics anticipated they'd revolutionize artificial intelligence. The key was creating programmable, powerful hardware that creative minds could repurpose.

Second, software ecosystems matter as much as hardware. CUDA's introduction was arguably more important than any specific GPU advancement, as it made the hardware accessible to researchers who weren't low-level programming experts. The subsequent emergence of high-level frameworks like PyTorch completed the democratization.

Third, the specialization-versatility tradeoff shapes computing architecture. CPUs remain essential for the vast majority of computing tasks that don't involve massive parallelism. GPUs excel at specific workloads but would be terrible at running an operating system. Future architectures will likely become even more specialized as we identify common computational patterns worth optimizing.

Finally, hardware and algorithms co-evolve. Modern neural network architectures are designed with GPU capabilities in mind—attention mechanisms, transformer blocks, and convolutions all map efficiently to GPU execution. Future algorithms will adapt to whatever computational resources become available.

The transformation of GPUs from graphics engines to AI accelerators represents a fundamental shift in how we think about computation. For decades, computing progress followed CPU improvements—faster clock speeds, more sophisticated architectures, better branch prediction. Moore's Law shaped our expectations.

The GPU revolution demonstrated that some of computing's most important problems require different approaches. Massive parallelism, specialized architectures, and application-specific optimization can deliver improvements that general-purpose hardware cannot match. This realization is reshaping the entire semiconductor industry.

We're entering an era of heterogeneous computing where systems incorporate multiple specialized processors—traditional CPUs for sequential tasks, GPUs for parallel workloads, NPUs for efficient inference, and potentially other accelerators for specific domains. Software will need to intelligently distribute work across these resources.

The implications extend beyond technology. GPU-accelerated AI is already transforming drug discovery, climate modeling, materials science, and countless other fields. As these computational capabilities continue advancing, they'll enable scientific breakthroughs we can barely imagine. The protein folding problem seemed intractable until AlphaFold solved it using GPU-trained models—what other "impossible" problems will fall to similar approaches?

At the same time, the concentration of GPU computing power raises questions about equity and access that the technology community needs to address. The most powerful AI systems shouldn't be the exclusive domain of wealthy organizations. Finding ways to democratize access to advanced computing resources while encouraging open research will be crucial.

The GPU story is ultimately about recognizing potential in unexpected places and building the software infrastructure to unlock it. Those gaming chips rendering explosions and aliens contained the computing architecture our future needed—we just had to figure out how to harness it. What other specialized hardware sitting in labs or living rooms might hold similar untapped potential? The next computing revolution may already be here, waiting for someone to see it differently.

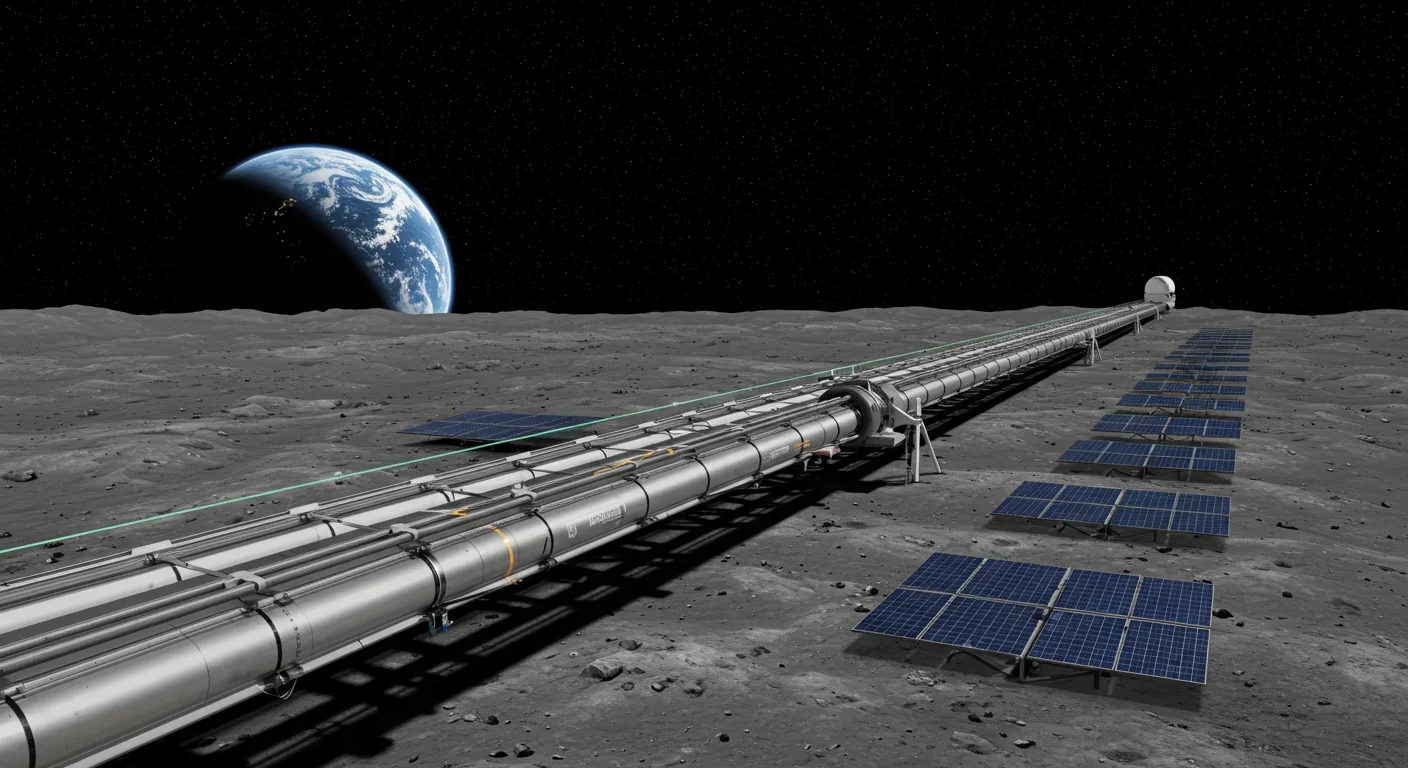

Lunar mass drivers—electromagnetic catapults that launch cargo from the Moon without fuel—could slash space transportation costs from thousands to under $100 per kilogram. This technology would enable affordable space construction, fuel depots, and deep space missions using lunar materials, potentially operational by the 2040s.

Ancient microorganisms called archaea inhabit your gut and perform unique metabolic functions that bacteria cannot, including methane production that enhances nutrient extraction. These primordial partners may influence longevity and offer new therapeutic targets.

CAES stores excess renewable energy by compressing air in underground caverns, then releases it through turbines during peak demand. New advanced adiabatic systems achieve 70%+ efficiency, making this decades-old technology suddenly competitive for long-duration grid storage.

Human children evolved to be raised by multiple caregivers—grandparents, siblings, and community members—not just two parents. Research shows alloparenting reduces parental burnout, improves child development, and is the biological norm across cultures.

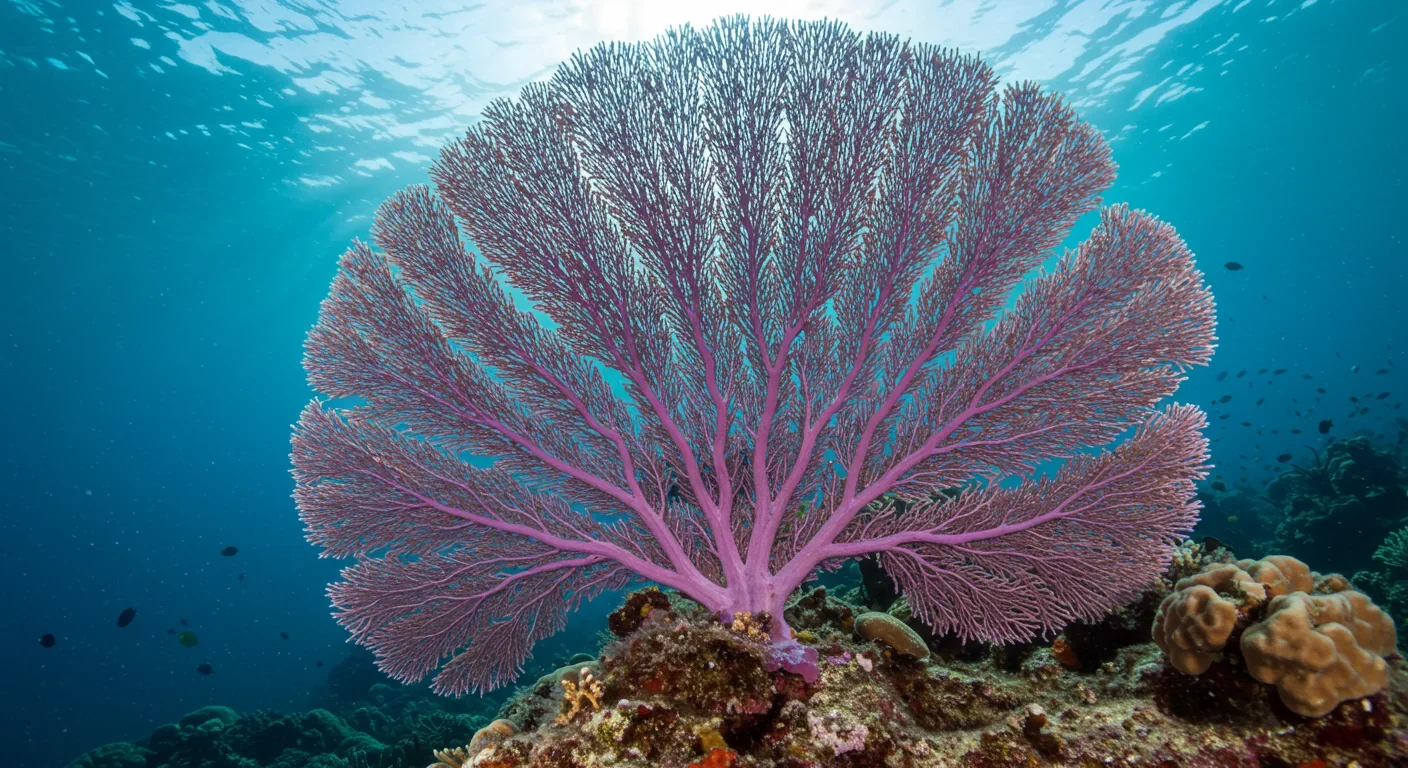

Soft corals have weaponized their symbiotic algae to produce potent chemical defenses, creating compounds with revolutionary pharmaceutical potential while reshaping our understanding of marine ecosystems facing climate change.

Generation Z is the first cohort to come of age amid a polycrisis - interconnected global failures spanning climate, economy, democracy, and health. This cascading reality is fundamentally reshaping how young people think, plan their lives, and organize for change.

Zero-trust security eliminates implicit network trust by requiring continuous verification of every access request. Organizations are rapidly adopting this architecture to address cloud computing, remote work, and sophisticated threats that rendered perimeter defenses obsolete.