How Transformers Replaced Word2Vec in NLP

TL;DR: Modern GPUs outperform CPUs in AI and parallel computing by deploying thousands of simple cores instead of a few complex ones, revolutionizing how we approach computationally intensive problems from deep learning to scientific simulation.

The future of computing doesn't belong to the strongest processors—it belongs to the ones that work together. While traditional CPUs have spent decades getting faster and smarter, a quiet revolution has been unfolding in the silicon trenches. Graphics Processing Units, once relegated to rendering video game explosions, now power everything from artificial intelligence to climate modeling. Their secret? Thousands of "dumb" cores that accomplish what a few brilliant ones cannot. This counterintuitive approach is reshaping how we solve humanity's most complex problems, and understanding why it works reveals fundamental truths about computation itself.

Modern CPUs are marvels of sequential processing, with each core designed to handle complex instructions independently. A high-end CPU might have 16 to 64 cores, each optimized for low-latency execution and flexible task switching. They excel at running your operating system, managing databases, and handling the unpredictable flow of everyday computing.

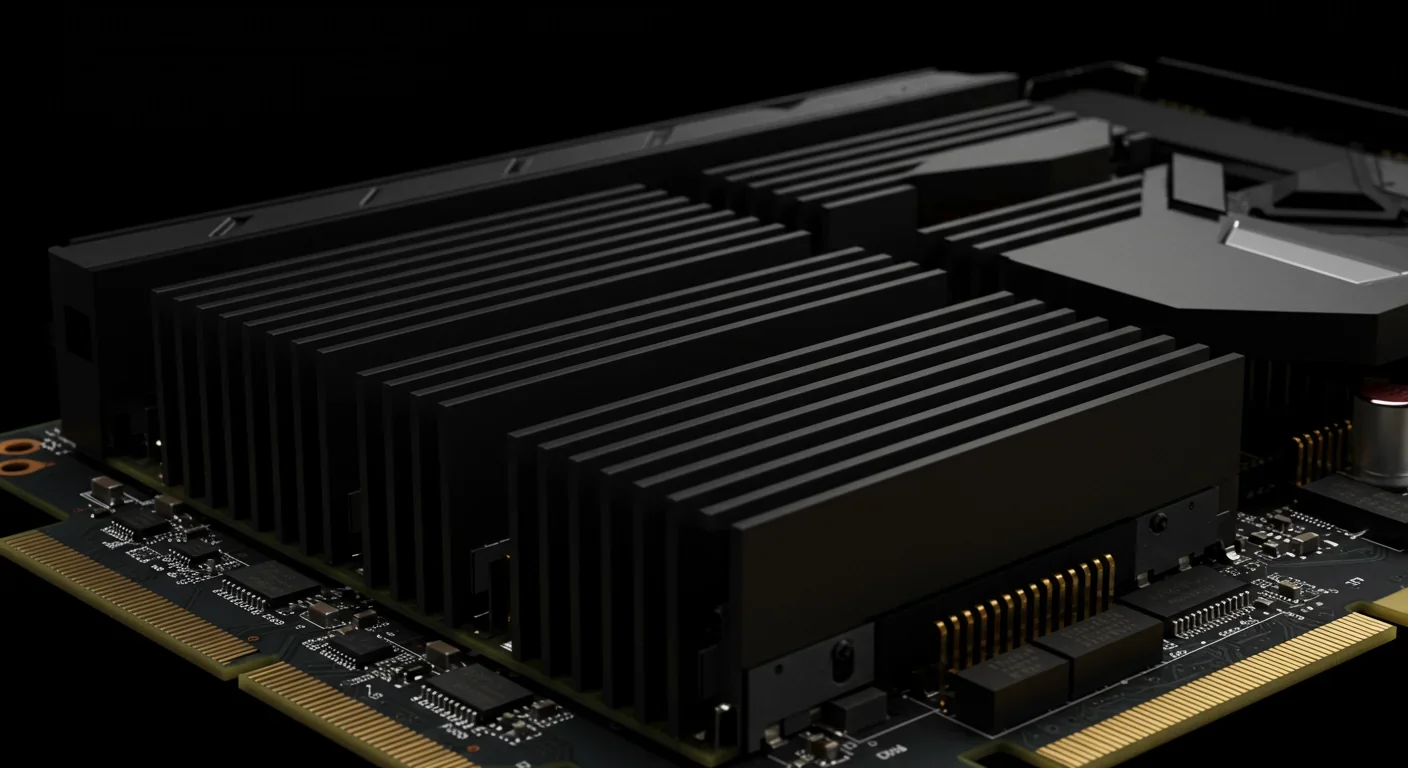

GPUs take a radically different approach. NVIDIA's latest datacenter chips pack over 18,000 CUDA cores into a single processor. AMD's competing architectures offer similar counts. Each individual core is simpler and slower than a CPU core, lacking the sophisticated branch prediction and out-of-order execution that make CPUs so versatile. But what they lack in individual capability, they compensate for through sheer numbers.

The key architectural difference lies in how these processors organize work. CPUs are designed for task parallelism—running different programs simultaneously, each doing different things. GPUs implement data parallelism—running the same operation on massive datasets simultaneously. This distinction matters enormously when you're training a neural network that needs to multiply millions of matrices or rendering a scene that requires calculating the color of millions of pixels.
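As a rough illustration of the data-parallel model, the sketch below applies one small function (a "kernel") once per element, the way a GPU assigns one thread to each element. It is plain Python running sequentially, so it shows the programming model rather than real GPU execution; the names `saxpy_kernel` and `launch` are made up for this example.

```python
# Data-parallel model in miniature: one tiny "kernel" runs once per element.
# On a GPU each call would be a separate thread; here a loop stands in for
# the hardware, so this illustrates the model, not the speed.

def saxpy_kernel(i, a, x, y, out):
    """Work done by hypothetical thread i: out[i] = a * x[i] + y[i]."""
    out[i] = a * x[i] + y[i]

def launch(kernel, n, *args):
    """Stand-in for a GPU launch: run the kernel for thread ids 0..n-1.

    No call reads another call's output, so the order is irrelevant.
    """
    for i in range(n):
        kernel(i, *args)

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)
launch(saxpy_kernel, len(x), 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0]
```

Because every index is handled by the same instructions on different data, the work can be spread across as many cores as the hardware offers.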

Think of it like building construction. A CPU is a master carpenter who can handle any specialized task. A GPU is a crew of thousands of workers, each knowing only how to hammer nails, but capable of framing an entire building in the time it takes the master carpenter to complete a single wall.

The mathematical framing for this advantage comes from Amdahl's Law, which quantifies the speedup you can achieve by parallelizing a computation. If 95% of your task can run in parallel, the theoretical speedup caps at 20x even with infinite processors, because the remaining 5% still runs serially. GPUs pay off because modern workloads like deep learning and scientific simulation have far higher parallel fractions: when 99% or more of the computation can happen simultaneously, the ceiling rises to 100x and beyond.
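Amdahl's Law can be written as speedup = 1 / ((1 - p) + p / n), where p is the parallel fraction and n the number of processors. The following minimal sketch evaluates it for a few parallel fractions; the function name and the core counts are chosen only for illustration.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Amdahl's Law: speedup = 1 / ((1 - p) + p / n)."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)

for p in (0.95, 0.99, 0.999):
    limit = 1.0 / (1.0 - p)  # ceiling as the processor count grows without bound
    print(f"p={p}: 1,000 cores -> {amdahl_speedup(p, 1000):.1f}x, "
          f"10,000 cores -> {amdahl_speedup(p, 10000):.1f}x, "
          f"limit -> {limit:.0f}x")
```

The serial fraction sets the ceiling: at p = 0.95 no number of cores gets past 20x, while at p = 0.99 the limit is 100x.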

Consider training a large language model. The process involves computing gradients for billions of parameters across millions of training examples. Within a batch, each example's gradient contribution can be computed independently, making the work well suited to parallel execution. Reported GPU speedups over CPUs for these workloads typically fall in the 10-100x range, with some neural network training tasks seeing 300x improvements.

Graphics rendering, the original GPU application, demonstrates similar benefits. Calculating the color of pixel 1,000,000 doesn't depend on knowing the color of pixel 1. When you have millions of independent calculations, having thousands of processors working simultaneously creates massive throughput gains that no amount of individual core optimization can match.
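The independence of pixels can be checked directly. In this sketch, a hypothetical `shade` function computes a pixel's color from its coordinates alone, and the rows are rendered once in order and once by a pool of worker threads. The image size and gradient formula are invented for the example, and Python threads are used only to show that the result does not depend on execution order, not to demonstrate a speedup.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # tiny hypothetical image

def shade(x, y):
    """Color of one pixel from its coordinates alone (a simple gradient)."""
    return (x * 255 // (WIDTH - 1), y * 255 // (HEIGHT - 1), 128)

def render_row(y):
    return [shade(x, y) for x in range(WIDTH)]

# Sequential reference: one row after another.
sequential = [render_row(y) for y in range(HEIGHT)]

# Same rows handed to a pool of workers; map() returns them in row order
# even though the workers may finish in any order.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(render_row, range(HEIGHT)))

print(parallel == sequential)  # True
```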

The memory architecture reinforces these advantages. Modern GPUs feature high-bandwidth memory (HBM) with transfer rates exceeding 3 TB/s, roughly 10x the bandwidth of typical CPU memory. This bandwidth feeds the thousands of cores with data fast enough to keep them productive. GPUs also implement memory hierarchies with on-chip shared memory that lets related threads exchange data efficiently without routing through the slower main memory.
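A back-of-the-envelope calculation shows what the bandwidth gap means in practice. The sketch below uses the roughly 3 TB/s figure from the text and takes CPU memory as one tenth of that; the 40 GB working set is an arbitrary illustrative size, and real transfers rarely reach peak bandwidth.

```python
def stream_time_ms(num_bytes, bandwidth_bytes_per_s):
    """Time to read num_bytes once at the given peak bandwidth, in ms."""
    return num_bytes / bandwidth_bytes_per_s * 1000.0

GB = 10**9
TB = 10**12

weights = 40 * GB            # illustrative working set (assumption)
gpu_hbm = 3 * TB             # ~3 TB/s, the figure quoted above
cpu_dram = gpu_hbm / 10      # "roughly 10x" lower, per the text

print(f"GPU HBM : {stream_time_ms(weights, gpu_hbm):.1f} ms per pass")
print(f"CPU DRAM: {stream_time_ms(weights, cpu_dram):.1f} ms per pass")
```

Under these assumptions a single pass over the data takes about 13 ms from HBM and about 133 ms from CPU memory, which is why bandwidth, not just core count, decides whether the cores stay busy.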

GPUs weren't always general-purpose computing devices. For decades, they existed solely to accelerate graphics rendering—calculating triangles, applying textures, and implementing lighting models. The transformation began in the early 2000s when researchers realized that the same parallel architecture useful for graphics could tackle scientific computing problems.

Early general-purpose GPU (GPGPU) programming was painful. Researchers had to disguise their calculations as graphics operations, pretending their matrix multiplication was actually a pixel shader. Everything changed in 2006 when NVIDIA introduced CUDA—a programming framework that let developers write parallel code directly, without graphics pretense. Suddenly, anyone with C programming skills could harness thousands of cores.

CUDA's arrival coincided with the deep learning revolution. Neural networks require exactly the kind of massive matrix operations that GPUs excel at. In 2012, when Alex Krizhevsky used CUDA-enabled GPUs to train AlexNet—a deep neural network that dramatically outperformed previous image recognition systems—he demonstrated that deep learning's computational demands perfectly matched GPU capabilities. This wasn't coincidence; it was architectural destiny.

The ecosystem expanded rapidly. OpenCL, originated by Apple and maintained by the Khronos Group, emerged as an open-standard alternative to CUDA, and AMD backed it for its own GPUs. Software frameworks like TensorFlow and PyTorch built GPU acceleration into their cores. Today, training a state-of-the-art language model without GPUs is essentially impossible: the time required would stretch from months to decades.

NVIDIA's 2017 Volta architecture introduced Tensor Cores, specialized units designed specifically for the mixed-precision matrix operations common in AI. Each Tensor Core can perform 64 fused multiply-add operations per clock cycle on small matrix tiles, where a traditional CUDA core performs one. The Ampere architecture followed in 2020 with third-generation Tensor Cores and structured sparsity support, essentially allowing neural networks to skip unnecessary calculations. Each generation pushes the boundaries of what parallel architectures can achieve.
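One way to see where a figure like 64 comes from: multiplying two 4x4 matrices and adding a third takes 4 x 4 x 4 = 64 multiply-adds. The sketch below counts them. It assumes the 4x4 tile size described in NVIDIA's Volta materials, rounds the inputs to half precision with the standard library's `struct` module, and accumulates in ordinary Python floats as a stand-in for FP32. It is an emulation of the arithmetic, not of the hardware.

```python
import struct

def to_fp16(value):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", value))[0]

def mma_4x4(a, b, c):
    """D = A x B + C on 4x4 matrices, counting multiply-adds.

    Inputs A and B are rounded to FP16; the running sum is kept in a
    Python float (standing in for a higher-precision accumulator).
    """
    fma_count = 0
    d = [[0.0] * 4 for _ in range(4)]
    for i in range(4):
        for j in range(4):
            acc = c[i][j]
            for k in range(4):
                acc += to_fp16(a[i][k]) * to_fp16(b[k][j])
                fma_count += 1
            d[i][j] = acc
    return d, fma_count

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
a = [[0.1 * (i + j) for j in range(4)] for i in range(4)]
zeros = [[0.0] * 4 for _ in range(4)]

d, fmas = mma_4x4(a, identity, zeros)
print(fmas)  # 64
```

Multiplying by the identity returns the FP16-rounded copy of `a`, which makes the small precision loss of the half-precision inputs visible when compared with the original values.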

Raw performance numbers reveal the magnitude of GPU advantages in appropriate workloads. For training deep neural networks, modern GPUs deliver 15-30 teraFLOPS (trillion floating-point operations per second) for standard precision work, with specialized Tensor Cores pushing into the hundreds of teraFLOPS for mixed-precision AI operations. High-end server CPUs, by comparison, typically achieve 1-3 teraFLOPS.

Energy efficiency matters as much as raw speed. Analysis shows that GPUs deliver 5-10x better performance per watt for parallel workloads. When you're running a datacenter with thousands of processors, this efficiency translates directly to reduced electricity costs and cooling requirements. Training GPT-3 reportedly consumed 1,287 MWh of electricity; doing the same work on CPUs would have required 10-20x more energy.
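The scale of that difference is easier to grasp as absolute numbers. This short calculation simply multiplies the reported GPT-3 figure by the 10-20x range given in the text; both inputs come from the paragraph above and are estimates, not measurements.

```python
GPT3_TRAINING_MWH = 1287           # figure reported in the text
CPU_ENERGY_MULTIPLIERS = (10, 20)  # "10-20x more energy", per the text

low, high = (GPT3_TRAINING_MWH * m for m in CPU_ENERGY_MULTIPLIERS)
print(f"CPU-only estimate: {low:,} to {high:,} MWh")
# CPU-only estimate: 12,870 to 25,740 MWh
```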

But GPUs aren't universally superior. CPUs maintain massive advantages for sequential operations, complex branching logic, and tasks requiring frequent interaction with operating system services. Running a database transaction processor or web server on a GPU would be absurdly inefficient. The CPU's sophisticated branch prediction, larger caches, and flexible instruction scheduling excel when the code doesn't follow predictable parallel patterns.

Context switching reveals another GPU limitation. CPUs can switch between different tasks in microseconds, making them ideal for multitasking environments. GPU context switches take milliseconds—a thousand times longer. This is why your computer uses the CPU to manage the user interface, file system, and dozens of background processes, while delegating specific compute-intensive tasks to the GPU.

Memory capacity presents practical constraints too. While GPUs feature extremely fast memory, they typically offer far less of it. A high-end GPU might have 80 GB of memory, while server CPUs can address terabytes through standard DRAM. For workloads requiring enormous datasets that don't fit in GPU memory, the constant shuttling of data between CPU and GPU memory spaces can eliminate performance advantages.
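A simple cost model makes the data-shuttling penalty concrete: the GPU's end-to-end time is the transfer time plus the accelerated compute time. All numbers below are assumptions chosen for illustration, including the 32 GB/s host-to-device link and the 50x compute speedup; the function name is hypothetical.

```python
def offload_speedup(cpu_seconds, gpu_compute_speedup, bytes_moved, link_bytes_per_s):
    """End-to-end speedup when data must cross the CPU-GPU link first.

    total GPU time = transfer time + (CPU time / compute speedup)
    """
    transfer = bytes_moved / link_bytes_per_s
    gpu_total = transfer + cpu_seconds / gpu_compute_speedup
    return cpu_seconds / gpu_total

GB = 10**9
LINK = 32 * GB  # assumed host-to-device bandwidth in bytes/s (illustrative)

# Heavy compute on little data: the transfer barely matters.
heavy = offload_speedup(cpu_seconds=100.0, gpu_compute_speedup=50,
                        bytes_moved=1 * GB, link_bytes_per_s=LINK)

# Light compute on lots of data: the transfer dominates.
light = offload_speedup(cpu_seconds=2.0, gpu_compute_speedup=50,
                        bytes_moved=200 * GB, link_bytes_per_s=LINK)

print(f"compute-heavy job: {heavy:.1f}x")
print(f"data-heavy job:    {light:.2f}x")
```

With these inputs the compute-heavy job keeps nearly all of its 50x advantage, while the data-heavy job ends up slower than staying on the CPU.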

The industry consensus points toward heterogeneous computing systems that combine CPUs and GPUs, each handling tasks suited to their architecture. Modern approaches coordinate both processor types intelligently, routing sequential control logic to CPUs while dispatching parallel number-crunching to GPUs.

Apple's M-series chips exemplify this trend, integrating CPU cores, GPU cores, and specialized neural processing units on a single chip with unified memory architecture. This allows different processor types to access the same data without copying it between separate memory spaces—eliminating a major performance bottleneck.

AMD's ROCm and NVIDIA's CUDA continue evolving to support increasingly sophisticated heterogeneous programming models. Frameworks now automatically partition workloads across available processors, dynamically adjusting the distribution based on current loads. Research demonstrates that optimal performance comes from treating CPUs and GPUs as collaborative partners rather than competitors.

The rise of specialized AI accelerators adds another dimension to heterogeneous computing. Google's TPUs (Tensor Processing Units), Amazon's Trainium, and similar custom silicon push specialization further—sacrificing general-purpose flexibility for even greater efficiency at specific neural network operations. These accelerators complement rather than replace GPUs, handling particular model types while GPUs remain the flexible option for diverse AI workloads.

Edge computing devices increasingly incorporate GPU-like parallel processing capabilities. Smartphones now feature neural processing units that apply the same parallel architecture principles at a smaller scale. Security cameras, autonomous vehicles, and IoT devices embed specialized parallel processors to handle real-time AI inference locally, rather than sending data to cloud datacenters.

Understanding GPU architecture reveals a broader lesson about problem-solving. Sometimes, overwhelming a challenge with abundant, coordinated simplicity beats refined individual excellence. This principle applies beyond silicon—in distributed computing, where thousands of commodity servers outperform supercomputers; in collective intelligence, where crowd wisdom surpasses individual expertise; in biological systems, where ant colonies accomplish engineering feats no individual ant could conceive.

The GPU revolution succeeded not by making individual processors faster, but by recognizing that many problems have natural parallel structure waiting to be exploited. Matrix multiplication, image processing, physics simulation, cryptographic operations, and countless other computations can be decomposed into independent subtasks. Matching the architecture to the problem structure produces orders-of-magnitude performance improvements that raw clock speed increases could never achieve.

Programmers adapted their thinking too. Writing effective GPU code requires abandoning sequential thinking in favor of parallel patterns. Instead of asking "what happens next?", developers must think "what can happen simultaneously?" This mental shift has influenced modern software architecture broadly, even on CPU-only systems, leading to better utilization of multicore processors.
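Summing a list is a small example of that shift. The sequential version carries a running total, so each step waits for the previous one. The tree version adds independent pairs in rounds, and a parallel machine could execute each round in a single step. This is a sketch of the pattern in plain Python, not GPU code, and the function names are invented here.

```python
def sequential_sum(values):
    """'What happens next?': each step depends on the previous total."""
    total = 0
    steps = 0
    for v in values:
        total += v
        steps += 1
    return total, steps

def tree_sum(values):
    """'What can happen simultaneously?': add pairs in rounds.

    Every addition within a round is independent, so a parallel machine
    could do a whole round at once. Returns (sum, number of rounds).
    """
    level = list(values)
    rounds = 0
    while len(level) > 1:
        pairs = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:          # odd element carries over unchanged
            pairs.append(level[-1])
        level = pairs
        rounds += 1
    return (level[0] if level else 0), rounds

data = list(range(1, 1025))         # 1..1024
print(sequential_sum(data))         # (524800, 1024)
print(tree_sum(data))               # (524800, 10)
```

Both versions do the same number of additions in total; the difference is that the dependent chain shrinks from 1,024 steps to 10 rounds.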

The accessibility of parallel computing has democratized advanced research. A decade ago, training sophisticated neural networks required supercomputer access. Today, researchers can rent powerful GPU instances for dollars per hour through cloud providers. Hobbyists experiment with AI models on gaming GPUs. This accessibility accelerates innovation by lowering barriers to entry.

Processor evolution continues pushing in multiple directions simultaneously. CPUs keep adding cores while improving single-thread performance. GPUs pack in more cores while adding specialized units for emerging workloads. The boundaries blur as CPU vendors add vector processing capabilities and GPU makers implement more sophisticated control logic.

Quantum computing represents a fundamentally different parallel processing paradigm, though practical quantum advantages remain limited to specific problem types. Photonic computing explores using light instead of electricity for certain parallel operations. Neuromorphic chips mimic brain architecture to achieve extreme energy efficiency for neural network inference. Each approach seeks to match hardware architecture to problem structure more precisely.

Software frameworks will increasingly abstract these details, automatically distributing work across whatever computing resources are available. Developers will specify what they want computed rather than how to compute it, letting sophisticated compilers and runtime systems determine optimal processor allocation. This abstraction lowers expertise barriers while maximizing hardware utilization.

The economic implications are profound. AI development costs correlate directly with available computing power. Enterprises investing billions in datacenter GPUs gain competitive advantages in developing and deploying AI systems. Nations recognizing this dynamic are treating GPU capacity as strategic infrastructure, comparable to traditional industrial capabilities. The geopolitics of semiconductor manufacturing and GPU availability increasingly shape technological leadership.

Environmental considerations will constrain computing growth. Datacenters already consume several percent of global electricity production. Even with GPUs' superior efficiency for parallel workloads, the scale of AI training pushes total energy consumption upward. Future architectures must deliver not just faster computation, but dramatically more efficient computation. Innovations in low-precision arithmetic, sparsity exploitation, and algorithmic efficiency will matter as much as transistor counts.

The parallel computing revolution that GPUs pioneered is far from complete. As we tackle increasingly ambitious computational challenges—from simulating protein folding to modeling climate systems to training trillion-parameter AI models—the architectural lessons of embracing parallelism, matching hardware to workload characteristics, and coordinating abundant simple processors will guide computing's evolution. The story of GPU ascendance isn't just about faster chips; it's about recognizing that some problems yield not to individual brilliance, but to coordinated multitudes working in perfect parallel harmony.

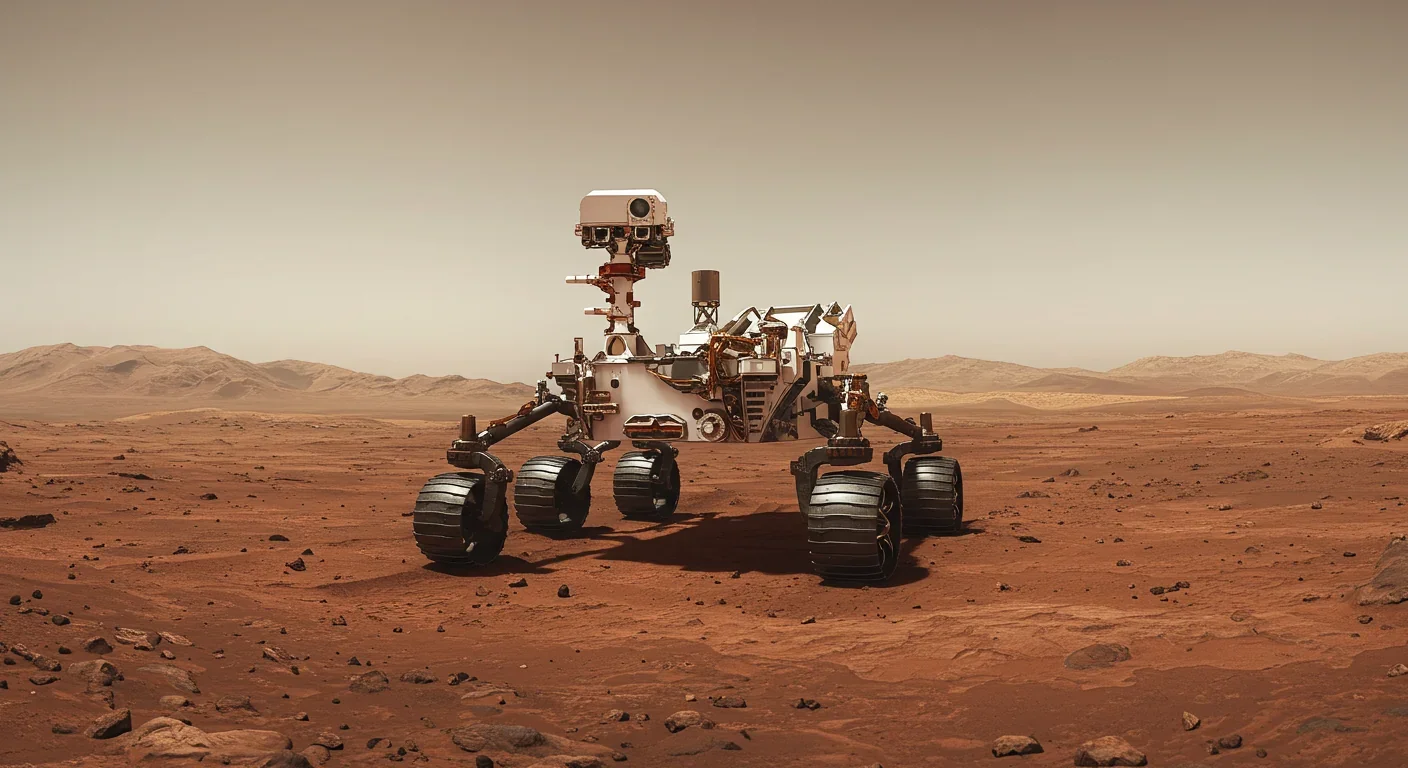
