Ray Tracing Hardware Revolution: Photorealistic Lighting in Real Time

TL;DR: Dedicated ray tracing hardware in modern GPUs transformed photorealistic lighting from a Hollywood studio luxury into real-time gaming reality, combining specialized silicon with AI denoising to simulate physically accurate light behavior at playable frame rates.

For decades, the lighting in your favorite video games was a carefully crafted illusion. Artists painstakingly placed invisible light sources, baked shadows into textures, and used clever tricks to make flat surfaces appear reflective. It worked, but it was smoke and mirrors—nothing like how light actually behaves in the real world.

Then in 2018, something fundamental shifted. NVIDIA launched the GeForce RTX 20 series, the first consumer graphics cards with dedicated silicon designed to trace rays of light through virtual worlds. Instead of faking reflections and shadows, games could simulate how light actually travels, fast enough for real-time interaction.

This wasn't just an incremental upgrade. It was the moment photorealistic rendering moved from Hollywood studios spending 60-160 hours per frame to gamers getting 60 frames per second. It is the breakthrough that lets your character's reflection in a puddle update in real time as you move, with the correct distortion, color bleeding, and light bounce that make it look genuinely real.

Walk outside and look at anything reflective—a car window, a storefront, water. Your brain instantly processes an impossibly complex light simulation. Photons from the sun bounce off countless surfaces, some absorbed, some reflected, eventually reaching your eyes with all their accumulated color and intensity information intact.

Traditional rasterization, the technique that powered graphics for forty years, doesn't simulate this. Instead, it converts 3D polygons into 2D pixels by projecting them onto your screen, then shades each pixel with textures and approximate lighting models. Reflections? Those are usually pre-rendered images mapped onto surfaces. Shadows? Calculated by rendering the scene again from each light's perspective. It's fast because it's fake.

Ray tracing inverts the entire process. It shoots virtual light rays from the camera through each pixel, tracks them as they bounce around the scene, and calculates what color each pixel should be based on what those rays actually hit. When implemented properly, you get perfect reflections, accurate shadows, realistic light bleeding between surfaces, and caustics—those dancing patterns light makes through glass or water.

But there's a brutal computational cost. A single 4K frame contains over 8 million pixels. Each pixel might need hundreds or thousands of rays to produce a noise-free image. That's billions of calculations per frame, and you need to do it 60 times per second for smooth gameplay.
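To make that cost concrete, here is a back-of-envelope sketch of the ray budget. It counts only primary rays and ignores bounces, so real workloads are heavier; the sample counts are illustrative assumptions, not measurements.

```python
# Back-of-envelope ray budget for one 4K frame (illustrative numbers only).
WIDTH, HEIGHT = 3840, 2160   # 4K UHD resolution
FPS = 60                     # target frame rate from the text

def rays_per_second(samples_per_pixel: int, fps: int = FPS) -> int:
    """Primary rays needed per second, ignoring every bounce."""
    return WIDTH * HEIGHT * samples_per_pixel * fps

pixels = WIDTH * HEIGHT      # 8,294,400 pixels per frame
for spp in (1, 100, 1000):
    per_frame = pixels * spp
    print(f"{spp:>5} rays/pixel: {per_frame:,} rays/frame, "
          f"{rays_per_second(spp):,} rays/s")
```

Even a single ray per pixel works out to roughly half a billion rays per second at 60 FPS, which is why brute force was never an option.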

Until 2018, this was out of reach for consumer hardware. Even Pixar reportedly needed 60-160 hours to render a single frame of Toy Story 4 (released in 2019) using path tracing, ray tracing's more sophisticated cousin. Professional rendering farms with 500 servers consumed 550,000 watts just to handle the workload for film production.

Something had to change at the hardware level.

The breakthrough came from adding specialized silicon that does one thing extraordinarily well: determining whether a ray of light intersects with geometry in a 3D scene.

Inside every modern NVIDIA RTX, AMD RDNA 2/3, and Intel Arc GPU sits dedicated ray tracing hardware—RT Cores in NVIDIA's case, Ray Accelerators for AMD. These aren't general-purpose processors. They're fixed-function units optimized for the two operations that consume most of ray tracing's processing time: traversing acceleration structures and testing for intersections.

Here's how it works. Before any rays get traced, the GPU builds a data structure called a Bounding Volume Hierarchy (BVH)—essentially a tree where each node contains a simple bounding box around a group of objects. When a ray enters the scene, instead of testing it against every single triangle (modern games can have billions), the RT core walks down this tree, quickly eliminating entire branches that the ray couldn't possibly hit.
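The idea can be sketched in software. The following is a minimal illustration, not how any vendor's RT core is implemented: it uses axis-aligned boxes as stand-ins for triangles, a simple median split to build the tree, and hypothetical names (`AABB`, `Node`, `build`, `traverse`) chosen for this example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]

def safe_inverse(d: float) -> float:
    """1/d, with a huge finite stand-in when the ray is parallel to an axis."""
    return 1.0 / d if abs(d) > 1e-12 else 1e30

@dataclass
class AABB:
    lo: Vec3
    hi: Vec3

    def union(self, other: "AABB") -> "AABB":
        return AABB(tuple(map(min, self.lo, other.lo)),
                    tuple(map(max, self.hi, other.hi)))

    def hit(self, origin: Vec3, inv_dir: Vec3) -> bool:
        """Slab test: does the ray cross this box at some t >= 0?"""
        t_near, t_far = 0.0, float("inf")
        for a in range(3):
            t0 = (self.lo[a] - origin[a]) * inv_dir[a]
            t1 = (self.hi[a] - origin[a]) * inv_dir[a]
            t_near = max(t_near, min(t0, t1))
            t_far = min(t_far, max(t0, t1))
        return t_near <= t_far

@dataclass
class Node:
    box: AABB
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prims: Optional[List[int]] = None  # set only on leaves

def build(boxes: List[AABB], ids: List[int], leaf_size: int = 2) -> Node:
    """Median split along the longest axis (simple, not an optimal strategy)."""
    box = boxes[ids[0]]
    for i in ids[1:]:
        box = box.union(boxes[i])
    if len(ids) <= leaf_size:
        return Node(box, prims=ids)
    axis = max(range(3), key=lambda a: box.hi[a] - box.lo[a])
    ids = sorted(ids, key=lambda i: boxes[i].lo[axis] + boxes[i].hi[axis])
    mid = len(ids) // 2
    return Node(box, build(boxes, ids[:mid], leaf_size),
                build(boxes, ids[mid:], leaf_size))

def traverse(node: Node, boxes: List[AABB], origin: Vec3, inv_dir: Vec3,
             stats: Dict[str, int]) -> List[int]:
    stats["box_tests"] += 1
    if not node.box.hit(origin, inv_dir):
        return []  # the whole subtree is rejected with a single test
    if node.prims is not None:
        found = []
        for i in node.prims:
            stats["prim_tests"] += 1
            if boxes[i].hit(origin, inv_dir):
                found.append(i)
        return found
    return (traverse(node.left, boxes, origin, inv_dir, stats)
            + traverse(node.right, boxes, origin, inv_dir, stats))

# 1,024 unit boxes in a row along x, standing in for triangles.
scene = [AABB((2.0 * i, 0.0, 0.0), (2.0 * i + 1.0, 1.0, 1.0)) for i in range(1024)]
root = build(scene, list(range(len(scene))))

origin = (100.5, 0.5, -5.0)
direction = (0.0, 0.0, 1.0)
inv_dir = tuple(safe_inverse(d) for d in direction)
stats = {"box_tests": 0, "prim_tests": 0}
hits = traverse(root, scene, origin, inv_dir, stats)
print(hits, stats)
```

In this toy scene the ray finds its one hit after 19 bounding-box tests and 2 primitive tests, where a brute-force loop would have tested all 1,024 primitives.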

This hierarchical rejection is far faster than brute-force testing, because the number of box tests grows roughly with the depth of the tree rather than with the number of triangles. But the real magic is that RT cores do this work independently of the shader cores, which stay free for rasterization, shading, and compute work. That parallel processing architecture is what makes the performance leap possible.

"RT cores in modern GPUs accelerate these calculations by 10-25x compared to traditional compute units."

— Ofzen and Computing, Complete Comparison Guide

The numbers tell the story, and the benefit extends beyond graphics. For collision detection tasks, researchers have reported speedups of 2.5x to nearly 7x by leveraging the same BVH traversal hardware.

AMD took a slightly different approach with its RDNA 2 architecture in 2020. Instead of fully dedicated RT cores, AMD integrated Ray Accelerators directly into each compute unit. These handle ray-box and ray-triangle intersection tests, while BVH traversal itself runs on the regular shader hardware and shares resources with traditional compute tasks. It's more flexible but generally offers less raw ray tracing performance than NVIDIA's dedicated approach.

Intel's Arc GPUs, despite being first-generation products launched in 2022, included RT units from day one. They recognized ray tracing wasn't optional—it was the future of graphics.

Even with dedicated hardware, ray tracing isn't free. The performance impact depends heavily on how aggressively developers use it.

Enabling ray tracing typically reduces frame rates by roughly 20% to 65% compared to pure rasterization, depending on the quality settings. A game running at 80 FPS with rasterization might drop to 62 FPS with medium ray tracing (about 22% lower), or 38 FPS with ultra settings (about 52% lower). With full ray-traced shadows and reflections enabled, frame rates can more than halve, from 60+ FPS to under 30 FPS.
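Frame rates hide how much time ray tracing actually adds to each frame. This small sketch takes the 80, 62, and 38 FPS example figures from the paragraph above and converts them into frame times; the function names are just for illustration.

```python
def frame_time_ms(fps: float) -> float:
    """Time budget for a single frame, in milliseconds."""
    return 1000.0 / fps

def fps_drop_percent(base_fps: float, rt_fps: float) -> float:
    """Relative frame rate loss after enabling ray tracing."""
    return 100.0 * (base_fps - rt_fps) / base_fps

BASE_FPS = 80  # rasterization-only example from the text
for label, fps in (("medium RT", 62), ("ultra RT", 38)):
    added = frame_time_ms(fps) - frame_time_ms(BASE_FPS)
    print(f"{label}: {fps} FPS, {fps_drop_percent(BASE_FPS, fps):.1f}% lower, "
          f"+{added:.2f} ms per frame")
```

Thinking in milliseconds is how developers usually budget effects: at 80 FPS a frame has 12.5 ms, so the medium setting in this example spends a few extra milliseconds on ray tracing while the ultra setting more than doubles the frame time.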

That's why almost no games use pure ray tracing. Instead, they employ hybrid rendering: rasterization handles the primary geometry and opaque surfaces (about 80-90% of the pixel workload), while ray tracing selectively adds reflections, shadows, ambient occlusion, and global illumination. You get the visual wow moments where they matter most without completely tanking performance.

Unreal Engine 5's Lumen system exemplifies this trade-off. It mixes software ray tracing with traditional techniques, and can use hardware ray tracing where it is available, to deliver by some estimates roughly 80% of full ray tracing quality at just 40% of the performance cost. That's the kind of engineering compromise that makes advanced lighting accessible to developers working on games that need to run on a wide range of hardware.

The memory bandwidth demands are equally serious. Each ray traced needs to fetch BVH node data, texture information, and material properties. High-end GPUs mitigate this with massive caches and clever memory hierarchy designs, but the data movement remains a significant bottleneck, especially at higher resolutions.

Different GPU tiers handle ray tracing with vastly different capabilities. An RTX 4080 can manage ray-traced games at 4K with demanding settings. An RTX 3060 works well at 1080p but struggles at higher resolutions. Budget cards from previous generations might technically support ray tracing but deliver unplayable frame rates without aggressive quality compromises.

That performance reality shapes how developers implement ray tracing. Most treat it as a premium feature for high-end systems rather than a baseline requirement.

Raw ray tracing performance told only half the story. The other breakthrough came from an unexpected direction: artificial intelligence.

The fundamental problem is noise. Fire just a few rays per pixel and you get a grainy, speckled image that looks terrible. Traditional path tracing uses hundreds or thousands of rays per pixel to eliminate that noise through pure brute force. Real-time frame rates can't afford that luxury.
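The relationship between ray count and noise can be illustrated with a toy Monte Carlo experiment. This is a sketch under simple assumptions: every pixel should have the same value (a light that is 50% visible), each ray is a random yes/no sample, and noise is measured as the spread across pixels.

```python
import random
import statistics

def estimate_pixel(true_visibility: float, rays: int, rng: random.Random) -> float:
    """Average of `rays` random binary samples (1 = the ray reaches the light)."""
    reached = sum(1 for _ in range(rays) if rng.random() < true_visibility)
    return reached / rays

def noise_level(rays: int, pixels: int = 500, seed: int = 0) -> float:
    """Standard deviation across pixels that should all look identical."""
    rng = random.Random(seed)
    values = [estimate_pixel(0.5, rays, rng) for _ in range(pixels)]
    return statistics.pstdev(values)

for rays in (1, 4, 64, 1024):
    print(f"{rays:>5} rays/pixel -> noise {noise_level(rays):.3f}")
```

The noise shrinks roughly with the square root of the ray count, so halving it costs about four times as many rays. That diminishing return is what makes brute force so expensive.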

NVIDIA attacked the cost from a different angle with DLSS (Deep Learning Super Sampling), a neural network trained on tens of thousands of high-quality reference images. Instead of rendering at native resolution, the GPU renders at a lower resolution (say, 1080p instead of 4K) with ray tracing enabled, then uses AI to reconstruct a higher-resolution image that can look as sharp as native rendering, and sometimes sharper.

DLSS 3.5 added Ray Reconstruction, which replaces hand-tuned denoisers with a single AI model. It reportedly improved frame rates by 55% while delivering better image quality than native resolution in many scenarios. The AI model learned to recognize and preserve fine details that traditional upscaling would blur.

"DLSS 3 uses its Super Resolution AI to recreate three-quarters of an initial frame, roping in Frame Generation to complete the second frame."

— Windows Central, Super Resolution Guide

But DLSS 3 went further. It introduced frame generation: Super Resolution reconstructs three-quarters of the pixels in one frame, and the AI then generates an entirely new frame to slot between rendered frames. You render 30 frames per second, but the display shows 60. In demonstrations, Cyberpunk 2077 jumped from 30 FPS to over 235 FPS with DLSS 4's multi-frame generation on RTX 50 series cards.
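The arithmetic behind those claims is simple enough to sketch. The function below is a hypothetical helper, not part of any DLSS API; it assumes upscaling from 1080p to 4K and treats the number of generated frames per rendered frame as a parameter (one for basic frame generation, three as an assumed multi-frame setting).

```python
from fractions import Fraction
from typing import Tuple

def rendered_fraction(render_res: Tuple[int, int], output_res: Tuple[int, int],
                      generated_per_rendered: int) -> Fraction:
    """Share of displayed pixels that the GPU renders conventionally."""
    rw, rh = render_res
    ow, oh = output_res
    spatial = Fraction(rw * rh, ow * oh)               # upscaling savings
    temporal = Fraction(1, 1 + generated_per_rendered) # frame generation savings
    return spatial * temporal

HD, UHD = (1920, 1080), (3840, 2160)
for generated in (0, 1, 3):
    share = rendered_fraction(HD, UHD, generated)
    print(f"{generated} generated frame(s) per rendered frame: {share} of pixels rendered")
```

With upscaling alone the GPU renders a quarter of the displayed pixels, which matches the "three-quarters reconstructed" figure in the quote. Adding one generated frame per rendered frame drops that to one eighth.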

This isn't just upscaling—it's using AI to solve the fundamental performance barrier that kept ray tracing out of real-time applications for decades.

AMD responded with FSR (FidelityFX Super Resolution), which started as a spatial upscaling technique that needs no machine learning hardware. FSR 2 switched to temporal upscaling, reusing data from previous frames to improve image quality. FSR 3 brought frame generation to compete with DLSS 3, offering similar benefits across a wider range of hardware, including older cards without dedicated AI accelerators.

Intel's XeSS took a middle path, designed to work both on Arc GPUs with dedicated AI hardware (XMX units) and on competitors' cards using traditional shaders. XeSS 1.3 delivered about 10% frame rate improvements on discrete Arc GPUs, with XeSS 2 adding frame generation for their Battlemage architecture.

Microsoft recognized the fragmentation problem and introduced DirectSR—not another upscaling technology, but an API providing a single code path for developers to support DLSS, FSR, and XeSS simultaneously. Instead of implementing three separate solutions, studios can use DirectSR and let the driver route to whichever technology the player's GPU supports.

These upscaling, frame generation, and denoising techniques transformed ray tracing from a niche feature for enthusiasts with $1,200 GPUs into something accessible on mid-range hardware. You still need the RT cores to do the actual ray tracing, but reconstruction makes it practical at playable frame rates.

Hardware capability meant nothing without software support. Getting ray tracing from a technical demonstration to mainstream feature required buy-in from engine developers, game studios, and middleware providers.

The transformation happened faster than most expected. Battlefield V launched in 2018 as one of the first games with ray-traced reflections, showing real-time reflections in vehicle surfaces and water that actually matched the surrounding environment. Control followed in 2019, using ray tracing for reflections, shadows, and diffuse global illumination to create its brutalist architecture with genuinely eerie, realistic lighting.

Metro Exodus, Cyberpunk 2077, and Control became the poster children for what ray tracing could do. Cyberpunk's neon-soaked Night City uses ray tracing for reflections, shadows, and global illumination, creating lighting that fundamentally changes how the world feels. The difference between ray tracing on and off isn't just "prettier"—it's the difference between a game that looks good and one that looks believable.

Game engines moved quickly to integrate ray tracing APIs. Unreal Engine, Unity, CryEngine, and proprietary engines from major studios added support within two years of the RTX 20 series launch. Microsoft's DirectX Raytracing (DXR) API and Vulkan's ray tracing extensions provided standardized ways for developers to access the hardware, smoothing the adoption curve.

But gaming is just one application. Architecture firms use real-time ray tracing for client walkthroughs of buildings that don't exist yet, showing accurate daylighting and material interactions. Product designers iterate on car interiors and consumer electronics with physically accurate materials rather than waiting hours for each render. Film previz teams block out shots with lighting quality that used to require finished VFX.

Medical visualization, scientific simulation, and VR training scenarios all benefit from the same hardware. When you can accurately simulate light transport in real-time, it opens doors beyond entertainment.

The console market validated ray tracing's mainstream arrival. Both the PlayStation 5 and Xbox Series X launched in 2020 with AMD RDNA 2 GPUs including ray tracing hardware. That guaranteed a baseline install base of millions of systems capable of ray tracing, giving developers confidence to design around the feature rather than treat it as an optional PC enhancement.

By 2025, ray tracing appears in over 200 shipping games, from indie titles to AAA blockbusters. It's no longer a question of "if" developers will use ray tracing, but "how much" and "for which effects."

Here's what's often misunderstood: even with dedicated hardware and AI upscaling, pure ray tracing remains impractical for most real-time applications on consumer hardware. For all but the simplest scenes, the computational demands are still too high.

What we actually have is a carefully balanced hybrid approach. Rasterization still does the heavy lifting for primary visibility—figuring out what geometry is visible from the camera. That's what rasterization has always been good at, and decades of optimization make it blazingly fast.

Ray tracing comes in as a secondary pass for specific effects where physical accuracy matters most:

Reflections get the most dramatic visual improvement. Instead of screen-space approximations that break down when reflected objects are off-screen, ray-traced reflections show the actual environment, correctly positioned and colored. Puddles on streets reflect buildings above. Chrome surfaces show accurate distortions. Mirrors work like mirrors.

Shadows gain soft edges and contact hardening that look natural. Traditional shadow maps struggle with soft shadows from large light sources and with contact shadows where objects meet surfaces. Ray tracing handles both naturally because it's simulating actual light occlusion.

Global illumination simulates light bouncing between surfaces—the indirect lighting that gives scenes depth and cohesion. A red wall casts a subtle red tint on nearby white surfaces. Light spilling through a window brightens an entire room, not just the surfaces directly hit by sun rays. This is where ray tracing really sells the physicality of a space.

Ambient occlusion darkens crevices and contact points between objects, grounding them in the scene. Ray tracing calculates this more accurately than screen-space approximations, especially for complex geometry.

Most games use ray tracing for two or three of these effects, not all of them. The performance budget determines which effects get the ray tracing treatment. Developers profile their scenes, identify where ray tracing delivers the biggest visual return, and apply it strategically.

Several techniques stretch that budget further. Variable rate shading reduces shading work, and with it ray counts, in peripheral vision and less important screen areas where players won't notice reduced quality. Temporal accumulation reuses ray tracing data from previous frames, amortizing the cost over time. Spatial filtering shares ray results between neighboring pixels so fewer rays are needed overall.
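Temporal accumulation is the easiest of these to sketch. The version below is a deliberately simplified assumption: a static camera, one binary sample per pixel per frame, and an exponential moving average with a hypothetical blend factor `alpha`. Real implementations also reproject the history with motion vectors and discard it when it no longer matches the scene.

```python
import random
from typing import Tuple

def accumulate(history: float, sample: float, alpha: float = 0.1) -> float:
    """Exponential moving average: blend the new noisy sample into the history."""
    return (1.0 - alpha) * history + alpha * sample

def simulate(pixels: int = 1000, frames: int = 60, alpha: float = 0.1,
             seed: int = 1) -> Tuple[float, float]:
    """Mean absolute error of the last raw sample versus the accumulated value."""
    rng = random.Random(seed)
    true_value = 0.5  # every pixel should converge to this brightness
    raw_error = accumulated_error = 0.0
    for _ in range(pixels):
        sample = 1.0 if rng.random() < true_value else 0.0
        history = sample  # the first frame has no history to blend with
        for _ in range(frames - 1):
            sample = 1.0 if rng.random() < true_value else 0.0
            history = accumulate(history, sample, alpha)
        raw_error += abs(sample - true_value)
        accumulated_error += abs(history - true_value)
    return raw_error / pixels, accumulated_error / pixels

raw, accumulated = simulate()
print(f"one ray per frame: error {raw:.3f}, with accumulation: error {accumulated:.3f}")
```

Each frame still pays for only one ray per pixel, yet the accumulated value sits much closer to the correct brightness. The trade-off is lag: a smaller `alpha` smooths more but reacts more slowly when lighting changes, which is one source of the ghosting artifacts denoisers have to manage.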

These optimizations are why hybrid rendering works. Pure ray tracing might be the ultimate goal, but as a rough rule of thumb hybrid techniques deliver something like 90% of the visual benefit at 30% of the performance cost. That's engineering pragmatism meeting computational reality.

Understanding what makes RT cores special requires looking at the actual hardware architecture and how it differs from traditional GPU compute.

A conventional GPU shader core is versatile—it can handle vertex transformations, pixel shading, compute operations, and basically any programmable graphics workload. That flexibility comes at a cost: it's not optimized for any particular task.

RT cores are the opposite. They're fixed-function units designed specifically to traverse BVH structures and perform ray-geometry intersection tests. No flexibility, just raw speed for one critical operation.

When a ray enters the scene, the RT core starts at the root of the BVH tree. It tests whether the ray intersects the bounding box at that node. If not, it instantly discards that entire branch of the tree—potentially millions of triangles eliminated in a single test. If the ray does intersect, it descends to the child nodes and repeats the process.

Once the traversal reaches leaf nodes containing actual geometry, the RT core performs ray-triangle intersection tests—pure computational geometry determining the exact point where the ray hits a triangle, if at all. These tests involve dot products, cross products, and conditional logic happening at a scale of billions of calculations per frame.
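One widely used formulation of that test is the Möller–Trumbore algorithm, shown here as a software sketch. Whether any particular RT core uses this exact formulation is not something the article states, so treat it as an illustration of the math rather than a description of the hardware.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3, eps: float = 1e-9) -> Optional[float]:
    """Distance t along the ray to the hit point, or None if the ray misses."""
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:            # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det       # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, edge1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None    # ignore hits behind the ray origin

triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.25, 0.25, -1.0), (0.0, 0.0, 1.0), *triangle))  # hit
print(ray_triangle((2.0, 2.0, -1.0), (0.0, 0.0, 1.0), *triangle))    # miss
```

The whole test is two cross products, four dot products, one division, and a handful of comparisons, which is exactly the kind of small, fixed recipe that maps well onto dedicated circuitry.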

The specialized silicon implements these operations in fixed gates that execute far faster and more efficiently than equivalent shader code. It's the same principle behind dedicated matrix multiplication units in AI accelerators—when a specific operation dominates your workload, building custom hardware for it pays enormous dividends.

Modern BVH construction happens on the GPU as part of the rendering pipeline, often as a post-processing step after initial scene setup. Researchers have demonstrated techniques like converting axis-aligned bounding boxes to oriented bounding boxes that fit geometry more tightly, achieving an 18.5% improvement for primary rays and 32.4% for secondary rays, with gains of up to 65% in some scenarios. These optimizations happen transparently to developers but make ray tracing progressively more efficient.

Memory hierarchy matters enormously. RT cores need rapid access to BVH node data, which means large, fast caches. NVIDIA's Ada Lovelace architecture (RTX 40 series) roughly doubled ray tracing performance partly through architectural improvements to cache and memory subsystems, not just by adding more RT cores.

The data flow typically works like this: shader cores set up rays (origin, direction, ray parameters), dispatch them to RT cores, which perform traversal and intersection tests, then return hit information back to shaders. Those shaders use the hit data to fetch textures, evaluate materials, spawn new rays for reflections or shadows, and eventually determine the final pixel color. The back-and-forth between RT cores and shaders happens millions of times per frame, making the interconnect bandwidth another critical bottleneck.

It's a delicate dance of specialized hardware, memory architecture, and shader coordination. The RT cores get the spotlight, but they're one part of a larger system optimized for ray tracing workloads.

The performance conversation often overlooks a practical concern: power consumption and heat. Ray tracing doesn't just cost frame rate—it costs watts.

A flagship GPU can draw up to roughly 600 watts under full load, comparable to running a washing machine and far more than a typical refrigerator. When you enable ray tracing, GPU utilization spikes as both the RT cores and shader cores work harder. That increased utilization translates directly to higher power draw and more heat generation.

For desktop PCs with robust cooling and power supplies, this is manageable, if expensive on electricity bills during extended gaming sessions. For laptops, it's a more serious constraint. Gaming laptops already struggle with thermals; adding ray tracing can trigger thermal throttling, where the GPU reduces performance to avoid overheating. Battery life takes a massive hit—expect 30-50% less runtime when ray tracing is enabled on battery power.

The console world handles this through fixed power budgets. PlayStation 5 and Xbox Series X have thermal designs built around specific power envelopes. Games must fit their ray tracing implementation within those constraints, which explains why console ray tracing typically offers lower quality settings than high-end PCs. It's not a capability gap—it's a thermal reality.

Efficiency improvements matter as much as raw performance gains for this reason. Every generation of GPUs aims to deliver more ray tracing performance per watt. NVIDIA's transitions from Turing (RTX 20 series) to Ampere (RTX 30 series) to Ada Lovelace (RTX 40 series) each brought significant efficiency improvements, not just speed increases. AMD's progression from RDNA 2 to RDNA 3 similarly focused on performance-per-watt gains.

From a broader environmental perspective, this matters. Gaming is a multi-billion-user activity. If ray tracing becomes standard but significantly increases energy consumption across millions of systems running for hours daily, the aggregate energy impact is substantial. Data centers using GPUs for rendering, AI, and scientific computing already consume massive amounts of power. Efficiency isn't just about saving money—it's about making these capabilities sustainable at scale.

The industry recognizes this. Recent GPU architectures emphasize dynamic power management, shutting down unused blocks, throttling clock speeds during light workloads, and intelligently routing work to the most efficient processing units. Ray tracing benefits from these improvements like any other workload.

Ray tracing hardware didn't end the evolution—it started it. The trajectory from here gets interesting.

First-generation RT cores (NVIDIA Turing, AMD RDNA 2) proved the concept. Second-generation (Ampere, RDNA 3) improved performance and efficiency. Third-generation (Ada Lovelace, upcoming architectures) added capabilities like better BVH refitting for dynamic scenes and more sophisticated intersection primitives.

Future iterations will likely add hardware support for more complex ray types—curved surfaces, volumetric rays for fog and smoke, caustic sampling for glass and water effects. Current RT cores focus on basic ray-triangle tests; extending that to implicit surfaces, subdivision surfaces, and procedural geometry could eliminate preprocessing overhead and memory consumption for complex models.

Machine learning integration will deepen. Current AI denoising happens as a separate pass after ray tracing. Future architectures might integrate neural network inference directly into the ray tracing pipeline, allowing adaptive sampling that fires more rays where the network predicts high uncertainty and fewer rays where it's confident. That closed-loop approach could dramatically improve quality-per-ray.

Multi-bounce global illumination remains expensive. Most games limit rays to one or two bounces—a ray hits a surface, spawns a reflection or shadow ray, then stops. Realistic global illumination needs many bounces to capture complex light transport. Architectural changes like caching radiance data across frames or using low-resolution light probes updated with ray tracing could make many-bounce global illumination practical.

The software side evolves in parallel. Path tracing—ray tracing's more rigorous form that simulates all light transport through physically based random walks—is starting to appear in games. Minecraft RTX uses full path tracing for its relatively simple geometry. More complex games are experimenting with hybrid path tracing where primary visibility uses rasterization but all lighting is path traced.

Virtual production for film and television increasingly relies on real-time ray tracing. LED stages showing photorealistic environments to actors need correct lighting that responds to camera movement instantly. Ray tracing hardware makes that possible, fundamentally changing how films are shot.

Beyond graphics, the same hardware accelerates scientific visualization, medical imaging, autonomous vehicle simulation, and any domain that needs fast spatial queries. Researchers are exploring using RT cores for collision detection in physics engines, protein docking simulations, and computational geometry problems. The investment in ray tracing hardware may pay dividends far beyond prettier games.

The long-term vision approaches a unified rendering architecture where rasterization disappears entirely. If ray tracing becomes efficient enough through hardware evolution and algorithmic improvements, the hybrid approach becomes unnecessary. Developers could design scenes using pure physics-based lighting without worrying about rasterization's limitations. We're probably a decade away from that on consumer hardware, but the direction is clear.

Technology advances don't exist in a vacuum. Hardware ray tracing is changing who can create photorealistic content and what that means for visual culture.

Before accessible ray tracing, achieving film-quality CGI required expensive render farms, specialized expertise, and—most importantly—time. A solo artist or small indie studio couldn't produce work that visually competed with major studios. The technical barrier was too high.

Now, a developer with a $1,500 workstation can produce renders that approach professional quality. Real-time iteration means artists see results immediately, experiment more freely, and spend creative energy on vision rather than waiting for renders. That democratization opens doors for creators who couldn't previously access these tools.

But it also raises expectations. As photorealism becomes achievable, audiences come to expect it. Games without ray tracing start to look dated faster. The visual language of "good graphics" shifts from "impressive for real-time" to "approaching cinema." That puts pressure on developers, especially smaller teams, to meet rising visual standards or risk seeming amateurish.

There's a quality gap forming between haves and have-nots. Players with RT-capable GPUs get the intended visual experience; those without see a compromised version. It's not unlike how high-refresh monitors create a divide in competitive gaming, but this one affects the artistic vision itself. Developers must decide: design for ray tracing and scale down, or design for maximum compatibility and treat ray tracing as an optional enhancement?

The economics shift too. GPUs with ray tracing capability cost more. That puts cutting-edge visuals behind a higher economic barrier, even as the technology democratizes professional-level tools for creators. It's complicated. The same technology that empowers small studios also makes high-end consumer hardware more expensive, potentially reducing the audience that can fully experience their work.

Education needs to catch up. Graphics programming curricula built around rasterization for decades now need to teach ray tracing fundamentals, BVH construction, importance sampling, and denoising techniques. The skill set for junior graphics programmers is shifting. That's a positive evolution, but it represents a knowledge barrier for self-taught developers and those in regions without updated educational resources.

Accessibility considerations matter too. Ray tracing effects that enhance realism for most players might cause problems for others. Photorealistic lighting can obscure gameplay-critical elements that were visible with traditional lighting. Reflections and global illumination create visual complexity that some players find overwhelming. Games need options to adjust or disable these effects not just for performance, but for varied human visual processing capabilities.

Should you care about ray tracing? The answer depends entirely on what you do and what you value.

For PC gamers with high-end hardware, ray tracing delivers a clear upgrade in visual quality, especially in single-player games where you can appreciate environmental detail. The difference in games like Cyberpunk 2077, Control, or Metro Exodus is substantial enough to justify the performance cost on an RTX 4070 or higher. Competitive multiplayer players often disable it for maximum frame rates, which is rational—a reflection doesn't help you win.

For budget-conscious gamers, ray tracing might not be worth the premium. An RTX 3060 can handle ray tracing at 1080p with DLSS enabled, but you're paying extra for a feature you'll sometimes disable to maintain performance. At this tier, spending on a better rasterization-focused GPU might deliver more consistent results.

For console players, ray tracing is part of the current-generation experience whether you explicitly seek it or not. PS5 and Xbox Series X include it, and developers increasingly design for it. You don't make a purchasing decision around it—it's just there, working within the console's fixed capabilities.

For 3D artists and designers, ray tracing is transformative. Real-time feedback while working with physically accurate materials and lighting eliminates the render-wait-revise cycle that killed productivity. For professional visualization, architecture, and product design, ray tracing isn't optional—it's the baseline expectation for modern workflows.

For developers, ray tracing simplifies some workflows while complicating others. Lighting becomes more physically accurate and thus easier to reason about, but performance optimization gets trickier. You're trading artistic control (hand-placed fake lights that looked good) for physical correctness (real light behavior that might not look how you expected). It's a different skill set, not necessarily easier or harder.

For VR users, ray tracing is largely impractical right now. VR's performance demands—90+ FPS per eye at high resolution—exceed what most GPUs can deliver with ray tracing enabled. Some architectural and training applications use it because frame rate matters less than accuracy, but gaming VR still relies on rasterization. Future hardware might change this equation.

The pragmatic take: ray tracing is genuinely important technology that will define the next generation of interactive graphics. But today, in 2025, it's still a feature that shines on high-end systems and struggles on lower-end hardware. Judge it based on your specific use case and hardware budget, not on hype.

Several specific research directions and engineering challenges will define ray tracing's next phase.

Denoising quality remains a challenge. Current AI denoisers occasionally produce artifacts—ghosting in motion, over-smoothing of fine details, or temporal instability that makes images shimmer between frames. Improving denoiser quality without increasing latency or computational cost requires better training data, more efficient network architectures, or hybrid approaches that combine traditional filtering with machine learning.

BVH construction for dynamic scenes needs work. When objects move, the BVH needs updating. Current approaches either rebuild from scratch (expensive) or incrementally refit the existing tree (fast but produces suboptimal trees that hurt traversal performance). Better algorithms for incremental BVH updates could make ray tracing more practical for physics-heavy games with lots of moving objects.
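The trade-off with refitting can be shown in a few lines. This sketch uses a hypothetical four-primitive tree and only recomputes bounding boxes bottom-up; the grouping of primitives into subtrees never changes, which is why refitting is cheap and why the tree degrades when objects move far from their original neighbors.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Box = Tuple[Vec3, Vec3]  # (low corner, high corner)

def union(a: Box, b: Box) -> Box:
    return (tuple(map(min, a[0], b[0])), tuple(map(max, a[1], b[1])))

def cube(x: float) -> Box:
    """Unit cube whose low corner sits at (x, 0, 0)."""
    return ((x, 0.0, 0.0), (x + 1.0, 1.0, 1.0))

@dataclass
class Node:
    box: Box
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prim: Optional[int] = None  # set only on leaves

def refit(node: Node, prim_boxes: List[Box]) -> Box:
    """Recompute boxes bottom-up. Which primitives share a subtree never changes."""
    if node.prim is not None:
        node.box = prim_boxes[node.prim]
    else:
        node.box = union(refit(node.left, prim_boxes),
                         refit(node.right, prim_boxes))
    return node.box

def overlaps_x(a: Box, b: Box) -> bool:
    return a[0][0] <= b[1][0] and b[0][0] <= a[1][0]

# Four cubes in a row; the tree pairs neighbors: ((0, 1), (2, 3)).
prims = [cube(0.0), cube(2.0), cube(4.0), cube(6.0)]
leaves = [Node(prims[i], prim=i) for i in range(4)]
left = Node(union(prims[0], prims[1]), leaves[0], leaves[1])
right = Node(union(prims[2], prims[3]), leaves[2], leaves[3])
root = Node(union(left.box, right.box), left, right)

before = overlaps_x(root.left.box, root.right.box)  # siblings start out disjoint
prims[1] = cube(20.0)                               # primitive 1 moves far away
refit(root, prims)
after = overlaps_x(root.left.box, root.right.box)   # left box now swallows the right
print(before, after, root.left.box)
```

After the move, the left subtree's box stretches across the entire right subtree, so rays aimed at the right side must now descend into both branches. A full rebuild would regroup the primitives and restore tight boxes, at a much higher cost.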

Coherence and ray scheduling offer untapped performance. Rays that travel in similar directions and hit nearby geometry can share cache data and BVH traversal work. Sorting rays by direction and scheduling them efficiently could improve throughput. Some research explores GPU architecture changes to better exploit ray coherence, but it requires close coordination between hardware and software.
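One simple way to picture direction sorting is to bucket rays by the octant their direction points into. This is a CPU-side sketch under that assumption; real schedulers run on the GPU and typically use finer-grained keys, and the function names here are hypothetical.

```python
def octant(direction):
    """Map a direction to 0..7 using the sign of each component."""
    dx, dy, dz = direction
    return int(dx < 0) | (int(dy < 0) << 1) | (int(dz < 0) << 2)


def sort_rays_by_octant(rays):
    """Reorder (origin, direction) rays so similar directions sit together."""
    return sorted(rays, key=lambda ray: octant(ray[1]))


rays = [
    ((0, 0, 0), (1.0, 0.2, 0.1)),
    ((0, 0, 0), (-1.0, 0.3, 0.0)),
    ((0, 0, 0), (0.9, 0.1, 0.3)),
    ((0, 0, 0), (-0.8, 0.1, 0.2)),
]
batched = sort_rays_by_octant(rays)
for origin, direction in batched:
    print(octant(direction), direction)
```

After sorting, the two rays heading toward +x are adjacent, as are the two heading toward -x. Rays processed back to back are then more likely to walk the same BVH nodes while those nodes are still in cache.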

Compressed BVH formats reduce memory footprint and bandwidth. Current BVHs are memory-hungry, limiting scene complexity. Quantized bounding-box coordinates and compressed node formats can shrink BVH size significantly with little loss in traversal efficiency. Getting these into production requires hardware support for decompression during traversal, which means GPU architecture changes across vendors.
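As a sketch of one such compression idea, a child's bounding box can be stored as small integers on a grid spanning its parent's box. The 8-bit layout and function names below are assumptions for illustration; actual hardware formats differ and are vendor-specific.

```python
import math


def quantize_box(child, parent, bits=8):
    """Snap a child AABB onto an integer grid spanning the parent AABB.

    Mins round down and maxes round up, so the stored box never shrinks.
    """
    levels = (1 << bits) - 1
    lo_q, hi_q = [], []
    for axis in range(3):
        p_lo, p_hi = parent[0][axis], parent[1][axis]
        extent = (p_hi - p_lo) or 1.0  # guard against a flat parent box
        lo = math.floor((child[0][axis] - p_lo) / extent * levels)
        hi = math.ceil((child[1][axis] - p_lo) / extent * levels)
        lo_q.append(max(0, min(levels, lo)))
        hi_q.append(max(0, min(levels, hi)))
    return lo_q, hi_q


def dequantize_box(quantized, parent, bits=8):
    """Recover a (slightly enlarged) world-space box from the grid indices."""
    levels = (1 << bits) - 1
    lo_q, hi_q = quantized
    size = [parent[1][a] - parent[0][a] for a in range(3)]
    lo = tuple(parent[0][a] + lo_q[a] / levels * size[a] for a in range(3))
    hi = tuple(parent[0][a] + hi_q[a] / levels * size[a] for a in range(3))
    return lo, hi


parent = ((0.0, 0.0, 0.0), (10.0, 10.0, 10.0))
child = ((1.3, 2.7, 0.0), (4.2, 9.9, 10.0))
packed = quantize_box(child, parent)
restored = dequantize_box(packed, parent)
print(packed)
print(restored)
```

Six 8-bit indices take 6 bytes, compared with 24 bytes for six 32-bit floats. The restored box is slightly larger than the original, so no hits are missed; the cost is a few extra false-positive box tests during traversal. A production encoder would also need to handle floating-point rounding at exact grid boundaries more carefully than this sketch does.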

Specialized ray types could accelerate common effects. Most RT cores optimize for primary rays (camera to scene) and secondary rays (shadows, reflections). Volumetric rays for fog, smoke, and atmospheric effects have different performance characteristics. Caustic rays for light focusing through glass or water need importance sampling. Hardware tailored to these specific ray types might offer big wins for rendering effects that currently struggle.

Integration with mesh shading and geometry pipelines remains messy. Modern GPUs have sophisticated geometry processing with mesh shaders, primitive amplification, and tessellation. Ray tracing mostly operates on triangle meshes that have already been built into an acceleration structure in memory, creating an impedance mismatch with geometry generated on the fly. Tighter integration could enable procedurally generated geometry to be ray traced efficiently without first materializing it in memory.

Cross-vendor standardization continues to improve but isn't seamless. DirectX Raytracing and Vulkan ray tracing extensions provide common APIs, but GPU vendors implement them differently. NVIDIA's RT cores, AMD's Ray Accelerators, and Intel's ray tracing units have different performance characteristics for identical code. APIs like Microsoft's DirectSR help on the upscaling side by exposing each vendor's super-resolution technique through one interface, but developers still optimize ray tracing separately per vendor.

These aren't just academic concerns—they directly affect what developers can ship and how games look and perform. Solving them requires hardware-software co-design, industry collaboration, and sustained research investment.

What happened with hardware ray tracing isn't just a performance milestone. It's the moment interactive graphics shifted from approximating reality to simulating it.

For forty years, real-time graphics meant clever faking. Artists learned to paint highlights where reflections should be, place invisible lights where none existed, and bake shadows into textures. It worked because humans are good at interpreting visual shorthand. But it was always, fundamentally, a trick.

Ray tracing removes the tricks. Light behaves like light. Reflections reflect. Shadows fall where physics says they should. The visual language stops being about clever imitation and starts being about accurate simulation.

That shift changes everything downstream. Artists work differently when materials behave predictably. Designers create spaces differently when lighting is physically based. Players perceive worlds differently when visual coherence matches their expectations from reality. The uncanny valley shrinks because the fundamentals are correct, even if polygon counts and texture resolution aren't perfect.

We're still in the early phase. Consumer hardware ray tracing is seven years old, barely out of infancy for a technology that will define decades of visual computing. Performance will improve. Quality will increase. The techniques will mature. In ten years, we'll look back at 2025's ray tracing the way we now view early 2000s per-pixel lighting: a crucial step, but primitive compared to what came after.

The implications reach beyond games and graphics. Real-time physical simulation at this level enables scientific visualization, architectural walkthroughs, medical training, autonomous vehicle testing, and applications we haven't imagined yet. When you can simulate reality fast enough to interact with it, you create tools for understanding and manipulating the world.

But the core achievement is this: Silicon learned to see. Not to process images, but to trace light itself. That's the revolution. Everything else is just the beginning of figuring out what we can do with it.

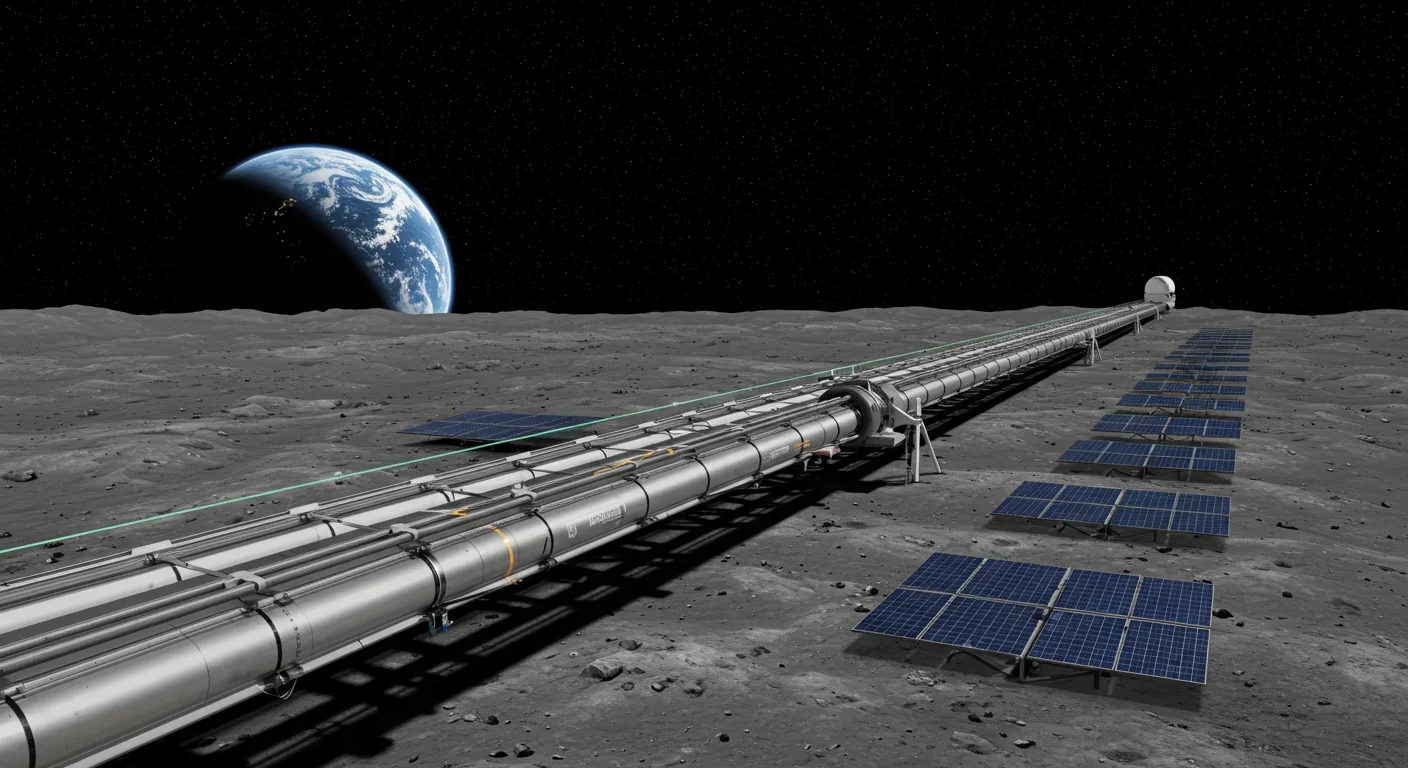

Lunar mass drivers—electromagnetic catapults that launch cargo from the Moon without fuel—could slash space transportation costs from thousands to under $100 per kilogram. This technology would enable affordable space construction, fuel depots, and deep space missions using lunar materials, potentially operational by the 2040s.

Ancient microorganisms called archaea inhabit your gut and perform unique metabolic functions that bacteria cannot, including methane production that enhances nutrient extraction. These primordial partners may influence longevity and offer new therapeutic targets.

CAES stores excess renewable energy by compressing air in underground caverns, then releases it through turbines during peak demand. New advanced adiabatic systems achieve 70%+ efficiency, making this decades-old technology suddenly competitive for long-duration grid storage.

Human children evolved to be raised by multiple caregivers—grandparents, siblings, and community members—not just two parents. Research shows alloparenting reduces parental burnout, improves child development, and is the biological norm across cultures.

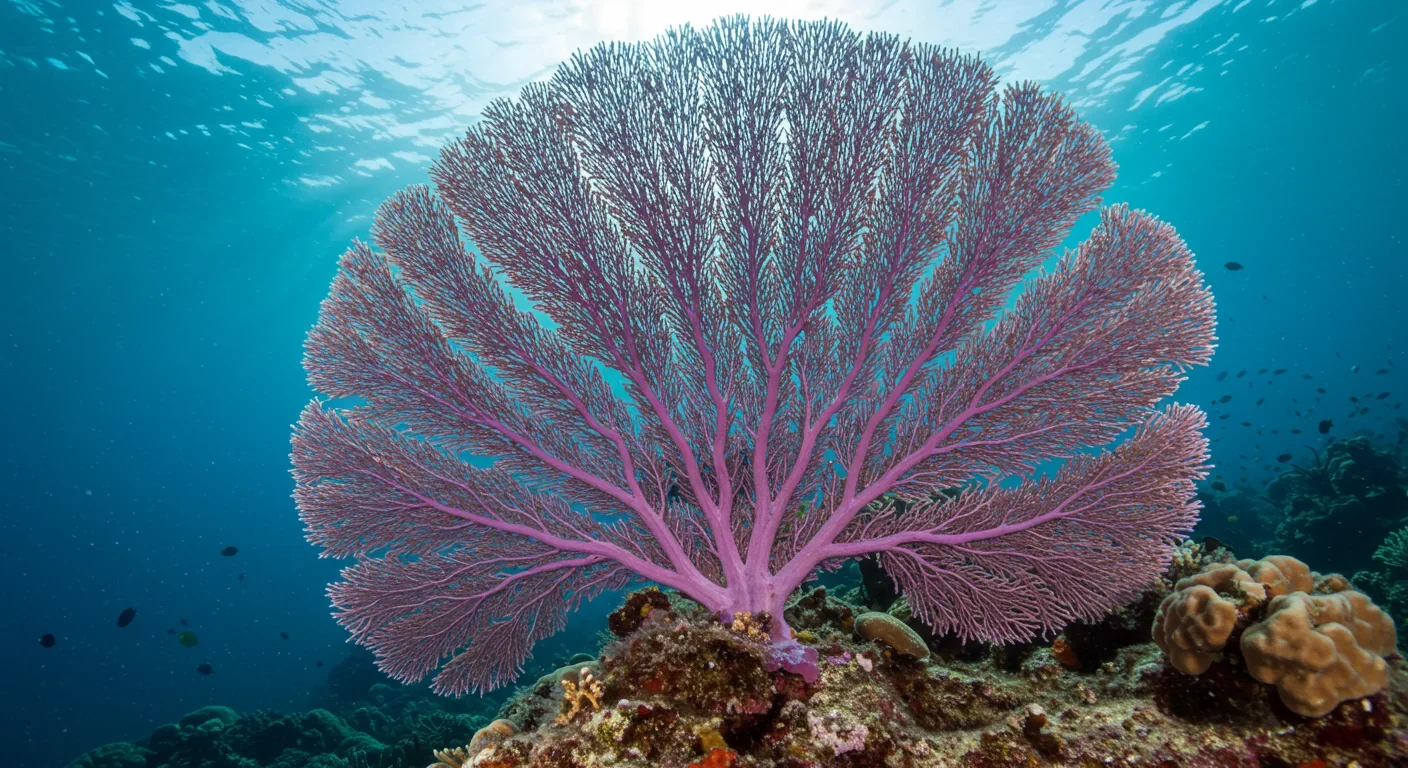

Soft corals have weaponized their symbiotic algae to produce potent chemical defenses, creating compounds with revolutionary pharmaceutical potential while reshaping our understanding of marine ecosystems facing climate change.

Generation Z is the first cohort to come of age amid a polycrisis - interconnected global failures spanning climate, economy, democracy, and health. This cascading reality is fundamentally reshaping how young people think, plan their lives, and organize for change.

Zero-trust security eliminates implicit network trust by requiring continuous verification of every access request. Organizations are rapidly adopting this architecture to address cloud computing, remote work, and sophisticated threats that rendered perimeter defenses obsolete.