How Tensor Cores Transformed AI

TL;DR: Tensor cores, specialized hardware introduced by NVIDIA in 2017, transformed AI from a research pursuit to a trillion-dollar industry by accelerating matrix operations 5-20x, cutting training costs by 80%, and enabling real-time inference at scale across every sector.

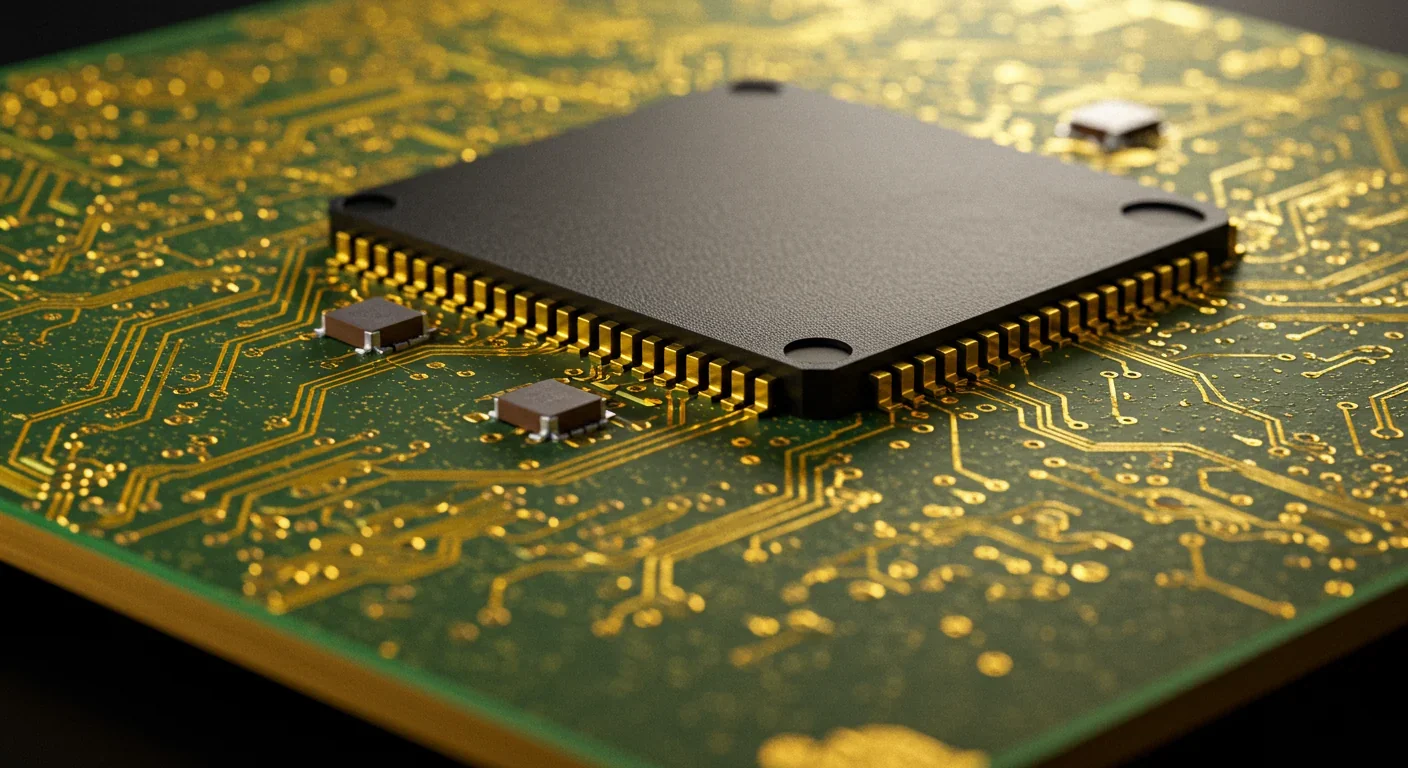

The next time you ask ChatGPT a question or watch as Midjourney conjures an image from text, pause for a second. That response traveling back to you in milliseconds? It's powered by hardware innovation that didn't exist seven years ago. In 2017, NVIDIA introduced something called tensor cores—specialized circuits designed to excel at one task: multiplying matrices really, really fast. Today, those cores are why AI went from a research curiosity to something reshaping every industry on Earth.

Before tensor cores arrived, training a large language model meant renting expensive GPU clusters for weeks or months. The costs alone kept AI capabilities locked behind corporate and academic walls. NVIDIA's Volta architecture changed that equation overnight, delivering up to 12x improvements in peak performance and cutting training times by 5x compared to the previous generation. Suddenly, what took a month could happen in a week. What cost millions could cost hundreds of thousands. The floodgates opened.

But tensor cores aren't just about speed. They represent a fundamental rethinking of how we do computation for AI. A traditional CUDA core completes one fused multiply-add on a single pair of numbers per clock cycle: fine for graphics, inefficient for the matrix operations that dominate deep learning. A first-generation tensor core, by contrast, computes D = A×B + C on entire 4x4 matrices in a single cycle, 64 fused multiply-adds at once. It's like comparing a worker placing one brick at a time to a crew dropping entire wall sections into place. Different tools for different jobs.

If you've spent any time with GPUs, you know about CUDA cores, the general-purpose workhorses that handle everything from rendering video games to scientific simulations. CUDA cores are flexible, but that flexibility comes with a cost. Each core completes one fused multiply-add (two floating-point operations) per cycle, which means even straightforward matrix math is split into huge numbers of scalar operations that must be coordinated across thousands of cores.

Tensor cores throw out that flexibility in exchange for raw throughput. They're purpose-built for matrix multiplication—the backbone of neural network training and inference. When you multiply two matrices, you're essentially performing thousands of multiply-and-add operations. A tensor core does those operations in bulk, processing 4x4 tiles of data simultaneously. The result? Massive acceleration for AI workloads while using the same silicon real estate more efficiently.
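To make the tile idea concrete, here is a minimal pure-Python sketch. It assumes a Volta-style primitive that computes D = A×B + C on 4x4 tiles; the function name `mma_4x4` is hypothetical, not an NVIDIA API. The sketch decomposes a larger matrix multiply into those tile operations and counts how many tile calls stand in for the scalar multiply-adds a CUDA core would issue one at a time.

```python
from typing import List, Tuple

Matrix = List[List[float]]
TILE = 4  # first-generation (Volta-style) tensor cores work on 4x4 tiles


def mma_4x4(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Hypothetical tensor-core primitive: returns D = A @ B + C for 4x4 tiles.

    Hardware performs these 64 multiply-adds as one operation; the loops here
    only model the math."""
    return [
        [c[i][j] + sum(a[i][k] * b[k][j] for k in range(TILE)) for j in range(TILE)]
        for i in range(TILE)
    ]


def get_tile(m: Matrix, row: int, col: int) -> Matrix:
    """Slice the 4x4 tile whose top-left corner is (row, col)."""
    return [r[col:col + TILE] for r in m[row:row + TILE]]


def tiled_matmul(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """Multiply n x n matrices (n divisible by 4) using only 4x4 tile operations.

    Returns the product and the number of tile operations issued."""
    n = len(a)
    if n % TILE:
        raise ValueError("this sketch assumes dimensions divisible by 4")
    out = [[0.0] * n for _ in range(n)]
    tile_ops = 0
    for i in range(0, n, TILE):
        for j in range(0, n, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]  # running C tile
            for k in range(0, n, TILE):
                acc = mma_4x4(get_tile(a, i, k), get_tile(b, k, j), acc)
                tile_ops += 1
            for di in range(TILE):
                out[i + di][j:j + TILE] = acc[di]
    return out, tile_ops


if __name__ == "__main__":
    n = 8
    a = [[float(i - j) for j in range(n)] for i in range(n)]
    b = [[float((i * j) % 5) for j in range(n)] for i in range(n)]
    product, ops = tiled_matmul(a, b)
    print(f"{ops} tile ops cover {n ** 3} multiply-adds ({n ** 3 // ops} per tile op)")
```

For an 8x8 product this issues 8 tile operations, each covering 64 multiply-adds, in place of 512 individually scheduled multiply-adds.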

Tensor cores can process 4x4 matrix operations in a single cycle, while CUDA cores must coordinate thousands of individual operations—a fundamental architectural difference that makes AI training 5-20x faster.

Here's the trade-off: tensor cores can't do general-purpose computing. You can't use them to render graphics or run physics simulations. They're specialists, and specialists don't multitask. But in the world of deep learning, specialization is exactly what you need. Neural networks spend 90% of their compute time on matrix operations. Optimize that, and everything else becomes background noise.

The architectural innovation extends beyond raw compute. Tensor cores support multiple precision formats: FP16, INT8, and even FP4 on the newer Blackwell architecture. Lower precision means faster computation and fewer bytes moved per value, which translates to lower power consumption. On Blackwell chips, FP4 operations consume just 16.75 watts per streaming multiprocessor, compared to 55 watts for FP8. That reduction of roughly 70% isn't just an efficiency win; it's the difference between economically viable AI inference and burning cash.
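The relative saving can be computed directly from the two wattages quoted above, and the bandwidth side of the argument can be checked the same way. This is plain arithmetic; the 4096x4096 matrix size is an arbitrary illustrative choice, and the bytes-per-element values are the standard storage sizes for each format.

```python
# Storage size of one element in each format (FP4 packs two values per byte).
BYTES_PER_ELEMENT = {"FP32": 4.0, "FP16": 2.0, "FP8": 1.0, "INT8": 1.0, "FP4": 0.5}


def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100.0


def matrix_bytes(rows: int, cols: int, fmt: str) -> float:
    """Bytes needed to load a rows x cols matrix stored in `fmt`."""
    return rows * cols * BYTES_PER_ELEMENT[fmt]


if __name__ == "__main__":
    # Wattages quoted in the text for Blackwell FP8 versus FP4.
    print(f"power reduction: {percent_reduction(55.0, 16.75):.1f}%")
    for fmt in ("FP32", "FP16", "FP8", "FP4"):
        mib = matrix_bytes(4096, 4096, fmt) / 2**20
        print(f"{fmt}: {mib:.0f} MiB per 4096x4096 matrix")
```

Each halving of precision halves the bytes that must cross the memory bus for the same matrix, from 64 MiB in FP32 down to 8 MiB in FP4.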

NVIDIA's first-generation tensor cores debuted with the Volta architecture in 2017. The V100 GPU packed 640 tensor cores, each capable of handling FP16 matrix multiplication. Compared to the Pascal generation before it, Volta delivered that 12x increase in theoretical TFLOPS. In practical benchmarks, training times dropped by 5x. For researchers working on image recognition or natural language processing, that meant experiments that used to take a week now finished over a weekend.
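The headline V100 figure can be reproduced from the core count. A sketch of the arithmetic, assuming each first-generation tensor core completes one 4x4x4 matrix multiply-accumulate (64 fused multiply-adds) per clock, counting each fused multiply-add as two floating-point operations, and using the V100 SXM2 boost clock of about 1.53 GHz:

```python
TENSOR_CORES = 640                      # tensor cores on a V100
FMAS_PER_CORE_PER_CLOCK = 4 * 4 * 4     # one 4x4x4 multiply-accumulate per clock
FLOPS_PER_FMA = 2                       # one multiply plus one add
BOOST_CLOCK_HZ = 1.53e9                 # V100 SXM2 boost clock (assumed for this estimate)

peak_flops = TENSOR_CORES * FMAS_PER_CORE_PER_CLOCK * FLOPS_PER_FMA * BOOST_CLOCK_HZ
print(f"peak tensor throughput: {peak_flops / 1e12:.1f} TFLOPS")
```

The result lands at roughly 125 TFLOPS, the peak tensor throughput NVIDIA quotes for the V100 SXM2. Real workloads reach only a fraction of that, which is one reason the practical speedup was closer to 5x than 12x.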

Turing, released in 2018, expanded beyond FP16 to support INT8, INT4, and even INT1 precision. The architectural shift wasn't just about adding new formats—it was about recognizing that different parts of AI workflows need different levels of accuracy. Inference doesn't need the same precision as training. Lower precision formats let you run models faster, cheaper, and with less energy. Turing delivered a 32x performance boost over Pascal for certain workloads, making real-time AI inference feasible on consumer hardware for the first time.

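Ampere arrived in 2020 with third-generation tensor cores supporting FP64, TF32, and bfloat16. TF32, in particular, became a game-changer. It keeps FP32's 8-bit exponent, and therefore its range, but carries only FP16's 10-bit mantissa, which lets FP32 models run on tensor cores with accuracy close to FP32 for most deep learning workloads. NVIDIA cited speedups of up to 20x over Volta's FP32 throughput (the top figure assumes sparsity), and because frameworks could route FP32 matrix math through TF32 automatically, developers could take existing models and see large gains with little or no code change. Ampere also introduced sparsity acceleration for a specific pattern, 2:4 structured sparsity: when two of every four consecutive weights are zero, the tensor cores skip them, for up to twice the throughput on those matrix operations.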
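TF32's trade-off is easy to see by emulation. A minimal sketch, assuming we model TF32 as an FP32 value whose 23-bit mantissa is rounded to 10 bits (round-to-nearest-even here; hardware rounding details may differ) while the 8-bit exponent is left untouched. The helper names are illustrative, and NaN/infinity handling is omitted.

```python
import struct

FP32_MANTISSA_BITS = 23
TF32_MANTISSA_BITS = 10


def to_fp32_bits(x: float) -> int:
    """Raw IEEE single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def from_fp32_bits(bits: int) -> float:
    """Float value of a raw IEEE single-precision bit pattern."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_tf32(x: float) -> float:
    """Round x to TF32 precision: 1 sign bit, 8 exponent bits, 10 mantissa bits.

    Works on the FP32 bit pattern and rounds away the 13 low mantissa bits
    to nearest, ties to even. NaN and infinity are not handled in this sketch."""
    drop = FP32_MANTISSA_BITS - TF32_MANTISSA_BITS   # 13 bits discarded
    bits = to_fp32_bits(x)
    half = 1 << (drop - 1)
    lsb = (bits >> drop) & 1                         # last kept bit, for ties-to-even
    bits = ((bits + half - 1 + lsb) >> drop) << drop
    return from_fp32_bits(bits)


if __name__ == "__main__":
    print(to_tf32(3e38))             # still finite: FP32's range survives
    print(to_tf32(1 + 2**-12))       # rounds to 1.0: detail below TF32's precision is lost
    print(to_tf32(3.14159265))       # pi with only about three significant decimal digits
```

The range survives intact, but only about three decimal digits of precision remain, which is acceptable for most deep learning matrix math and a poor fit for precision-sensitive scientific code.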

"Third-generation Tensor Cores with FP16, bfloat16, TensorFloat-32 (TF32) and FP64 support and sparsity acceleration."

— Wikipedia: Ampere microarchitecture

Then came Hopper in 2022, bringing FP8 precision and a fourth-generation tensor core design. FP8 struck a balance between the speed of INT8 and the flexibility of FP16, becoming an increasingly common choice for large language model training. NVIDIA claimed up to 9x faster training and up to 30x faster inference on large language models compared to Ampere. In practice, that meant training billion-parameter models became accessible to mid-sized companies, not just tech giants.
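FP8 actually comes in two variants, and the split between exponent and mantissa bits sets each one's range. The sketch below computes the largest finite value of an IEEE-754-style binary format from its bit widths. It treats E5M2 as IEEE-style; the E4M3 variant used for FP8 deep learning gives up infinities and reserves only one bit pattern for NaN, so its top exponent stays usable and its maximum is 448, handled here as a separate function.

```python
def ieee_max_finite(exp_bits: int, man_bits: int) -> float:
    """Largest finite value of an IEEE-754-style binary format.

    The all-ones exponent is reserved for inf/NaN, so the largest usable
    exponent field is 2**exp_bits - 2."""
    bias = 2 ** (exp_bits - 1) - 1
    max_exponent = (2 ** exp_bits - 2) - bias
    max_significand = 2.0 - 2.0 ** -man_bits
    return max_significand * 2.0 ** max_exponent


def e4m3_max_finite() -> float:
    """FP8 E4M3 as used for deep learning: no infinities, and only the all-ones
    exponent with all-ones mantissa is NaN, so the top exponent still holds numbers."""
    bias = 7
    max_exponent = 15 - bias             # all-ones exponent field is still usable
    max_significand = 1.0 + 0.5 + 0.25   # mantissa 110 (111 with this exponent is NaN)
    return max_significand * 2.0 ** max_exponent


FORMATS = {
    "FP32 (E8M23)": ieee_max_finite(8, 23),
    "FP16 (E5M10)": ieee_max_finite(5, 10),
    "BF16 (E8M7)": ieee_max_finite(8, 7),
    "FP8 E5M2": ieee_max_finite(5, 2),
    "FP8 E4M3": e4m3_max_finite(),
}

if __name__ == "__main__":
    for name, value in FORMATS.items():
        print(f"{name:>13}: max finite {value:.6g}")
```

E5M2 keeps nearly FP16's range with far less precision, while E4M3 trades range for an extra mantissa bit. One commonly described recipe uses E4M3 for weights and activations and E5M2 for gradients, though that split is a convention rather than a requirement.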

The latest generation, Blackwell, pushes even further. Fifth-generation tensor cores support FP4 and FP6 formats, targeting the explosive growth in AI inference. As models get deployed at scale (think every ChatGPT query, every recommendation system, every autonomous vehicle making split-second decisions), inference costs can dwarf training costs. FP4's lower power draw matters enormously when you're running millions of inference requests per second. Blackwell also unifies INT32 and FP32 execution units, reducing idle cycles for mixed workloads and improving overall throughput.
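FP4 is coarse enough that it only works with scaling. A minimal sketch of block-scaled FP4 quantization, assuming the E2M1 layout (1 sign, 2 exponent, 1 mantissa bit), whose non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4, and 6. The block size of 16 and the plain-float scale are illustrative simplifications: production FP4 formats also store the per-block scale in a compact low-precision format, which this omits, and ties here round toward the smaller magnitude rather than to even.

```python
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1.
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = E2M1_VALUES[-1]


def nearest_e2m1(x: float) -> float:
    """Round x to the nearest signed E2M1 value (ties go to the smaller magnitude)."""
    magnitude = min(E2M1_VALUES, key=lambda v: abs(abs(x) - v))
    return magnitude if x >= 0 else -magnitude


def quantize_block(block: List[float]) -> Tuple[float, List[float]]:
    """Scale the block so its largest magnitude maps to 6.0, then round to E2M1.

    Returns (scale, codes); the codes are E2M1 values held as Python floats."""
    amax = max(abs(v) for v in block)
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    return scale, [nearest_e2m1(v / scale) for v in block]


def dequantize_block(scale: float, codes: List[float]) -> List[float]:
    """Map E2M1 codes back to approximate original values."""
    return [scale * c for c in codes]


def fp4_roundtrip(values: List[float], block_size: int = 16) -> List[float]:
    """Quantize and dequantize `values` block by block (block_size is illustrative)."""
    out: List[float] = []
    for start in range(0, len(values), block_size):
        scale, codes = quantize_block(values[start:start + block_size])
        out.extend(dequantize_block(scale, codes))
    return out


if __name__ == "__main__":
    weights = [0.12, -0.03, 0.5, -0.44, 0.07, 0.25, -0.6, 0.01]
    print(fp4_roundtrip(weights, block_size=4))
```

With only eight magnitudes per sign, the per-block scale does most of the work of preserving range, and the rounding error inside each block is why FP4 is aimed mainly at inference.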

NVIDIA may have pioneered tensor cores, but they're no longer the only game in town. AMD's CDNA architecture, introduced with the MI100 accelerator in 2020, includes matrix cores—AMD's equivalent to tensor cores. While AMD's approach differs architecturally, the goal is identical: accelerate matrix operations for AI workloads. AMD claims competitive performance on AI training benchmarks, though NVIDIA's software ecosystem and CUDA dominance give it a persistent advantage.

AMD's matrix cores use a different instruction set, optimized for the ROCm platform rather than CUDA. For organizations already invested in AMD hardware or looking to avoid vendor lock-in, that matters. But for most developers, NVIDIA's tooling, libraries, and community support make it the default choice. PyTorch and TensorFlow both optimize heavily for CUDA, and features like automatic mixed precision training are tightly integrated with NVIDIA's hardware.

Beyond traditional GPU vendors, specialized AI accelerators are emerging. Google's TPUs, Amazon's Inferentia chips, and startups like Cerebras and Graphcore all target AI workloads with custom silicon. These chips often outperform general-purpose GPUs on specific tasks, but they lack the flexibility and ecosystem that makes NVIDIA's tensor cores so widely adopted. For now, tensor cores sit at the sweet spot: specialized enough to deliver massive performance gains, general enough to handle diverse AI workloads.

The practical impact of tensor cores shows up in two places: training and inference. Training is where models learn—you feed them data, they adjust internal parameters, and over millions of iterations, they get better at their task. Training large language models or image generators requires days or weeks of compute, even with modern hardware. Every percentage point of speedup translates directly to cost savings and faster iteration cycles.

Inference is where trained models do useful work. Every time you use voice recognition, translate text, or get a product recommendation, that's inference. Unlike training, inference happens millions or billions of times per day. Small efficiency gains compound enormously. If you can shave 10 milliseconds off each inference request, you might save millions of dollars annually in cloud computing costs. Tensor cores make that possible, especially when combined with low-precision formats like FP8 or INT8.
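A back-of-the-envelope version of that claim, with every input hypothetical: one billion requests a day, 10 ms saved per request, a price of $2 per GPU-hour, and the simplifying assumption that saved latency maps one-to-one to saved GPU time. Batching and concurrency usually break that last assumption, so treat the result as a rough order-of-magnitude estimate.

```python
def annual_savings_usd(
    requests_per_day: int,
    ms_saved_per_request: float,
    usd_per_gpu_hour: float,
) -> float:
    """Estimate yearly savings from shaving latency off each request.

    Simplifying assumption: GPU time scales linearly with per-request latency
    (no batching or concurrency effects), so saved latency is saved GPU time."""
    gpu_seconds_saved_per_day = requests_per_day * ms_saved_per_request / 1000.0
    gpu_hours_saved_per_day = gpu_seconds_saved_per_day / 3600.0
    return gpu_hours_saved_per_day * usd_per_gpu_hour * 365


if __name__ == "__main__":
    # Hypothetical inputs: 1e9 requests/day, 10 ms saved, $2 per GPU-hour.
    print(f"${annual_savings_usd(1_000_000_000, 10, 2.0):,.0f} per year")
```

Under those assumptions the saving comes to roughly $2 million a year, consistent with the "millions of dollars" scale described above.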

Every 10 milliseconds saved per inference request can translate to millions in annual savings for companies running billions of AI queries daily.

Consider a practical example: optimizing PyTorch performance for a production model. Without tensor cores, FP32 matrix math runs on CUDA cores. With tensor cores, you enable automatic mixed precision (AMP), which runs most matrix operations in FP16 or bfloat16 while keeping FP32 for stability-critical calculations such as reductions and weight updates. The result is often 2-3x faster training with minimal accuracy loss. For a model that took a week to train, that cuts training to roughly two and a half to three and a half days. For a company running hundreds of training jobs, that's the difference between feasible and impossible.
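Part of what mixed precision guards against is precision loss in long sums. The sketch below emulates FP16 and FP32 rounding in plain Python with the `struct` module's half (`e`) and single (`f`) formats, then adds the same small value 10,000 times into each accumulator. It only illustrates why accumulations stay in FP32; in PyTorch the real machinery is `torch.autocast` plus a gradient scaler, not this code.

```python
import struct
from typing import Callable


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(value: float, steps: int, round_fn: Callable[[float], float]) -> float:
    """Add `value` `steps` times, rounding the running total after every add."""
    total = 0.0
    for _ in range(steps):
        total = round_fn(total + value)
    return total


increment = to_fp16(1e-4)  # the addend itself is stored in FP16
fp16_sum = accumulate(increment, 10_000, to_fp16)
fp32_sum = accumulate(increment, 10_000, to_fp32)

if __name__ == "__main__":
    print(f"FP16 accumulator: {fp16_sum:.4f}")  # stalls well short of 1.0
    print(f"FP32 accumulator: {fp32_sum:.4f}")  # close to the true total of about 1.0
```

The FP16 total stops growing once the increment falls below half the spacing between representable values near the running sum; FP32's much finer spacing keeps the sum on track.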

Inference optimization goes further. Techniques like quantization reduce model weights to INT8 or even INT4, dramatically shrinking memory requirements and speeding up computation. Tensor cores make quantization practical by natively supporting these formats. A model that required four GPUs to run in FP32 might fit on a single GPU in INT8, slashing deployment costs by 75%. That's why companies like OpenAI and Anthropic can offer API access at prices that would've been unthinkable five years ago.
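The 4x factor comes straight from bytes per weight: FP32 uses four bytes, INT8 one. The sketch pairs that arithmetic with the simplest quantization scheme, symmetric per-tensor INT8 with a single scale. Production toolchains typically use finer-grained (per-channel or per-group) scales and calibration data, which this omits, and the 7-billion-parameter model size is hypothetical.

```python
from typing import List, Tuple

BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "INT8": 1}


def weights_gib(num_params: int, fmt: str) -> float:
    """Memory for the weights alone (ignores activations, KV cache, runtime overhead)."""
    return num_params * BYTES_PER_PARAM[fmt] / 2**30


def quantize_int8(weights: List[float]) -> Tuple[float, List[int]]:
    """Symmetric per-tensor INT8 quantization: the largest |w| maps to 127."""
    amax = max(abs(w) for w in weights)
    scale = amax / 127 if amax > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return scale, q


def dequantize_int8(scale: float, q: List[int]) -> List[float]:
    """Recover approximate weights; each is within scale / 2 of the original."""
    return [scale * v for v in q]


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical model size
    for fmt in ("FP32", "FP16", "INT8"):
        print(f"{fmt}: {weights_gib(params, fmt):.1f} GiB of weights")
    scale, q = quantize_int8([0.5, -1.27, 0.003, 1.0])
    print(scale, q)
```

The tiny weight 0.003 collapses to zero under a single shared scale, which is exactly the kind of error finer-grained scaling schemes are designed to reduce.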

The economic implications of tensor cores extend far beyond hardware costs. Before 2017, training state-of-the-art models was prohibitively expensive. OpenAI's GPT-2, released in 2019, cost an estimated $50,000 to train. GPT-3, released a year later and trained on V100 GPUs, reportedly cost millions, and that bill would've been far higher without tensor cores. At a constant hourly price, Volta's 5x training speedup cut the compute cost of a given training run by 80%, making ambitious AI projects financially viable.
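The 80% figure follows from assuming that cost is proportional to GPU-hours at an unchanged hourly price (newer GPUs usually rent for more per hour, so real savings may be smaller). With a speedup s, a run that took time T now takes T/s:

```latex
\[
\text{savings}(s) \;=\; 1 - \frac{C_{\text{new}}}{C_{\text{old}}}
\;=\; 1 - \frac{T/s}{T} \;=\; 1 - \frac{1}{s},
\qquad
\text{savings}(5) \;=\; 1 - \tfrac{1}{5} \;=\; 80\%.
\]
```

By the same formula, the 2-3x mixed-precision speedups mentioned earlier correspond to savings of 50% to roughly 67%.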

Inference costs follow a similar trajectory. As AI applications scale from thousands to billions of users, every watt and every millisecond matters. Cloud providers like DigitalOcean offer H100 GPU instances specifically because tensor cores make AI workloads economical at scale. The alternative—running models on CPU or older GPU architectures—would cost 10-20x more, pricing out most applications.

This cost reduction democratized AI development. Startups can now train custom models for niche applications without raising venture capital. Researchers at smaller universities can experiment with architectures that require massive compute. Developers can fine-tune open-source models for specific use cases. None of that happens without tensor cores bridging the gap between research-grade hardware and commercially viable products.

The ripple effects touch every industry. Healthcare companies use AI to analyze medical images, accelerated by tensor cores. Financial firms run fraud detection models processing millions of transactions per second. Autonomous vehicle companies simulate billions of miles of driving to train perception systems. In each case, tensor cores are the enabler—not the only factor, but the one that made the economics work.

Despite their advantages, tensor cores aren't a universal solution. Their specialization is both strength and weakness. Tensor cores excel at matrix multiplication, but AI workloads include more than just matrix math. Data loading, preprocessing, activation functions, and memory management all happen on CUDA cores or CPUs. If those components bottleneck your pipeline, tensor cores sit idle, wasting potential.

Memory bandwidth is another constraint. Tensor cores can process data faster than memory can deliver it, especially for operations that do little math per byte loaded, such as generating one token at a time with a large language model. Blackwell's cache hierarchy tries to address this with a 65MB L2 cache and optimized memory controllers, but memory remains the limiting factor for many workloads. This is why techniques like sparse graph neural networks matter: by reducing the amount of data that needs moving, you let tensor cores spend more time computing and less time waiting.
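A roofline-style check shows which operations hit this wall. The sketch computes arithmetic intensity (floating-point operations per byte moved) for a matrix multiply and compares it with the machine's balance point. The accelerator figures are hypothetical round numbers, not the specs of any particular GPU, and the traffic model assumes each matrix crosses the memory bus exactly once.

```python
def gemm_intensity(m: int, n: int, k: int, bytes_per_element: float) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n], assuming each matrix is
    read or written exactly once (an idealized minimum amount of traffic)."""
    flops = 2 * m * n * k  # one multiply and one add per term
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


def is_memory_bound(intensity: float, peak_flops: float, bandwidth_bytes_s: float) -> bool:
    """Roofline test: below the machine balance point, memory sets the speed limit."""
    return intensity < peak_flops / bandwidth_bytes_s


# Hypothetical accelerator: 1,000 TFLOPS of FP16 tensor math, 3 TB/s of memory bandwidth.
PEAK, BW = 1000e12, 3e12

big_gemm = gemm_intensity(4096, 4096, 4096, 2)  # large, training-style matmul in FP16
decode = gemm_intensity(4096, 1, 4096, 2)       # matrix-vector product, e.g. one-token decoding

if __name__ == "__main__":
    print(f"4096^3 GEMM: {big_gemm:.0f} FLOP/byte, memory bound: {is_memory_bound(big_gemm, PEAK, BW)}")
    print(f"GEMV:        {decode:.2f} FLOP/byte, memory bound: {is_memory_bound(decode, PEAK, BW)}")
```

The large square multiply performs over 1,300 operations per byte and stays compute bound, while the matrix-vector product performs about one, so even very fast tensor cores spend most of that operation waiting on memory.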

"Blackwell's warp scheduler is optimized for low-precision, high-ILP workloads, enabling efficient per-thread instruction throughput over Hopper's deeper buffering strategy."

— Dissecting the NVIDIA Blackwell Architecture with Microbenchmarks

Lower precision formats introduce their own challenges. FP8 and FP4 are great for inference, but training with them requires careful tuning. Numerical stability becomes a concern: with too little precision or range, small gradients underflow to zero, models fail to converge, and you waste expensive compute time debugging. Mixed precision training frameworks help, but they add complexity. Not every model benefits equally from low precision, and figuring out which precision to use where often involves trial and error.
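Loss scaling is the standard remedy for gradients that underflow in FP16, and it is what gradient scalers such as PyTorch's `GradScaler` automate. A minimal emulation with Python's `struct` half-precision format, assuming a fixed scale of 1024 (real scalers adjust the factor dynamically and skip steps that overflow). Scaling the gradient directly stands in for scaling the loss; in exact arithmetic the two are equivalent because the gradient is linear in the loss.

```python
import struct


def to_fp16(x: float) -> float:
    """Round to the nearest IEEE half-precision value (tiny values underflow to 0.0)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


LOSS_SCALE = 1024.0  # illustrative fixed value; dynamic scalers tune this during training

true_grad = 1e-8                                # a small gradient, in full precision
unscaled_fp16 = to_fp16(true_grad)              # below FP16's smallest subnormal: becomes 0.0
scaled_fp16 = to_fp16(true_grad * LOSS_SCALE)   # lifted into FP16's representable range
recovered = scaled_fp16 / LOSS_SCALE            # unscaled in higher precision before the update

if __name__ == "__main__":
    print(unscaled_fp16)  # 0.0: the update would silently vanish
    print(recovered)      # close to 1e-8
```

Without scaling the gradient disappears entirely; with it, the value survives the trip through FP16 with well under 1% error.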

Vendor lock-in is real. NVIDIA's CUDA ecosystem is deeply entrenched, and tensor cores are optimized for CUDA workflows. If you want to switch to AMD or custom accelerators, you're porting code, re-validating accuracy and performance, and potentially losing speed along the way. Open standards like OpenCL exist, but they lag behind CUDA in performance and adoption. For most organizations, that means staying on NVIDIA's platform, which limits competitive pressure and keeps prices high.

Looking forward, tensor cores are evolving in two directions: broader precision support and tighter integration with emerging AI techniques. FP8 investigations across accelerators show how hardware and algorithms co-evolve—hardware enables lower precision, which drives algorithm research, which demands even lower precision support. It's a feedback loop accelerating AI progress.

We're also seeing specialization within specialization. Arbitrary-precision tensor cores that dynamically adjust precision based on workload requirements represent the next frontier. Imagine a chip that uses FP32 for numerically sensitive operations, FP8 for most matrix math, and FP4 for inference—all managed automatically. That level of optimization could deliver another order-of-magnitude improvement in efficiency.

Software innovation matters as much as hardware. Tools like LLM Compressor squeeze more performance from existing tensor cores by compressing model weights through quantization and structured sparsity. As models grow larger, these techniques become essential. If a 70-billion-parameter model's weights are pruned to the 2:4 pattern that sparse tensor cores accelerate, half of them are zero, and the hardware can skip them for up to twice the matrix-math throughput without changing hardware.
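The sparsity pattern that Ampere-and-later tensor cores accelerate is specific: 2:4 structured sparsity, where at most two of every four consecutive weights are nonzero. A minimal magnitude-pruning sketch that enforces it; real pipelines, including tools like LLM Compressor, choose which weights to drop more carefully and usually calibrate or fine-tune afterward, which this omits.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Apply 2:4 structured sparsity: in every group of four consecutive weights,
    keep the two largest magnitudes and zero the other two."""
    if len(weights) % 4:
        raise ValueError("length must be a multiple of 4 for this sketch")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights survive.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def satisfies_2_of_4(weights: List[float]) -> bool:
    """True if every group of four has at most two nonzero weights."""
    return all(
        sum(1 for w in weights[s:s + 4] if w != 0.0) <= 2
        for s in range(0, len(weights), 4)
    )


if __name__ == "__main__":
    w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
    print(prune_2_of_4(w))
```

Half the weights end up zero in a fixed, hardware-friendly pattern, which is what lets sparse tensor cores skip them. The speedup applies to the matrix multiplies, not necessarily to the model end to end.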

Beyond AI, tensor cores are finding new applications. Scientific computing, drug discovery, climate modeling—any field that relies heavily on matrix operations can benefit. As researchers explore tensor cores for memory-bound kernels, we're learning which problems match their strengths and which don't. That knowledge feeds back into hardware design, creating better specialized accelerators for domains beyond AI.

If you're building AI applications, understanding tensor cores isn't optional anymore. Choosing the right hardware, precision format, and optimization techniques directly impacts your costs and capabilities. A model that trains in three days instead of ten lets you iterate more than three times faster, delivering features your competitors can't match. An inference pipeline that costs 30% less means you can price your product more aggressively or keep prices steady and earn higher margins.

For technical decision-makers, tensor cores represent a bet on continued AI growth. Investing in tensor core-enabled hardware means faster training, cheaper inference, and better ROI on AI projects. But it also means committing to NVIDIA's ecosystem, at least for now. As alternatives mature, that calculation might shift, but today, tensor cores are the standard.

For researchers and students, tensor cores open doors. Experiments that required supercomputer access five years ago now run on a single GPU. Open-source models become playgrounds for innovation. Mixed precision training techniques that maximize tensor core utilization are skills worth learning—they'll differentiate you in a competitive job market.

Understanding tensor cores, precision formats, and optimization techniques isn't just technical knowledge—it's the difference between competitive advantage and falling behind in AI development.

And for everyone else—the people using AI without thinking about the hardware underneath—tensor cores are why AI went from science fiction to everyday tool. They're why your phone understands your voice, why Netflix recommends shows you actually want to watch, and why photo apps can remove backgrounds instantly. None of those applications would exist at scale without the computational efficiency tensor cores enable.

The broader lesson? Specialization works. For decades, computing meant general-purpose CPUs. Then GPUs proved that specialized hardware could accelerate specific workloads by orders of magnitude. Tensor cores take that principle further, showing that even within GPUs, specialization pays off. As AI continues evolving, we'll likely see more specialized accelerators—not fewer. Each one targeting narrower workloads, but delivering performance improvements that justify their existence.

So what comes next? If tensor cores taught us anything, it's that hardware and software evolve together. The next breakthrough might come from new memory architectures, photonic computing, or quantum-inspired algorithms. But it'll almost certainly involve some form of specialized hardware designed specifically for the workload.

For anyone building with AI, staying adaptable matters more than ever. Today's FP8 training pipeline might shift to FP4 next year. Today's single-GPU inference might move to disaggregated architectures where compute and memory live on separate chips. Understanding the fundamentals—why tensor cores work, what trade-offs they make—prepares you to evaluate whatever comes next.

The AI revolution is far from over. Models will keep growing. Applications will keep expanding. And hardware will keep evolving to meet those demands. Tensor cores won't be the final answer, but they're the foundation everything else is building on. Seven years ago, they were a gamble—a bet that AI workloads were different enough to justify specialized silicon. Today, they're essential infrastructure powering a trillion-dollar industry.

In another seven years? We'll probably look back at today's tensor cores the way we now view Volta—impressive for their time, but quaint compared to what came after. That's the nature of exponential progress. What feels revolutionary today becomes table stakes tomorrow. The important thing is recognizing these inflection points when they happen and understanding what they enable. Because the next big thing in AI won't announce itself. It'll just show up in a data sheet, offering 10x better performance for some niche workload nobody thought was important yet. And then, suddenly, everything will change again.

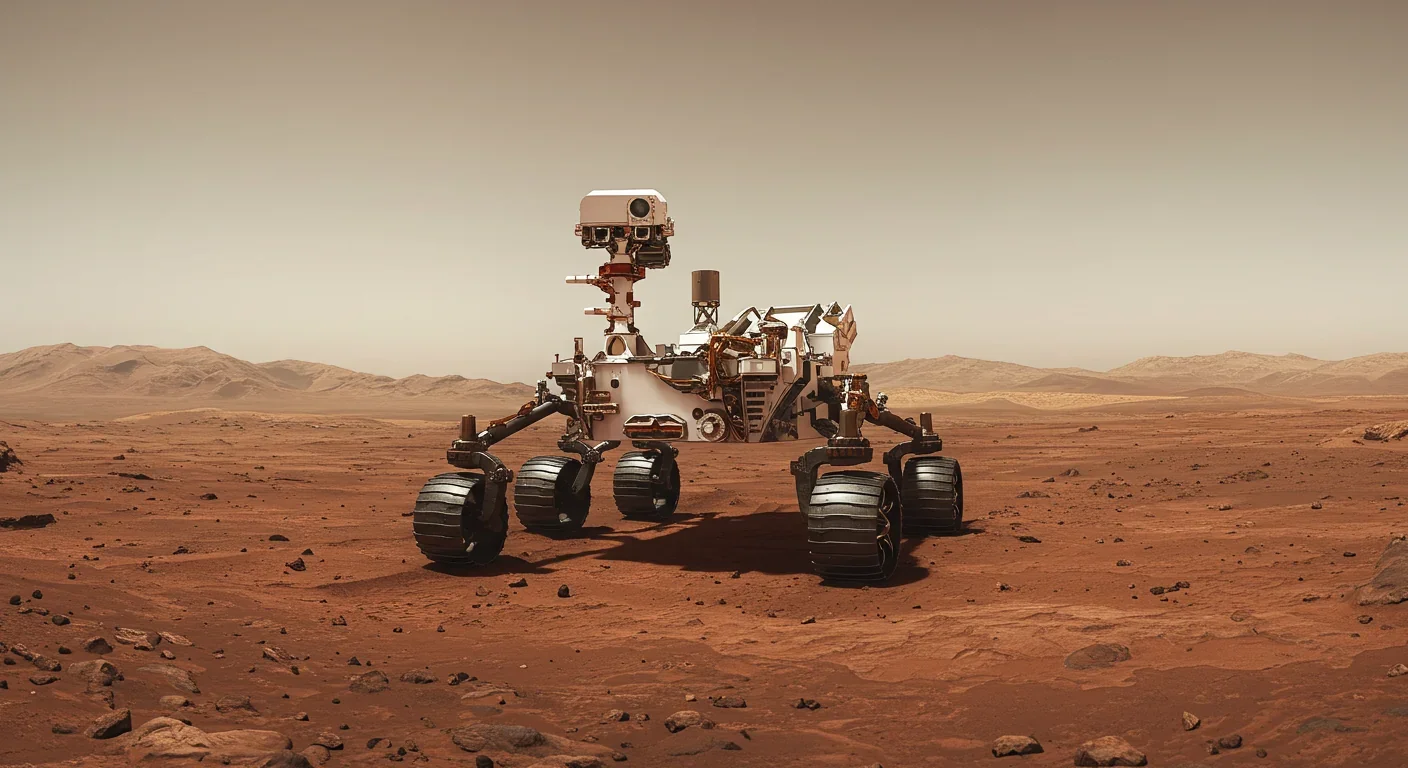
