
TL;DR: Scientists are encoding digital data into synthetic DNA molecules, achieving storage densities a million times greater than SSDs with millennium-scale durability. Recent breakthroughs in write speed and AI-powered retrieval are moving DNA storage from laboratory curiosity toward commercial viability for archival applications.

We generate roughly 400 million terabytes of data every single day. In 2025 alone, humanity is expected to produce an estimated 181 zettabytes of information, a number too large to grasp intuitively. Meanwhile, the storage infrastructure we've built to contain this torrent is starting to crack under the weight of its own physics.

Hard drives typically fail after three to five years. Magnetic tape degrades within decades. Data centers already consume roughly 1.5% of global electricity, and the International Energy Agency projects that their demand will more than double by 2030. We're hurtling toward a collision between exponential data growth and the fundamental limitations of silicon and spinning platters.

But inside the double helix of DNA, scientists have discovered something remarkable: a storage medium capable of holding 215,000 terabytes in a single gram, stable for thousands of years, requiring zero power to maintain. This isn't speculative futurism anymore. In labs from Beijing to Seattle, researchers are encoding everything from Wikipedia to wedding photos into synthetic genetic code, building the foundation for what could become the most transformative shift in data infrastructure since the invention of the hard drive.

The concept sounds almost absurdly simple. DNA uses four chemical bases—adenine, guanine, cytosine, and thymine—to encode genetic information. Digital computers use binary code: ones and zeros. Map binary pairs to DNA bases (00 to A, 01 to C, 10 to G, 11 to T), synthesize those sequences as physical molecules, and you've transformed digital files into biochemistry.
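
The mapping above is simple enough to sketch directly. This minimal Python example assumes only the two-bit table from the text; the function names `encode` and `decode` are hypothetical, and real systems layer sequence constraints and error correction on top, so treat it as an illustration rather than a working storage codec.

```python
# Two-bit mapping from the text: 00 -> A, 01 -> C, 10 -> G, 11 -> T.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn raw bytes into a DNA base string, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Invert encode(): map bases back to bit pairs, then regroup into bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)  # CAGACGGC  ('H' = 01001000, 'i' = 01101001)
assert decode(strand) == b"Hi"
```

Because each base carries exactly two bits, every byte becomes four bases, which is why a megabyte of data needs roughly four million bases before any overhead.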

What makes this translation so powerful is density. A 1-terabyte microSD card measures 15 by 11 by 1 millimeters, which works out to roughly 6 terabytes per cubic centimeter. DNA packs orders of magnitude more information into the same space: encoding schemes demonstrated in the lab have reached an estimated 215 petabytes per gram, and the theoretical ceiling is higher still. The entire contents of the Library of Congress could fit in a speck invisible to the naked eye. Every movie ever made, all of YouTube, every book ever written (the sum total of recorded human culture) could be stored in a container the size of a sugar cube.
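
A back-of-the-envelope upper bound helps put the 215-petabyte figure in context. This sketch assumes an average mass of about 330 grams per mole for a single-stranded nucleotide and two bits per base; it ignores redundancy, addressing sequences, and the many physical copies of each strand that practical systems need, which are factors that help explain why demonstrated densities sit far below the ceiling.

```python
# Rough ceiling on DNA storage density at two bits per nucleotide.
# Assumption: ~330 g/mol average mass per single-stranded nucleotide
# (a common approximation; ignores strand ends, salts, and extra copies).
AVOGADRO = 6.02214076e23        # molecules per mole
NUCLEOTIDE_MOLAR_MASS = 330.0   # grams per mole (approximate)
BITS_PER_NUCLEOTIDE = 2         # four bases -> two bits each


def max_bytes_per_gram() -> float:
    """Bytes storable in one gram if every nucleotide carried 2 bits."""
    nucleotides_per_gram = AVOGADRO / NUCLEOTIDE_MOLAR_MASS
    return nucleotides_per_gram * BITS_PER_NUCLEOTIDE / 8


ceiling = max_bytes_per_gram()
reported = 215e15  # the 215 petabytes per gram quoted in the text
print(f"theoretical ceiling: about {ceiling / 1e18:.0f} exabytes per gram")
print(f"215 PB/g is about {reported / ceiling:.2%} of that ceiling")
```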

DNA storage achieves densities a million times greater than SSDs and remains stable for millennia without power—a combination impossible with any electronic storage medium.

The durability is equally startling. When researchers extracted DNA from 5,000-year-old human remains, the genetic code was still readable. Under proper storage conditions (cool, dark, dry), DNA molecules remain stable for millennia, vastly outlasting any electronic storage medium humans have invented. Hard drives rust. Tape deteriorates. Optical discs delaminate. DNA, protected by simple desiccation, sits inert and intact.

Harvard geneticists George Church and Sriram Kosuri demonstrated the concept in 2012, encoding a 53,400-word book into synthetic DNA. Four years later, University of Washington and Microsoft researchers encoded four image files and projected that the approach could eventually shrink data that would fill a Walmart supercenter into something the size of a pencil eraser. By 2019, scientists had encoded all 16 gigabytes of English Wikipedia text into DNA molecules. The proof-of-concept phase was essentially over.

The past five years have shifted focus from "can we do this?" to "how do we scale it?" That transition is happening faster than most people realize.

In 2018, Microsoft and the University of Washington stored and retrieved 200 megabytes of data, and in 2019 the same collaboration demonstrated a fully automated system that wrote, stored, and read back a short message without human handling. Automation mattered because manual pipetting by graduate students was never going to scale to enterprise storage. By 2021, CATALOG Technologies developed a custom DNA writer capable of encoding data at 1 megabit per second, orders of magnitude faster than previous methods. The company demonstrated massively parallel search of DNA-stored data at the end of 2022, tackling one of the technology's most vexing problems: random access.

Traditional DNA storage required sequencing entire pools of molecules to find specific files. CATALOG's approach allows targeted retrieval, similar to how hard drives access specific sectors without reading everything. That shift moves DNA from an archive that must be read in bulk toward something approaching practical storage.

The real inflection point came in 2024 with two parallel advances. Researchers at Peking University published a Nature paper describing "epi-bits", a method that uses epigenetic modifications (chemical tags added to DNA without altering the base sequence) to encode data. Because the data lives in the tags rather than in the sequence, the approach avoids costly chemical synthesis of new DNA strands for every file. Using an automated platform, they wrote 275,000 bits onto five DNA templates, including two high-definition photos of a tiger and a panda. Error rates dropped to 1.42%, low enough to be handled by standard error-correction codes. The system achieved 350-bit parallelism per writing reaction, slashing both time and cost.
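
To make the epi-bit idea concrete, the toy model below keeps a template's base sequence fixed and stores bits only in which positions carry a chemical tag. Everything here (the `EpiTemplate` class, its site list, and the single-call `write`) is hypothetical and says nothing about the actual chemistry or the Peking University platform; it only illustrates that data can live in modifications rather than in the sequence.

```python
from dataclasses import dataclass, field


@dataclass
class EpiTemplate:
    """Hypothetical epi-bit template: the sequence is never edited.

    Each writable site is either tagged (bit 1) or untagged (bit 0).
    """
    sequence: str
    sites: list[int]                              # 0-based writable positions
    tagged: set[int] = field(default_factory=set)

    def write(self, bits: str) -> None:
        # One call sets every site at once, loosely mirroring a parallel
        # writing reaction; any previous tags are replaced.
        if len(bits) != len(self.sites):
            raise ValueError("need exactly one bit per writable site")
        self.tagged = {pos for pos, bit in zip(self.sites, bits) if bit == "1"}

    def read(self) -> str:
        """Report 1 for each tagged site and 0 for each untagged one."""
        return "".join("1" if pos in self.tagged else "0" for pos in self.sites)


template = EpiTemplate("ACGTACGTACGT", sites=[1, 5, 9])
template.write("101")
print(template.read())     # 101
print(template.sequence)   # ACGTACGTACGT (unchanged by writing)
```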

Meanwhile, Israeli researchers at Technion developed DNAformer, an AI tool that makes DNA data retrieval 3,200 times faster than previous methods. Using transformer-based neural networks (the same architecture behind ChatGPT), DNAformer processes 100 megabytes in 10 minutes versus several days previously, while improving accuracy by up to 40% on noisy real-world datasets. The model identifies correct patterns from flawed sequencing reads and applies multiple safety layers before decoding.
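
DNAformer itself is a trained transformer and is not reproduced here. As a much simpler, non-AI illustration of the underlying task (reconstructing one strand from several flawed reads), the sketch below takes a position-wise majority vote. It assumes substitution errors only; the function name `consensus` is hypothetical.

```python
from collections import Counter


def consensus(reads: list[str]) -> str:
    """Position-wise majority vote over equal-length reads of one strand.

    Assumes substitution errors only: insertions and deletions would shift
    positions and require alignment first, which this sketch does not do.
    """
    if not reads or len({len(r) for r in reads}) != 1:
        raise ValueError("expects one or more reads of identical length")
    # zip(*reads) yields one column of bases per position.
    return "".join(Counter(column).most_common(1)[0][0] for column in zip(*reads))


reads = ["ACGTTGCA", "ACGATGCA", "ACCTTGCA", "ACGTTGGA"]  # each has 0-1 errors
print(consensus(reads))  # ACGTTGCA
```

Majority voting breaks down once reads disagree too often or contain insertions and deletions, which is where learned models such as DNAformer aim to do better.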

These weren't academic curiosities. They solved two critical bottlenecks—write speed and read accuracy—that had kept DNA storage trapped in the laboratory.

Money follows feasibility. In May 2025, Atlas Data Storage emerged from stealth with $155 million in seed funding, one of the largest seed rounds in synthetic biology history. Investors included ARCH Venture Partners, Bezos Expeditions, and In-Q-Tel (the CIA's venture arm). Atlas licensed DNA storage assets from Twist Bioscience, a publicly traded company with a proprietary semiconductor-based DNA synthesis platform that miniaturizes the chemistry necessary for high-throughput production.

The market signal is strong. One industry forecast projects DNA storage growing from $1.5 billion in 2025 to $5.5 billion by 2033, driven by institutional demand for cold archival storage. Think compliance data for financial firms, historical medical records, satellite imagery, climate datasets, and cultural preservation archives. These applications share common traits: massive volume, infrequent access, and requirements for decades-long retention.

Microsoft has been particularly aggressive. The company's DNA Storage Project treats the technology as foundational infrastructure for cloud computing. Luis Ceze, a University of Washington professor collaborating with Microsoft, framed the opportunity bluntly: "We're essentially repurposing DNA to store digital data—pictures, videos, documents—in a manageable way for hundreds or thousands of years."

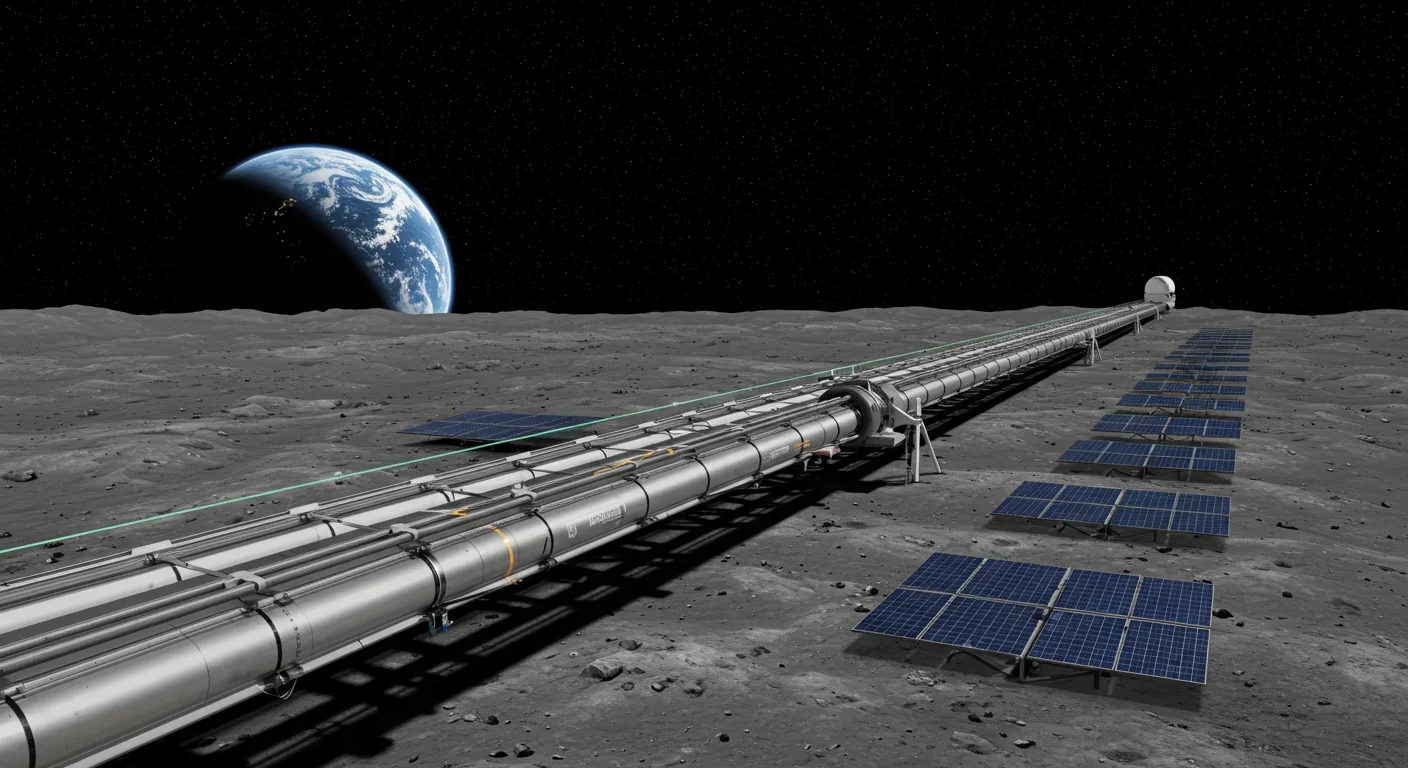

Space agencies are paying attention too. NASA's Artemis program and the European Space Agency are funding research into DNA storage for archiving mission logs and scientific data. The appeal is obvious: radiation-resistant, zero-power storage that can survive extreme temperatures. For lunar bases or Mars missions, where every gram of payload costs thousands of dollars to launch, DNA offers unmatched efficiency.

A consortium including Fraunhofer Institutes and Infineon Technologies developed the BIOSYNTH microchip platform, a CMOS-based system that replaces bulky lab equipment with portable, low-energy devices for on-demand DNA synthesis. This isn't just miniaturization; it's enabling technology for autonomous data encoding in remote or extraterrestrial environments.

Space missions are driving DNA storage innovation—where every gram counts and power is scarce, DNA's radiation resistance and zero-energy retention become mission-critical advantages.

Nothing about DNA storage is cheap yet. In 2013, encoding 1 megabyte cost $12,400, while retrieval ran about $220. Those figures have improved but remain prohibitively expensive for most applications. Current estimates put synthesis costs around $0.10 per DNA base; since a single megabyte (8 million bits) requires roughly 4 million bases at two bits per base, that price implies hundreds of thousands of dollars per megabyte before any error-correction overhead.
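
The per-megabyte arithmetic is worth making explicit. This hypothetical helper multiplies the bases needed for one megabyte (10^6 bytes) by a per-base price; the `overhead` parameter is an assumption you would tune, representing extra bases spent on error correction, addresses, and primers.

```python
def synthesis_cost_per_megabyte(cost_per_base: float,
                                bits_per_base: float = 2.0,
                                overhead: float = 0.0) -> float:
    """Rough synthesis cost, in dollars, to write 10**6 bytes of data.

    overhead is the fractional number of extra bases (0.3 means 30% more)
    spent on error correction, addressing, and primers.
    """
    payload_bases = 8 * 10**6 / bits_per_base  # 8 million bits -> 4 million bases
    return payload_bases * (1 + overhead) * cost_per_base


print(f"${synthesis_cost_per_megabyte(0.10):,.0f}")               # $400,000
print(f"${synthesis_cost_per_megabyte(0.10, overhead=0.3):,.0f}")  # $520,000
```

Running the calculation in reverse is just as revealing: at two bits per base with no overhead, a target of $10 per megabyte corresponds to about $0.0000025 per base.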

Reading data through DNA sequencing has become dramatically more affordable thanks to genomics. Illumina's latest sequencers can read human genomes for around $200, and that technology directly translates to data retrieval. Sequencing costs have dropped faster than Moore's Law for two decades. Writing DNA, however, hasn't followed the same trajectory.

Traditional chemical synthesis, the phosphoramidite method used since the 1980s, proceeds sequentially, adding one base at a time. It's slow, requires toxic solvents like acetonitrile, and scales poorly. Emerging alternatives include enzymatic synthesis, which uses template-independent polymerases such as terminal deoxynucleotidyl transferase (TdT) to build strands. Several startups, including Ansa Biotechnologies and Molecular Assemblies, are commercializing enzymatic platforms that promise ten- to hundred-fold cost reductions and faster throughput.

Peking University's epi-bit method offers another route. By modifying existing DNA templates rather than synthesizing new strands for each file, the technique bypasses de novo synthesis at write time. Sixty volunteers from diverse academic backgrounds successfully used epi-bit writing kits to encode personalized data, suggesting the method could democratize DNA storage beyond specialist labs.

The economic crossover point depends on access patterns. For data written once and rarely read (cold storage), DNA becomes competitive when synthesis costs drop below $0.01 per base. Industry experts project that threshold could be reached between 2028 and 2032 with enzymatic synthesis at scale. For active storage, where files are accessed frequently, DNA remains impractical. Read/write cycles measured in hours or days can't compete with the microsecond-scale latency of SSDs.

Speed is the most obvious barrier. Even with DNAformer's acceleration, decoding 100 megabytes takes 10 minutes, and that excludes the sequencing run that has to happen first. Writing is worse. Synthesizing 1 megabyte of data can take hours to days depending on the method. For comparison, a modern SSD writes 1 megabyte in milliseconds.

Error rates complicate everything. DNA synthesis introduces mistakes: wrong bases inserted, deletions, duplications. Sequencing adds its own noise. Current systems achieve error rates around 1-2% per base, which sounds low until you realize that 1 megabyte requires roughly 4 million bases, so a 1% rate means about 40,000 erroneous bases, each capable of corrupting up to two bits. Robust error-correction codes can fix this, but they add overhead, reducing effective storage density by 20-50%.
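
Error-correction overhead is easy to see with a classic textbook code. The sketch below uses Hamming(7,4), chosen purely for illustration: it spends 3 parity bits per 4 data bits (about a 43% density cost, inside the 20-50% range above) and fixes any single flipped bit. It is not what DNA storage systems use; real pipelines rely on stronger codes such as Reed-Solomon or fountain codes and must also cope with insertions and deletions, which Hamming codes cannot handle.

```python
def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits as 7 bits laid out [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword: list[int]) -> list[int]:
    """Correct at most one flipped bit, then return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # 1-based position of the bad bit, 0 if none
    if error_pos:
        c[error_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
codeword = hamming74_encode(data)
codeword[4] ^= 1                       # simulate one substitution error
assert hamming74_decode(codeword) == data
print(f"density cost: {1 - 4 / 7:.0%}")  # 43%
```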

Durability requires careful handling. DNA molecules degrade through hydrolysis and oxidation. Proper storage (desiccated, sealed, cool) extends lifespan dramatically, but mishandling can destroy data in weeks. Encapsulation in silica beads or synthetic polymers improves robustness but adds cost.

Random access remains primitive. While CATALOG demonstrated targeted retrieval, most DNA storage systems require sequencing large pools to find specific files. Clever encoding schemes (using molecular "barcodes" and address sequences) help, but searching a DNA archive is still orders of magnitude slower than querying a database on a conventional drive.
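
The address-based idea can be sketched in a few lines. This hypothetical model gives every strand a file address, a chunk index, and a payload. In a real archive, selection would happen chemically (for example, through PCR with file-specific primers) across the whole pool at once, whereas the list scan here only mimics the logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strand:
    address: str  # hypothetical file-level "barcode" shared by every chunk of a file
    index: int    # position of this chunk within its file
    payload: str  # data bases carried by this chunk


def retrieve(pool: list[Strand], address: str) -> str:
    """Select one file's strands by address and reassemble them in order.

    Stands in for amplifying only strands whose address matches a
    file-specific primer; strands from other files are never read out.
    """
    hits = sorted((s for s in pool if s.address == address), key=lambda s: s.index)
    return "".join(s.payload for s in hits)


pool = [
    Strand("ACGTAC", 1, "GGTT"),
    Strand("TTGCAA", 0, "AAAA"),  # belongs to a different file
    Strand("ACGTAC", 0, "CCAA"),
]
print(retrieve(pool, "ACGTAC"))  # CCAAGGTT
```

The index field matters because strands float freely in solution: without it, the chunks of a file could not be put back in order after retrieval.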

Standardization is nonexistent. Different research groups use incompatible encoding schemes, error-correction codes, and storage formats. Without industry-wide standards, DNA archives created today might be unreadable by future systems. The DNA Data Storage Alliance, formed in 2020 by Microsoft, Twist, Illumina, and Western Digital, is working toward interoperability, but progress is slow.

"The opportunity to create an entirely new storage medium does not arise often. At Atlas Data Storage, we are pioneering the use of DNA for high-capacity storage."

— Varun Mehta, CEO of Atlas Data Storage

The technology isn't general-purpose yet, but specific niches are emerging.

Archival storage is the obvious fit. Financial institutions must retain transaction records for decades. Healthcare systems warehouse medical images and patient histories. Government agencies preserve tax returns, census data, and legal documents. Libraries and museums digitize cultural artifacts. All of this cold data, written once and rarely accessed, represents a multi-billion-dollar market where density and durability matter more than speed.

Long-term research data is another natural application. Climate science, astronomy, genomics, and particle physics generate petabytes of irreplaceable observational data. NASA is exploring DNA storage precisely because decades-old satellite imagery and Mars rover data remain scientifically valuable.

Biosecurity presents a darker use case. DNA synthesis already requires screening to prevent malicious actors from ordering pathogen genomes. Encoding digital data into DNA adds another layer of concern: biological storage media could theoretically encode malware or classified information that evades conventional security scans. The same attributes that make DNA appealing for preservation also make it attractive for covert data transport.

Blockchain and cryptocurrency communities have shown interest. Immutability is central to distributed ledgers, and DNA's durability aligns with that principle. In 2019, researchers encoded a Bitcoin wallet into DNA, demonstrating feasibility if not practicality.

Artistic and cultural projects are happening now. Musicians have encoded albums into DNA. Photographers store portfolios in vials. These are mostly symbolic gestures, but they signal a broader recognition that digital preservation is fragile and DNA offers something qualitatively different.

DNA storage isn't just a technical curiosity. It represents a conceptual shift in how we think about information and materiality.

For most of computing history, information has been ephemeral, kept alive by power, refresh cycles, or periodic migration to new media. DRAM must be refreshed many times per second. Hard drives and flash memory wear out within years. DNA flips that relationship: information becomes a physical object, stable in its material form, needing no power to persist. This has philosophical implications. Information encoded in DNA resembles printed books more than databases: artifacts rather than processes.

The convergence of biology and computing accelerates in both directions. DNA storage pulls sequencing, synthesis, and molecular manipulation into the digital infrastructure stack. Simultaneously, biological research increasingly relies on computational tools (AI models predicting protein structures, for instance). The boundary between wetware and software is blurring.

Environmental considerations cut both ways. DNA storage eliminates energy consumption for data retention, potentially reducing data center emissions significantly. On the other hand, current synthesis methods use toxic solvents, generate chemical waste, and require specialized facilities. Enzymatic synthesis promises greener chemistry, but scalability remains unproven.

Security takes on new dimensions. Encrypting DNA-stored data is straightforward—encrypt before encoding—but physical security is messier. DNA molecules can be copied with PCR (polymerase chain reaction), effectively cloning data without detection. Imagine a breach where an attacker swabs a storage facility and amplifies samples in any molecular biology lab. Traditional cybersecurity assumes data lives in electronic systems; DNA storage requires rethinking threat models.
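
Encrypt-then-encode is easy to sketch. To stay within Python's standard library, this example uses a one-time pad (XOR with a random key as long as the message), an assumption made for illustration; a production archive would more likely use a vetted authenticated cipher such as AES-GCM from a maintained cryptography library. The point it shows: anyone who copies the strand with PCR recovers only ciphertext.

```python
import secrets

BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}


def to_dna(data: bytes) -> str:
    """Two bits per base, using the mapping described earlier in the article."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """One-time-pad step: XOR each byte with a key byte of equal length."""
    if len(data) != len(key):
        raise ValueError("one-time pad key must match the message length")
    return bytes(a ^ b for a, b in zip(data, key))


message = b"tax return 2024"
key = secrets.token_bytes(len(message))  # kept off the DNA, stored separately
ciphertext = xor_bytes(message, key)     # step 1: encrypt
strand = to_dna(ciphertext)              # step 2: encode; only ciphertext becomes DNA
assert xor_bytes(ciphertext, key) == message  # decryption recovers the original
```

A one-time pad is only secure if each key is truly random, used once, and kept secret, which is exactly why it is impractical at archive scale and appears here only as a self-contained illustration.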

Privacy concerns amplify in unexpected ways. If DNA storage becomes widespread, distinguishing synthetic data-encoding DNA from biological genetic material could become challenging. Imagine forensic confusion when investigators can't tell if DNA at a crime scene contains evidence of identity or just someone's photo library. Regulatory frameworks don't yet account for this ambiguity.

Industry projections suggest DNA storage transitions from research to commercial deployment in phases.

2025-2027: Niche deployment. Early adopters—research institutions, government archives, compliance-heavy industries—begin pilot programs for ultra-cold storage. Costs remain high ($1,000+ per megabyte), limiting use to data with exceptional long-term value. Companies like Atlas, CATALOG, and Biomemory compete to establish platforms and standards.

2027-2030: Cost reduction and automation. Enzymatic synthesis reaches industrial scale, driving costs below $100 per megabyte. Automated write/read systems mature, reducing manual intervention. First-generation DNA storage appliances ship, targeting data centers with multi-petabyte archival needs. Market size reaches $2-3 billion.

2030-2035: Mainstream archival. Synthesis costs drop below $10 per megabyte, crossing the viability threshold for broader cold storage. Hyperscale cloud providers integrate DNA storage tiers for infrequently accessed data. Standards emerge for encoding, error correction, and interoperability. Space missions incorporate DNA storage for mission-critical data. Market exceeds $5 billion.

Post-2035: Ubiquity and divergence. DNA storage becomes default for long-term retention, much like tape today. New applications emerge: consumer-grade time capsules, legal "smart contracts" encoded in DNA, biological barcoding of physical goods. The technology forks into specialized branches—ultra-high-density research systems, portable field devices, consumer kits for personal archiving.

Speed improvements lag behind cost reductions. DNAformer and similar AI tools will continue accelerating read times, but fundamental biochemical kinetics limit how fast DNA can be synthesized or sequenced. DNA storage will never match SSD performance for active workloads. Instead, it occupies a new category: ultra-dense, ultra-durable, ultra-cold archival storage.

Every storage technology shapes what we preserve and what we forget. Clay tablets captured laws and inventories but not songs. Paper records empires but degrades in humidity. Magnetic tape enables video archives but fails after decades. What does DNA storage prioritize?

Longevity becomes feasible at scale. For the first time, we can realistically imagine preserving humanity's digital record across centuries without continuous migration to new media. That sounds utopian until you consider the implications. Who decides what gets encoded into millennium-grade storage? Whose histories, languages, and cultural expressions become permanent versus ephemeral?

Centralization pressures increase. DNA synthesis and sequencing require specialized facilities and expertise. Unlike hard drives, which anyone can buy and use, DNA storage concentrates control among institutions with molecular biology infrastructure. This could reinforce existing power asymmetries in who controls historical narratives.

The cost-permanence ratio inverts established practices. Currently, important data gets multiple backups across geographically distributed systems. DNA storage flips this: exorbitantly expensive to write but incredibly durable once encoded. The economics favor "write once, keep forever" models, which could lock in decisions about cultural preservation with less flexibility to revise or expand.

At the same time, DNA storage democratizes preservation in unexpected ways. Peking University's epi-bit kits let non-specialists encode data, hinting at a future where individuals create personal DNA archives as easily as burning a DVD once was. Imagine encoding your life's photos, videos, and documents into a vial you can pass down through generations, readable centuries later with the biochemical equivalent of a phonograph.

We're standing at an odd intersection. Our civilization produces more data than we can meaningfully store using current technology. Simultaneously, we've discovered that the same molecular machinery responsible for biological inheritance can serve as a digital archive of almost unlimited capacity. The transition from proof-of-concept to deployed infrastructure is happening now, driven by investment, technical breakthroughs, and the brutal physics of exponential data growth.

Whether DNA storage becomes the optical disc of the 2030s (overhyped, underutilized) or the magnetic tape of the digital age (boring, ubiquitous, essential) depends on cost curves, standardization, and whether the promise of millennia-scale durability compels institutions to rethink archival strategies.

One thing seems certain: the double helix, which has carried genetic information for four billion years, is about to start carrying our holiday photos, tax returns, and streaming libraries too. Evolution, meet digital infrastructure.

Lunar mass drivers—electromagnetic catapults that launch cargo from the Moon without fuel—could slash space transportation costs from thousands to under $100 per kilogram. This technology would enable affordable space construction, fuel depots, and deep space missions using lunar materials, potentially operational by the 2040s.

Ancient microorganisms called archaea inhabit your gut and perform unique metabolic functions that bacteria cannot, including methane production that enhances nutrient extraction. These primordial partners may influence longevity and offer new therapeutic targets.

CAES stores excess renewable energy by compressing air in underground caverns, then releases it through turbines during peak demand. New advanced adiabatic systems achieve 70%+ efficiency, making this decades-old technology suddenly competitive for long-duration grid storage.

Human children evolved to be raised by multiple caregivers—grandparents, siblings, and community members—not just two parents. Research shows alloparenting reduces parental burnout, improves child development, and is the biological norm across cultures.

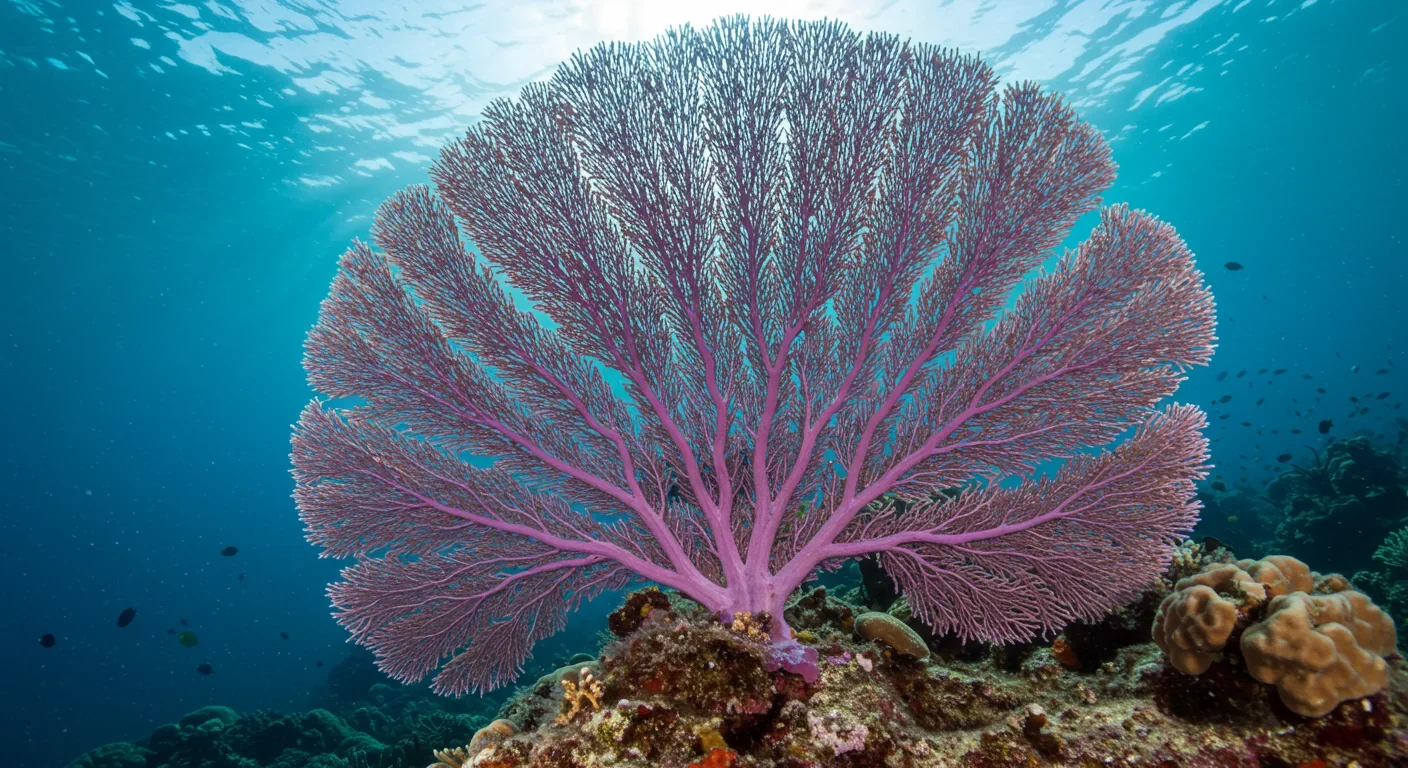

Soft corals have weaponized their symbiotic algae to produce potent chemical defenses, creating compounds with revolutionary pharmaceutical potential while reshaping our understanding of marine ecosystems facing climate change.

Generation Z is the first cohort to come of age amid a polycrisis - interconnected global failures spanning climate, economy, democracy, and health. This cascading reality is fundamentally reshaping how young people think, plan their lives, and organize for change.

Zero-trust security eliminates implicit network trust by requiring continuous verification of every access request. Organizations are rapidly adopting this architecture to address cloud computing, remote work, and sophisticated threats that rendered perimeter defenses obsolete.