The Polycrisis Generation: Youth in Cascading Crises

TL;DR: Social media platforms use algorithmic curation to create digital echo chambers that function like political gerrymandering, manipulating what users see to maximize engagement at the expense of democratic discourse and shared truth.

When you scroll through your social media feed, you're not seeing the digital world as it is—you're seeing a carefully engineered version designed specifically for you. Just as political gerrymandering divides voters into districts to manipulate election outcomes, social media platforms use algorithmic curation to segment users into invisible echo chambers that shape what they see, believe, and ultimately how they vote. This isn't speculation. It's measurable, documented, and happening right now.

Think of your social media feed as a voting district drawn by an algorithm instead of a legislature. During the 2020 U.S. presidential election, Twitter's algorithms created two dominant user clusters—one around @realDonaldTrump and one around @JoeBiden—that together comprised 88 percent of all political discussion on the platform. These weren't naturally forming communities. They were the direct result of Twitter's recommendation system, which distills approximately 500 million daily tweets into a select few "top tweets" that organize how users think about politics.

The mechanics are deceptively simple. Algorithms prioritize content based on engagement metrics—likes, shares, comments, and watch time—rather than accuracy, importance, or democratic value. A 2024 field experiment on X/Twitter demonstrated just how powerful this curation can be: when researchers manipulated feeds to decrease exposure to content expressing antidemocratic attitudes and partisan animosity, users reported more positive feelings toward the opposing party. When exposure increased, negative feelings and emotions like anger and sadness spiked immediately.
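
To make the ranking logic concrete, here is a minimal sketch of engagement-based ordering next to a plain chronological feed. The `Post` fields, the sample posts, and the weights are all hypothetical; no platform publishes its ranking formula in this form, and real systems use far more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    title: str
    minutes_old: int
    likes: int
    shares: int
    comments: int


# Hypothetical weights chosen for illustration only.
WEIGHTS = {"likes": 1.0, "shares": 3.0, "comments": 2.0}


def engagement_score(post: Post) -> float:
    """Weighted sum of interactions; accuracy and importance play no role."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["shares"] * post.shares
            + WEIGHTS["comments"] * post.comments)


def rank_by_engagement(posts):
    """Highest engagement first, regardless of age or accuracy."""
    return sorted(posts, key=engagement_score, reverse=True)


def rank_chronologically(posts):
    """Newest first, ignoring engagement entirely."""
    return sorted(posts, key=lambda p: p.minutes_old)


# Made-up sample posts.
posts = [
    Post("City council budget explainer", 5, 12, 1, 3),
    Post("Outrage clip about the other party", 240, 900, 400, 650),
    Post("Fact-check of viral claim", 30, 40, 10, 8),
]

engagement_order = [p.title for p in rank_by_engagement(posts)]
chronological_order = [p.title for p in rank_chronologically(posts)]
```

In this toy data the four-hour-old outrage clip tops the engagement ranking, while the chronological feed leads with the newest post. Nothing in the scoring function asks whether a post is true.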

Platforms don't just filter content passively. They actively reshape it. Facebook's News Feed algorithm reduces politically cross-cutting content by 5% for conservatives and 8% for liberals, systematically narrowing the information diet for both sides. Google's search algorithm considers 57 distinct data points including device type and physical location to personalize results, meaning two people searching for the same term can receive completely different information.

Political gerrymandering works by packing opposition voters into a few districts while spreading your supporters across many. Algorithmic gerrymandering does something similar but more insidious: it creates personalized reality bubbles where dissenting views are systematically filtered out through the quiet machinery of engagement optimization.
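
The packing tactic is easier to see with numbers. The sketch below uses an invented electorate of 50 voters in five districts of ten, where party A holds only 40 percent of the vote. The district splits are made up for illustration and do not come from any real map.

```python
def seats_won(districts):
    """Count districts where party A has more voters than party B."""
    return sum(1 for a, b in districts if a > b)


# Each tuple is (party A voters, party B voters) in one district of 10.
# Both maps contain the same electorate: 20 A voters and 30 B voters.
even_map = [(4, 6)] * 5
packed_map = [(0, 10), (0, 10), (7, 3), (7, 3), (6, 4)]

total_a = sum(a for a, _ in packed_map)
total_b = sum(b for _, b in packed_map)

seats_even = seats_won(even_map)
seats_packed = seats_won(packed_map)
```

With voters spread evenly, party A wins no districts. Once B's voters are packed into two districts, A wins three of five with the same 20 votes. The electorate never changed, only the lines around it.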

Consider the 2016 election. Facebook introduced a major algorithm change in mid-2015 that pivoted users away from journalism toward content from friends and family. By June 2016, data from CrowdTangle showed that traffic dropped noticeably at CNN, ABC, NBC, CBS, Fox News, The New York Times, and The Washington Post. This wasn't a technical glitch—it was a strategic business decision that suppressed credible journalism during a critical election period.

The parallel becomes clearer with TikTok's recommendation algorithm during the 2024 presidential race. In a rigorous audit involving 323 independent experiments across three states, researchers found that Republican-seeded accounts received 11.8% more party-aligned recommendations compared to Democratic-seeded accounts. These asymmetries persisted even after controlling for video engagement metrics, suggesting the bias was built into the algorithm itself.

Understanding how these digital districts function requires looking at the psychological mechanisms platforms exploit. Algorithms typically promote emotionally provocative or controversial material because it generates the metrics platforms need: clicks, shares, time on site. This creates feedback loops where polarizing narratives get amplified while nuanced perspectives disappear.

Eli Pariser coined the term "filter bubble" around 2010 to describe how people become separated from information that disagrees with their viewpoints, effectively isolating them in cultural or ideological bubbles. What makes this particularly dangerous is that users often don't realize they're in a bubble. The algorithm adapts invisibly, learning from every click, every pause, every scroll.

An agent-based model published in 2024 tested this dynamic systematically. Researchers created 2,500 simulated agents in networks that mimicked real social media environments. When they activated selective exposure algorithms that filtered information to match users' existing beliefs, polarization increased dramatically—especially in networks where people were already connected to like-minded individuals. The synergy between offline ties and online algorithmic filtering created a multilayer reinforcement effect stronger than either factor alone.

"The presence of homophily-based social networks composed of a majority of like-minded individuals produced greater polarisation compared to random networks. This was aggravated in the presence of social media filtering algorithms."

— Applied Network Science, 2024
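
The filtering dynamic can be sketched in a few lines. What follows is not the published model: it swaps in a simple bounded-confidence update on a fully random network, with 200 agents rather than 2,500, and the `tolerance` and `rate` values are assumptions picked for illustration. It shows only the core mechanism, where a feed that hides distant views keeps opinions from converging.

```python
import random
import statistics


def simulate(filtered: bool, n_agents: int = 200, steps: int = 20_000,
             tolerance: float = 0.2, rate: float = 0.3, seed: int = 0) -> float:
    """Return the spread (population std. dev.) of opinions after the run."""
    rng = random.Random(seed)
    # Opinions start uniformly spread between -1 and 1.
    opinions = [rng.uniform(-1.0, 1.0) for _ in range(n_agents)]
    for _ in range(steps):
        # One agent reads a post written by another, randomly chosen agent.
        reader, author = rng.sample(range(n_agents), 2)
        gap = opinions[author] - opinions[reader]
        # Selective exposure: the feed hides posts too far from the reader's view.
        if filtered and abs(gap) > tolerance:
            continue
        # The reader shifts part of the way toward the post they saw.
        opinions[reader] += rate * gap
    return statistics.pstdev(opinions)


spread_open = simulate(filtered=False)
spread_filtered = simulate(filtered=True)
```

In this toy setup the unfiltered population drifts toward a shared middle, while the filtered one stays split into separate clusters, so `spread_filtered` ends up larger than `spread_open`. Adding homophily-based networks, as the study did, would take a graph structure that this sketch leaves out.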

What's particularly concerning is that this manipulation doesn't require changing what people do—only what they see. In the X/Twitter field experiment, users' engagement patterns didn't change when researchers altered their feed composition. They still liked, shared, and commented at the same rates. But their feelings toward opposing political parties shifted significantly based solely on the algorithmic curation of their information environment.

None of this is accidental. It's the inevitable result of how social media platforms make money. Platform business models rely on engagement metrics as revenue engines, creating powerful incentives to maximize time on site and interaction rates. Every second you spend scrolling is another opportunity to serve you an ad. Every piece of content that triggers an emotional response keeps you coming back.

A systematic review of 78 peer-reviewed studies from 2015 to 2025 found that social media algorithms "seldom privilege content according to journalistic significance or professional editorial judgment." Instead, they amplify material designed to stimulate reactions, reshaping what counts as news in digital spaces. Newsrooms have responded by adopting dashboards and audience analytics, shifting gatekeeping power away from human editorial norms toward data-driven logics that prioritize "shareworthiness" over importance.

Content creators learn that nuanced analysis gets ignored while hot takes and provocative claims get amplified. Bad actors exploit the same dynamics to spread disinformation during elections, which has at times contributed to polarization and violence. Extremist groups like ISIS and al-Qaeda have effectively used platforms like X, Telegram, and YouTube to spread propaganda precisely because algorithms reward emotionally provocative content.

The platforms know this. Facebook engineers warned internally in 2015 about the potential impact of algorithm changes on news visibility. Roger McNamee, an early Facebook investor, issued similar warnings in 2016. The changes went ahead anyway because the business model demands it.

The consequences extend far beyond individual filter bubbles. When millions of people inhabit separate information realities, democratic deliberation becomes nearly impossible. How can citizens have productive debates about policy when they can't even agree on basic facts?

The 2016 election provided a clear case study. The algorithm changes that suppressed journalism didn't affect all news sources equally. Research shows the suppression particularly impacted mainstream outlets while allowing fake news and hyper-partisan content to flourish. Sensational falsehoods spread faster than fact-checked reporting.

Facebook's emotional contagion experiment, published in 2014, demonstrated the scale of potential manipulation. The platform altered the content shown to roughly 689,000 users, producing measurable shifts in the emotional tone of what those users went on to post. If an algorithm can change how hundreds of thousands of people feel without their knowledge or consent, what else can it influence?

More recent evidence suggests the impacts are intensifying. Studies of the 2020 and 2024 elections show algorithmically driven interactions creating a "mixed reality" where virtual and real-world dynamics intersect, leading to increased polarization and the erosion of democratic deliberation. Homogeneous retweet communities function as digital districts, enabling concentrated influence and amplified misinformation within demographic-specific clusters.

Perhaps nowhere is algorithmic gerrymandering more dangerous than in how it amplifies extremist content and spreads misinformation. Because algorithms prioritize engagement over accuracy, content that triggers strong emotions—particularly fear, anger, and outrage—gets disproportionate visibility.

Academic Joe Burton's research indicates that algorithmic biases heighten engagement through these negative emotions, inadvertently giving rise to extremist ideologies. TikTok's "For You" page frequently recommends far-right material to users who show even mild interest in related topics. The recommendation engine doesn't evaluate whether content is true or constructive—only whether it will keep users watching.

AI-driven moderation can help—YouTube's machine-learning model introduced in 2023 reduced flagged extremist videos by 30 percent. But this creates a new problem: who decides what counts as extremist? AI moderation can amplify racial bias, flagging tweets in African American English up to twice as often as other speech, according to two computational linguistic studies from 2019.

This isn't just a technical bug. It's evidence that algorithms reproduce and scale the biases of their creators and training data. When platforms use automated systems to moderate billions of pieces of content, these biases affect millions of users, systematically disadvantaging activists and communities of color.

Governments worldwide are starting to recognize algorithmic manipulation as a threat to democracy, though regulatory responses remain fragmented. The European Union's Digital Services Act represents the most comprehensive attempt to date, requiring platforms to provide greater transparency about their algorithmic systems and risk assessments.

In the United States, regulatory efforts have been slower. Various bills have been proposed to address algorithmic transparency, but few have become law. Twitter released its recommendation algorithm code on GitHub in March 2023, an unprecedented transparency move, but the code alone doesn't reveal how the system makes decisions in practice or what data it uses to train its models.

GDPR has provided some leverage by forcing companies to treat people as individuals with rights over their data, requiring opt-out and consent mechanisms. Several tech companies have faced substantial fines for violations, demonstrating that regulation can have teeth. But data protection laws weren't designed specifically for algorithmic curation, leaving significant gaps.

Some researchers argue for more fundamental reforms: requiring platforms to offer chronological feeds as a default, mandating algorithmic audits by independent researchers, or even breaking up the largest platforms. Others suggest that transparency alone isn't enough because even when algorithms are open-sourced, their complexity makes meaningful accountability difficult.

Different countries are taking dramatically different approaches to algorithmic governance, reflecting varying priorities around free speech, privacy, and state control. China's approach centers on state oversight and censorship, with algorithms required to promote "positive energy" and socialist values. The government directly audits recommendation systems and can order content removed.

Europe has pursued a rights-based approach, treating algorithmic fairness and transparency as extensions of existing privacy protections. The Digital Services Act creates a framework for risk assessment, requiring very large online platforms to analyze systemic risks including effects on democratic discourse.

India's approach has been more reactive, with the government issuing takedown orders for specific content and occasionally threatening platform bans. Sometimes platforms deliberately take down activists' posts at the request of governments, blurring the line between content moderation and political censorship.

The United States has relied primarily on market forces and platform self-regulation. This has produced some voluntary transparency initiatives, like Twitter's code release, but leaves fundamental business model incentives unchanged. The result is a global patchwork where the same platform may operate under radically different rules depending on user location.

"Opaque recommenders depress trust, while transparent ones can mitigate skepticism."

— Frontiers in Communication, 2025

Individual action can't solve a systemic problem, but users aren't entirely powerless. The first step is awareness: recognizing that your feed is not a neutral window on the world but a curated selection optimized for engagement, not enlightenment.

Practical strategies include deliberately seeking out sources that challenge your viewpoints. If your feed uniformly confirms what you already believe, that's a red flag. Follow journalists and experts who cover issues from multiple angles. Use platforms' chronological feed options when available—they're less algorithmically manipulated.

Consider using RSS feeds or newsletter subscriptions to control your information diet directly. Browser extensions exist that can modify social media feeds, reducing algorithmic interference. Some users maintain separate accounts with different behavior patterns to see how the algorithm responds—essentially auditing the filter bubble in real time.
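
As a rough illustration of what reading by RSS looks like, the sketch below parses a small feed and lists stories newest first, using only Python's standard library. The feed text and story titles are invented. A real reader would fetch the XML from a publisher's feed URL, which is left out here.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Invented sample feed; a real one would be downloaded from a publisher.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0"><channel><title>Example feed</title>
<item><title>Older story</title><pubDate>Mon, 06 Jan 2025 08:00:00 GMT</pubDate></item>
<item><title>Newest story</title><pubDate>Wed, 08 Jan 2025 09:30:00 GMT</pubDate></item>
<item><title>Middle story</title><pubDate>Tue, 07 Jan 2025 12:15:00 GMT</pubDate></item>
</channel></rss>"""


def chronological_titles(rss_text: str) -> list:
    """Return item titles ordered newest first, using publication time only."""
    root = ET.fromstring(rss_text)
    items = []
    for item in root.iter("item"):
        # RSS 2.0 dates use the RFC 822 style that email.utils can parse.
        published = parsedate_to_datetime(item.findtext("pubDate"))
        items.append((published, item.findtext("title")))
    items.sort(reverse=True)
    return [title for _, title in items]


titles = chronological_titles(SAMPLE_RSS)
```

The only ordering rule here is publication time, and you choose which feeds go in. No engagement signal enters the picture.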

A/B testing is how platforms systematically discover what works best for each user segment, testing small variations to maximize engagement. Understanding this helps you recognize when you're being experimented on. If different friends see different versions of the same post, there's a good chance you're in someone's test group.
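
One common way to split users into test groups is to hash a user ID together with an experiment name. The sketch below shows that general technique. It is an assumption about how such systems are often built, not a description of any specific platform, and the function name, experiment name, and user IDs are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "treatment")) -> str:
    """Deterministically map a user to one variant of an experiment."""
    # Hashing the experiment name with the user ID gives a stable assignment
    # that is roughly uniform and independent across experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


# Hypothetical user IDs and experiment name.
users = [f"user-{i}" for i in range(1000)]
assignments = {u: assign_variant(u, "headline-test") for u in users}
counts = {
    variant: sum(1 for a in assignments.values() if a == variant)
    for variant in ("control", "treatment")
}
```

The assignment is stable, so the same user sees the same version every time, and nothing on screen signals that a test is running. That is why comparing notes with friends is one of the few ways to spot one.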

Question your emotional responses. If something makes you immediately angry or anxious, pause before sharing. That's often exactly the reaction the algorithm wants because it drives engagement. Check whether claims are supported by credible sources before amplifying them.

Support platforms and services that align their business models with user wellbeing rather than pure engagement. Some social networks are experimenting with subscription models that don't rely on advertising, reducing the incentive to manipulate feeds. Others use algorithmic ranking that prioritizes quality signals over engagement metrics.

Finally, advocate for regulation. Individual behavior changes matter, but systemic problems require systemic solutions. Support legislation that mandates algorithmic transparency, requires platforms to offer chronological feeds, and funds independent research into how these systems affect democracy.

Looking ahead, the trajectory depends on choices we make now. One path leads to increasingly sophisticated algorithmic manipulation, where AI systems predict and shape user behavior with uncanny precision. We're already seeing early versions: recommendation engines that don't just respond to preferences but actively cultivate them.

The other path involves reimagining how digital platforms operate. What if algorithms optimized for informed citizenship rather than engagement? What if platforms faced legal liability for amplifying misinformation? What if users owned their data and could audit how algorithms use it?

Some promising developments suggest this isn't pure fantasy. The Oversight Board reviewing Meta's content decisions represents a novel experiment in independent platform governance. Open-source social networks like Mastodon offer alternatives to corporate algorithmic control.

What seems clear is that the current model—extractive platforms optimizing for engagement at democracy's expense—is unsustainable. Either we'll develop new governance structures that align platform incentives with public good, or we'll continue fragmenting into incompatible information realities until democratic institutions can no longer function.

Algorithmic gerrymandering represents one of the defining challenges of our era. Just as political gerrymandering undermines representative democracy by manipulating electoral maps, algorithmic curation undermines deliberative democracy by manipulating information maps.

The filter bubbles created by recommendation algorithms aren't just making us more polarized—they're making us less capable of recognizing we're polarized. When everyone inhabits their own personalized reality, the very concept of shared truth becomes unstable.

But this isn't inevitable. We've regulated powerful information intermediaries before—think of telecommunications, broadcasting, or publishing. The current moment demands similar collective action: laws that require transparency, standards that prioritize accuracy over engagement, and business models that serve users rather than exploit them.

The digital public square doesn't have to be a collection of engineered echo chambers. It could be a genuine commons where diverse perspectives encounter each other, where algorithms surface the most important information rather than the most inflammatory.

Getting there requires recognizing algorithmic curation for what it is: not a neutral technology, but a form of power that shapes how hundreds of millions of people understand reality. Like any form of power, it needs accountability, oversight, and constant vigilance to ensure it serves the many rather than the few.

Your feed is not reality. It's a map drawn by an algorithm with its own agenda. The first step toward reclaiming democratic discourse is seeing the map for what it is—and demanding better cartographers.

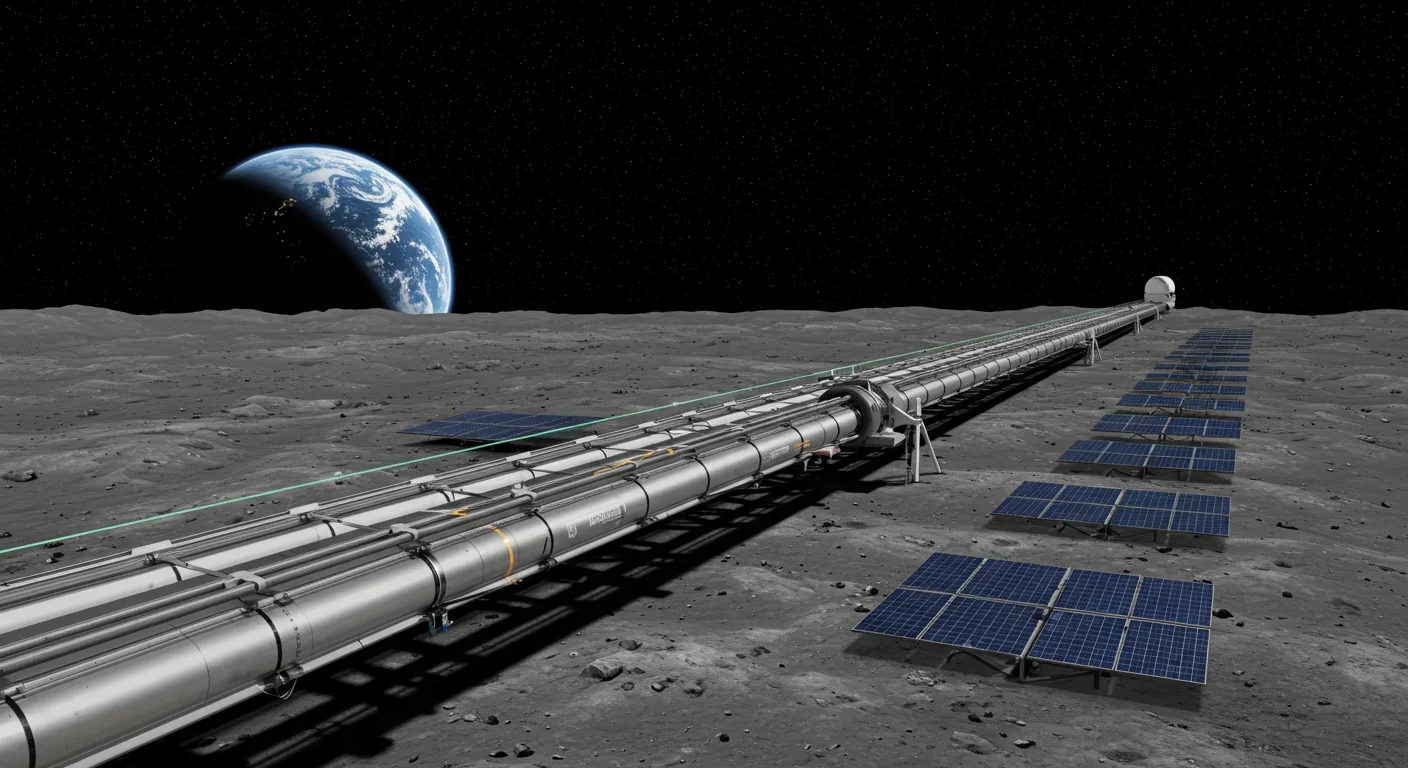

Lunar mass drivers—electromagnetic catapults that launch cargo from the Moon without fuel—could slash space transportation costs from thousands to under $100 per kilogram. This technology would enable affordable space construction, fuel depots, and deep space missions using lunar materials, potentially operational by the 2040s.

Ancient microorganisms called archaea inhabit your gut and perform unique metabolic functions that bacteria cannot, including methane production that enhances nutrient extraction. These primordial partners may influence longevity and offer new therapeutic targets.

CAES stores excess renewable energy by compressing air in underground caverns, then releases it through turbines during peak demand. New advanced adiabatic systems achieve 70%+ efficiency, making this decades-old technology suddenly competitive for long-duration grid storage.

Human children evolved to be raised by multiple caregivers—grandparents, siblings, and community members—not just two parents. Research shows alloparenting reduces parental burnout, improves child development, and is the biological norm across cultures.

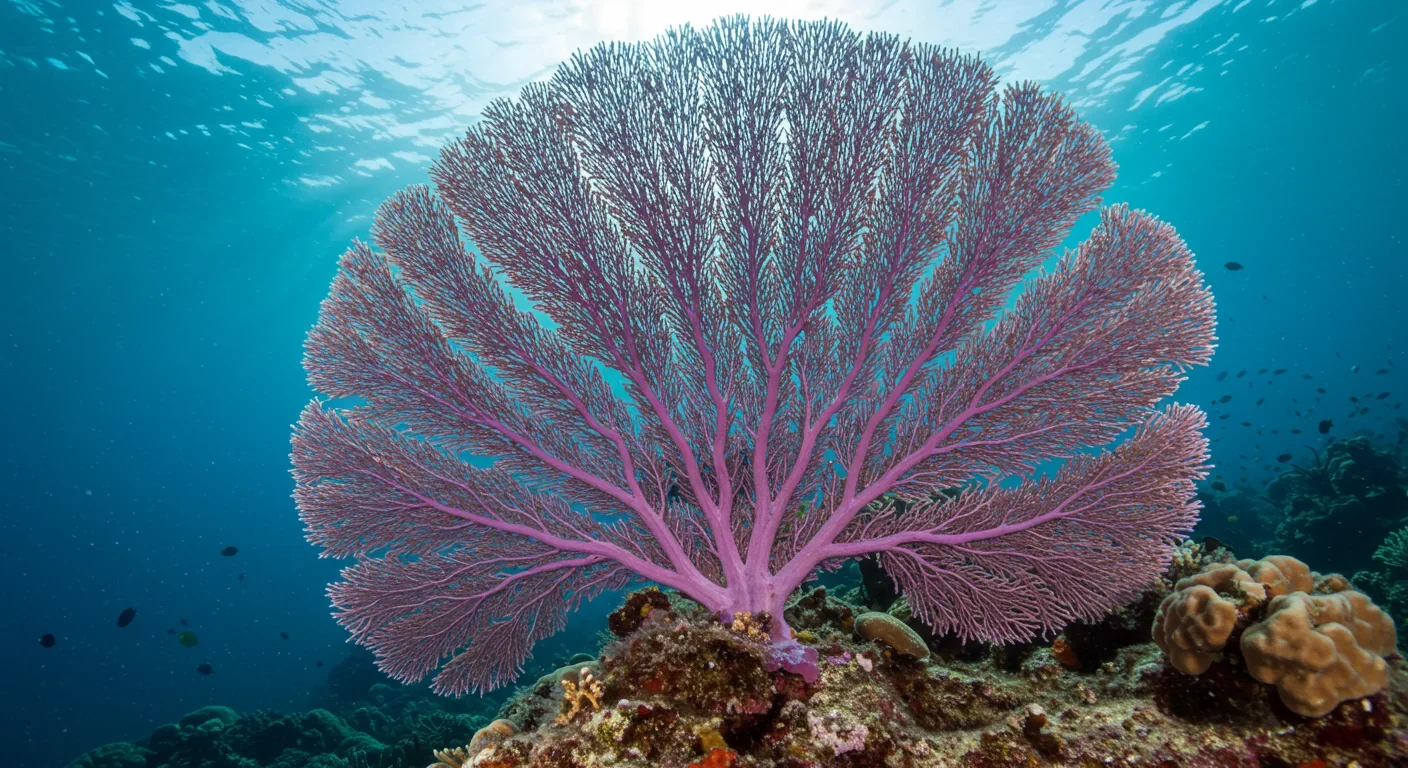

Soft corals have weaponized their symbiotic algae to produce potent chemical defenses, creating compounds with revolutionary pharmaceutical potential while reshaping our understanding of marine ecosystems facing climate change.

Generation Z is the first cohort to come of age amid a polycrisis - interconnected global failures spanning climate, economy, democracy, and health. This cascading reality is fundamentally reshaping how young people think, plan their lives, and organize for change.

Zero-trust security eliminates implicit network trust by requiring continuous verification of every access request. Organizations are rapidly adopting this architecture to address cloud computing, remote work, and sophisticated threats that rendered perimeter defenses obsolete.