The Renting Trap: Wealth Extraction from Working Families

TL;DR: Social media algorithms aren't just showing you content—they're reshaping your beliefs. New research reveals how recommendation systems push users toward extremism through engagement-driven feedback loops, exploiting psychological biases while gradually radicalizing worldviews.

You open TikTok to watch a quick video about home workouts. Thirty minutes later, you're deep into conspiracy theories about government surveillance. Sound familiar? Your apps are designed to do this.

Recommendation algorithms aren't just showing you what you like. They're gradually reshaping what you believe. New research reveals these systems can push ordinary users toward increasingly extreme viewpoints without them noticing.

Every scroll, every click sends signals. Platforms track these behaviors obsessively because their business model depends on keeping your eyes glued to the screen. The longer you watch, the more ads they serve, the more money they make.

Studies show that toxic videos get 2.3% more interactions than neutral content. Partisan posts receive roughly double the engagement of balanced perspectives. When researchers analyzed over 51,000 political TikTok videos during the 2024 election, the algorithm systematically amplified the most inflammatory content. Not because anyone programmed it to spread extremism, but because rage keeps people watching.

Algorithms don't care about truth or your mental health. They care about engagement metrics. If showing you increasingly extreme content keeps you scrolling, that's what you'll see.

This creates a "feedback loop." You watch one video about election fraud. The algorithm serves you another. Then another. Within days, your feed looks nothing like your friend's feed. You've entered an "echo chamber," where every piece of content reinforces the last.
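To see how quickly a loop like this can narrow a feed, here is a toy simulation in Python. It is a sketch under stated assumptions, not any platform's actual system: the topic names, the engagement rates, and the `boost` parameter are all invented for illustration, and the model simply boosts each topic in proportion to the engagement it earned in the previous round.

```python
def topic_shares(weights):
    """Convert raw topic weights into fractions of the feed."""
    total = sum(weights.values())
    return {topic: w / total for topic, w in weights.items()}


def simulate_feed(initial_weights, engagement_rate, rounds, boost=0.5):
    """Return the feed's topic shares before round 1 and after every round.

    Hypothetical model: each round, a topic's weight is multiplied by
    (1 + boost * engagement_rate[topic]), so engaging topics compound.
    """
    weights = dict(initial_weights)
    history = [topic_shares(weights)]
    for _ in range(rounds):
        for topic in weights:
            weights[topic] *= 1 + boost * engagement_rate[topic]
        history.append(topic_shares(weights))
    return history


# Assumed numbers: the feed starts evenly split across three topics,
# and one topic holds attention far better than the other two.
start = {"election_fraud": 1.0, "cooking": 1.0, "sports": 1.0}
rates = {"election_fraud": 0.9, "cooking": 0.3, "sports": 0.3}

history = simulate_feed(start, rates, rounds=10)
for round_number, shares in enumerate(history):
    print(round_number, round(shares["election_fraud"], 3))
```

In this toy run the most engaging topic starts at a third of the feed and ends up as the large majority after ten rounds, even though nothing in the code mentions extremism. The narrowing comes entirely from rewarding whatever held attention last time.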

Why does this work so well? Because these systems exploit fundamental quirks in how our brains process information. We're wired with confirmation bias, the tendency to seek out information that confirms what we already think. Social media algorithms supercharge this.

When you see content that aligns with your beliefs, your brain releases dopamine. The algorithm learns this pattern and keeps feeding you that validation hit. You're mainlining content designed to make you feel certain, righteous, and convinced that everyone who disagrees is stupid or malicious.

A recent field experiment on X demonstrated this with disturbing clarity. Researchers manipulated feeds to show more posts containing antidemocratic attitudes and partisan animosity. After just one week, participants showed measurably increased hostility toward the opposing party, their warmth dropping by 2.48 degrees on a 100-point scale.

The opposite worked too. When researchers downranked inflammatory content, users became more tolerant, their warmth increasing by 2.11 degrees. One week of algorithmic intervention produced a shift that normally takes about three years of gradual polarization to accumulate. Your feed is actively shaping your political emotions, and you probably don't notice.

The process follows predictable stages. First, you get curious. The algorithm shows beginner-level content. Then it gradually introduces more extreme perspectives, each slightly more radical than the last. This "radicalization pipeline" has been documented across YouTube, Facebook, Reddit, and TikTok.

Young men searching for fitness content get recommended videos about masculinity, then men's rights, then how feminism is destroying civilization. The progression feels natural because each step is small.

The mechanics aren't theoretical. Take the "alt-right pipeline" on YouTube, where researchers tracked how viewers moved from mainstream conservative content to white nationalist channels through algorithmic recommendations. The journey started with videos about free speech, moved through anti-feminist content, and ended at explicitly racist channels.

During the 2024 election, TikTok's algorithm showed stark patterns. Immigration content with toxic language received 3.5% higher engagement. After Trump's conviction, videos with severe toxicity saw immediate spikes. The algorithm detected what was getting attention and flooded feeds with more.

Facebook's internal documents revealed its algorithm amplified divisive content at alarming rates. One study found users have one friend with opposing views for every four who share their ideology. But the algorithm reduces exposure to cross-cutting content by 5% for conservatives and 8% for liberals, systematically narrowing perspectives.
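Those figures are easier to picture with a little arithmetic. The sketch below rests on two assumptions that the numbers above do not settle: that "one opposing friend for every four like-minded ones" means about one in five ideological friends disagrees with you, and that the 5% and 8% reductions are relative cuts to that baseline. The function name is made up for illustration.

```python
def cross_cutting_share(like_minded_per_opposing, relative_reduction):
    """Return (baseline, after_ranking) shares of cross-cutting exposure.

    Assumption: exposure before ranking mirrors the friend ratio, and the
    algorithm's reduction is relative to that baseline.
    """
    baseline = 1 / (1 + like_minded_per_opposing)
    after_ranking = baseline * (1 - relative_reduction)
    return baseline, after_ranking


conservative = cross_cutting_share(4, 0.05)
liberal = cross_cutting_share(4, 0.08)

print("conservatives:", [round(x, 3) for x in conservative])
print("liberals:", [round(x, 3) for x in liberal])
```

Under these assumptions the baseline is 20% cross-cutting content, trimmed to about 19% for conservatives and about 18.4% for liberals. The cut per ranking pass is small, which is part of why it is hard to notice.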

Why don't platforms simply fix this? Because the radicalization isn't a bug. It emerges from how these companies make money. Every major platform runs on advertising revenue tied to engagement time. The more you scroll, the more money they make.

Balanced content doesn't generate massive engagement. But content claiming "they're trying to destroy everything you care about"? That gets shared. Platform design choices prioritize watch time over user wellbeing. Companies that optimize for engagement grow faster and dominate the market.

The challenge is structural. Even well-intentioned executives struggle because they're competing against platforms without such constraints. If Facebook reduces inflammatory content and Instagram doesn't, users might migrate. Facebook's own studies documented that its algorithm amplified polarizing content because it generated reactions. When engineers proposed changes, executives worried about declining engagement.

TikTok's algorithm is unusually opaque. The company provides limited data to researchers, making it difficult to audit what it does. We know it amplifies engaging content, and we know extreme content tends to be engaging, but exact mechanisms remain mysterious.

How do you know if you're being radicalized? It's harder to spot than you'd think because it feels like natural discovery. But there are telltale signs.

Check your content diet. If most of what you see confirms your beliefs and makes you angry at the same group, you're probably in an echo chamber. When your feed presents a simple narrative where you're right and they're wrong, that's a red flag.

Notice your emotions. Are you consuming content that makes you anxious or self-righteous? That intensity signals the algorithm is serving engagement bait rather than balanced information.

Examine sources. If you're getting information from social media creators rather than established organizations, you're vulnerable. The algorithm preferentially promotes emotionally charged content over factually accurate reporting.

Test your perspective. Can you explain the other side's position in a way they'd recognize? If your mental model of people who disagree is that they're evil or stupid, you've been fed a caricature. Real humans rarely fit neat ideological boxes.

Watch for escalation. If you started watching content about healthy eating and now believe doctors are conspiring to keep people sick, you've gone down a radicalization pipeline.

Notice isolation. Are you convinced that mainstream sources all lie and only your preferred creators tell the truth? That's classic radicalization. Healthy skepticism questions everything, including alternative sources.

Once you recognize you're in an algorithmic bubble, what do you do? You have more control than you think, but it requires conscious effort.

Start by diversifying sources. Follow people you disagree with, not to argue but to understand. The algorithm won't naturally show opposing viewpoints, so actively seek them out. This doesn't mean accepting bad arguments, but exposing yourself to legitimate differences of opinion.

Use the "not interested" button liberally. When you notice increasingly extreme content, tell the algorithm to stop. This won't completely solve the problem but can disrupt the pipeline.

Set time limits. The longer you're on these platforms, the more opportunity algorithms have to shape your thinking. Decide in advance how much time you'll spend daily and stick to it.

Seek primary sources. If you see a claim about what someone said, watch the original video or read the original statement. The algorithm amplifies outrage-inducing interpretations, which are often misleading or false.

Take regular breaks from platforms entirely. This gives your brain time to recalibrate. When you're immersed in algorithmically curated content constantly, it's hard to notice how skewed your information diet has become.

Use browser extensions designed to counteract algorithmic manipulation. Researchers have created tools that modify feeds to show more diverse perspectives. These aren't perfect but can help disrupt filter bubbles.

Read long-form content regularly. Books and in-depth journalism force your brain to engage with complex arguments that don't fit into 60-second videos. This builds cognitive resistance to oversimplified narratives.

Some platforms have attempted reforms, though results are mixed. Facebook redesigned its Trending page to show multiple news sources per headline instead of a single source. YouTube implemented changes to reduce recommendations of conspiracy theories, though external researchers note that the underlying tension between limiting harmful recommendations and maximizing engagement remains.

Regulatory efforts are picking up. The European Union's Digital Services Act requires large platforms to assess and mitigate systemic risks, including algorithmic amplification of harmful content. In the United States, proposals range from requiring transparency to holding platforms liable for content their algorithms actively promote.

Some researchers advocate for "middleware" solutions where third-party developers create alternative algorithms. Users would choose which algorithm curates their feed rather than being locked into the company's engagement-maximizing system. This could allow algorithms optimized for information quality or diverse perspectives.
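A rough sketch of what the middleware idea might look like in code: the platform supplies the posts, and the user picks which ranking function orders them. Everything here is hypothetical, including the `Post` fields, the two example rankers, and the scores, since no real platform API is being described.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Post:
    post_id: str
    predicted_engagement: float  # hypothetical score: how likely you are to react
    source_quality: float        # hypothetical score from a third-party rater


Ranker = Callable[[Post], float]

# Each entry stands for an algorithm a third party could offer.
RANKERS: dict[str, Ranker] = {
    "engagement": lambda post: post.predicted_engagement,
    "quality": lambda post: post.source_quality,
}


def curate(posts, ranker_name):
    """Order the same posts using whichever ranker the user selected."""
    return sorted(posts, key=RANKERS[ranker_name], reverse=True)


posts = [
    Post("outrage-clip", predicted_engagement=0.9, source_quality=0.2),
    Post("explainer", predicted_engagement=0.4, source_quality=0.9),
    Post("local-news", predicted_engagement=0.5, source_quality=0.7),
]

engagement_feed = [p.post_id for p in curate(posts, "engagement")]
quality_feed = [p.post_id for p in curate(posts, "quality")]
print(engagement_feed)
print(quality_feed)
```

The posts are identical in both cases. Only the ordering rule changes, which is the point of the proposal: the choice of what to optimize for moves from the company to the user.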

The long-term solution isn't avoiding technology or hoping platforms fix themselves. It's building collective digital literacy so people can navigate these systems without being manipulated.

Parents should talk to kids about how algorithms work and why feeds might not represent reality. This needs to happen early, before children have internalized the algorithmic worldview. Young people are particularly vulnerable because they lack the experience to recognize manipulation.

Schools should teach media literacy as a core subject. Students need to understand how to evaluate sources, recognize manipulation, identify bias, and think critically about information regardless of source.

We need better tools for tracking our own digital consumption. Imagine a system showing exactly what percentage of your content comes from which ideological perspectives, how your feed has changed over time, or which topics trigger your engagement. This transparency could help users notice when they're being led toward extremism.
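As a minimal sketch of such a tracker, assume you could export a watch log in which each item carries a week number and a perspective label. Both the log format and the labels are hypothetical, and labeling content reliably is the hard part this example skips.

```python
from collections import Counter, defaultdict


def perspective_shares(watch_log):
    """Map each week to {label: fraction of that week's views}."""
    counts = defaultdict(Counter)
    for week, label in watch_log:
        counts[week][label] += 1
    return {
        week: {label: n / sum(c.values()) for label, n in c.items()}
        for week, c in sorted(counts.items())
    }


def dominant_share_trend(shares_by_week):
    """Largest single-perspective share per week, in week order."""
    return [max(shares.values()) for shares in shares_by_week.values()]


# Made-up log: a varied first week, a much narrower second week.
watch_log = [
    (1, "left"), (1, "right"), (1, "center"), (1, "nonpolitical"),
    (2, "right"), (2, "right"), (2, "right"), (2, "center"),
]

shares = perspective_shares(watch_log)
trend = dominant_share_trend(shares)
print(shares)
print(trend)
```

A rising dominant share, week over week, is the kind of signal a dashboard like this could surface: evidence that the feed is narrowing, visible before the narrowing feels normal.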

Most people scrolling right now think they're in control. They believe they're choosing what to watch, deciding what to believe, forming their own opinions. And technically they are, but those choices are being shaped by invisible systems optimized for engagement, not truth.

You're not powerless. Every choice about what to watch, who to follow, and how much time to spend shapes what the algorithm shows next. Understanding that you're in a conversation with an AI system designed to keep you engaged changes everything.

Next time you open your app, before scrolling, ask yourself: Am I choosing what I want to see, or is the algorithm choosing for me? Are my beliefs becoming more extreme or more nuanced? Am I angrier than six months ago, and if so, why?

These aren't easy questions, and answers might be uncomfortable. But they're necessary. Because right now, every day, these systems are gradually reshaping millions of minds. The only question is whether we'll notice before it's too late.

Your feed isn't just entertainment or information. It's an influence engine running 24/7. The choice isn't whether to engage but how to engage without being consumed. Start paying attention to what you're paying attention to. Your beliefs, your relationships, and your grip on reality might depend on it.

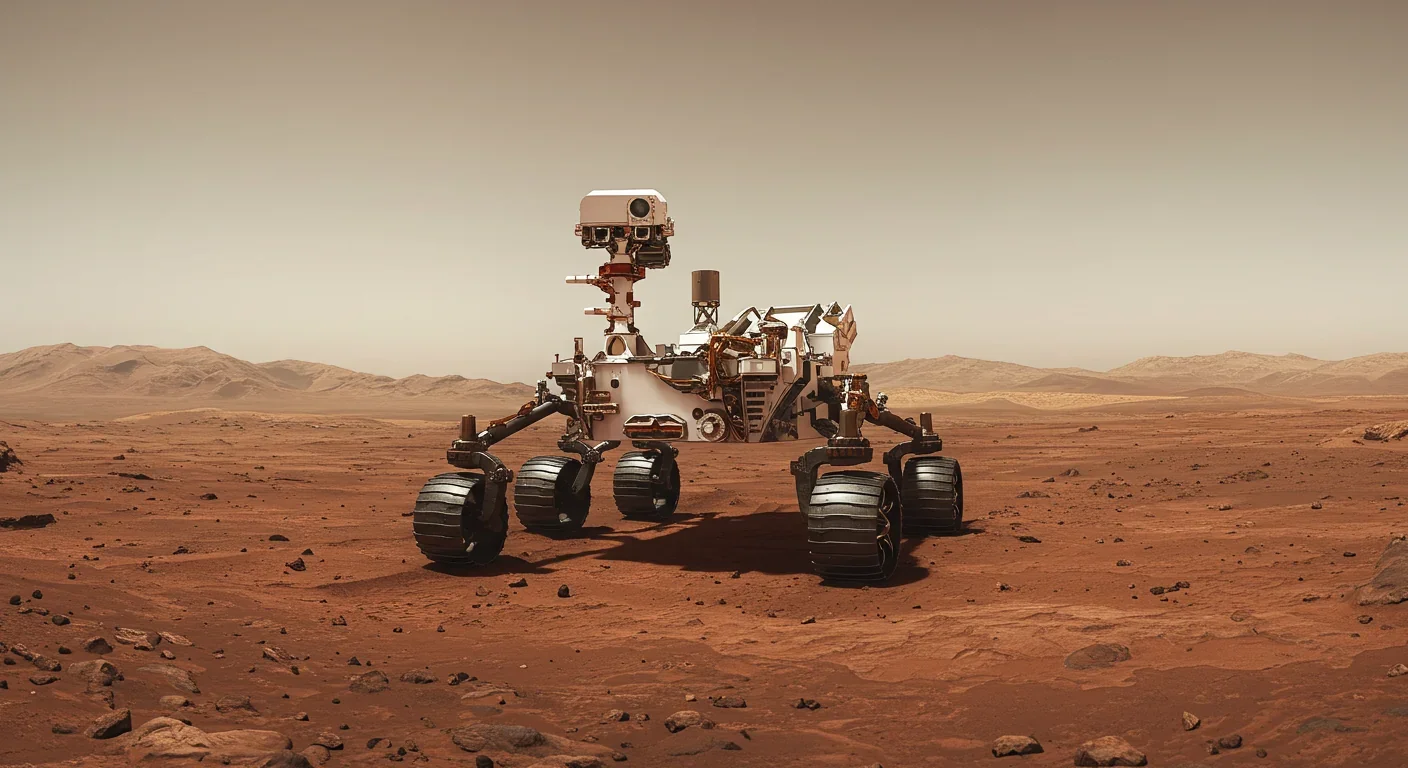

Curiosity rover detects mysterious methane spikes on Mars that vanish within hours, defying atmospheric models. Scientists debate whether the source is hidden microbial life or geological processes, while new research reveals UV-activated dust rapidly destroys the gas.

CMA is a selective cellular cleanup system that targets damaged proteins for degradation. As we age, CMA declines—leading to toxic protein accumulation and neurodegeneration. Scientists are developing therapies to restore CMA function and potentially prevent brain diseases.

Intercropping boosts farm yields by 20-50% by growing multiple crops together, using complementary resource use, nitrogen fixation, and pest suppression to build resilience against climate shocks while reducing costs.

The Baader-Meinhof phenomenon explains why newly learned information suddenly seems everywhere. This frequency illusion results from selective attention and confirmation bias—adaptive evolutionary mechanisms now amplified by social media algorithms.

Plants and soil microbes form powerful partnerships that can clean contaminated soil at a fraction of traditional costs. These phytoremediation networks use biological processes to extract, degrade, or stabilize toxic pollutants, offering a sustainable alternative to excavation for brownfields and agricultural land.

Renters pay mortgage-equivalent amounts but build zero wealth, creating a 40x wealth gap with homeowners. Institutional investors have transformed housing into a wealth extraction mechanism where working families transfer $720,000+ over 30 years while property owners accumulate equity and generational wealth.

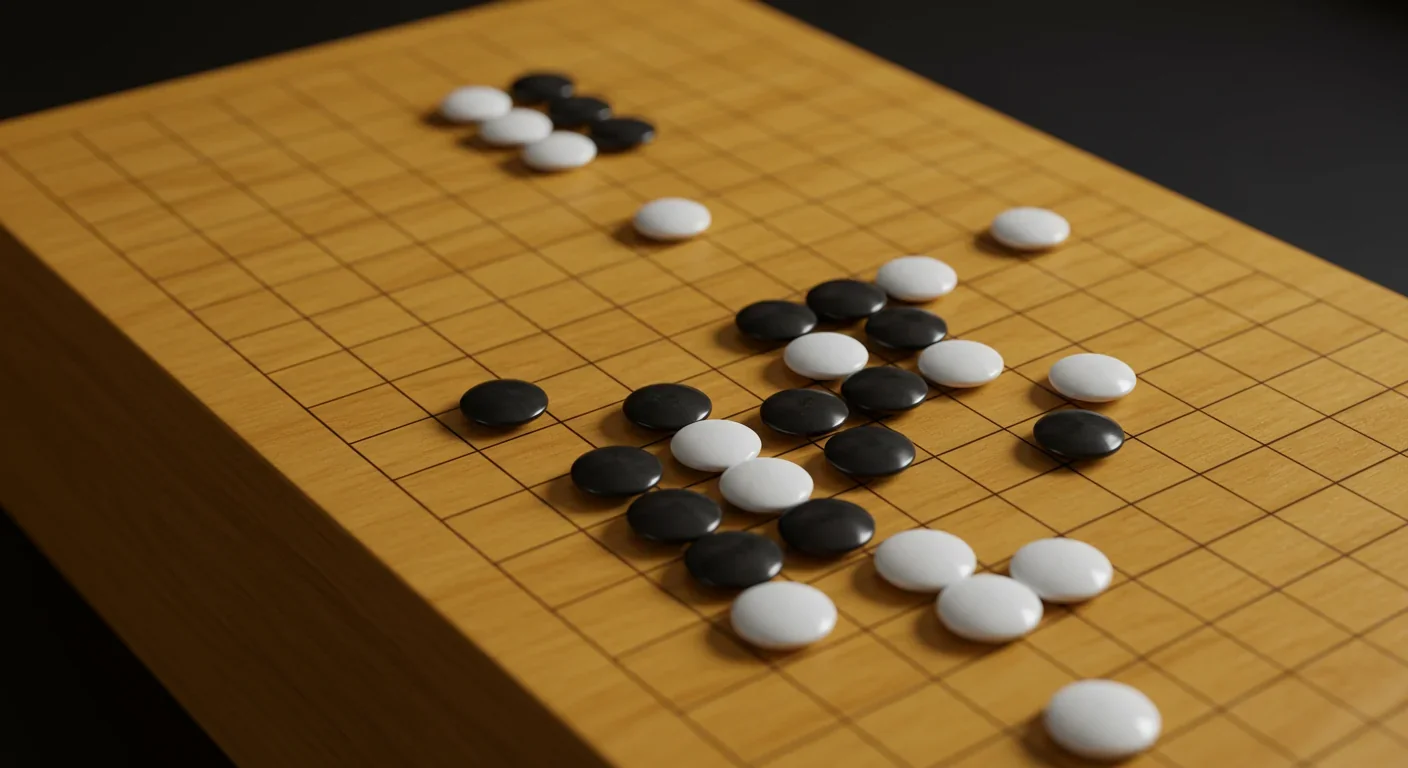

AlphaGo revolutionized AI by defeating world champion Lee Sedol through reinforcement learning and neural networks. Its successor, AlphaGo Zero, learned purely through self-play, discovering strategies superior to millennia of human knowledge—opening new frontiers in AI applications across healthcare, robotics, and optimization.