Who Decides What We Can Say Online?

TL;DR: Behind every post you see online, an invisible system of AI algorithms, platform policies, government regulations, and exploited human moderators decides what billions can say. Meta's 2025 shift to community notes, the EU's Digital Services Act with fines of up to 6% of global revenue, and content moderators earning $80/month while reviewing beheadings reveal a power structure where accountability is deliberately obscured, and where your digital speech is shaped by forces you never see.

Every minute, 500 hours of video are uploaded to YouTube. Seventy million posts flood Facebook. Billions of tweets, snaps, and TikToks cascade through our digital ecosystem. And behind this torrent of human expression sits an invisible force (part algorithm, part human, part corporate policy, part government mandate) that determines what billions of people see, share, and say.

You might think you control your social media feed. But you don't. Someone else—or more accurately, something else—already decided what you'll read before you woke up this morning.

The question isn't whether content moderation exists. It's who holds the keys to that power—and whether we should trust them with it.

Content moderation isn't a single entity. It's a complex, multi-layered system where power is distributed—and often obscured—across five major stakeholders, each with competing interests and conflicting mandates.

Platform Engineers and Policy Teams: At the top sit the tech giants: Meta, Google, ByteDance, and Twitter/X. Their product designers embed values into every algorithm. When Mark Zuckerberg announced in January 2025 that Meta would end its third-party fact-checking program in the United States and replace it with community notes "similar to X," he wasn't just tweaking a policy. He was redefining who gets to arbitrate truth on platforms used by more than 3 billion people. His reasoning? The fact-checkers, he said, had been "too politically biased."

But who decides what's biased? The platforms themselves. Meta's internal cross-check program (XCheck) routes posts from high-profile users through extra layers of review, which in practice has shielded many of them from the enforcement ordinary users face. This isn't transparency; it's preferential treatment built into the system.

AI Systems: Algorithms now handle the bulk of moderation decisions. Facebook's AI scans billions of posts in real time, flagging hate speech, violence, and nudity before human eyes ever see them. YouTube's machine-learning model reduced flagged extremist videos by 30% in 2023. Sounds efficient, until you realize that automated systems have mistakenly flagged posts from the Auschwitz Museum as violating community standards and blocked ads from struggling small businesses during the pandemic.

The problem? AI learns from data created by humans—data steeped in historical inequity. When the majority of AI developers for platforms like Facebook, Instagram, and TikTok have been part of the white male culture of Silicon Valley, the unconscious biases of those developers embed themselves into the systems they create. The result: algorithmic shadowbanning that disproportionately suppresses BIPOC and LGBTQ+ voices, even when their content violates no rules.

Government Regulators: Governments are no longer passive observers. The European Union's Digital Services Act (DSA), which entered into force in November 2022, designates platforms with at least 45 million monthly active users in the EU (roughly 10% of its 450 million consumers) as Very Large Online Platforms (VLOPs). VLOPs must publish transparency reports, carry out risk assessments, undergo annual independent audits, and disclose algorithmic details, and they face fines of up to 6% of global revenue for non-compliance.

In the United States, Section 230 of the Communications Decency Act has long shielded platforms from liability for user content. But that protection is cracking. FCC Chairman Brendan Carr has signaled an aggressive push to roll back Section 230, and recent court rulings have started to carve out exceptions. In Anderson v. TikTok (2024), the Third Circuit held that TikTok's recommendation algorithm could be considered the platform's own conduct, allowing liability claims that Section 230 would otherwise bar. When platforms actively promote dangerous content, courts are starting to treat them as participants rather than neutral intermediaries.

Outsourced Human Moderators: Behind the AI veneer is an army of human content moderators, mostly invisible and mostly exploited. Meta, ByteDance, and others outsource moderation to companies like Accenture, Telus, and Sama, employing workers in Colombia, Kenya, Ghana, and the Philippines. These moderators process 700 to 1,000 cases per shift and are required to average 7 to 12 seconds per decision. They view beheadings, child abuse, and torture for wages as low as $80 per month. Many report depression, PTSD, and suicidal ideation. When moderators in Kenya attempted to unionize, they were fired. One moderator, Solomon, attempted suicide after being denied psychological support; he was then terminated, flown back to his home country, and offered a lower-paid role.

This is content moderation's dirty secret: it's a new factory floor of exploitation, hidden behind layers of subcontracting that shield Big Tech from accountability.

Civil Society and User Communities: The final stakeholder is us, the users. Grassroots advocacy groups have shaped policy through coordinated pressure, and EU bodies such as the EDPS and EDPB weigh in through formal opinions. In one notable case, a RINJ campaign that threatened advertiser boycotts pushed Facebook to update its hate-speech policy. On X, Community Notes (crowdsourced fact-checks) now provide context to potentially misleading posts. But only 1–5% of users ever see these notes, and the system is vulnerable to political manipulation, especially during elections.

The power structure is clear: platforms design the rules, AI enforces them at scale, governments impose legal constraints, outsourced laborers handle the overflow, and users provide feedback that's often ignored. It's a system where accountability is deliberately obscured.

Content moderation today is a hybrid system: AI does the heavy lifting, humans provide the nuance. But the balance is tilting dangerously toward automation.

The AI Moderation Pipeline: When you post a photo, tweet, or video, it doesn't go live unchecked. First, the content is preprocessed—stripped of metadata, resized, and tokenized. Then AI systems analyze it using natural language processing (NLP) for text and computer vision for images. These models scan for hate speech, nudity, violence, misinformation, and copyright violations. If the AI flags the content, it either removes it automatically or queues it for human review.
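The final routing step can be sketched in a few lines. This is a minimal illustration, assuming the NLP and computer-vision models have already produced a violation score between 0 and 1 for each policy category; the `route` function, the category names, and both thresholds are hypothetical, since platforms do not publish their real cutoffs.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical thresholds: real platforms tune these per policy and do not publish them.
AUTO_REMOVE_AT = 0.95   # confident enough to act without a human
HUMAN_REVIEW_AT = 0.60  # uncertain: queue the post for a moderator


@dataclass
class Decision:
    action: str            # "remove", "human_review", or "publish"
    policy: Optional[str]  # category that drove the decision, if any
    score: float


def route(scores: Dict[str, float]) -> Decision:
    """Route one post given per-policy violation scores from upstream models."""
    if not scores:
        return Decision("publish", None, 0.0)
    # Act on the single most likely violation.
    policy, score = max(scores.items(), key=lambda item: item[1])
    if score >= AUTO_REMOVE_AT:
        return Decision("remove", policy, score)
    if score >= HUMAN_REVIEW_AT:
        return Decision("human_review", policy, score)
    return Decision("publish", policy, score)


if __name__ == "__main__":
    print(route({"hate_speech": 0.97, "nudity": 0.10}).action)  # remove
    print(route({"violence": 0.72}).action)                     # human_review
    print(route({"spam": 0.20}).action)                         # publish
```

Lowering `AUTO_REMOVE_AT` automates more removals at the cost of more false positives; lowering `HUMAN_REVIEW_AT` sends more borderline posts to the already overloaded human queue.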

YouTube's Content ID is a textbook example of automated enforcement. It creates digital "fingerprints" of copyrighted works, then scans every upload for matches. In 2021, YouTube processed 1.5 billion Content ID claims. When a match is found, the copyright owner can monetize the video, block it, or take it down. That sounds fair, but the system errs in both directions. Universal Music Publishing Group has said Content ID fails to catch around 40% of uses of its music, while creators report rampant false positives: white noise videos, performances of Beethoven (whose compositions are long in the public domain), and even creators' own music have been flagged. One musician, Miracle of Sound, had his channel struck by the distributor of his own music.
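Content ID's matching algorithm is proprietary, so the sketch below swaps in a much simpler, well-known technique: fingerprinting a recording as the set of its overlapping k-frame windows ("shingles") and claiming an upload when enough of its windows appear in a reference work. The coarse pitch-sequence features, the catalog entry, and the 0.5 threshold are all hypothetical. The example shows how a fingerprint that only captures the melody cannot tell a label's recording of a public-domain piece from a creator's own performance of it.

```python
from typing import Dict, List, Sequence, Set, Tuple

Frame = int  # one coarse feature per time step (here: a MIDI pitch number)


def fingerprint(frames: Sequence[Frame], k: int = 4) -> Set[Tuple[Frame, ...]]:
    """Set of overlapping k-frame windows ("shingles") summarizing a recording."""
    return {tuple(frames[i:i + k]) for i in range(len(frames) - k + 1)}


def match_ratio(upload: Sequence[Frame], reference: Sequence[Frame], k: int = 4) -> float:
    """Fraction of the upload's windows that also occur in the reference work."""
    up = fingerprint(upload, k)
    if not up:
        return 0.0
    return len(up & fingerprint(reference, k)) / len(up)


def scan(upload: Sequence[Frame], catalog: Dict[str, Sequence[Frame]],
         threshold: float = 0.5, k: int = 4) -> List[str]:
    """Names of catalog works that would trigger a claim against this upload."""
    return [name for name, ref in catalog.items()
            if match_ratio(upload, ref, k) >= threshold]


# Opening of the public-domain "Ode to Joy" melody, as MIDI pitches.
ODE_TO_JOY = [64, 64, 65, 67, 67, 65, 64, 62, 60, 60, 62, 64, 64, 62, 62]

# Hypothetical rights holder's reference file: a recording of the same melody.
catalog = {"label_recording_of_symphony_9": ODE_TO_JOY + [67, 65, 64]}

if __name__ == "__main__":
    indie_performance = list(ODE_TO_JOY)       # a creator's own performance
    unrelated = [50, 52, 53, 55, 57, 58, 60, 62]
    print(scan(indie_performance, catalog))     # ['label_recording_of_symphony_9']
    print(scan(unrelated, catalog))             # []
```

Richer acoustic features make a real system far more discriminating than this toy, but the same trade-off applies: a lower threshold catches more infringement and wrongly claims more legitimate uploads.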

The power imbalance is stark: only about 9,000 rights holders—mostly major studios and labels—have access to Content ID. Independent creators don't. This creates a two-tiered system where large corporations can claim content instantly, while small creators must navigate a labyrinthine appeals process that favors the accuser.

When AI Goes Wrong: AI moderation is prone to two types of errors: false positives (removing content that should stay) and false negatives (allowing harmful content to spread).
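The two error types are usually measured against a human-audited sample. The sketch below assumes each audited item is recorded as a pair `(removed_by_system, actually_violating)`; the function name and the audit counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def error_rates(audit: Iterable[Tuple[bool, bool]]) -> Dict[str, float]:
    """Compute moderation error rates from an audited sample.

    Each item is (removed_by_system, actually_violating) as judged by careful human review.
    """
    counts = Counter(audit)
    tp = counts[(True, True)]    # harmful and removed
    fp = counts[(True, False)]   # acceptable but removed (false positive)
    fn = counts[(False, True)]   # harmful but left up (false negative)
    tn = counts[(False, False)]  # acceptable and left up
    return {
        # Share of acceptable posts that were wrongly taken down.
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        # Share of harmful posts that slipped through.
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical audit of 120 posts: 100 acceptable, 20 violating.
    sample = ([(False, False)] * 90 + [(True, False)] * 10
              + [(True, True)] * 15 + [(False, True)] * 5)
    print(error_rates(sample))
    # {'false_positive_rate': 0.1, 'false_negative_rate': 0.25}
```

Because tightening a threshold lowers one rate while raising the other, a platform that reports only one of them is telling half the story.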

False positives are rampant. Meta's automated systems have over-enforced its rules against terrorist content on Arabic-language posts, for example during the May 2021 Israel-Gaza conflict. Posts containing the Arabic word "shaheed" (martyr) and the phrase "from the river to the sea", historically significant expressions that are not inherently hateful, have been wrongfully removed. In February 2024 alone, users filed over 7 million appeals against hate-speech removals on Meta's platforms, and 80% of those appeals cited context such as satire or awareness-raising.

False negatives are just as dangerous. TikTok's own internal report revealed that over 30% of content normalizing pedophilia and 100% of content fetishizing minors slipped through its filters. On X, out of 8.9 million posts reported for child safety violations in the first half of 2024, only 14,571 were removed—a removal rate of 0.16%.
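The X figure can be checked directly. Note that this ratio compares removals with reports, not with confirmed violations, so it is a rough indicator rather than a precise miss rate.

```python
# Figures from the text: X, first half of 2024, child-safety reports.
reports = 8_900_000
removed = 14_571

removal_rate = removed / reports
print(f"Removal rate: {removal_rate:.2%}")              # Removal rate: 0.16%
print(f"Reports per removal: {reports / removed:.0f}")  # Reports per removal: 611
```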

Why does AI fail? Three reasons:

1. Training Data Bias: Many algorithms are developed using datasets from the Global North, predominantly in English. When applied to low-resource languages like Burmese, Amharic, or Sinhala, their accuracy drops sharply. In 2015, Facebook had only two Burmese-speaking moderators, despite widespread anti-Rohingya content that contributed to ethnic violence in Myanmar. Meta's reliance on machine translation rather than native-language training data creates systematic bias.

2. Lack of Context: AI struggles with sarcasm, irony, and cultural nuance. A post raising awareness of breast cancer might be flagged as nudity. A historical discussion about slavery might be censored as hate speech. Without contextual awareness, AI makes oversimplified judgments that infringe on free speech.

3. Opacity: Even platform developers often don't understand how their algorithms work. Deep learning models are "black boxes"—they deliver results, but the internal logic is inscrutable. This opacity undermines accountability. If you don't know why your post was removed, you can't appeal effectively.

The Human Backup: When AI can't decide, humans step in. But they're overwhelmed. Facebook grew its content-review team from 4,500 to 7,500 people in 2017, a drop in the ocean for a platform that had nearly 2 billion users at the time and has roughly 3 billion today. TikTok reached 10,000 moderators in early 2022. Moderators work in grueling conditions, reviewing graphic content in rapid succession, and they receive minimal support. Facebook provides counseling services and resiliency training, but these programs are often inadequate. One moderator in Colombia told researchers, "We have to moderate a lot of cases. In a normal shift, we have to go through 700 to 1,000 cases, which means we have to accomplish an average time of 7-12 seconds to moderate each case."
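The quota in that quote can be turned into a pace. This back-of-envelope sketch uses only the 700 to 1,000 cases and 7 to 12 seconds per case from the quote; the function names are illustrative.

```python
SECONDS_PER_HOUR = 3600


def decisions_per_hour(seconds_per_case: float) -> float:
    """How many cases a moderator must clear each hour at a given handling time."""
    return SECONDS_PER_HOUR / seconds_per_case


def viewing_hours(cases: int, seconds_per_case: float) -> float:
    """Time spent actually looking at content to clear a shift's quota."""
    return cases * seconds_per_case / SECONDS_PER_HOUR


if __name__ == "__main__":
    # Quota and handling-time figures quoted by the moderator in Colombia.
    for cases, secs in [(700, 7), (1000, 12)]:
        print(f"{cases} cases at {secs}s: {decisions_per_hour(secs):.0f} decisions/hour, "
              f"{viewing_hours(cases, secs):.1f} hours of continuous review")
```

At the fast end, that is more than 500 decisions an hour, any of which may involve graphic material.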

The pressure to meet quotas incentivizes speed over accuracy. Mistakes are inevitable. And when mistakes happen, the platforms blame the contractors, who blame the moderators. No one is held accountable.

Content moderation doesn't happen in a vacuum. It's shaped by legal frameworks that vary wildly across jurisdictions, creating a patchwork of rules that platforms struggle—or refuse—to navigate.

Section 230: America's Shield: In the United States, Section 230 of the Communications Decency Act is the bedrock of online speech. Passed in 1996, it states: "No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider." In plain English: platforms aren't liable for what users post.

Section 230 was intended to encourage platforms to self-regulate without fear of lawsuits. And it worked—arguably too well. Platforms like Facebook, YouTube, and Reddit grew into behemoths, hosting billions of user posts with minimal legal risk. But Section 230 has become a double-edged sword. Critics argue it enables platforms to shirk responsibility for harmful content, from misinformation to hate speech to terrorist propaganda.

Recent legal challenges are testing the limits. In Lemmon v. Snap (2021), parents sued Snapchat over its Speed Filter, which incentivized dangerous driving and led to fatal accidents. The Ninth Circuit ruled that the filter was a product feature, not user content, and thus not protected by Section 230. Similarly, in Anderson v. TikTok (2024), the Third Circuit found that TikTok's recommendation algorithm promoting the "Blackout Challenge" could constitute platform conduct, opening the door to liability.

These rulings signal a shift: when platforms actively promote content through algorithms, courts are beginning to treat that promotion as the platform's own conduct rather than mere hosting of other people's speech. And platforms can be sued for their own conduct.

The EU's Digital Services Act: A New Standard: The European Union has taken a different approach. The Digital Services Act, which entered into force in November 2022, establishes a tiered regulatory framework. Platforms with at least 45 million monthly active users in the EU, designated as Very Large Online Platforms (VLOPs), face the most stringent obligations:

- Algorithmic Transparency: Platforms must disclose the main parameters their algorithms use for content recommendation and targeted advertising.

- Risk Assessments: VLOPs must evaluate risks to fundamental rights, electoral integrity, and public health, and implement measures to mitigate those risks.

- Independent Audits: Platforms must undergo annual audits by external reviewers.

- User Redress: Users must be able to appeal content removal decisions and receive explanations.

- No Profiling of Minors: Platforms may not show minors ads based on profiling, and may not target anyone with ads based on sensitive personal data such as ethnicity, political views, or sexual orientation.

Violations can result in fines up to 6% of global revenue—billions of dollars for companies like Meta and Google. The DSA's reach extends beyond Europe through the "Brussels effect": platforms find it easier to apply EU standards globally than to maintain separate systems for different markets.
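The two headline numbers in the DSA reduce to simple arithmetic. A minimal sketch, not legal advice: the 45 million threshold (about 10% of the EU's roughly 450 million people) and the 6% ceiling come from the text, while the helper names and the $100 billion turnover in the example are hypothetical. The 6% figure is a cap; actual fines are set case by case.

```python
EU_POPULATION = 450_000_000
VLOP_THRESHOLD = EU_POPULATION // 10  # 45,000,000 monthly active users in the EU
MAX_FINE_RATE = 0.06                  # ceiling: 6% of global annual revenue


def is_vlop(monthly_eu_users: int) -> bool:
    """True if a platform meets the VLOP designation threshold (45 million or more)."""
    return monthly_eu_users >= VLOP_THRESHOLD


def max_dsa_fine(global_annual_revenue: float) -> float:
    """Upper bound on a DSA fine; real fines are set case by case below this cap."""
    return MAX_FINE_RATE * global_annual_revenue


if __name__ == "__main__":
    print(is_vlop(44_999_999), is_vlop(45_000_000))  # False True
    # Hypothetical platform with $100 billion in global annual revenue:
    print(f"${max_dsa_fine(100e9):,.0f}")             # $6,000,000,000
```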

But the DSA creates a "transparency paradox." Full disclosure of algorithmic code could mislead users rather than inform them, and it risks exposing proprietary systems. Platforms must report indicators of the accuracy and possible error rates of their automated moderation tools, but the DSA doesn't specify how detailed those disclosures should be. Critics worry that platforms will comply with the letter of the law while evading its spirit.

Other National Approaches: India's IT Rules 2021 require platforms to trace the first originator of content and remove flagged content within 36 hours. Germany's Network Enforcement Act (NetzDG) forces platforms to remove manifestly unlawful content within 24 hours of a complaint. The UK's Online Safety Act 2023 (passed as the Online Safety Bill) gives the regulator, Ofcom, expanded duties to promote media literacy.

Each framework reflects different priorities: the U.S. prioritizes platform freedom, the EU emphasizes user rights, and authoritarian regimes use moderation laws to suppress dissent. Platforms must navigate all of them simultaneously, creating compliance nightmares and strategic loopholes.

Algorithmic bias isn't a bug—it's a feature of systems designed without diverse input, trained on imbalanced data, and deployed without accountability.

Language Bias: Meta's moderation systems perform markedly worse on low-resource languages. Nicholas & Bhatia (2023) found that Meta's AI models for languages like Burmese, Amharic, and Sinhala/Tamil rely heavily on machine translation and limited training data, causing errors that expose users to unchecked hate speech and misinformation. In contrast, high-resource languages like English, Spanish, and Chinese have robust datasets and native-speaking moderators. The result: a two-tiered moderation landscape where Western users receive better protection than users in the Global South.
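A disparity like the one Nicholas & Bhatia describe shows up most clearly when an audit breaks error rates down by language. This sketch assumes a human-labelled sample recorded as `(language_code, flagged_by_model, violates_policy)`; the language codes are ISO 639-1 (`en` for English, `am` for Amharic), and the counts are invented for illustration, not measurements of any real system.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def miss_rate_by_language(audit: Iterable[Tuple[str, bool, bool]]) -> Dict[str, float]:
    """False-negative (miss) rate per language from a human-labelled audit sample.

    Each item is (language_code, flagged_by_model, violates_policy).
    """
    missed: Dict[str, int] = defaultdict(int)
    violating: Dict[str, int] = defaultdict(int)
    for lang, flagged, violates in audit:
        if violates:
            violating[lang] += 1
            if not flagged:
                missed[lang] += 1
    return {lang: missed[lang] / total for lang, total in violating.items()}


if __name__ == "__main__":
    # Hypothetical audit counts, not measurements of any real system.
    audit = ([("en", True, True)] * 90 + [("en", False, True)] * 10
             + [("am", True, True)] * 55 + [("am", False, True)] * 45
             + [("en", False, False)] * 50)
    rates = miss_rate_by_language(audit)
    print(rates)                                    # {'en': 0.1, 'am': 0.45}
    print(f"gap: {rates['am'] / rates['en']:.1f}x")  # gap: 4.5x
```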

Racial and Identity Bias: Shadowbanning—the practice of suppressing content without notifying the creator—disproportionately affects BIPOC and LGBTQ+ influencers. Algorithms prioritize engagement metrics, and content that challenges dominant norms often receives lower engagement, creating a feedback loop. Influencers like Jeff Aririguzo (@Jeffsofresh) and Samuel Snell were shadowbanned for discussing racism and emerging technologies. One LGBTQ+ creator noted, "Being told over and over again that you are 'inappropriate' or unwanted, simply for being yourself is both exhausting and traumatizing."

The bias originates from homogeneous developer teams. The majority of AI developers for major platforms have been part of the white male culture of Silicon Valley. Their unconscious biases—what counts as "normal," "safe," or "appropriate"—embed themselves in the code. When the training data reflects historical inequities, the AI amplifies them.
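The engagement feedback loop described above can be illustrated with a toy engagement-ranked feed. This is not any platform's ranker: the two-slot layout, the 800/200 split of views, and the engagement rates are hypothetical. The model only shows how ranking on accumulated engagement lets a small early gap compound; it says nothing about why one item starts behind.

```python
from typing import Dict, Sequence


def simulate_feed(initial: Dict[str, float], engagement_rate: Dict[str, float],
                  rounds: int, slot_views: Sequence[int] = (800, 200)) -> Dict[str, float]:
    """Toy engagement-ranked feed.

    Each round, items are ranked by accumulated engagement; the item in slot i gets
    slot_views[i] views and earns views * its per-view engagement rate.
    Assumes len(slot_views) >= number of items.
    """
    totals = dict(initial)
    for _ in range(rounds):
        ranking = sorted(totals, key=totals.get, reverse=True)
        for slot, item in enumerate(ranking):
            totals[item] += slot_views[slot] * engagement_rate[item]
    return totals


if __name__ == "__main__":
    start = {"A": 105.0, "B": 100.0}  # B starts 5% behind
    # Equal appeal per view: the early 5% gap becomes a 2.5x gap.
    print(simulate_feed(start, {"A": 0.05, "B": 0.05}, rounds=10))
    # {'A': 505.0, 'B': 200.0}
    # B engages 60% more per view and still never overtakes A.
    print(simulate_feed(start, {"A": 0.05, "B": 0.08}, rounds=10))
    # {'A': 505.0, 'B': 260.0}
```

In the second run, B earns more engagement per view than A but is stuck in the low-exposure slot, so it never collects enough total engagement to be ranked first.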

Political Bias: All stakeholders accuse platforms of political bias, often contradicting each other. Conservatives claim platforms censor right-wing voices; progressives argue platforms amplify right-wing misinformation. The truth is more nuanced. Platforms moderate based on engagement and advertiser pressure, not ideology. But the perception of bias is self-reinforcing. When Elon Musk acquired Twitter in 2022 and later rebranded it as X, he framed moderation changes as restoring "free speech." Yet X's first transparency report since the takeover revealed 5.3 million account suspensions and 10.6 million post removals in the first half of 2024, a 300% increase from pre-Musk levels. Musk's rhetoric and his actions diverged.

Meta's recent decision to move its content moderation teams from California to Texas was framed as reducing bias. But critics argue it's a symbolic move to align with conservative politics, potentially affecting policies on abortion content and LGBTQ+ issues. If moderation is shaped by the political geography of the teams enforcing it, then bias is inevitable.

Economic Bias: Content ID and similar systems concentrate power in the hands of large copyright holders. Only 9,000 entities have access to YouTube's Content ID, and they can monetize disputes while creators wait for resolution. Smaller creators lack the resources to fight false claims. This isn't just unfair—it's anti-competitive. It stifles creative expression and skews the digital economy toward established players.

Platforms claim to be transparent. But transparency without accountability is theater.

Transparency Reports: Meta, Google, X, and TikTok publish transparency reports detailing content removals, government requests, and appeals. But these reports are often vague. They list aggregate numbers—millions of posts removed, thousands of appeals granted—without explaining why specific decisions were made or revealing the algorithms behind them.

Meta shut down its CrowdTangle analytics tool in August 2024, hindering researchers' ability to track misinformation. When independent researchers requested access to data under the DSA, platforms provided limited datasets or restricted API access, citing privacy and security concerns. The DSA mandates data access for VLOPs, but enforcement is uneven.

Algorithmic Audits: The DSA requires independent audits, but who audits the auditors? Platforms choose their own auditors, creating potential conflicts of interest. And audits focus on compliance with stated policies, not whether those policies are just or effective.

Community Notes: A Step Forward or a Cop-Out?: X's Community Notes and Meta's planned rollout represent a shift toward crowdsourced moderation. Contributors can add context to posts, and notes appear when users from across the political spectrum agree they're helpful. A 2024 UCSD study found that Community Notes addressing COVID-19 vaccine misinformation were overwhelmingly accurate.
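The "across the political spectrum" requirement is known as bridging. X's published ranking algorithm infers raters' viewpoints with matrix factorization rather than using explicit groups; the sketch below substitutes a simpler, hypothetical rule with labelled viewpoint groups and made-up thresholds to show the core idea.

```python
from collections import defaultdict
from typing import Iterable, Tuple


def note_is_shown(ratings: Iterable[Tuple[str, bool]],
                  min_ratings_per_group: int = 3,
                  helpful_share: float = 0.7) -> bool:
    """Simplified "bridging" rule for a crowdsourced note.

    ratings: (rater_viewpoint_group, rated_helpful) pairs.
    The note is shown only if at least two viewpoint groups rated it, each group has
    enough ratings, and each group independently found it helpful often enough.
    """
    total = defaultdict(int)
    helpful = defaultdict(int)
    for group, was_helpful in ratings:
        total[group] += 1
        helpful[group] += int(was_helpful)
    if len(total) < 2:
        return False  # agreement within a single camp doesn't count
    return all(total[g] >= min_ratings_per_group and helpful[g] / total[g] >= helpful_share
               for g in total)


if __name__ == "__main__":
    bridging = [("left", True)] * 9 + [("left", False)] + [("right", True)] * 8 + [("right", False)] * 2
    one_sided = [("left", True)] * 20 + [("right", True)] + [("right", False)] * 4
    too_early = [("left", True)] * 5 + [("right", True)] * 2
    print(note_is_shown(bridging), note_is_shown(one_sided), note_is_shown(too_early))
    # True False False
```

The one-sided note has 84% overall approval and is still not shown, which is the point of bridging. The minimum-ratings requirement also illustrates why notes can lag behind a fast-spreading post.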

But there are serious limitations. Only 1–5% of users see published notes. Notes can take hours or days to appear—by which time misinformation has already spread. The system is vulnerable to manipulation by coordinated groups. And it shifts responsibility from the platform to users, allowing platforms to claim they're fostering free speech while abdicating oversight.

Meta has said, "We expect Community Notes to be less biased than the third-party fact-checking program it replaces because it allows more people with more perspectives to add context." But less biased doesn't mean unbiased. And without corporate accountability, who answers when the system fails?

Every post you flag, every video removed, every account suspended—someone, somewhere, made that decision. And that someone is likely underpaid, overworked, and traumatized.

The Invisible Workforce: Meta, ByteDance, Google, and others outsource moderation to companies like Accenture, Sama, Telus, and Teleperformance. These contractors operate in countries with weak labor protections: Colombia, Kenya, Ghana, the Philippines. Moderators are paid poverty wages—as low as 1,300 Ghanaian cedis (~$80) per month, with bonuses up to 4,900 cedis (~$315)—far below the cost of living.

They work 18- to 20-hour shifts and must clear 700 to 1,000 cases a day, at a required average of 7 to 12 seconds per decision. They see the worst of humanity: beheadings, child sexual abuse, torture, animal cruelty. Many suffer from depression, PTSD, and suicidal ideation. One moderator, Michał Szmagaj, said, "The pressure to review thousands of horrific videos each day (beheadings, child abuse, torture) takes a devastating toll on our mental health."

Union Suppression: When moderators in Kenya tried to unionize, they were fired. Telus fired union activists who worked as moderators for TikTok in Turkey. In May 2023, 150 content moderators from nine countries gathered in Nairobi to launch the first African Content Moderators Union. They demand living wages, safe working conditions, mental health support, and union recognition. But Big Tech hides behind layers of subcontracting, claiming it's not responsible for contractor practices.

Meta moved its moderation outsourcing from Kenya to a secret site in Accra, Ghana, after lawsuits in Kenya. Conditions in Accra are reported to be even worse. Workers are told not to disclose their employment, even to family. One moderator, Abel, said, "They told me not to tell anyone I worked for Meta, not even my family." When Solomon attempted suicide after being denied psychological support, he was terminated, flown back to his home country, and offered a lower-paid role.

This is the reality of content moderation: a global supply chain of exploitation, where the most vulnerable workers bear the psychological cost of keeping our feeds clean.

The Call for Regulation: Equidem, a labor rights organization, interviewed 113 content moderators and data-labelers across Colombia, Kenya, and the Philippines. Their report, "Content Moderation is a New Factory Floor of Exploitation," calls for cross-border labor standards, transparency in subcontracting, and mental health protections formalized in contracts—not optional add-ons.

Regional bodies like the African Union, ASEAN, SAARC, and CARICOM are positioned to lead these efforts. But so far, governments have been passive. Kenyan courts heard a landmark case in 2023 and 2024 brought by former Meta moderators against Meta and Sama, but the Kenyan government itself took no systemic action.

The trajectory of content moderation will shape the future of online speech, democracy, and human rights. Three forces will drive that future: technological evolution, regulatory pressure, and civil society mobilization.

Technological Evolution: AI will get better—but so will the threats. Deepfakes, synthetic media, and AI-generated disinformation are already straining moderation systems. TikTok now requires creators to label AI-generated or significantly altered content, and its algorithm proactively detects deepfakes. But adversarial AI can evade detection. Extremist groups adapt their messaging to evade filters, using coded language, memes, and encrypted channels.

Large language models (LLMs) like GPT-4 are being deployed to provide "second opinions" before enforcement actions. Meta announced in January 2025 that it would use LLMs to review flagged content, aiming to reduce false positives. But LLMs inherit biases from their training data, and they can be gamed. The race between moderation AI and adversarial AI is an arms race with no end.
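Meta has not published how its LLM second-opinion step works. The sketch below assumes a simple agreement rule: content is removed automatically only when both the classifier and a second reviewer agree, and disagreements go to a human. `toy_reviewer` is a keyword placeholder standing in for a model call; no real LLM API is used.

```python
from typing import Callable

# Hypothetical stand-in for an LLM reviewer: returns True if it agrees the post violates policy.
SecondOpinion = Callable[[str], bool]


def decide(post: str, classifier_score: float, second_opinion: SecondOpinion,
           threshold: float = 0.9) -> str:
    """Enforce automatically only when the classifier and the second reviewer agree."""
    if classifier_score < threshold:
        return "keep"
    if second_opinion(post):
        return "remove"
    return "human_review"  # disagreement is escalated, not enforced


def toy_reviewer(post: str) -> bool:
    """Placeholder for a model call: treats posts framed as history as legitimate context."""
    return not post.lower().startswith("history:")


if __name__ == "__main__":
    print(decide("Buy followers now!!!", 0.95, toy_reviewer))                       # remove
    print(decide("History: photos from the camp's liberation", 0.95, toy_reviewer))  # human_review
    print(decide("Nice sunset", 0.10, toy_reviewer))                                # keep
```

Escalating disagreements, rather than silently keeping or removing the post, is what lets a second opinion reduce false positives. But if the second reviewer shares the first model's blind spots, agreement between the two proves little.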

Regulatory Pressure: The DSA is just the beginning. More countries will follow the EU's lead, demanding algorithmic transparency, risk assessments, and user redress. In the U.S., Section 230 reform is on the table. If it's repealed, platforms will face a stark choice: moderate everything to avoid liability, or moderate nothing to avoid accusations of bias. Either extreme will harm free speech.

Algorithmic Impact Statements (AIS)—similar to environmental impact assessments—have been proposed as a transparency mechanism. Before deploying a new algorithm, platforms would disclose its social, ethical, and legal implications. But who enforces AIS? And can they be gamed?

Civil Society Mobilization: Users, advocacy groups, and workers are fighting back. Content moderators are unionizing. Civil society organizations like the EFF and RINJ are pressuring platforms through advertiser boycotts, legal challenges, and public campaigns, while EU data-protection bodies such as the EDPS add regulatory pressure. Community Notes and similar crowdsourced tools empower users to shape moderation, though they're not panaceas.

Digital constitutionalism—a framework treating platforms as public infrastructures with responsibilities to protect communication rights—is gaining traction. Scholars argue that platforms should be subject to democratic oversight, not just market forces. But operationalizing digital constitutionalism across diverse legal and cultural contexts is a monumental challenge.

The Stakes: If we fail to get content moderation right, the consequences are dire. Unchecked hate speech fuels violence—as seen in Myanmar, where Facebook's failure to moderate anti-Rohingya content contributed to ethnic cleansing. Misinformation undermines democracy—as seen in the 2016 and 2020 U.S. elections. Over-moderation stifles dissent—as seen in authoritarian regimes using "content moderation" as a tool of censorship.

But if we succeed—if we build systems that are transparent, accountable, and fair—we can create a digital public sphere that respects free speech, protects vulnerable communities, and holds power to account.

You're not powerless. Here's how to navigate and challenge the moderation systems shaping your digital life:

1. Know the Rules: Read the community guidelines of the platforms you use. Understand what's allowed and what's not. When you're flagged, know your rights.

2. Appeal Aggressively: If your content is wrongly removed, appeal. In the EU, the DSA requires platforms to explain their moderation decisions and offer a way to contest them. Use it.

3. Support Transparency Initiatives: Advocate for open-source algorithms, independent audits, and data access for researchers. Demand that platforms disclose how their moderation systems work.

4. Pressure Platforms: Use your voice—and your wallet. Boycott platforms that exploit moderators or suppress marginalized voices. Support civil society campaigns.

5. Educate Yourself: Media literacy is your best defense against misinformation. Learn to identify deepfakes, verify sources, and think critically.

6. Join the Fight: Support content moderator unions, digital rights organizations, and advocacy groups pushing for reform.

The question "Who decides what we can say online?" has no single answer. It's platforms, AI, governments, moderators, and you. The power is distributed—but it's not immutable. By understanding the system, demanding accountability, and mobilizing collectively, we can reclaim agency over our digital speech.

The future of online expression is being written right now. Will you be a passive consumer—or an active architect? The choice is yours.

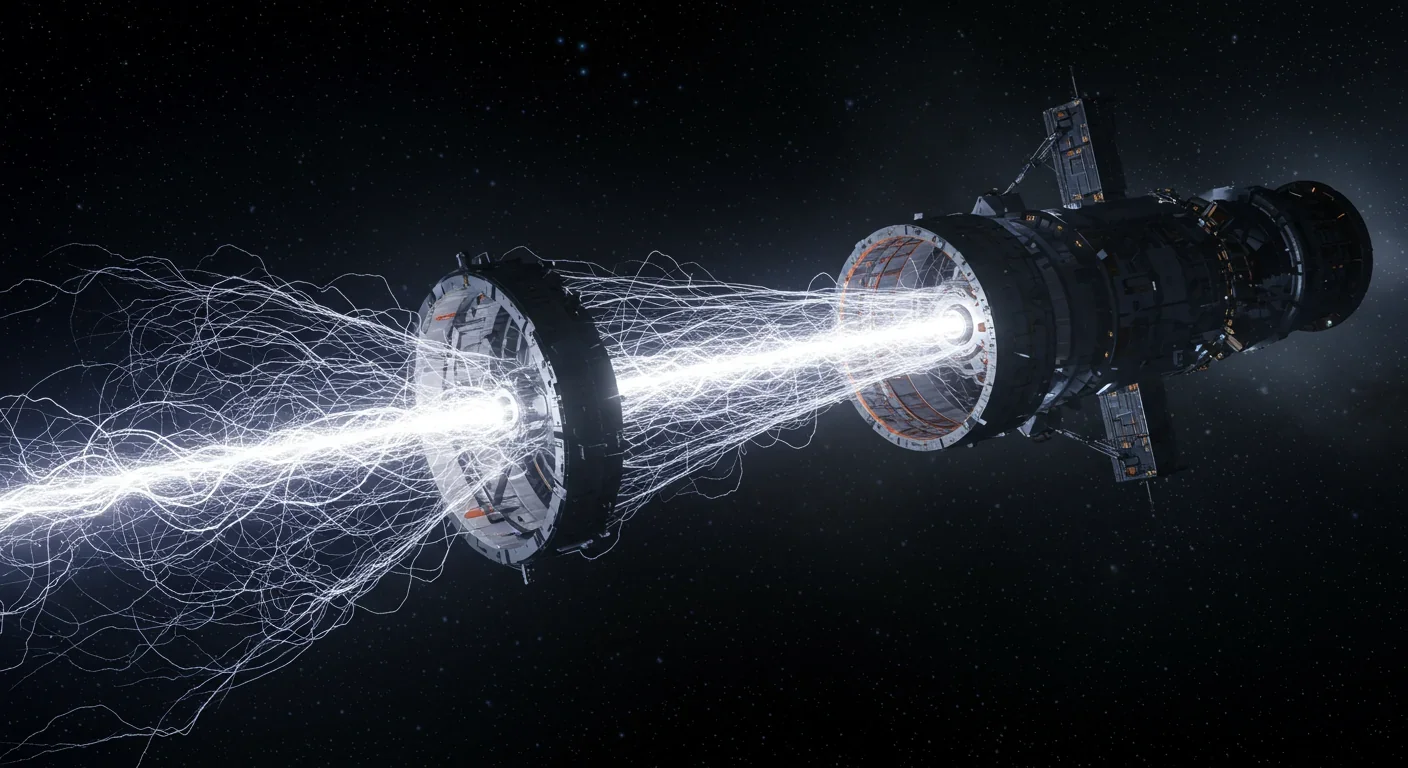

The Bussard Ramjet, proposed in 1960, would scoop interstellar hydrogen with a massive magnetic field to fuel fusion engines. Recent studies reveal fatal flaws: magnetic drag may exceed thrust, and proton fusion loses a billion times more energy than it generates.

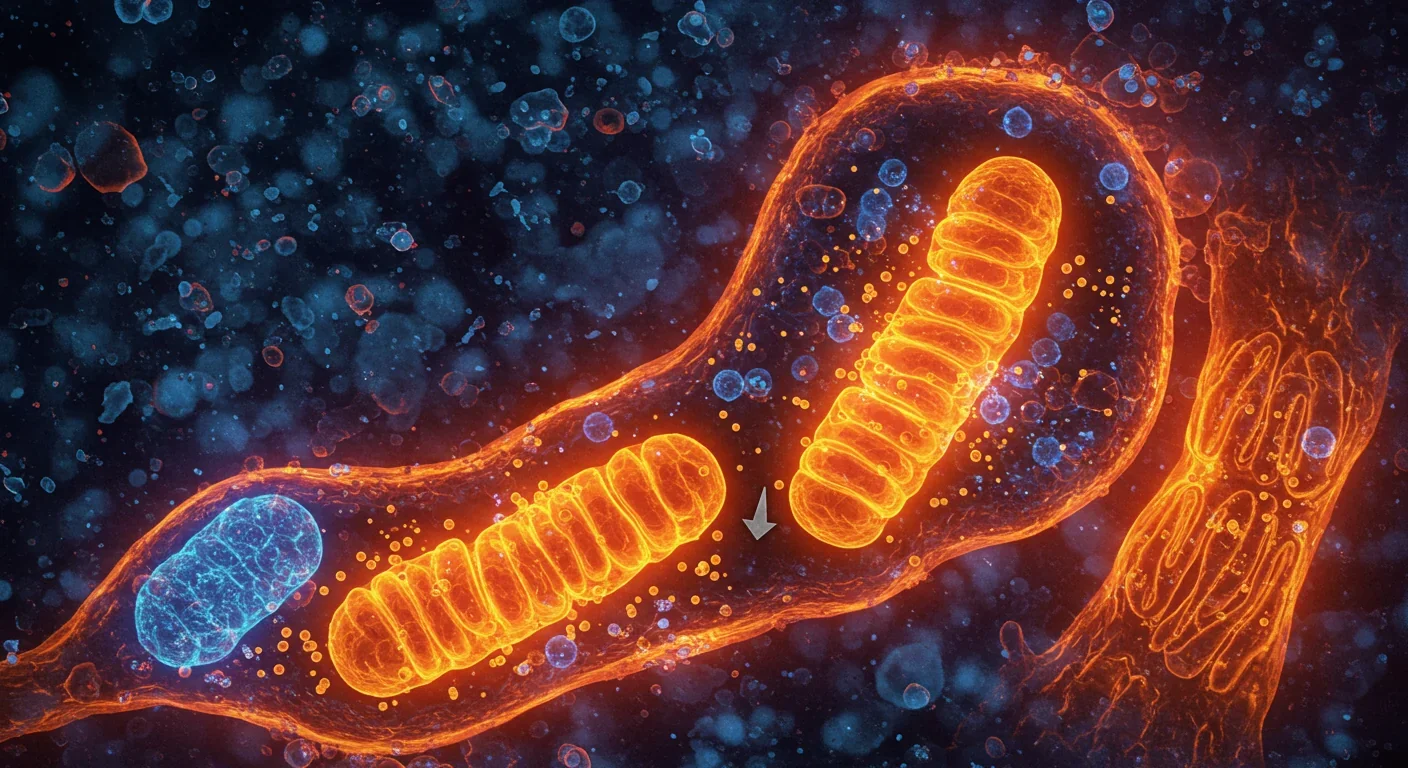

Mitophagy—your cells' cleanup mechanism for damaged mitochondria—holds the key to preventing Parkinson's, Alzheimer's, heart disease, and diabetes. Scientists have discovered you can boost this process through exercise, fasting, and specific compounds like spermidine and urolithin A.

Shifting baseline syndrome explains why each generation accepts environmental degradation as normal—what grandparents mourned, you take for granted. From Atlantic cod populations that crashed by 95% to Arctic ice shrinking by half since 1979, humans normalize loss because we anchor expectations to our childhood experiences. This amnesia weakens conservation policy and sets inadequate recovery targets. But tools exist to reset baselines: historical data, long-term monitoring, indigenous knowle...

Social media has created an 'authenticity paradox' where 5.07 billion users perform carefully curated spontaneity. Algorithms reward strategic vulnerability while psychological pressure to appear authentic causes creator burnout and mental health impacts across all users.

Scientists have decoded how geckos defy gravity using billions of nanoscale hairs that harness van der Waals forces—the same weak molecular attraction that now powers climbing robots on the ISS, medical adhesives for premature infants, and ice-gripping shoe soles. Twenty-five years after proving the mechanism, gecko-inspired technologies are quietly revolutionizing industries from space exploration to cancer therapy, though challenges in durability and scalability remain. The gecko's hierarch...

Cities worldwide are transforming governance through digital platforms, from Seoul's participatory budgeting to Barcelona's open-source legislation tools. While these innovations boost transparency and engagement, they also create new challenges around digital divides, misinformation, and privacy.

Every major AI model was trained on copyrighted text scraped without permission, triggering billion-dollar lawsuits and forcing a reckoning between innovation and creator rights. The future depends on finding balance between transformative AI development and fair compensation for the people whose work fuels it.