TL;DR: Deepfakes (AI-generated audio, video, and images) threatened elections worldwide in 2024, appearing in over 60 countries. Detection tools lose 45-50% of their effectiveness outside the lab, and humans correctly identify high-quality deepfake videos only 24.5% of the time. From India's 50 million AI-generated robocalls to Slovakia's election-eve forgery, synthetic media exploits cognitive biases and erodes trust in all evidence, creating a "liar's dividend" in which real recordings can be dismissed as fake. Legal frameworks are emerging (the EU AI Act, U.S. state laws, the FCC's ban on AI-voiced robocalls), but enforcement lags and First Amendment challenges complicate regulation. Defense requires layered approaches: AI detection tools, cryptographic provenance (C2PA), media literacy education, platform accountability, and international cooperation. The cost of creating convincing fakes now falls below the cost of verifying them, threatening democracy's foundation: a shared reality based on observable facts.

In January 2024, over 20,000 New Hampshire voters received a chilling phone call. The voice on the line sounded exactly like President Joe Biden, urging them to skip the Democratic primary. "Save your vote for the November election," the familiar voice instructed. Within hours, the robocall was exposed as a sophisticated deepfake—but by then, the damage was done. This wasn't a dystopian preview of future elections. This was democracy under siege, today.

Welcome to the age of synthetic reality, where seeing is no longer believing and hearing is no longer proof. Deepfakes, AI-generated audio, video, and images realistic enough to fool experts, are no longer confined to cybersecurity conferences and academic papers. They've become weapons of mass manipulation, deployed in over 60 countries during the 2024 "super election year," when countries home to more than half the world's population held elections. From India's 50 million AI-generated robocalls to Slovakia's election-eve audio forgery to France's viral videos of political leaders saying things they never said, deepfakes are rewriting the rules of political warfare.

But here's what makes this threat uniquely terrifying: detection technology is losing the arms race. While deepfake generators improve rapidly, detection tools lose 45-50% of their effectiveness when deployed in real-world conditions. Humans fare even worse, correctly identifying high-quality deepfake videos only 24.5% of the time, worse than a coin flip. We're entering an era where authentic evidence can be dismissed as fake and fabricated content accepted as truth. The very foundation of informed democracy, our ability to distinguish fact from fiction, is crumbling.

Deepfake technology didn't emerge overnight. Its origins trace back to 2014, when researcher Ian Goodfellow and colleagues introduced Generative Adversarial Networks (GANs), an AI architecture in which two neural networks compete in a zero-sum game. The "generator" creates synthetic content, while the "discriminator" tries to tell it apart from real examples. Over many training iterations, each network's improvement pushes the other, until the generator produces media that is very difficult to distinguish from authentic recordings.
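For readers who want the formal version, the original 2014 formulation writes this competition as a minimax objective. Here $D(x)$ is the discriminator's estimated probability that a sample $x$ is real, $G(z)$ turns random noise $z$ into a synthetic sample, and the two networks push the value $V$ in opposite directions:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

For a fixed generator, the best possible discriminator is $D^*(x) = \dfrac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}$, where $p_g$ is the distribution of generated samples. When $p_g$ matches $p_{\text{data}}$, $D^*(x) = 1/2$ everywhere: the discriminator can do no better than guessing, which is the formal sense of "indistinguishable." Modern deepfake tools use many variations on this objective, so treat it as the conceptual core rather than any particular product's training recipe.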

Two years later, WaveNet pioneered realistic voice synthesis, but even that breakthrough required extensive training data. The game changed in 2020 when systems like 15.ai demonstrated they could clone voices using just 15 seconds of audio. Today such short-sample cloning is commonplace: commercial voice cloning platforms can produce convincing synthetic speech from about 30 seconds of decent-quality audio, analyzing voice fingerprints, speech patterns, phoneme transitions, and even breathing rhythms.

The visual deepfake revolution followed a parallel path. Early face-swapping required hours of processing and left telltale artifacts—unnatural blinking, weird lighting, skin texture inconsistencies. Modern diffusion models like Midjourney, DALL-E, and Stable Diffusion changed everything. They generate photorealistic images from simple text prompts, with consistent lighting, perfect skin texture, and cinematic quality that would cost thousands of dollars to produce traditionally.
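The paragraph doesn't say how diffusion models work internally. In the standard denoising diffusion (DDPM) formulation, which commercial systems build on in ways they mostly don't disclose, training corrupts a real image $x_0$ with Gaussian noise over $T$ small steps, and a neural network learns to undo that corruption. The noisy image at step $t$ has a closed form:

$$
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I), \qquad \bar{\alpha}_t = \prod_{s=1}^{t} (1 - \beta_s)
$$

Here $\beta_s$ is the small noise variance added at step $s$, so $\bar{\alpha}_t$ shrinks toward zero and $x_T$ is nearly pure noise. Generation runs the learned denoiser in reverse, starting from random noise and, in text-to-image systems, conditioning each step on the prompt.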

But the real threat isn't the technology itself—it's the accessibility. Platforms like HeyGen and D-ID's "Live Portrait" allow anyone to create talking deepfake videos from a single photo and short audio clip. No technical expertise required. No expensive equipment. Just a smartphone and an internet connection. "For three or four years, everyone talked about the coming wave of deepfake manipulation," noted Rolf Fredheim, a researcher at Reset. "But for whatever reason it didn't happen. But there's reason to think it could be different now." He was right. 2024 proved to be the tipping point.

The 2024 Lok Sabha elections in India showcased deepfakes' full destructive and, paradoxically, constructive potential. Political parties spent an estimated $50 million on authorized AI-generated content, using the technology for multilingual outreach and personalized voter engagement. The Bharatiya Janata Party (BJP) launched an AI chatbot called NaMo AI on WhatsApp to answer policy questions. The All India Anna Dravida Munnetra Kazhagam (AIADMK) deployed AI-generated audio of the late former Chief Minister Jayalalithaa at campaign rallies, her iconic voice rallying supporters from beyond the grave.

But alongside these sanctioned uses, malicious deepfakes proliferated wildly. Over 50 million AI-generated robocalls flooded constituencies, discussing local issues from irrigation in Maharashtra to healthcare in Bihar. Deepfakes of celebrities such as Ranveer Singh and Aamir Khan appeared across social platforms, falsely showing them endorsing political parties. An AI voice clone of Congress leader Rahul Gandhi went viral, purportedly capturing him being sworn in as Prime Minister. According to a McAfee survey, over 75% of Indian internet users encountered deepfake content during the election, and 80% said they were more concerned about deepfakes than a year earlier.

The psychological impact extends far beyond simple misinformation. Deepfakes exploit fundamental cognitive vulnerabilities: confirmation bias (we believe content that aligns with our preexisting views), availability heuristic (memorable content skews our perception of probability), authority bias (we trust voices of authority figures), and anchoring bias (initial information disproportionately influences our judgments). When a deepfake confirms what voters already suspect about an opponent, critical thinking shuts down.

The electoral calendar creates perfect conditions for deepfake weaponization. Two days before Slovakia's 2023 parliamentary elections, during the mandatory "election silence" period when media cannot publish political content, a fabricated audio recording surfaced on Telegram. It purportedly captured opposition leader Michal Šimečka discussing election rigging with a journalist, including plans to buy votes from the Roma minority. AFP fact-checkers quickly identified it as fake, noting "numerous signs of manipulation" in the voices and speech patterns. But the timing was surgical—the clip reached at least 100,000 Facebook views, 30,000 Instagram views, and 13,000 Telegram views before election day, amplified by former politicians and judges who lent it credibility with their trusted status.

Slovakia's vulnerability wasn't accidental. Only 18% of Slovaks trusted their government heading into the election, and trust in mainstream media sat 40 percentage points lower among those sympathetic to conspiracy theories about the Ukraine war. Encrypted messaging platforms like Telegram, where the deepfake spread unchecked, provided a safe haven beyond the reach of fact-checkers and content moderators. The election silence laws, designed to prevent last-minute manipulation, instead created a legal environment where deepfake misinformation could thrive without media counterbalancing.

Not all synthetic media applications in elections are sinister. The same AI tools that enable manipulation also offer unprecedented opportunities for democratic participation. India's 2024 election demonstrated AI's potential as a force for inclusion. The government's Bhashini platform provided real-time multilingual translation, allowing candidates to address voters across India's linguistic diversity. Parties created AI-personalized video messages addressing local issues—irrigation concerns in one constituency, healthcare in another—at a scale impossible through traditional campaigning.

"If used safely and ethically, this technology could be an opportunity for a new era in representative governance, especially for the needs and experiences of people in rural areas to reach Parliament," noted researchers analyzing the election. AI chatbots answered voter queries 24/7, democratizing access to policy information. Smaller parties with limited resources could compete with established players through AI-driven content creation, potentially leveling the political playing field.

In Pakistan, one instance showed how transparent deepfake use could enhance rather than undermine democracy. AI-generated audio of a political figure was laid over authentic footage, but, crucially, the content disclosed that AI had been used, and the politician had consented. This approach demonstrated that synthetic media, when properly labeled and authorized, can extend political communication without deception.

Even resurrection of deceased politicians, while ethically fraught, can serve commemorative and inspirational purposes when clearly marked as AI-generated tribute rather than authentic recording. The key distinction lies not in the technology itself but in transparency, consent, and intent. When voters know they're experiencing AI-enhanced content, they can engage critically. When they're deceived, democracy suffers.

The deepfake threat extends beyond individual fabrications to a more insidious phenomenon: the "liar's dividend." Law professors Bobby Chesney and Danielle Citron first identified this dynamic in 2019—the perverse reward that liars receive when deepfakes become common knowledge. Once the public knows sophisticated fakes exist, any politician caught on video or audio doing something incriminating can simply claim: "That recording isn't real—it's a deepfake."

This creates an asymmetric advantage for the corrupt and dishonest. Real evidence loses credibility. Genuine whistleblowers can be dismissed. Authentic footage becomes suspect. "The real risk is not a single fake video, but the erosion of trust in all digital media," explains Mohammed Khalil of DeepStrike. We're witnessing this erosion in real-time across democracies worldwide.

The speed of viral spread compounds the problem. An Elon Musk repost of a Kamala Harris-voiced deepfake reached over 129 million views before being labeled or removed. By the time fact-checkers debunk fabricated content, it has already shaped millions of opinions. Platform algorithms optimized for engagement amplify sensational content regardless of authenticity, creating a detection lag that favors rapid disinformation over slower verification.

Cross-platform propagation multiplies the threat. Coordinated networks share deepfakes simultaneously across X (formerly Twitter), Facebook, Instagram, Telegram, TikTok, and YouTube. A 2024 study analyzing U.S. election discussions found that over 75% of coordinated accounts remained active even after platform suspensions, demonstrating remarkable resilience. These networks don't just share existing deepfakes—they use AI-generated profile pictures and synthetic personas to appear as authentic grassroots movements.
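The study's method isn't described here, but a common research heuristic for spotting such networks is rapid co-sharing: flagging pairs of accounts that repeatedly post the same item within seconds of each other. The sketch below is a minimal, hypothetical version; the function name, the 60-second window, and the three-item threshold are illustrative assumptions, and real analyses add statistical baselines to separate coordination from coincidence.

```python
from collections import defaultdict
from itertools import combinations


def coordinated_pairs(posts, window_seconds=60, min_co_shares=3):
    """Return account pairs that shared the same items within `window_seconds`.

    `posts` is an iterable of (account, item_id, unix_time) tuples, where
    item_id could be a URL or a hash of a media file (hypothetical schema).
    """
    shares_by_item = defaultdict(list)
    for account, item_id, timestamp in posts:
        shares_by_item[item_id].append((account, timestamp))

    co_share_counts = defaultdict(int)
    for shares in shares_by_item.values():
        # Count each account pair at most once per item.
        pairs_for_item = set()
        for (acct_a, t_a), (acct_b, t_b) in combinations(shares, 2):
            if acct_a != acct_b and abs(t_a - t_b) <= window_seconds:
                pairs_for_item.add(tuple(sorted((acct_a, acct_b))))
        for pair in pairs_for_item:
            co_share_counts[pair] += 1

    return {pair: n for pair, n in co_share_counts.items() if n >= min_co_shares}


posts = [
    ("bot_a", "x1", 0), ("bot_b", "x1", 5), ("human", "x1", 3600),
    ("bot_a", "x2", 100), ("bot_b", "x2", 110),
    ("bot_a", "x3", 200), ("bot_b", "x3", 230),
]
print(coordinated_pairs(posts))  # {('bot_a', 'bot_b'): 3}
```

The human account shared one of the same items, but an hour later, so it is never paired with the bots; repetition across items, not a single overlap, is what the heuristic treats as a signal.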

The economic incentives of social media platforms create additional barriers to effective countermeasures. Engagement drives ad revenue, and controversial content, including deepfakes, generates engagement. Platform infrastructure can inadvertently accelerate the spread of deepfakes while also offering intervention points for detection tools, creating a fundamental tension between business models and democratic integrity.

For marginalized groups, the consequences are particularly severe. Automated content moderation systems often lack cultural and linguistic nuance, leading to over-enforcement against minority content while missing coded language and targeted deepfakes. Meta's experience with breast cancer educational content illustrates the over-enforcement problem: its automated systems flagged legitimate health information as pornography, and only after Meta improved its detection of text overlaid on images were an additional 2,500 pieces of content sent to human review instead of being wrongly removed.

Women candidates face a distinctive threat: deepfake pornography. Despite Brazil's 2024 ban on unlabeled AI-generated content during municipal elections, deepfake pornographic videos targeting female candidates persisted, demonstrating that legislation alone cannot stop determined attackers. The psychological toll on victims—and the chilling effect on women's political participation—represents an assault on democratic representation itself.

National responses to the deepfake threat reveal stark philosophical and practical differences. The United States prioritizes transparency and individual rights, with 18 states enacting legislation by 2024 regulating election deepfakes. California led the charge with multiple laws: AB-730 (2019) bars distributing materially deceptive audio or video of a candidate within 60 days of an election unless it carries a disclosure that it has been manipulated; AB-2655 (2024) requires social media platforms to block or label election-focused deepfakes and create reporting systems; and AB-2839 (2024) allows individual voters to sue deepfake creators for damages.

Yet enforcement remains sparse. No prosecutions under these state laws were reported during the 2024 cycle, and First Amendment challenges have already eroded the strictest provisions. Federal Judge John Mendez issued a preliminary injunction blocking AB-2839 in October 2024, ruling that "most of it acts as a hammer instead of a scalpel" and noting that "even deliberate lies (said with 'actual malice') about the government are constitutionally protected" under the New York Times Co. v. Sullivan precedent.

The Federal Communications Commission took decisive action in February 2024, banning AI-generated voices in robocalls after the Biden deepfake incident. The political consultant responsible was charged with 26 criminal offenses and hit with a $6 million FCC fine for violating caller ID spoofing rules. Yet the New Hampshire case also demonstrates the challenge: by the time prosecutors charged the perpetrator, the election had passed and the damage was done.

The European Union takes a dramatically different approach through its AI Act, which turns deepfake detection from an optional security measure into a mandatory compliance tool backed by severe penalties: up to €35 million or 7% of global annual turnover for prohibited practices, €15 million or 3% for high-risk system violations, and €7.5 million or 1.5% for transparency violations. Starting August 2, 2026, Article 50 requires that AI-generated or substantially manipulated content be clearly disclosed and marked in a machine-readable, detectable format.
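To see why turnover-based caps matter for large platforms, here is a small calculation sketch. It assumes, as the Act is commonly summarized, that a company faces the higher of the fixed amount and the turnover percentage (SMEs get the lower of the two, a case this sketch ignores), and it uses the tier figures exactly as quoted in the paragraph. The tier keys are hypothetical labels; which tier a particular violation falls under is a legal question the code does not answer.

```python
# Fine tiers as quoted in the text: (fixed cap in EUR, share of worldwide annual turnover).
# The keys are hypothetical labels, not legal categories.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 0.07),
    "high_risk_violation": (15_000_000, 0.03),
    "transparency_violation": (7_500_000, 0.015),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: float) -> float:
    """Upper bound of a fine for a large company, assuming the higher of the two caps applies."""
    fixed_cap, turnover_share = FINE_TIERS[tier]
    return max(fixed_cap, turnover_share * worldwide_turnover_eur)


# A company with EUR 2 billion in turnover: the percentage-based cap dominates.
print(max_fine_eur("prohibited_practice", 2_000_000_000))  # about 140 million
# A company with EUR 100 million in turnover: the fixed cap dominates.
print(max_fine_eur("prohibited_practice", 100_000_000))  # 35000000
```

The crossover sits where the percentage equals the fixed amount (€500 million in turnover for the top tier); above it, the ceiling grows with the company.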

This creates a three-tier regulatory framework where every visual asset published after August 2026 carries potential liability. The regulation demands end-to-end visibility and documentation across supply chains, turning detection from a technical sidebar into a regulatory core. Yet the AI Act's deepfake definition remains vague, leaving "too much scope for what a deep fake is," according to legal analysts. Exceptions for "assistive functions" and content "not substantially altering" create loopholes that potentially deceptive material can exploit.

The Digital Services Act (DSA) adds another layer, obligating large social media platforms to label deepfakes through Article 35(1)(k). The dual regulatory framework creates potential for double-labeling without clear standards, though the overall intent—mandatory transparency—represents a fundamental shift in platform accountability.

China prioritizes social stability through its regulatory approach, mandating clear labeling of all deepfake content and holding platforms strictly liable for synthetic media spread. While this ensures rapid removal and attribution, it also concentrates power in state hands, raising concerns about censorship and surveillance.

The Philippines' Deepfake Accountability and Transparency Act (Bill 10,567) prohibits distribution of deceptive deepfakes within 90 days of an election, with fines up to $10,000 and higher penalties if violations intend to incite violence. The Department of Information and Communications Technology is collaborating with OpenAI and Google to embed watermarks in AI-generated content, providing verifiable traces for detection algorithms.

International coordination remains elusive. The patchwork of national laws creates protection gaps that sophisticated actors exploit, forum-shopping for jurisdictions with weak enforcement. A deepfake created in one country can spread globally in minutes, yet prosecution requires navigating complex questions of jurisdiction, extradition, and conflicting legal standards. As researchers note, "harmonizing international regulations to mitigate risks associated with deepfake technology" remains an urgent but unfulfilled imperative.

The arms race between deepfake generation and detection defines our immediate future. Modern detection systems employ ensemble methods, combining multiple AI models trained on different authenticity aspects. Intel's FakeCatcher takes a unique biological approach, using Photoplethysmography (PPG) to analyze "blood flow" in video pixels—examining subtle color changes present in real videos but absent in deepfakes. The system can process 72 concurrent real-time detection streams, returning results in milliseconds with claimed 96% accuracy.
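The sketch below is not Intel's implementation; it only illustrates the generic remote-PPG principle the paragraph describes. It assumes a face region has already been located and its green channel averaged per frame (green is the channel commonly used because it tends to carry the strongest pulse signal), then looks for a dominant rhythm in an assumed heart-rate band of 0.7 to 4 Hz, or 42 to 240 beats per minute. The function name, band, and naive DFT are illustrative choices.

```python
import math


def estimate_pulse_bpm(green_means, fps, low_hz=0.7, high_hz=4.0):
    """Toy remote-PPG estimate from per-frame mean green values of a skin region.

    Returns the dominant frequency in the assumed heart-rate band, in beats
    per minute, or None if the signal has no energy in that band.
    """
    n = len(green_means)
    mean = sum(green_means) / n
    centered = [v - mean for v in green_means]  # drop the constant skin-tone offset

    best_freq, best_power = None, 0.0
    for k in range(1, n // 2):
        freq = k * fps / n  # frequency of DFT bin k, in Hz
        if not low_hz <= freq <= high_hz:
            continue
        # Naive DFT at bin k: correlate the signal with a cosine and a sine.
        re = sum(c * math.cos(2 * math.pi * k * i / n) for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * k * i / n) for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return None if best_freq is None else best_freq * 60.0


# Ten seconds of synthetic 30 fps "video" with a faint 1.2 Hz (72 bpm) pulse.
signal = [120 + 0.5 * math.sin(2 * math.pi * 1.2 * i / 30) for i in range(300)]
print(estimate_pulse_bpm(signal, fps=30))  # close to 72.0
print(estimate_pulse_bpm([120.0] * 300, fps=30))  # None: no pulse-like variation
```

A real detector would compare such signals across many face regions and over time, since a synthetic video can contain color fluctuations too; a missing or incoherent pulse is evidence, not proof.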

Yet that 96% figure does not survive real-world deployment. Detection effectiveness drops 45-50% when tools leave controlled lab conditions and confront actual deepfakes circulating on social media. The rapid evolution of synthesis techniques requires continuous model retraining, and few-shot generation models like 15.ai, which needs just 15 seconds of training audio, keep outpacing detection capabilities.

Language bias presents another critical vulnerability. Most detection research focuses on English, paying little attention to other widely spoken languages such as Chinese, Spanish, Hindi, and Arabic. This creates exploitable gaps: state actors can target specific linguistic communities with deepfakes that detection systems aren't trained to catch.

Provenance technology offers a complementary defense. The Coalition for Content Provenance and Authenticity (C2PA), formed in 2021 by Adobe, Arm, BBC, Intel, Microsoft, and Truepic (building on the Content Authenticity Initiative that Adobe, The New York Times, and Twitter launched in 2019) and now counting Google as a steering committee member, developed technical standards for embedding cryptographic metadata into digital content. This "Content Credentials" system can document a file's history: publisher, device used, location and time of recording, editing steps, and chain of custody.
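The core idea of chaining signed records to a file's edits can be sketched in a few lines. This is a simplified illustration, not the C2PA manifest format: each hypothetical record stores a hash of the content after one step, links to the previous record's signature, and is signed. An HMAC with a made-up shared key stands in for the certificate-based digital signatures C2PA actually uses, and the field and function names are assumptions.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret standing in for a real signing certificate.
SIGNING_KEY = b"demo-key-not-for-production"


def _sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _sign(record: dict) -> str:
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def add_step(manifest: list, content: bytes, action: str) -> list:
    """Return a new manifest with a signed record for `content` linked to the previous record."""
    prev = manifest[-1]["signature"] if manifest else ""
    record = {"action": action, "content_hash": _sha256_hex(content), "prev": prev}
    return manifest + [dict(record, signature=_sign(record))]


def verify(manifest: list, content: bytes) -> bool:
    """Check every signature and back-link, then that the last record matches `content`."""
    prev = ""
    for record in manifest:
        unsigned = {k: record[k] for k in ("action", "content_hash", "prev")}
        if record["prev"] != prev or not hmac.compare_digest(_sign(unsigned), record["signature"]):
            return False
        prev = record["signature"]
    return bool(manifest) and manifest[-1]["content_hash"] == _sha256_hex(content)


manifest = add_step([], b"raw sensor data", "captured")
manifest = add_step(manifest, b"cropped image", "cropped")
print(verify(manifest, b"cropped image"))  # True
print(verify(manifest, b"face-swapped"))  # False: content no longer matches
```

Note what a passing check means: every step was signed by the key holder and nothing changed afterward. It says nothing about whether the originally captured scene was genuine.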

Content Credentials are signaled by a minimalist "CR" icon and backed by machine-readable metadata that consumers can inspect with easy-to-use verification tools. Google is integrating these credentials into Search, Ads, and YouTube, potentially giving users real-time context on whether content was AI-generated. Sony announced its Camera Verify system in June 2025 for press photographers, using C2PA digital signatures to authenticate news imagery.

Yet provenance has critical limitations. C2PA verifies origin and editing history—not truthfulness. A malicious actor could create a genuine provenance chain from a fabricated original. The system only works if widely adopted across platforms and devices, but as of 2025, "very little internet content uses C2PA," according to industry assessments. Attackers can also bypass safeguards by altering provenance metadata, removing or forging watermarks, and mimicking digital fingerprints.

Watermarking offers another detection avenue. Several startups are developing systems that embed inaudible acoustic signatures into synthetic audio, detectable by specialized software. But again, effectiveness depends on universal adoption by creation platforms—an unlikely scenario when some actors have incentives to enable untraceable synthetic media.
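The simplest version of this idea is spread-spectrum watermarking: add a faint pseudo-random pattern derived from a secret key, then detect it later by correlation. The sketch below is a generic textbook technique, not any particular startup's system. The key, strength, and threshold values are illustrative assumptions, and practical schemes typically shape the pattern psychoacoustically so it stays inaudible and survives compression.

```python
import math
import random


def keyed_pattern(key: int, n: int) -> list:
    """Pseudo-random sequence of +1/-1 values reproducible from a secret key."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(samples: list, key: int, strength: float = 0.02) -> list:
    """Add the keyed pattern at low amplitude to every audio sample."""
    pattern = keyed_pattern(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pattern)]


def detect(samples: list, key: int) -> float:
    """Average correlation with the keyed pattern: about `strength` if marked, about 0 if not."""
    pattern = keyed_pattern(key, len(samples))
    return sum(s * p for s, p in zip(samples, pattern)) / len(samples)


# Three seconds of a 440 Hz tone at 16 kHz, then watermarked with key 1234.
tone = [0.5 * math.sin(2 * math.pi * 440 * i / 16000) for i in range(48000)]
marked = embed(tone, key=1234)
THRESHOLD = 0.01  # illustrative: halfway between 0 and the embedding strength
print(detect(marked, key=1234) > THRESHOLD)  # True
print(detect(tone, key=1234) > THRESHOLD)  # False
```

Detection only works with the key used at embedding time, which is why the approach depends on generation platforms marking their output and making detection available.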

Biometric-based detection using PPG analysis shows promise for field-deployable, real-time social media moderation, but scaling such systems to analyze billions of daily uploads presents enormous computational and cost challenges. The question isn't whether detection technology can work—it's whether it can keep pace with increasingly sophisticated generation tools deployed by adversaries with significant resources.

Media literacy emerges as the most democratically sustainable defense. Inoculation theory, developed by psychologist William J. McGuire in 1961, holds that exposing people to a weakened version of a persuasive attack, paired with its refutation ("refutational preemption"), builds resistance to the full-strength attack later. A 2022 study led by Jon Roozenbeek exposed 29,116 participants to short prebunking videos about manipulative tactics. Those who watched showed roughly a 5% improvement in recognizing manipulation, a modest effect but a meaningful one at scale.

More recently, researchers demonstrated that AI-generated prebunking can effectively reduce belief in election-related myths. In a preregistered two-wave experiment with 4,293 U.S. voters, participants exposed to AI-crafted prebunking messages showed significant decreases in belief in specific election rumors, with effects persisting at least one week. The study concludes that "these results provide a clear pathway for building prebunking arguments at scale, such as via chatbot delivery."

Crucially, prebunked individuals engage in "post-inoculation talk"—spreading resistance through social networks by discussing what they learned. This viral mechanism extends inoculation's reach beyond direct exposure, creating community-level resilience.

Debunking after exposure also works, according to a Joint Research Centre study published in Nature examining 5,000 European participants across Germany, Greece, Ireland, and Poland. Both prebunking and debunking reduced agreement with false claims, credibility ratings, and likelihood of sharing misinformation. Debunking had a slight edge, likely because it refutes specific misinformation with concrete evidence rather than building general skepticism.

The effectiveness of both approaches depends on source credibility and audience trust levels. Public authority debunking works better for high-trust audiences but can backfire with low-trust populations, potentially entrenching beliefs through perceived manipulation. This suggests interventions must be tailored to audience segments—a complex challenge for platforms serving billions with diverse trust profiles.

The European Commission supports coordinated verification through initiatives like EU vs Disinfo (exposing pro-Kremlin disinformation), the European Digital Media Observatory (EDMO, bringing together fact-checkers and researchers), and Trustworthy Public Communications (providing evidence-based guidance). These efforts demonstrate that effective countermeasures require coordination among institutions, media organizations, platforms, and civil society—no single entity can combat the threat alone.

As citizens navigating this treacherous information landscape, we need new skills and systematic approaches.

Critical consumption habits: Before sharing political content, pause and verify. Check multiple reputable sources. Look for original reporting rather than aggregated claims. Be especially skeptical of emotionally charged content that confirms your biases; that's precisely when cognitive vulnerabilities are most exploited.

Technical awareness: Learn to recognize common deepfake artifacts. Watch for unnatural facial movements, inconsistent lighting across a scene, strange blurring around edges, audio that doesn't quite sync with lip movements, and uncanny valley discomfort that signals something is slightly "off." While sophisticated deepfakes minimize these tells, many circulating fakes still exhibit detectable flaws.

Provenance checking: Where platforms display C2PA credentials, use verification tools before accepting content as authentic. Look for the "CR" icon and review the content history. Absence of credentials doesn't prove manipulation. Verified credentials provide confidence about a file's origin and editing history, though not proof that what it depicts is true.

Source triangulation: Don't rely on a single channel for political information. Cross-reference claims across outlets with different editorial perspectives. If a dramatic revelation appears only on social media without mainstream coverage, treat it with extreme skepticism until verified.

Contextual reasoning: Ask cui bono—who benefits from this content? Timing matters enormously. Content released during election silence periods or immediately before voting, when fact-checking has minimal time to work, deserves heightened suspicion.

Procedural verification for high-stakes decisions: Organizations should implement mandatory verification protocols for significant actions. The 2024 Arup fraud case, in which a video call featuring deepfaked senior colleagues convinced a Hong Kong employee to transfer about US$25 million, demonstrates that awareness training alone is insufficient. Procedural controls, such as confirming high-value transactions by calling back on a number already on file, outperform detection training.
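A procedural control like this can be written down as an explicit rule. The sketch below is hypothetical: the threshold, field names, and the two requirements (a callback to a number already on file and a second, independent approver) are illustrative assumptions, not a description of Arup's or any organization's actual controls. The point is that neither check relies on a person judging whether a face or voice is real.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold above which extra controls apply.
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    amount: float
    requester: str                  # employee who submitted the transfer
    callback_confirmed: bool        # confirmed by calling a number already on file
    second_approver: Optional[str]  # independent employee who signed off, if any


def may_execute(req: TransferRequest) -> bool:
    """Procedural check that never relies on judging whether a face or voice is real."""
    if req.amount < HIGH_VALUE_THRESHOLD:
        return True
    if not req.callback_confirmed:
        # The channel the instruction arrived on (video call, email, chat) is not proof of identity.
        return False
    return req.second_approver is not None and req.second_approver != req.requester


# A convincing video call alone is not enough to move a large sum.
print(may_execute(TransferRequest(25_000_000, "clerk", False, None)))  # False
print(may_execute(TransferRequest(25_000_000, "clerk", True, "manager")))  # True
```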

For journalists and fact-checkers, the challenge intensifies. Traditional verification methods—confirming sources, checking backgrounds, reviewing documents—remain essential but insufficient. Technical verification using detection tools, reverse image searches, metadata analysis, and expert consultation must become standard practice. Speed matters; the window for effective debunking closes within hours as content goes viral.
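Reverse image search services don't disclose exactly how they match images, but a widely used building block for finding near-duplicates (say, an old photo recirculated as breaking news) is perceptual hashing. Below is a minimal sketch of one such scheme, the difference hash, assuming the image has already been decoded to rows of grayscale values; real tools resize with proper interpolation, handle crops and rotations, and combine many signals.

```python
def dhash(pixels: list, hash_size: int = 8) -> int:
    """Difference hash of a grayscale image given as rows of brightness values.

    The image is shrunk to hash_size rows by (hash_size + 1) columns using
    nearest-neighbour sampling; each bit records whether a pixel is brighter
    than its right-hand neighbour.
    """
    height, width = len(pixels), len(pixels[0])
    rows, cols = hash_size, hash_size + 1
    small = [
        [pixels[r * height // rows][c * width // cols] for c in range(cols)]
        for r in range(rows)
    ]
    bits = 0
    for row in small:
        for c in range(hash_size):
            bits = (bits << 1) | (1 if row[c] > row[c + 1] else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; small values suggest near-duplicate images."""
    return bin(a ^ b).count("1")


# Synthetic 72 x 64 image, a brightened copy, and a mirrored copy.
image = [[2 * x + y for x in range(72)] for y in range(64)]
brighter = [[v + 20 for v in row] for row in image]
mirrored = [row[::-1] for row in image]
print(hamming(dhash(image), dhash(brighter)))  # 0: brightness change ignored
print(hamming(dhash(image), dhash(mirrored)))  # 64: every gradient comparison flips
```

Because the hash records only relative brightness, it survives re-encoding and uniform brightness edits; the cutoff for calling two images "the same" is a tuning choice.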

Media organizations should adopt transparency standards, clearly labeling AI-generated or AI-enhanced content and explaining how verification was conducted. Building audience trust requires demonstrating rigorous editorial processes, not just asserting credibility.

Platforms bear particular responsibility. They should deploy advanced detection tools at scale, implement real-time AI-driven monitoring, and prioritize swift content review when deepfakes are flagged. Labeling altered content—rather than removing it entirely—preserves free expression while informing users, a less intrusive yet effective countermeasure. Collaboration across platforms to share threat intelligence and coordinate responses can increase effectiveness dramatically.

Blockchain-based verification and decentralized crowdsourced authentication networks offer promising approaches. While no single technical solution suffices, layered strategies combining AI detection, cryptographic provenance, human expertise, and community reporting create defense in depth.
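How such layers might combine can be sketched as a triage rule. Everything here is a hypothetical illustration: the signal names, the 0.8 score and 10-report thresholds, and the outcome labels are assumptions, not any platform's policy. Missing credentials never trigger action on their own, since most content carries none, and the strongest outcome is a label plus human review rather than removal.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    detector_score: float      # 0.0-1.0 from an AI detector (hypothetical scale)
    provenance_verified: bool  # valid content credentials were found
    user_reports: int          # number of community flags


def triage(s: Signals) -> str:
    """Combine independent layers so no single signal decides the outcome alone."""
    detector_flag = s.detector_score >= 0.8
    community_flag = s.user_reports >= 10
    if detector_flag and community_flag and not s.provenance_verified:
        return "label_and_review"  # label rather than remove, then send to a human
    if detector_flag or community_flag:
        return "human_review"
    return "no_action"


print(triage(Signals(0.9, False, 25)))  # label_and_review
print(triage(Signals(0.9, True, 25)))  # human_review
print(triage(Signals(0.3, False, 0)))  # no_action
```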

Policymakers must balance competing values: preventing manipulation without stifling legitimate speech, enabling enforcement without creating surveillance infrastructure, and protecting elections without empowering censorship. The evidence suggests focusing on mandatory disclosure rather than content bans—requiring clear labeling of AI-generated political content, harsh penalties for deceptive use, and platform accountability for failing to implement reasonable detection and labeling systems.

A proposed federal right of publicity statute addressing deepfakes must carefully interact with Section 230 protections and First Amendment rights, defining narrow exceptions for clearly deceptive electoral content without opening broader speech restrictions. International cooperation through treaties establishing minimum standards and extradition frameworks for cross-border deepfake crimes would close jurisdictional gaps.

Education systems should integrate digital literacy and critical media consumption into curricula from elementary school forward. Today's children will navigate an information environment far more complex than any previous generation faced. Teaching them to question, verify, and reason about media authenticity is as fundamental as teaching reading and mathematics.

We stand at a hinge point in democratic history. For the first time, the cost of producing convincing fabricated evidence has fallen below the cost of verifying authenticity. This inversion threatens the foundation of informed self-governance: a shared reality based on observable facts.

The deepfake crisis is fundamentally about trust—trust in institutions, media, evidence, and ultimately in the democratic process itself. When citizens can't trust what they see and hear, when politicians can dismiss genuine evidence as fabricated, when foreign actors can impersonate domestic voices at scale, the marketplace of ideas collapses into cacophony.

Yet history teaches us that new communication technologies always disrupt truth ecosystems before societies adapt. The printing press unleashed waves of religious and political propaganda before norms and institutions evolved to manage it. Photography was turned to deception early, with retouched and composite images appearing within its first decades, yet we developed forensic techniques and verification practices. Radio and television brought new manipulation vectors, and societies learned to scrutinize broadcast content critically.

We will adapt to deepfakes too. The question is how much damage occurs during adaptation, and whether our democratic institutions survive the transition intact. The encouraging news is that solutions exist: detection technology is improving, provenance systems are deploying, legal frameworks are emerging, and media literacy initiatives are scaling. The discouraging news is that implementation lags far behind the threat's evolution.

The 2024 election cycle demonstrated that deepfakes are here, they're effective, and they're being weaponized at scale. Despite early fears, the worst-case scenarios didn't materialize—public awareness and partial countermeasures limited some damage. But adversaries are learning. Generation tools are improving. Costs are falling. Access is widening.

The coming years will determine whether deepfakes become democracy's fatal vulnerability or whether we build sufficient societal immunity. That outcome depends on choices made now by technology companies, policymakers, media organizations, educators, and individual citizens. We need layered defenses: technical tools plus legal frameworks plus institutional reforms plus public education plus international cooperation.

The deepfake you don't see is the most dangerous one. Not because it's perfectly crafted, but because you stopped looking critically. Our greatest vulnerability isn't technology—it's complacency. In an age of synthetic media, perpetual vigilance isn't paranoia; it's citizenship. The price of democracy has always been eternal watchfulness. We just didn't expect to need watching eyes quite this sharp.

Every time you pause before sharing, every time you verify before believing, every time you question rather than accept, you're defending democracy. The battlefield has shifted from town squares to timelines, from printing presses to neural networks. But the war for truth, accountability, and informed self-governance continues. And in that war, we're all soldiers now.

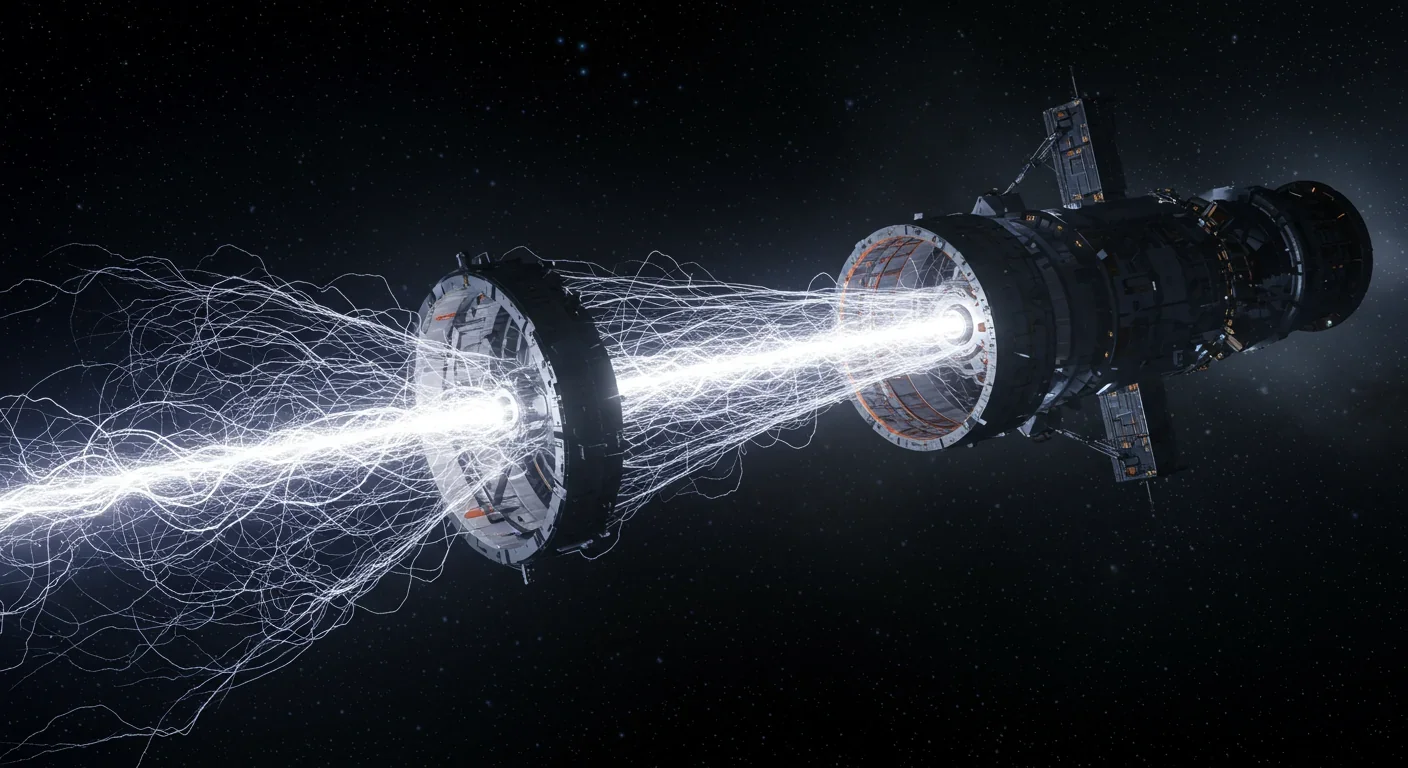

The Bussard Ramjet, proposed in 1960, would scoop interstellar hydrogen with a massive magnetic field to fuel fusion engines. Recent studies reveal fatal flaws: magnetic drag may exceed thrust, and proton fusion loses a billion times more energy than it generates.

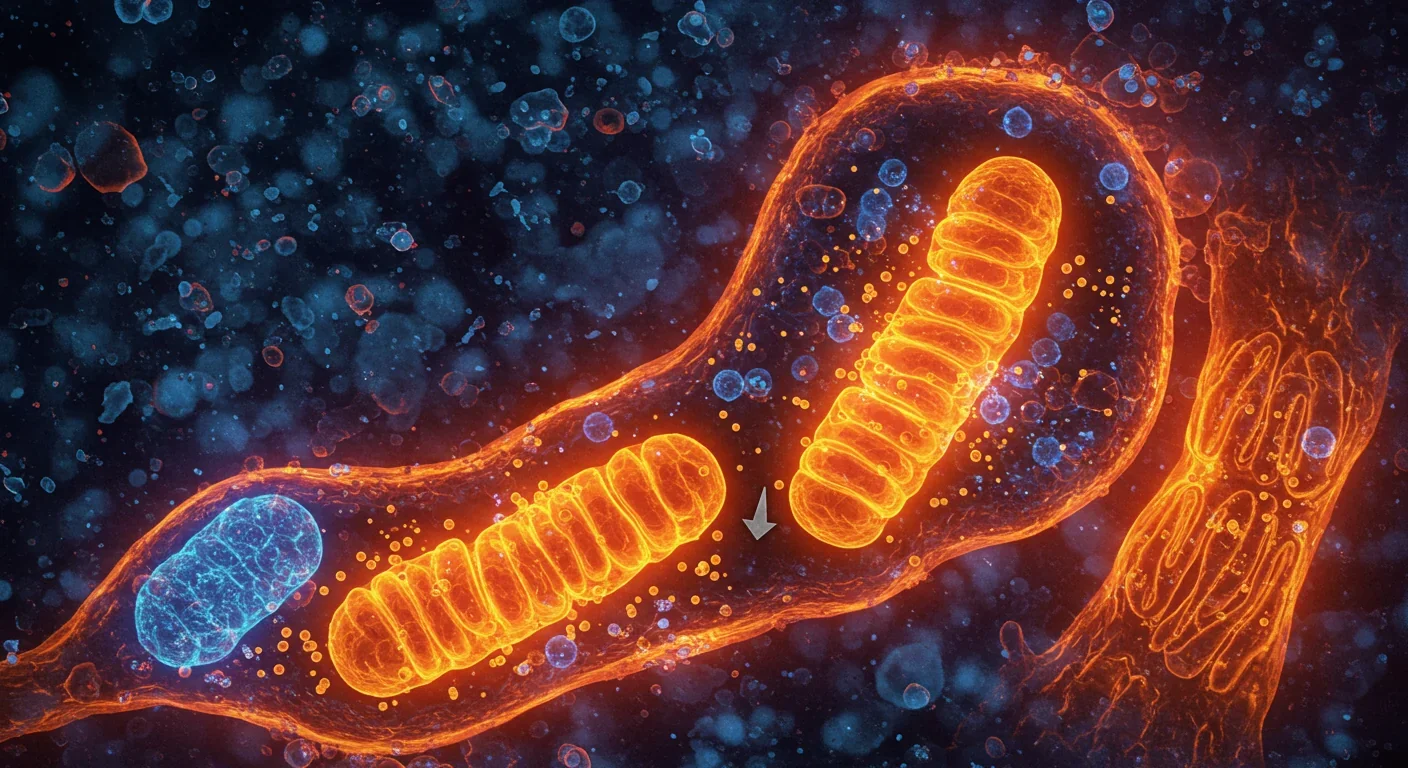

Mitophagy—your cells' cleanup mechanism for damaged mitochondria—holds the key to preventing Parkinson's, Alzheimer's, heart disease, and diabetes. Scientists have discovered you can boost this process through exercise, fasting, and specific compounds like spermidine and urolithin A.

Shifting baseline syndrome explains why each generation accepts environmental degradation as normal—what grandparents mourned, you take for granted. From Atlantic cod populations that crashed by 95% to Arctic ice shrinking by half since 1979, humans normalize loss because we anchor expectations to our childhood experiences. This amnesia weakens conservation policy and sets inadequate recovery targets. But tools exist to reset baselines: historical data, long-term monitoring, indigenous knowle...

Social media has created an 'authenticity paradox' where 5.07 billion users perform carefully curated spontaneity. Algorithms reward strategic vulnerability while psychological pressure to appear authentic causes creator burnout and mental health impacts across all users.

Scientists have decoded how geckos defy gravity using billions of nanoscale hairs that harness van der Waals forces—the same weak molecular attraction that now powers climbing robots on the ISS, medical adhesives for premature infants, and ice-gripping shoe soles. Twenty-five years after proving the mechanism, gecko-inspired technologies are quietly revolutionizing industries from space exploration to cancer therapy, though challenges in durability and scalability remain. The gecko's hierarch...

Cities worldwide are transforming governance through digital platforms, from Seoul's participatory budgeting to Barcelona's open-source legislation tools. While these innovations boost transparency and engagement, they also create new challenges around digital divides, misinformation, and privacy.

Every major AI model was trained on copyrighted text scraped without permission, triggering billion-dollar lawsuits and forcing a reckoning between innovation and creator rights. The future depends on finding balance between transformative AI development and fair compensation for the people whose work fuels it.