
TL;DR: Every major AI model was trained on copyrighted text scraped without permission, triggering billion-dollar lawsuits and forcing a reckoning between innovation and creator rights. The future depends on finding balance between transformative AI development and fair compensation for the people whose work fuels it.

Artificial intelligence already writes code, helps diagnose diseases, and composes music. But there's a problem: the data fueling this revolution may be stolen.

Every major AI model, from ChatGPT to Claude, was trained on vast oceans of text scraped from the internet without asking permission. Books, articles, social media posts, code repositories: trillions of words, many of them copyrighted. The legal grey area surrounding this practice has exploded into courtrooms worldwide, and a $1.5 billion settlement is already reshaping the future of AI development.

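Training a frontier AI model requires staggering amounts of text. Models such as GPT-4, Claude 3, and Llama 4 are trained on trillions of tokens, even after aggressive filtering, language identification, and deduplication. Meta reports a training mixture of more than 30 trillion tokens for Llama 4, while OpenAI and Anthropic have not disclosed exact figures. At the high end, that is on the order of the combined length of every book ever published.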
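
Claims about "book equivalents" are easy to get wrong, so it helps to run the numbers. The sketch below converts token counts into average-length books. Every constant is an assumption for illustration, not a figure from any model developer. It assumes about 0.75 English words per token and 90,000 words per book. It also assumes roughly 130 million books ever published, the order of magnitude Google estimated in 2010.

```python
# Every constant here is an illustrative assumption, not a published figure.
WORDS_PER_TOKEN = 0.75              # rough rule of thumb for English text
WORDS_PER_BOOK = 90_000             # assumed length of an average book
BOOKS_EVER_PUBLISHED = 130_000_000  # order of magnitude from Google's 2010 estimate


def book_equivalents(tokens: float) -> float:
    """Approximate number of average-length books that contain `tokens` tokens."""
    return tokens * WORDS_PER_TOKEN / WORDS_PER_BOOK


for trillions in (2, 30):
    books = book_equivalents(trillions * 1e12)
    ratio = books / BOOKS_EVER_PUBLISHED
    print(f"{trillions} trillion tokens ~ {books / 1e6:,.0f} million books "
          f"~ {ratio:.1f}x all books ever published")
```

Under these assumptions, 2 trillion tokens comes to about 17 million books, roughly a tenth of everything ever published. Thirty trillion tokens comes to about twice that total. Most of those tokens come from web crawls, not books.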

These datasets come from five dominant sources: web crawls, reference works like Wikipedia, books, scientific and code repositories, and social media. The largest category is web crawls, massive automated sweeps of the internet that capture everything from blog posts to news articles to forum discussions.

Common Crawl, the most widely used web scraping dataset, contains over 250 billion pages spanning two decades. It's free, comprehensive, and legally ambiguous. AI companies love it because it provides diversity and scale. Copyright holders hate it because it treats their work as raw material, freely available for harvesting.

But web scrapes alone aren't enough. To make models truly useful, developers add specialized datasets: books for narrative structure, academic papers for technical knowledge, GitHub repositories for code understanding, and licensed content from publishers and data brokers.

This hybrid approach drives capability gains but creates legal exposure. Opaque "non-public third-party" slices can add 10-40% of tokens to training datasets, often with unclear provenance. Did someone pay for that content? Was it pirated? Do the original creators even know their work is being used?

In September 2025, a San Francisco federal judge preliminarily approved a $1.5 billion settlement between AI company Anthropic and authors whose books were allegedly used without permission. The settlement covers about 465,000 pirated books, with payouts of roughly $3,000 per book.
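
A quick division shows where the per-book figure comes from. This sketch uses the article's numbers: $1.5 billion and 465,000 books. The optional fee share is a hypothetical parameter. Attorneys' fees and administration costs typically come out of a class settlement fund before anyone is paid, and the 10% used below is an assumed figure, not the actual award.

```python
def payout_per_work(total_usd: float, works: int, fee_share: float = 0.0) -> float:
    """Average payout per work after removing a (hypothetical) share for fees and costs."""
    if works <= 0:
        raise ValueError("works must be positive")
    if not 0.0 <= fee_share < 1.0:
        raise ValueError("fee_share must be in [0, 1)")
    return total_usd * (1.0 - fee_share) / works


gross = payout_per_work(1_500_000_000, 465_000)
net = payout_per_work(1_500_000_000, 465_000, fee_share=0.10)  # 10% is an assumption
print(f"gross per work: ${gross:,.0f}; net at an assumed 10% fee share: ${net:,.0f}")
```

The gross average is about $3,226 per book, so "roughly $3,000" is best read as an average before deductions.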

Judge William Alsup called it "a fair settlement," though he worried about the complexity of distributing payments to all eligible authors. The settlement marked a watershed moment: the first time an AI company agreed to pay significant compensation over its training data practices.

Anthropic maintained that AI training constitutes transformative fair use, a legal defense that's become the industry's main shield. The company argued the settlement only addressed how materials were obtained, not whether training itself infringes copyright.

But Anthropic isn't alone in the courtroom. Approximately a dozen lawsuits have been filed in California and New York against various AI companies, including OpenAI, Microsoft, Google, and Meta. Most are class actions, meaning they cover not just named plaintiffs but all authors whose works appear in training datasets.

The Authors Guild called the Anthropic settlement "a milestone in authors' fights against AI companies' theft of their works." Maria Pallante, CEO of the Association of American Publishers, was blunt: "Anthropic is hardly a special case when it comes to infringement. Every other major AI developer has trained their models on the backs of authors and publishers."

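The legal battleground centers on fair use, a U.S. doctrine that allows limited use of copyrighted material without permission for purposes like criticism, commentary, research, and transformative works. Courts weigh four factors. These are the purpose and character of the use (including whether it is transformative), the nature of the copyrighted work, the amount used, and the effect of the use on the market for the original.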

AI companies argue that training is transformative. They're not republishing books or articles, they claim. Instead, they're extracting patterns and statistical relationships from millions of texts to create something fundamentally new: a language model that can generate original content.

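Some courts have been receptive. In June 2025, ruling in the same Anthropic case, Judge Alsup found that training on lawfully acquired books was transformative fair use. He refused to extend that protection to a library of pirated copies. That ruling remains intact despite the settlement.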

But the legal landscape is nuanced. Courts distinguish between the act of training and the method of data acquisition. Even if training is fair use, obtaining copyrighted works through piracy or unauthorized scraping may still violate copyright law.

This split creates uncertainty for developers. Can you train on books from your local library? From Project Gutenberg? From a torrent site? The answer depends not just on fair use, but on how you obtained the material and whether you violated terms of service, anti-circumvention laws, or other legal protections.

Copyright holders also argue that AI training causes market harm. If AI models can generate content that competes with original works, they claim, that undermines the economic value of copyrighted material and fails the fourth factor of fair use analysis.

The copyright debate isn't just American. Different countries are taking radically different approaches, creating a patchwork of global regulations that complicate international AI development.

Japan has emerged as the most permissive jurisdiction. Article 30-4 of Japan's Copyright Act allows AI training on copyrighted works without permission, with two conditions. The purpose must not be to enjoy the expression itself, and the use must not unreasonably prejudice the copyright holder's interests. In practice, this "non-enjoyment" exception lets developers train on a wide range of content, commercial or not.

The policy reflects Japan's strategic bet on becoming an AI superpower. By reducing legal friction, Japan hopes to attract AI investment and talent. But it's controversial. Critics argue it privileges tech companies over creators and could discourage content production if creators can't control or monetize their work.

Singapore has adopted a middle path. Its Copyright Act 2021 includes an exception for computational data analysis that covers commercial as well as non-commercial use. However, it applies only if the developer has lawful access to the material being analyzed.

The European Union is taking a stricter approach. The EU AI Act requires providers of general-purpose AI models to publish a "sufficiently detailed summary" of the content used for training. They must also put in place a policy for complying with EU copyright law. This is designed to give rights holders visibility into how their work is being used and help them opt out or seek compensation.

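The EU is also enforcing existing copyright law more aggressively. The 2019 Directive on Copyright in the Digital Single Market already allows text and data mining for scientific research. For commercial purposes, it allows mining only when rights holders have not reserved their rights, for example through a machine-readable opt-out. In 2025, the European Parliament explored how these exceptions should apply to AI training. Commercial AI developers who want to use opted-out content still need licenses.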

These diverging approaches create compliance challenges. A model trained legally in Japan might violate copyright in Europe. A dataset assembled in the U.S. under fair use might expose developers to liability in jurisdictions with stricter rules.

Facing legal uncertainty, AI companies are increasingly turning to licensed data. Rather than scraping the web and hoping for the best, they're paying publishers, data brokers, and content platforms for permission to use copyrighted material.

Over 30 AI models have been trained at the scale of GPT-4 since its release, and many newer models incorporate licensed content. The economics are straightforward: licensing reduces legal risk and can improve model quality by guaranteeing access to high-value, curated datasets.

Data licensing deals are exploding in value. Reddit reportedly signed a deal with Google worth about $60 million per year to license its content for AI training. The Financial Times and The Washington Post have licensing agreements with OpenAI. The New York Times, which is suing OpenAI, has signed one with Amazon.

Video licensing is even more lucrative. AI companies are paying $1-4 per minute for video content, driven by demand for multimodal models that understand both text and visual information. The total market for video licensing could reach hundreds of millions of dollars annually.
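
To get a feel for what "hundreds of millions of dollars" means in footage, the sketch below converts an annual budget into hours of video at the $1 and $4 per-minute rates cited in this article. The $100 million budget is an assumed round number, not a reported figure.

```python
def hours_of_video(budget_usd: float, price_per_minute_usd: float) -> float:
    """Hours of footage a budget buys at a flat per-minute licensing rate."""
    if price_per_minute_usd <= 0:
        raise ValueError("price must be positive")
    return budget_usd / price_per_minute_usd / 60


BUDGET_USD = 100_000_000  # assumed round number for illustration
for price in (1.0, 4.0):  # the $1-4 per-minute range cited in the article
    print(f"${price:.0f}/min: ~{hours_of_video(BUDGET_USD, price):,.0f} hours")
```

At those rates, $100 million buys roughly 417,000 hours of video at $4 per minute, or about 1.7 million hours at $1 per minute.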

Academic publishers are also getting in on the action. Springer Nature, Elsevier, and Wiley have all signed AI training licenses, typically requiring developers to attribute sources and respect author opt-outs.

But licensing isn't a panacea. It's expensive, potentially costing tens or hundreds of millions for a single training run. It favors well-funded incumbents like OpenAI, Google, and Anthropic, who can afford to pay for premium data. Smaller startups and open-source projects often can't compete on the same terms.

Licensing also raises equity concerns. Publishers' copyright statements are evolving to explicitly reserve text and data mining rights, blocking AI training unless developers pay. This consolidates power in the hands of large publishers and could reduce access to knowledge, especially in the Global South where licensing fees are prohibitive.

Synthetic data is one promising avenue. Instead of training on copyrighted text, developers can use existing models to generate new training data. This bootstrapping approach reduces dependence on external content and sidesteps copyright issues—at least in theory.

The catch is that synthetic data inherits the biases and limitations of the models that created it. If GPT-4 was trained on copyrighted books, and you use GPT-4 to generate synthetic data for GPT-5, have you really solved the copyright problem? Critics say you've just laundered the infringement through an extra step.

Opt-out mechanisms are another approach. Some AI companies now respect robots.txt files and offer web forms where creators can request removal from training datasets. In 2025, Cloudflare added robots.txt signals aimed specifically at AI crawlers and piloted a "pay per crawl" option. Together, these let site owners block scraping or charge AI crawlers for access.
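
Mechanically, a robots.txt opt-out is just a set of rules that a well-behaved crawler checks before fetching a page; nothing enforces it. The sketch below uses Python's standard `urllib.robotparser` to show how a compliant crawler would read a file that blocks several AI-related user agents while allowing everyone else.

The agent names (GPTBot, ClaudeBot, CCBot, Google-Extended) are tokens their operators have published, but the list is illustrative and vendors change them. Google-Extended in particular is a control token honored by Google's crawlers rather than a separate crawler. The sketch does not model Cloudflare's managed rules or pay-per-crawl.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: opt out of several AI-related user agents and
# allow everything else. Check each vendor's docs for current tokens.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: ClaudeBot
User-agent: CCBot
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def parse_robots(text: str) -> RobotFileParser:
    """Build a parser from robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = parse_robots(ROBOTS_TXT)
url = "https://example.com/articles/42"
# ExampleSearchBot is a made-up agent standing in for an ordinary crawler.
for agent in ("GPTBot", "CCBot", "ExampleSearchBot"):
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent}: {verdict}")
```

GPTBot and CCBot come back blocked and the placeholder ExampleSearchBot comes back allowed. A scraper that never runs a check like `can_fetch` simply ignores the file, which is the core weakness of voluntary opt-outs.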

But opt-out systems place the burden on creators. Unless you actively monitor and block AI crawlers, your work gets used by default. Many creators don't even know their content is being scraped. And by the time you opt out, your work may already be baked into models that will be used for years.

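AI crawlers have been caught scraping sites that explicitly asked to be excluded. In 2025, Cloudflare reported that Perplexity used undeclared crawlers to evade robots.txt blocks, undermining trust in voluntary opt-out systems. If major companies can't or won't respect opt-outs, what good are they?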

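Open-source datasets offer another path forward. Projects like The Pile, C4, and RedPajama publish large text corpora and document their data provenance, which lets outsiders audit what went into them. That transparency cuts both ways. The Pile's Books3 component turned out to contain pirated books and was taken down after a rights-holder complaint.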

These datasets aren't perfect. They still include web scrapes with ambiguous copyright status, and they're often smaller and less diverse than proprietary datasets. But they provide a more transparent, ethically defensible foundation for AI development, especially for researchers and nonprofits who can't afford licensing fees.

If you're building AI systems, ignoring copyright isn't an option. The legal landscape is shifting fast, and the cost of getting it wrong is rising. Here's how to navigate the risks:

Document your data sources. Maintain detailed records of where every piece of training data came from, what license it had, and when you acquired it. This documentation is essential for defending against copyright claims and complying with emerging transparency requirements.
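
One lightweight way to keep those records is an append-only log with one structured entry per dataset or shard, stored as JSON Lines. The schema below is a hypothetical minimum: source, origin, license or agreement, acquisition date, and acquisition method. Real transparency requirements may ask for more, and all field names and example values are made up for illustration.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date


@dataclass(frozen=True)
class ProvenanceRecord:
    """One entry in a training-data provenance log (hypothetical schema)."""
    source_id: str           # internal name for the dataset or shard
    origin: str              # where it came from: a URL, vendor, or archive
    license_id: str          # license or agreement governing use
    acquired_on: date        # when the data was obtained
    acquisition_method: str  # e.g. "licensed", "public-domain", "crawl"

    def to_json(self) -> str:
        """Serialize to one JSON Lines row, with the date in ISO 8601 form."""
        row = asdict(self)
        row["acquired_on"] = self.acquired_on.isoformat()
        return json.dumps(row, sort_keys=True)

    @classmethod
    def from_json(cls, line: str) -> "ProvenanceRecord":
        """Parse a row produced by to_json."""
        row = json.loads(line)
        row["acquired_on"] = date.fromisoformat(row["acquired_on"])
        return cls(**row)


def append_records(path: str, records: list) -> None:
    """Append records to a JSON Lines log; existing entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        for record in records:
            log.write(record.to_json() + "\n")


record = ProvenanceRecord(
    source_id="news-shard-0001",
    origin="Example Publisher licensed feed",
    license_id="EXAMPLE-LICENSE-2025-01",
    acquired_on=date(2025, 3, 1),
    acquisition_method="licensed",
)
print(record.to_json())
```

Appending rather than overwriting keeps a history of what was known when. That history is what makes the log useful when defending against a claim.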

Favor licensed and open-source data. Yes, it's more expensive and less comprehensive than web scrapes. But it dramatically reduces legal risk and demonstrates good faith. If you must use scraped data, prioritize sources with permissive licenses or clear fair use arguments.

Respect opt-outs religiously. If a creator or publisher asks to be excluded, honor that request immediately and document the exclusion. The reputational and legal costs of ignoring opt-outs far outweigh any marginal performance gains.
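
Honoring opt-outs in practice means filtering before training and keeping a record of what was dropped. Here is a minimal sketch, assuming opt-outs arrive as a list of domains; the `Document` type and `filter_opt_outs` function are hypothetical. It treats subdomains of an opted-out domain as opted out too, which is a policy choice rather than a standard.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Document:
    """A training document with the URL it was collected from (hypothetical type)."""
    url: str
    text: str


def filter_opt_outs(docs, opted_out_domains):
    """Split docs into (kept, excluded_urls) using a domain-level opt-out list.

    A document is excluded when its host is an opted-out domain or a subdomain
    of one. Excluded URLs are returned so the exclusion can be logged.
    """
    blocked = {domain.lower().strip(".") for domain in opted_out_domains}
    kept, excluded_urls = [], []
    for doc in docs:
        host = (urlparse(doc.url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in blocked):
            excluded_urls.append(doc.url)
        else:
            kept.append(doc)
    return kept, excluded_urls


docs = [
    Document("https://blog.example.com/post", "sample text"),
    Document("https://example.org/page", "sample text"),
    Document("https://notexample.com/page", "sample text"),
]
kept, excluded = filter_opt_outs(docs, ["example.com"])
print("kept:", [d.url for d in kept])
print("excluded:", excluded)
```

Matching on whole domain labels, rather than substrings, keeps an opt-out for example.com from accidentally removing notexample.com.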

Conduct regular legal audits. Copyright law varies by jurisdiction and is evolving rapidly. Work with legal counsel to review your training practices, data sources, and compliance with local regulations. Don't assume that what was legal last year is still legal today.

Invest in data diversity. Relying on a single source or method creates concentration risk. If Common Crawl becomes legally untenable, or if a major publisher sues successfully, will your entire pipeline collapse? Diversify data sources to build resilience.
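
One simple way to put a number on concentration risk is the Herfindahl-Hirschman index, borrowed from antitrust economics. Square each source's share of training tokens and add the results. A value near 1.0 means one source dominates, while n equal sources give 1/n. The source names, token counts, and 50% alert threshold below are hypothetical.

```python
def source_shares(token_counts: dict) -> dict:
    """Each source's fraction of the total token count."""
    total = sum(token_counts.values())
    if total <= 0:
        raise ValueError("token counts must sum to a positive number")
    return {source: count / total for source, count in token_counts.items()}


def herfindahl_index(token_counts: dict) -> float:
    """Sum of squared shares: 1.0 for a single source, 1/n for n equal sources."""
    return sum(share * share for share in source_shares(token_counts).values())


def concentration_report(token_counts: dict, max_share: float = 0.5):
    """Return the index and the sources whose share exceeds max_share (assumed threshold)."""
    shares = source_shares(token_counts)
    flagged = sorted(source for source, share in shares.items() if share > max_share)
    return herfindahl_index(token_counts), flagged


# Hypothetical mix, in billions of tokens.
mix = {"web_crawl": 700, "licensed_news": 150, "books": 100, "code": 50}
hhi, flagged = concentration_report(mix)
print(f"HHI = {hhi:.3f}; over-concentrated sources: {flagged}")
```

For this made-up mix, the index is 0.525 and the web crawl is flagged, since it supplies 70% of tokens.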

Consider fine-tuning over pre-training. You don't always need to train a model from scratch. Fine-tuning an existing model on carefully licensed data can achieve strong performance with far less copyright risk. This approach is especially attractive for domain-specific applications.

Engage with creators and rights holders. The AI community and the creative community don't have to be adversaries. Proactive outreach, transparent communication, and fair compensation can build trust and reduce litigation. Some publishers and author groups are willing to negotiate reasonable licensing terms if you approach them respectfully.

Plan for compliance costs. Licensing, legal counsel, and technical workarounds aren't free. Budget for them as a core cost of AI development, not an afterthought. Companies that scrimp on compliance today may face crippling settlements tomorrow.

Where is all this headed? The next five years will likely bring more clarity, but also more complexity.

Legislation is coming. Both the U.S. Congress and the European Parliament are considering AI-specific copyright reforms. These could codify fair use for training, mandate licensing, or create new rights for creators to control how their work is used. Whatever the outcome, the era of regulatory ambiguity is ending.

International harmonization may happen—or may not. The divergence between Japan, the EU, and the U.S. creates competitive pressures. Countries with lax copyright rules may attract AI investment, while those with strict rules protect creators but risk losing tech leadership. We could see a race to the bottom, or we could see international treaties that establish baseline protections.

Compensation models will evolve. Fixed licensing fees are just the beginning. Some advocates are pushing for ongoing royalties, where creators get paid every time a model uses their work. Others propose collective licensing schemes, similar to music rights organizations, where a central body negotiates rates and distributes payments. The per-minute pricing of AI video licensing suggests that fine-grained payments tied to how much content is used are technically feasible and economically viable.
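
Whatever the scheme, a collective body eventually has to turn a pool of money and a set of usage counts into individual payouts. The sketch below assumes per-creator usage counts are already known, which is the genuinely hard part. It splits a pool pro rata in whole cents using the largest-remainder method, so rounding never creates or loses money. Creator names and amounts are hypothetical.

```python
def distribute_pool(pool_cents: int, usage: dict) -> dict:
    """Split a royalty pool pro rata by usage count, paying whole cents.

    Largest-remainder method: everyone gets the floor of their exact share,
    then leftover cents go to the largest fractional remainders, so the
    payouts always sum exactly to pool_cents.
    """
    total_usage = sum(usage.values())
    if total_usage <= 0:
        raise ValueError("usage counts must sum to a positive number")
    payouts = {}
    remainders = []
    for creator, count in usage.items():
        # Exact integer arithmetic: floor and remainder of pool * count / total.
        share, remainder = divmod(pool_cents * count, total_usage)
        payouts[creator] = share
        remainders.append((remainder, creator))
    leftover = pool_cents - sum(payouts.values())
    # Largest remainders first; ties broken alphabetically for determinism.
    for _, creator in sorted(remainders, key=lambda r: (-r[0], r[1]))[:leftover]:
        payouts[creator] += 1
    return payouts


# Hypothetical example: a $1,000.00 pool split among three creators.
print(distribute_pool(100_000, {"author_a": 5, "author_b": 3, "author_c": 1}))
```

Working in integer cents avoids floating-point drift. The largest-remainder step guarantees the payouts add up exactly to the pool.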

Fair use precedents will solidify. Right now, courts are all over the map on whether AI training is fair use. As more cases reach appellate courts and supreme courts, we'll get clearer precedents. Those precedents will shape the industry for decades, determining whether training is a right, a privilege, or something in between.

Technical solutions will improve. Synthetic data, differential privacy, and federated learning could reduce reliance on copyrighted content. Watermarking and content tracking technologies might let creators monitor and monetize AI usage more effectively. The technical and legal landscapes will co-evolve.

Power dynamics will shift. The current model—where a handful of AI giants control most frontier models—may not last. If licensing costs skyrocket, we could see more open-source collaboration, or more concentration among companies wealthy enough to pay. If regulations favor small players with research exceptions, we might see a new wave of innovation from startups and universities.

The copyright crisis in AI isn't just a legal problem. It's a question about what kind of future we want to build.

On one hand, generative AI has transformative potential. It can democratize creativity, accelerate scientific discovery, and augment human capabilities in ways we're only beginning to explore. Restricting training data too severely could stifle innovation and concentrate power in the hands of incumbents who already have large datasets.

On the other hand, creators deserve respect and compensation. Writing a book, coding a library, or producing a video requires skill, time, and often financial investment. If AI companies can appropriate that work without permission or payment, they're extracting value from creators without giving anything back.

The challenge is finding a balance. A legal framework that allows transformative uses while ensuring fair compensation. Business models that reward both AI developers and content creators. Technical systems that respect opt-outs while maintaining dataset quality.

We're not there yet. The lawsuits, settlements, and regulatory debates are messy and contentious. But they're also necessary. The decisions we make today will determine whether AI becomes a tool for shared prosperity or another mechanism for extracting value from the many to benefit the few.

The future of AI isn't just about better algorithms or faster chips. It's about building systems that respect the people whose knowledge and creativity made those algorithms possible. That future is still being written—one lawsuit, one license, and one line of code at a time.

The Bussard Ramjet, proposed in 1960, would scoop interstellar hydrogen with a massive magnetic field to fuel fusion engines. Recent studies reveal fatal flaws: magnetic drag may exceed thrust, and proton fusion loses a billion times more energy than it generates.

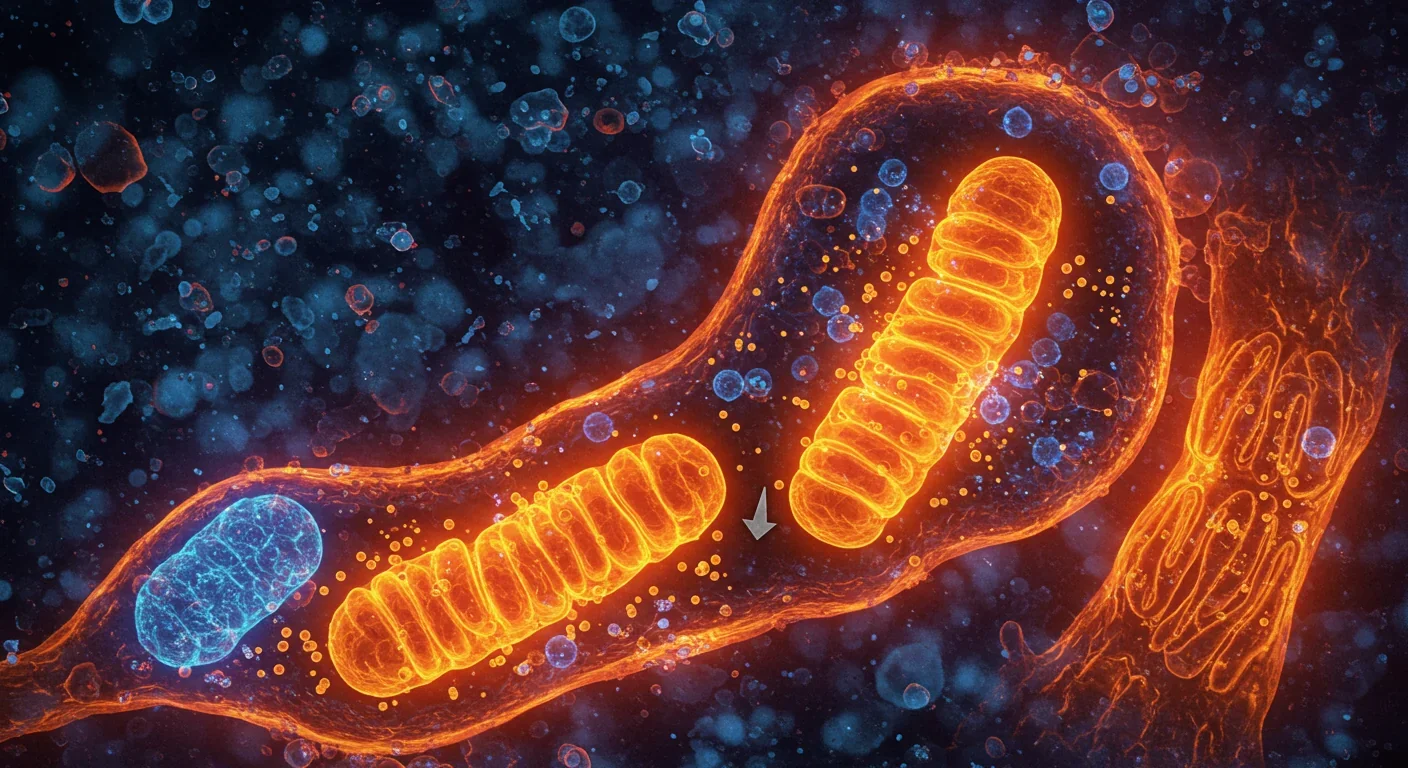

Mitophagy—your cells' cleanup mechanism for damaged mitochondria—holds the key to preventing Parkinson's, Alzheimer's, heart disease, and diabetes. Scientists have discovered you can boost this process through exercise, fasting, and specific compounds like spermidine and urolithin A.

Shifting baseline syndrome explains why each generation accepts environmental degradation as normal—what grandparents mourned, you take for granted. From Atlantic cod populations that crashed by 95% to Arctic ice shrinking by half since 1979, humans normalize loss because we anchor expectations to our childhood experiences. This amnesia weakens conservation policy and sets inadequate recovery targets. But tools exist to reset baselines: historical data, long-term monitoring, indigenous knowle...

Social media has created an 'authenticity paradox' where 5.07 billion users perform carefully curated spontaneity. Algorithms reward strategic vulnerability while psychological pressure to appear authentic causes creator burnout and mental health impacts across all users.

Scientists have decoded how geckos defy gravity using billions of nanoscale hairs that harness van der Waals forces—the same weak molecular attraction that now powers climbing robots on the ISS, medical adhesives for premature infants, and ice-gripping shoe soles. Twenty-five years after proving the mechanism, gecko-inspired technologies are quietly revolutionizing industries from space exploration to cancer therapy, though challenges in durability and scalability remain. The gecko's hierarch...

Cities worldwide are transforming governance through digital platforms, from Seoul's participatory budgeting to Barcelona's open-source legislation tools. While these innovations boost transparency and engagement, they also create new challenges around digital divides, misinformation, and privacy.

Every major AI model was trained on copyrighted text scraped without permission, triggering billion-dollar lawsuits and forcing a reckoning between innovation and creator rights. The future depends on finding balance between transformative AI development and fair compensation for the people whose work fuels it.