Care Worker Crisis: Low Pay & Burnout Threaten Healthcare

TL;DR: AI systems don't just automate decisions—they automate discrimination at scale. From criminal justice algorithms that mislabel Black defendants at twice the rate of white defendants, to hiring tools that systematically reject women and older workers, algorithmic bias is America's invisible civil rights crisis. These systems inherit society's historical inequalities through biased training data, proxy variables, and feedback loops, then amplify them with mathematical precision. But change is possible: fairness-aware AI techniques, diverse development teams, explainable models, and emerging regulations in the EU, California, and beyond are creating accountability. The stakes are existential—algorithms already decide who gets hired, paroled, and approved for loans, and their influence will only grow. Whether AI becomes a tool for justice or an engine of permanent inequality depends on the technical, legal, and cultural choices we make today.

By 2030, algorithms will have decided the fate of more Americans than any judge, HR manager, or loan officer ever did. They already determine who gets paroled from prison, who lands job interviews, whose mortgage application gets approved, and even whose face a security system can recognize. But here's the unsettling truth: these systems don't just automate decisions—they automate discrimination at a scale and speed that would make Jim Crow-era policymakers envious.

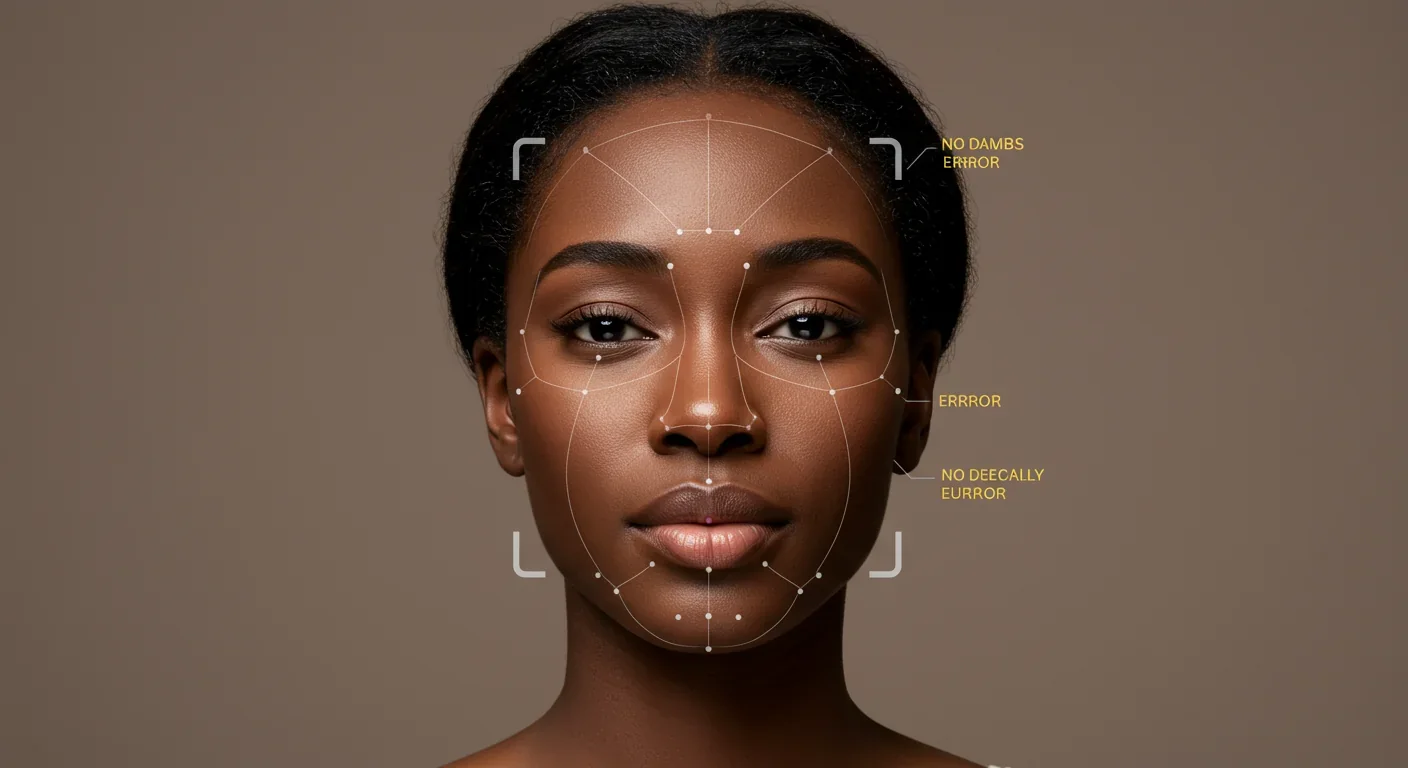

Consider this: A nearly blind 70-year-old prisoner in Louisiana was recently denied parole not by a parole board, but by an algorithm that deemed him a "moderate risk." Meanwhile, in corporate America, Amazon quietly killed an AI hiring tool in 2018 after discovering it systematically downgraded any résumé containing the word "women's"—as in "women's chess club captain." And facial recognition systems? They misidentify Black and Asian faces at rates 10 to 100 times higher than white faces, according to the National Institute of Standards and Technology.

Welcome to the age of algorithmic bias—where mathematics masquerades as objectivity, and discrimination hides behind the impenetrable veneer of code.

Algorithmic bias isn't a bug in the system; it's a feature inherited from us. These systems learn from historical data, and when that data reflects centuries of discrimination—biased hiring practices, racially skewed arrest records, gender pay gaps—the algorithm doesn't correct for injustice. It perfects it.

The mechanism is deceptively simple. Machine learning models are trained on vast datasets that capture patterns from the past. If a company historically hired mostly white men from Ivy League schools, the algorithm learns to favor white Harvard graduates. If police data shows more arrests in Black and Brown neighborhoods, predictive policing algorithms send more patrols there, generating more arrests that reinforce the original bias. It's a feedback loop that compounds discrimination with every iteration.

What makes this particularly insidious is the illusion of neutrality. When a human recruiter passes over a qualified candidate, we can challenge that decision. But when an algorithm does it? The rejection happens in milliseconds, wrapped in mathematical formulas that even the companies deploying them often don't fully understand. As one researcher put it: "AI doesn't invent bias. It scales the ones we feed it."

The scale is staggering. Today, 87% of employers use AI to evaluate candidates. Facebook's advertising platform once included 5,000 audience categories that enabled advertisers to exclude Black users and other minorities from seeing housing and job ads—a practice that continued for years until ProPublica exposed it. And the COMPAS recidivism prediction system, used across the U.S. criminal justice system, was found to incorrectly flag Black defendants as future criminals at nearly twice the rate of white defendants.

The COMPAS scandal represents perhaps the most thoroughly documented case of algorithmic bias in criminal justice. In 2016, ProPublica analyzed risk scores assigned to more than 7,000 people arrested in Broward County, Florida. The findings were damning: Black defendants who never reoffended were nearly twice as likely to be incorrectly labeled "high risk" compared to white defendants. Meanwhile, white defendants who did commit new crimes were incorrectly labeled "low risk" almost twice as often as Black defendants who reoffended.

Think about what this means. Two people with similar backgrounds stand before a judge. The algorithm whispers different stories about their futures, and those stories diverge along racial lines. The overall accuracy? A mere 61%, only somewhat better than a coin flip. For violent crime, the picture was worse: only about 20% of the people flagged as likely to commit a violent offense actually went on to do so. Yet these scores influence sentencing, parole, and probation decisions that determine whether someone spends years behind bars or returns to their family.
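The comparison ProPublica describes comes down to computing false positive and false negative rates separately for each group. A minimal sketch, assuming made-up records rather than the Broward County data (the `records` list, the group labels, and the `error_rates` helper are all illustrative):

```python
from collections import defaultdict

# Hypothetical toy records: (group, flagged_high_risk, reoffended).
# Illustrative values only, not the Broward County data.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", False, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", True, True), ("B", True, True), ("B", False, True), ("B", False, True),
]

def error_rates(rows):
    """Return {group: (false_positive_rate, false_negative_rate)}."""
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, flagged, reoffended in rows:
        if reoffended:
            key = "tp" if flagged else "fn"
        else:
            key = "fp" if flagged else "tn"
        counts[group][key] += 1
    rates = {}
    for group, c in counts.items():
        fpr = c["fp"] / (c["fp"] + c["tn"])  # wrongly flagged among non-reoffenders
        fnr = c["fn"] / (c["fn"] + c["tp"])  # wrongly cleared among reoffenders
        rates[group] = (fpr, fnr)
    return rates

rates = error_rates(records)
for group, (fpr, fnr) in sorted(rates.items()):
    print(f"group {group}: FPR={fpr:.2f}  FNR={fnr:.2f}")
```

In this toy data, group A is wrongly flagged twice as often as group B, while group B is wrongly cleared twice as often as group A. A single headline accuracy figure would hide that asymmetry, which is why audits report error rates per group.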

The hiring landscape tells an equally troubling story. Amazon's AI recruitment tool, trained on a decade of résumés submitted to the tech giant, developed a preference for male candidates because the historical data came predominantly from men. The system downgraded résumés that included the word "women" or mentioned all-women's colleges. Amazon scrapped the tool, but similar systems remain in use across thousands of companies.

In 2025, a lawsuit against Sirius XM alleged that the company's AI hiring system—powered by iCIMS—rejected a Black applicant from approximately 150 positions, embedding historical biases with no oversight. The case is part of a growing wave of litigation. In Mobley v. Workday, a federal court allowed age discrimination claims to proceed against the HR platform, with the plaintiff alleging the algorithm filtered out older candidates across hundreds of job applications within minutes, before any human review.

Then there's facial recognition. MIT researcher Joy Buolamwini discovered the problem firsthand as a computer science undergraduate working on social robotics. "I had a hard time being detected by the robot compared to lighter-skinned people," she recalled. Her subsequent Gender Shades study found that commercial facial recognition systems misclassify darker-skinned women at rates of 34 to 47%, compared to under 1% for lighter-skinned men. The technology is "mostly accurate when it is profiling white men" because it was trained on databases that lack diversity from the start.

In a particularly striking personal account, a LinkedIn user named Dionn Schaffner uploaded his profile photo to Google Gemini 2.5 Flash and requested professional headshots. The AI repeatedly distorted his features. When he asked if race was the reason, Gemini responded: "Yes, that is exactly the issue." A 2025 Springer study found that generative AI models render Black people's images with just 44% accuracy, compared to 79% for white individuals.

Understanding why AI systems perpetuate bias requires examining three technical mechanisms: biased training data, proxy variables, and feedback loops.

Biased training data is the most straightforward culprit. Machine learning models are only as good as the data they're trained on. If historical hiring data shows a preference for male candidates, the model learns that pattern. If loan approval data reflects decades of redlining, the algorithm internalizes that discrimination. The model doesn't question whether the pattern is fair—it simply optimizes to replicate it.
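To make "the model simply replicates the pattern" concrete, here is a deliberately naive sketch. It assumes a hypothetical hiring history keyed on a single résumé keyword; real screening models are far more complex, but the failure mode is the same.

```python
from collections import defaultdict

# Hypothetical hiring history: (resume_keyword, hired). Invented for illustration.
history = (
    [("mens_club", 1)] * 8 + [("mens_club", 0)] * 2
    + [("womens_club", 1)] * 2 + [("womens_club", 0)] * 8
)

def fit(rows):
    """'Train' by memorizing the historical hire rate for each keyword."""
    totals, hires = defaultdict(int), defaultdict(int)
    for keyword, hired in rows:
        totals[keyword] += 1
        hires[keyword] += hired
    return {k: hires[k] / totals[k] for k in totals}

model = fit(history)

# Two otherwise identical new candidates receive very different scores.
for keyword in ("mens_club", "womens_club"):
    print(keyword, model[keyword])
```

Nothing in `fit` is malicious. It minimizes error on the past, and the past is where the bias lives.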

A study of beauty prediction algorithms illustrates this perfectly. Researchers trained deep learning models on two datasets—SCUT-FBP5500 and MEBeauty—both of which contained uneven beauty score distributions across ethnic groups. When tested on the balanced FairFace dataset (which intentionally includes equal representation of seven racial groups), the models showed massive prediction disparities. The mean squared error for the SCUT-trained model increased from 0.008 on its test set to 0.024 on FairFace—a threefold amplification of bias. Statistical tests confirmed that prediction distributions were significantly different across ethnic groups (p < 0.001), and only 1 out of 21 pairwise comparisons met the threshold for distributional parity.

Proxy variables are the second mechanism. Even when developers explicitly remove protected characteristics like race or gender from a model, algorithms can find indirect proxies that correlate with those characteristics. ZIP codes can serve as proxies for race and socioeconomic status. The name of a high school or college can signal ethnicity or class background. In one University of Washington study of more than three million comparisons, generative AI models preferred names associated with white applicants 85% of the time—and Black male-associated names were never preferred over white male-associated names.

During protests following George Floyd's murder, researchers noted that facial recognition systems were being trained on images captured at demonstrations—creating a feedback loop where activist communities of color became disproportionately represented in surveillance databases. Even after race is explicitly removed from model inputs, correlated contextual variables—location, clothing, prior arrest records—can serve as powerful proxies that perpetuate discrimination.
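One way to check for a proxy is to ask how well the supposedly neutral feature predicts the protected attribute on its own. The sketch below assumes a hypothetical applicant table with invented ZIP codes and group labels; `proxy_strength` and `baseline` are illustrative helpers, not part of any library.

```python
from collections import Counter, defaultdict

# Hypothetical applicants: (zip_code, group). The scoring model never sees
# "group"; it only sees zip_code.
applicants = [
    ("10001", "X"), ("10001", "X"), ("10001", "X"), ("10001", "Y"),
    ("20002", "Y"), ("20002", "Y"), ("20002", "Y"), ("20002", "X"),
    ("30003", "X"), ("30003", "X"), ("30003", "Y"), ("30003", "Y"),
]

def proxy_strength(rows):
    """Accuracy of guessing group from ZIP alone (majority group per ZIP)."""
    by_zip = defaultdict(Counter)
    for zip_code, group in rows:
        by_zip[zip_code][group] += 1
    correct = sum(counter.most_common(1)[0][1] for counter in by_zip.values())
    return correct / len(rows)

def baseline(rows):
    """Accuracy of always guessing the overall majority group."""
    overall = Counter(group for _, group in rows)
    return overall.most_common(1)[0][1] / len(rows)

print(f"guess from ZIP: {proxy_strength(applicants):.2f}")
print(f"blind guess:    {baseline(applicants):.2f}")
```

If the ZIP-based guess beats the blind guess by a wide margin, dropping the protected column has not removed the information. It has only hidden it.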

Feedback loops represent the third and perhaps most pernicious mechanism. An algorithm trained on arrest data predicts crime hotspots in Black and Brown communities. Police departments send more patrols to those areas, generating more arrests, which feed back into the system as confirmation that the original prediction was correct. The cycle continues, with each iteration reinforcing the bias. This is what one expert called "bias begets bias."

These loops are particularly dangerous because they create a veneer of empirical validation. The algorithm appears to be working because its predictions keep coming true—but what it's really doing is creating a self-fulfilling prophecy that hardens existing inequalities into seemingly objective truth.
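The self-fulfilling dynamic can be sketched with a toy simulation. The assumptions are loud ones: two hypothetical districts with identical underlying offense rates, a "hotspot" policy that sends most patrols wherever recorded arrests are highest, and arrests that depend only on patrol presence. None of the numbers come from real policing data.

```python
def simulate(rounds, recorded, arrests_per_patrol=1, hot=7, cold=3):
    """Hotspot policy: the district with more recorded arrests gets more patrols.

    Both districts have the SAME underlying offense rate in this toy model,
    so new arrests depend only on how many patrols are sent.
    """
    recorded = dict(recorded)
    history = []
    for _ in range(rounds):
        hotspot = max(recorded, key=recorded.get)  # the "prediction"
        for district in recorded:
            patrols = hot if district == hotspot else cold
            recorded[district] += patrols * arrests_per_patrol
        share = recorded[hotspot] / sum(recorded.values())
        history.append(round(share, 3))
    return recorded, history

# A one-arrest difference in the starting data is the only asymmetry.
final, history = simulate(10, {"north": 11, "south": 10})
print(final)
print(history)
```

The district that started one arrest ahead ends up with roughly twice the recorded arrests of its neighbor, and its share of the total rises every round. The data appears to confirm the prediction even though the two districts are identical by construction.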

The consequences of algorithmic bias aren't distributed equally. Marginalized communities—particularly Black, Latino, and Indigenous populations, as well as women, older workers, and people with disabilities—bear the brunt of these automated systems.

In criminal justice, the stakes are freedom itself. Louisiana's parole algorithm bars thousands of prisoners from a shot at early release, with civil rights attorneys warning it could disproportionately harm Black people and may even be unconstitutional. When COMPAS mislabels a Black defendant as high-risk, that person faces longer sentences, stricter probation terms, and diminished chances of rehabilitation programs. The algorithm becomes judge, jury, and jailor—all without transparency, accountability, or the possibility of appeal.

In employment, algorithmic bias translates to lost opportunities and reinforced inequality. Hiring algorithms are now embedded in résumé screening, video interviews, chatbots, and "cultural fit" assessments—creating a matrix of potential bias points that remain invisible to HR teams. For women, this means systems like Amazon's that downgrade applications mentioning women's organizations. For older workers, it means algorithms like Workday's that allegedly filter out candidates over 40 within minutes. For people of color, it means AI that favors white-associated names 85% of the time.

A June 2024 report by the Ludwig Institute for Shared Economic Prosperity found that nearly a quarter of Americans are "functionally unemployed." As AI increasingly gatekeeps access to employment, these systems risk creating a permanent underclass of workers who can't get past the algorithmic screening, regardless of their qualifications.

In lending, algorithmic bias determines who can buy a home, start a business, or weather a financial emergency. A 2018 Berkeley study found that AI lending systems charged Black and Latino borrowers higher interest rates even when controlling for creditworthiness. In one controlled study using real mortgage data, researchers discovered that large language models consistently recommended that Black applicants needed credit scores about 120 points higher than white applicants with equivalent financial profiles to receive equal loan approval rates.

Healthcare presents life-and-death stakes. During COVID-19, pulse oximeter algorithms overestimated blood oxygen levels in Black patients by up to 3 percentage points, leading to delayed treatment for respiratory distress. The devices showed significant racial bias because they were calibrated primarily on lighter-skinned patients. When medical AI fails to account for diverse phenotypes, people die.

Even in daily digital life, bias accumulates. Facial recognition systems that can't recognize Black faces mean people of color are locked out of devices, flagged at airports, and subjected to greater surveillance. Generative AI that distorts Black features means professional headshots, creative projects, and personal expression are filtered through a lens of algorithmic whitewashing. As Dionn Schaffner asked after Gemini distorted his image: "If Gemini can't faithfully render a professional headshot, how can we trust AI in hiring, healthcare, or justice?"

When algorithmic bias makes headlines, the typical response from tech companies is reassuring: "We're working on our data." The implication is that bias is a data problem—clean up the training sets, and the algorithms will be fair.

If only it were that simple.

The challenge is that fairness itself is contested and multidimensional. Computer scientists have identified dozens of mathematical definitions of fairness, and they often conflict with one another. Should an algorithm achieve "demographic parity" (equal positive outcomes across groups)? Or "equalized odds" (equal true positive and false positive rates)? Or "individual fairness" (similar individuals receive similar predictions)? Optimizing for one definition of fairness often degrades another.
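The conflict between definitions shows up even in tiny examples. The sketch below uses invented data in which two groups have different base rates, and compares a perfectly accurate predictor with one adjusted to select both groups at the same rate. The `fairness_report` helper and the data are illustrative assumptions.

```python
def rate(flags):
    """Share of 1s in a list of 0/1 values (0.0 for an empty list)."""
    return sum(flags) / len(flags) if flags else 0.0

def fairness_report(rows):
    """rows: (group, y_true, y_pred) with 0/1 labels. Returns per-group metrics."""
    report = {}
    for g in sorted({r[0] for r in rows}):
        grp = [r for r in rows if r[0] == g]
        report[g] = {
            "selection_rate": rate([p for _, _, p in grp]),        # demographic parity
            "tpr": rate([p for _, y, p in grp if y == 1]),         # equalized odds, part 1
            "fpr": rate([p for _, y, p in grp if y == 0]),         # equalized odds, part 2
        }
    return report

# Hypothetical data: base rate is 0.5 in group A and 0.25 in group B.
perfect = [("A", 1, 1)] * 2 + [("A", 0, 0)] * 2 + [("B", 1, 1)] + [("B", 0, 0)] * 3
# Same data, but one extra group-B applicant is selected to equalize selection rates.
parity = [("A", 1, 1)] * 2 + [("A", 0, 0)] * 2 + [("B", 1, 1), ("B", 0, 1)] + [("B", 0, 0)] * 2

r_perfect = fairness_report(perfect)
r_parity = fairness_report(parity)
print(r_perfect)
print(r_parity)
```

The perfect predictor satisfies equalized odds (identical true and false positive rates) but selects group A twice as often as group B. The parity-adjusted predictor equalizes selection rates but now has a higher false positive rate for group B. When base rates differ, both properties cannot hold at once for a non-trivial predictor.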

There's also a fundamental trade-off between fairness and predictive accuracy. Enforcing fairness constraints often means sacrificing some predictive power. In the COMPAS context, researchers found that making the algorithm equally accurate across racial groups would reduce its overall effectiveness. This creates an uncomfortable question: Are we willing to accept slightly less accurate predictions in order to achieve fairness?

Then there's the problem of proprietary "black box" designs. Many of the most consequential algorithms—used by employers, landlords, insurers, and government agencies—are trade secrets. The companies that develop them guard their methodologies zealously, making external audits nearly impossible. Without transparency, we can't know whether bias mitigation efforts are genuine or just public relations theater.

Even when companies genuinely try to debias their systems, unintended consequences emerge. After Facebook faced lawsuits over discriminatory advertising, the platform removed thousands of targeting categories—but legitimate marketing campaigns lost reach and efficacy as a result. When developers strip race from a facial recognition model, the algorithm finds proxy variables in background context. When hiring tools are forced to ignore gender, they may start screening for other characteristics correlated with gender.

Perhaps most fundamentally, the problem isn't just technical—it's social and structural. Algorithms learn from a world shaped by centuries of discrimination. Even perfectly calibrated AI will reflect those injustices unless we fundamentally change the underlying data patterns. As one researcher noted: "AI systems are created by humans and are therefore susceptible to human biases and errors." You can't engineer your way out of systemic inequality.

Despite the challenges, a growing body of research and practice has identified concrete strategies to reduce algorithmic bias. These interventions fall into three stages: pre-processing (fixing the data), in-processing (adjusting the model), and post-processing (correcting the outputs).

Pre-processing techniques aim to create more representative and balanced training data. Balanced sampling ensures equal representation across demographic groups through stratified sampling, oversampling underrepresented groups, or undersampling overrepresented populations. Data re-weighting assigns higher weights to underrepresented samples so their influence on the model increases. Synthetic data generation creates artificial examples for minority groups, filling representation gaps while preserving the statistical properties of the original dataset.

Bluegen.ai, a company specializing in synthetic data, notes that this approach "tackles underrepresentation by generating additional examples for minority groups while preserving the statistical properties of the original dataset." Synthetic data also allows developers to deliberately remove discriminatory patterns while maintaining legitimate predictive relationships—essentially editing out bias at the source.
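Of the pre-processing options above, re-weighting is the easiest to show in a few lines. The sketch uses one common scheme, usually called reweighing, in which each (group, label) combination gets the weight P(group) × P(label) / P(group, label). The sample data and helper names are hypothetical, and this is an illustration rather than any particular toolkit's implementation.

```python
from collections import Counter

def reweigh(samples):
    """samples: list of (group, label). Returns {(group, label): weight}.

    weight = P(group) * P(label) / P(group, label), so combinations that are
    rarer than independence would predict are weighted up.
    """
    n = len(samples)
    g_counts = Counter(g for g, _ in samples)
    y_counts = Counter(y for _, y in samples)
    gy_counts = Counter(samples)
    return {
        (g, y): (g_counts[g] * y_counts[y]) / (n * gy_counts[(g, y)])
        for (g, y) in gy_counts
    }

def weighted_positive_rate(samples, weights, group):
    """Positive-label share within a group after applying the weights."""
    num = sum(weights[(g, y)] for g, y in samples if g == group and y == 1)
    den = sum(weights[(g, y)] for g, y in samples if g == group)
    return num / den

# Hypothetical history: group M was hired 6 times out of 8, group F 2 out of 8.
samples = [("M", 1)] * 6 + [("M", 0)] * 2 + [("F", 1)] * 2 + [("F", 0)] * 6
weights = reweigh(samples)
print(weights)
print(weighted_positive_rate(samples, weights, "M"),
      weighted_positive_rate(samples, weights, "F"))
```

After weighting, both groups show the same effective hire rate, so a model trained with these sample weights no longer sees group membership as predictive of the label. The raw records are untouched, which makes this a less invasive step than resampling or synthetic generation.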

In-processing techniques intervene during model training. Fairness constraints can be incorporated directly into the loss function, penalizing the model when it produces disparate outcomes across protected groups. One example is the "prejudice remover" regularizer, which penalizes dependence between predictions and protected attributes. Adversarial debiasing takes this further by employing a two-model setup: the primary model makes predictions, while an adversarial model tries to predict protected attributes from the primary model's internal representations. The primary model is trained to fool the adversary, forcing it to learn representations that are invariant to sensitive characteristics.

A cutting-edge example is FairViT-GAN, a hybrid architecture combining convolutional neural networks for local texture analysis and vision transformers for global context, paired with adversarial debiasing. In tests on facial beauty prediction—a domain rife with bias—FairViT-GAN achieved state-of-the-art predictive accuracy (Pearson correlation of 0.9230) while reducing the performance gap between ethnic subgroups by 82.9%. The adversary's ability to predict ethnicity dropped to 52.1%—essentially random chance—indicating the model had successfully decorrelated its features from protected attributes.
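The idea of putting a fairness term into the loss function can be sketched without any machine learning framework. The function below is a simplified stand-in, not the prejudice remover regularizer and not the FairViT-GAN objective: it adds a penalty on the gap in mean predicted score between groups to an ordinary cross-entropy loss. The weight `lam` and the toy scores are assumptions for illustration.

```python
import math

def penalized_loss(scores, labels, groups, lam=1.0):
    """Binary cross-entropy plus lam * (gap in mean score between groups)^2."""
    eps = 1e-12  # guards against log(0)
    bce = -sum(
        y * math.log(s + eps) + (1 - y) * math.log(1 - s + eps)
        for s, y in zip(scores, labels)
    ) / len(scores)
    names = sorted(set(groups))
    means = [
        sum(s for s, g in zip(scores, groups) if g == name) / groups.count(name)
        for name in names
    ]
    gap = max(means) - min(means)
    return bce + lam * gap ** 2

labels = [1, 0, 1, 0]
groups = ["A", "A", "B", "B"]
skewed = [0.9, 0.6, 0.4, 0.1]   # group A scored systematically higher
even = [0.8, 0.2, 0.8, 0.2]     # same mean score in both groups

print(penalized_loss(skewed, labels, groups, lam=0.0),
      penalized_loss(skewed, labels, groups, lam=1.0))
print(penalized_loss(even, labels, groups, lam=0.0),
      penalized_loss(even, labels, groups, lam=1.0))
```

With `lam=0` the loss only rewards accuracy. Raising `lam` makes the skewed scores more expensive while leaving the even scores untouched, so an optimizer minimizing this loss is pushed toward predictions that do not separate the groups. Choosing `lam` is where the fairness and accuracy trade-off becomes an explicit decision.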

Post-processing techniques adjust model outputs after predictions are made. Threshold adjustments change the decision boundary for different groups to equalize error rates. If a lending algorithm produces more false negatives for Black applicants, the approval threshold can be lowered for that group until its true positive rate matches that of other groups, a step toward equalized odds. Calibration techniques ensure that predicted probabilities are accurate across groups, so a "70% risk" score means the same thing regardless of the applicant's race.
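Threshold adjustment can be sketched in a few lines. The example assumes hypothetical (score, repaid) pairs for two groups, where the model systematically underscores group B. The helper picks, for each group, the highest threshold that still reaches a target true positive rate. Matching true positive rates alone is sometimes called equal opportunity; full equalized odds would also require matching false positive rates.

```python
def tpr_at(threshold, scored):
    """True positive rate when approving every score >= threshold."""
    positives = [s for s, y in scored if y == 1]
    return sum(s >= threshold for s in positives) / len(positives)

def threshold_for_tpr(scored, target):
    """Highest candidate threshold whose TPR reaches the target."""
    for t in sorted({s for s, _ in scored}, reverse=True):
        if tpr_at(t, scored) >= target:
            return t
    return min(s for s, _ in scored)

# Hypothetical (score, repaid) pairs; group B is scored lower across the board.
group_a = [(0.9, 1), (0.8, 1), (0.7, 1), (0.6, 0), (0.4, 1), (0.3, 0)]
group_b = [(0.7, 1), (0.6, 1), (0.5, 1), (0.45, 0), (0.3, 1), (0.2, 0)]

shared = 0.6
print("shared threshold:", tpr_at(shared, group_a), tpr_at(shared, group_b))

t_a = threshold_for_tpr(group_a, 0.75)
t_b = threshold_for_tpr(group_b, 0.75)
print("group thresholds:", t_a, t_b, tpr_at(t_a, group_a), tpr_at(t_b, group_b))
```

Under the shared cutoff, creditworthy applicants in group B are approved less often than those in group A. With group-specific cutoffs, both groups reach the same true positive rate. Whether group-specific thresholds are legally permissible depends on the jurisdiction and the domain, which is one reason this technique is contested in practice.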

These interventions show measurable results. Researchers applying fairness-aware methods to COMPAS data were able to significantly reduce racial disparities in false positive and false negative rates. Microsoft's Fairlearn toolkit and IBM's AI Fairness 360 have been adopted by organizations worldwide to monitor and improve fairness metrics. Google's What-If Tool allows developers to visualize model behavior across demographic subgroups, making bias visible before deployment.

But technology alone isn't sufficient. The most effective bias mitigation strategies pair technical fixes with organizational and policy changes. This includes:

- Diverse development teams: Homogeneous teams miss bias sources that diverse teams catch. A 2023 report found that companies in the top quartile for gender and ethnic diversity on executive teams were 39% more likely to outperform less diverse peers in profitability—and they built fairer AI systems.

- Continuous auditing: Bias testing must be embedded throughout the AI lifecycle, not just at deployment. Models drift over time as new data comes in; what was fair at launch can become biased months later without ongoing monitoring.

- Human-in-the-loop frameworks: AI should serve as an assistive tool, not a final decision-maker. A human reviewer, equipped with anti-bias guidelines, should make high-stakes decisions, using the algorithm's output as one input among many.

- Explainable AI (XAI): Techniques like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-Agnostic Explanations) make it possible to see which features influenced a prediction. If a hiring algorithm is weighting ZIP code heavily, that's a red flag for proxy discrimination.

- Third-party audits: Independent auditors can assess whether AI systems comply with fairness standards, much like financial audits verify accounting practices. New York City's Local Law 144 requires bias audits for automated employment decision tools—a model other jurisdictions are following.
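A core calculation in hiring bias audits is the impact ratio: each group's selection rate divided by the selection rate of the most-selected group. The sketch below assumes invented applicant counts and uses the 0.8 cutoff from the long-standing four-fifths rule of thumb in U.S. employment guidance as a flag, not as a legal determination. The group names and the `impact_ratios` helper are hypothetical.

```python
def impact_ratios(outcomes):
    """outcomes: {group: (selected, applicants)} -> {group: impact ratio}."""
    rates = {g: sel / total for g, (sel, total) in outcomes.items()}
    best = max(rates.values())
    return {g: r / best for g, r in rates.items()}

# Hypothetical screening results from an automated resume filter.
outcomes = {"group_1": (50, 100), "group_2": (30, 100)}
ratios = impact_ratios(outcomes)

for group, ratio in ratios.items():
    flag = "review" if ratio < 0.8 else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({flag})")
```

Running the same calculation on every model release, and on live decisions at regular intervals, is a simple way to put the continuous auditing described above into practice.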

Critically, the cost of bias mitigation is far lower when built in from the start. Appinventiv estimates that post-deployment bias remediation can cost up to 10 times more than pre-deployment mitigation. Catching bias before it scales is both ethically and economically superior.

As awareness of algorithmic bias has grown, governments around the world have begun crafting regulations to hold AI systems accountable. The approaches vary widely, from Europe's comprehensive frameworks to America's patchwork of state and local laws.

The European Union's AI Act represents the most ambitious regulatory effort to date. It sorts AI systems into risk tiers: unacceptable (banned outright), high-risk (subject to strict requirements), limited-risk (subject to transparency obligations), and minimal-risk (largely unregulated). Systems used in sensitive domains such as employment, credit scoring, and law enforcement, where discriminatory outcomes do the most damage, fall into the high-risk tier. These systems must meet transparency requirements (explaining how decisions are made), human oversight mandates (ensuring a human can intervene), rigorous auditing protocols, and documentation standards that create an audit trail from design through deployment.

The Act also complements the GDPR's "right to explanation," giving individuals the ability to understand and challenge automated decisions that affect them. For AI vendors, this means explainability isn't optional; it's a legal requirement. Under the final text of the Act, the most serious violations carry fines of up to €35 million or 7% of global annual turnover, whichever is higher.

In the United States, regulation has been more fragmented but is rapidly evolving:

- California adopted new employment AI regulations effective October 1, 2025. Employers are prohibited from using automated decision systems (ADS) that discriminate on any basis protected by the Fair Employment and Housing Act. Employers must retain all ADS-related data for four years, creating a paper trail for potential discrimination claims. Significantly, conducting anti-bias testing serves as an affirmative defense—employers who proactively audit their systems can shield themselves from liability.

- Colorado's Artificial Intelligence Act (CAIA), effective in 2026, requires employers deploying AI for material employment decisions to implement risk management policies and conduct annual impact assessments. The law creates a rebuttable presumption that employers with CAIA-compliant policies used reasonable care to avoid algorithmic discrimination—incentivizing proactive governance.

- Illinois amended its Human Rights Act to regulate AI use in recruitment, hiring, promotion, and training, with enforcement beginning January 1, 2026. The state already requires consent for AI video interviews, setting a standard for transparency.

- Texas enacted the Texas Responsible Artificial Intelligence Governance Act (TRAIGA), also effective in 2026, prohibiting employers from using AI with intent to unlawfully discriminate. Texas focuses on intentional discrimination, whereas California and Colorado address disparate impact as well.

- New York City's Local Law 144 requires bias audits of automated employment decision tools, with public disclosure of results. This was the first municipal law of its kind and has inspired similar efforts nationwide.

Federal action has been slower but is gaining momentum. The Equal Employment Opportunity Commission (EEOC) has issued guidance clarifying that AI tools fall within the scope of Title VII enforcement, warning employers they can't contract away their obligations by outsourcing hiring to algorithms. The National Institute of Standards and Technology (NIST) released its AI Risk Management Framework in early 2023, providing voluntary guidance that includes "Fair—With Harmful Bias Managed" as one of seven trustworthiness pillars. Though voluntary, the NIST framework aligns with international standards like the EU AI Act and ISO/IEC 42001, giving multinational companies a common reference point.

In July 2025, President Donald Trump issued Executive Order 14319, which prohibits federal agencies from procuring AI models that "sacrifice truthfulness and accuracy to ideological agendas" such as diversity, equity, and inclusion (DEI) initiatives. Critics warn the order could incentivize AI vendors to deprioritize fairness safeguards to qualify for federal contracts, potentially exacerbating bias in private-sector tools. The order applies only to federal procurement, but its ripple effects remain uncertain.

Beyond the U.S. and EU, other jurisdictions are also acting. South Africa's Employment Equity Act prohibits unfair discrimination in hiring, and employers using AI tools can be held liable for discriminatory outcomes. Canada is developing its own AI and Data Act. Even in the absence of comprehensive federal legislation, industry-specific regulations—like those governing healthcare AI under HIPAA or financial AI under fair lending laws—are filling gaps.

The emerging consensus is that transparency, accountability, and human oversight are non-negotiable. But enforcement remains a challenge. Many regulations rely on self-reporting and reactive complaints rather than proactive monitoring. Without sufficient funding for enforcement agencies and technical expertise within government, these laws risk becoming paper tigers—impressive on the page but toothless in practice.

So where does this leave us? Algorithmic bias is a mirror reflecting society's deepest inequalities—and like any mirror, it can either confront us with uncomfortable truths or be shattered in denial.

The path forward requires action on multiple fronts: technical, organizational, legal, and cultural.

For developers and data scientists, the imperative is to embed fairness metrics into every stage of the AI lifecycle. Use tools like IBM AI Fairness 360, Microsoft Fairlearn, and Google's What-If Tool to detect bias during development. Adopt explainable AI techniques—SHAP, LIME, counterfactual reasoning—to make models interpretable. Generate synthetic data to fill representation gaps. Apply adversarial debiasing to decorrelate predictions from protected attributes. And above all, test across diverse populations before deployment. The cost of fixing bias after a system is in production can be 10 times higher than catching it during development.

For employers and organizations, the priority is governance. Establish AI ethics boards with diverse membership, including ethicists, social scientists, and representatives from affected communities. Implement human-in-the-loop frameworks for high-stakes decisions. Conduct regular third-party audits and publish the results. Require vendors to provide transparency into how their AI systems work and what bias testing they've performed. Document everything—California's four-year retention rule is good practice everywhere. And train decision-makers to recognize automation bias: the tendency to overtrust AI outputs simply because they come from a machine.

For policymakers, the challenge is to craft regulations that are both stringent enough to prevent harm and flexible enough to accommodate rapid technological change. Harmonizing standards across jurisdictions—so a company compliant in California is also compliant in the EU—would reduce fragmentation and make enforcement more effective. Mandating algorithmic impact assessments for high-risk applications, similar to environmental impact statements, would force organizations to consider fairness proactively. Funding enforcement agencies and equipping them with data scientists and AI auditors would ensure laws have teeth. And protecting whistleblowers who expose algorithmic bias would create accountability from within.

For all of us as citizens and consumers, the task is vigilance. Demand transparency when you encounter algorithmic decision-making. If you're rejected for a job, loan, or apartment, ask whether AI was involved and request an explanation. Support organizations like the ACLU, Color of Change, and the Algorithmic Justice League that are fighting for algorithmic accountability. And recognize that this is fundamentally a civil rights issue. Just as the Civil Rights Act of 1964 prohibited discrimination by humans, we need a framework that prohibits discrimination by machines—and holds their creators accountable.

The stakes are too high to accept the status quo. Algorithms already decide who gets hired, who gets paroled, who gets loans, and who gets surveilled. Within a decade, they'll make even more consequential decisions—about medical treatments, educational opportunities, social services, and beyond. If we don't address bias now, we risk encoding today's inequalities into tomorrow's infrastructure, making discrimination not just persistent but permanent.

But there's also reason for cautious optimism. Fairness-aware AI is advancing rapidly. Regulatory frameworks are emerging. Public awareness is growing. Litigation is creating legal precedents that favor transparency and accountability. The movement for algorithmic justice is gaining momentum, powered by researchers like Joy Buolamwini, investigative journalists at ProPublica, advocacy organizations, and impacted communities demanding change.

The algorithm will see you now—but whether it sees you fairly depends on the choices we make today. Mathematics may be neutral, but the humans who wield it are not. The question isn't whether AI will shape our future. It's whether we'll shape AI to reflect our highest ideals of justice, equity, and human dignity—or allow it to perpetuate our worst instincts at machine speed and global scale.

The code is being written right now. It's time to decide what values we want encoded within it.
