Zero-Trust Security Revolution: Verify Everything Always

TL;DR: Edge computing achieves sub-10ms latency by relocating computation from distant data centers to locations near users, enabling real-time applications like autonomous vehicles, remote surgery, and AR/VR that require imperceptible response times.

Within the next five years, billions of devices will rely on a latency threshold that sounds impossibly fast: 10 milliseconds. That's one-hundredth of a second—faster than a hummingbird's wingbeat. For autonomous vehicles coordinating lane changes, surgeons operating robotic instruments remotely, or stock traders executing microsecond-critical transactions, the difference between 10 milliseconds and 100 milliseconds isn't just noticeable. It's the difference between success and catastrophe.

Edge computing represents a fundamental reimagining of where computation happens. Instead of shuttling data thousands of miles to centralized cloud data centers and back, edge computing moves the processing power to the periphery—closer to where data originates. The result? Response times that can drop below 10ms, enabling a new generation of real-time applications that simply couldn't exist in a cloud-only world.

The primary barrier to low latency isn't processing power; it's distance. Light travels through fiber optic cables at roughly 200,000 kilometers per second, about two-thirds the speed of light in a vacuum. Sounds fast, right? But when your request needs to travel from San Francisco to a data center in Virginia and back, a round trip of roughly 5,000 miles (about 8,000 kilometers), physics imposes a theoretical minimum latency of around 40 milliseconds. Add in routing delays, network congestion, and processing time, and you're easily looking at 100-200ms for typical cloud requests.
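
To make the physics concrete, here is a minimal sketch of the propagation-delay arithmetic. The 200,000 km/s figure comes from the text; the one-way distances (about 2,500 miles cross-country, 50 miles to a nearby edge server) are assumptions chosen for illustration, and real fiber routes are longer than straight-line distances.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate speed of light in fiber (from the text)
KM_PER_MILE = 1.609344


def min_round_trip_ms(one_way_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * one_way_km / FIBER_SPEED_KM_PER_S * 1000


# Assumed one-way distances, for illustration only.
cross_country_km = 2_500 * KM_PER_MILE  # roughly San Francisco to Virginia
nearby_edge_km = 50 * KM_PER_MILE       # a server about 50 miles away

print(f"cross-country: {min_round_trip_ms(cross_country_km):.1f} ms")
print(f"nearby edge:   {min_round_trip_ms(nearby_edge_km):.2f} ms")
```

Under these assumptions the cross-country floor is about 40 ms, while the nearby server's floor is under 1 ms. Routing, queuing, and processing come on top of both numbers.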

Edge computing sidesteps this fundamental limitation by relocating compute resources. Instead of Virginia, your request might hit a server 50 miles away—or even on your street. According to research from Fastly, their edge network delivers median response times of 2-8 milliseconds across global locations, compared to 50-150ms for traditional cloud architectures.

Edge computing achieves sub-10ms response times not through faster processors, but by fundamentally rethinking where computation happens—moving it from distant data centers to locations within tens or hundreds of miles of users.

But proximity alone doesn't guarantee sub-10ms performance. The infrastructure must be optimized at every layer. Studies on edge containerization reveal that even small configuration choices matter enormously: switching from Docker's default bridge networking to host mode reduces average round-trip latency from 10.1ms to 2.5ms on resource-constrained devices like Raspberry Pi, a roughly 75% reduction from a single setting.
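
Claims like this are easy to check on your own hardware. The sketch below is one possible way to measure round-trip latency with a tiny TCP echo test; it is not the methodology of the studies mentioned above. It runs both ends on the loopback interface so it is self-contained, and the helper names (`start_echo_server`, `measure_rtt_ms`) are hypothetical. To compare configurations, you would run the echo side inside a container under each networking mode and point the client at it.

```python
import socket
import statistics
import threading
import time

PAYLOAD = b"ping"


def start_echo_server(host="127.0.0.1"):
    """Start a single-connection TCP echo server on an OS-assigned port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0 lets the OS pick a free port
    server.listen(1)

    def serve():
        conn, _ = server.accept()
        with conn:
            while True:
                data = conn.recv(64)
                if not data:  # client closed the connection
                    break
                conn.sendall(data)

    threading.Thread(target=serve, daemon=True).start()
    return server, server.getsockname()[1]


def measure_rtt_ms(host, port, samples=200):
    """Time each small request/echo round trip, in milliseconds."""
    rtts = []
    with socket.create_connection((host, port)) as client:
        # Disable Nagle's algorithm so small writes are sent immediately.
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(samples):
            start = time.perf_counter()
            client.sendall(PAYLOAD)
            received = b""
            while len(received) < len(PAYLOAD):
                chunk = client.recv(64)
                if not chunk:
                    raise ConnectionError("echo server closed the connection")
                received += chunk
            rtts.append((time.perf_counter() - start) * 1000)
    return rtts


server, port = start_echo_server()
rtts = measure_rtt_ms("127.0.0.1", port)
server.close()
print(f"median {statistics.median(rtts):.3f} ms, max {max(rtts):.3f} ms")
```

Looking at the median and the maximum together matters here: a sub-10ms application cares about the worst round trips as much as the typical one.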

Network architecture plays an equally critical role. The rollout of 5G networks provides the wireless infrastructure edge computing needs, delivering one-to-two-millisecond round-trip times for radio transmission. Combined with local processing at cell towers or nearby micro-data centers, this creates an end-to-end latency budget that makes sub-10ms feasible even for mobile users.
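
One way to reason about an end-to-end latency budget is simply to add up the stages. The sketch below does that for two hypothetical paths. Only the 2 ms radio figure (the upper end of the range above) and the roughly 40 ms long-haul floor come from this article; every other number is an assumption picked for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float


def total_latency_ms(stages):
    """Sum the latency contributed by each stage of a request path."""
    return sum(stage.latency_ms for stage in stages)


def fits_budget(stages, budget_ms=10.0):
    """True if the whole path stays within the latency budget."""
    return total_latency_ms(stages) <= budget_ms


mobile_edge_path = [
    Stage("5G radio round trip", 2.0),
    Stage("backhaul to micro-data center", 1.0),  # assumed
    Stage("edge processing", 4.0),                # assumed
]

mobile_cloud_path = [
    Stage("5G radio round trip", 2.0),
    Stage("long-haul fiber round trip", 40.0),    # propagation floor only
    Stage("cloud processing", 4.0),               # assumed
]

for label, path in [("edge", mobile_edge_path), ("cloud", mobile_cloud_path)]:
    print(f"{label}: {total_latency_ms(path):.1f} ms, fits 10 ms budget: {fits_budget(path)}")
```

With these numbers the edge path leaves a few milliseconds of headroom, while the cloud path exceeds the budget before any processing happens.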

The applications driving edge adoption aren't theoretical; they're already reshaping industries. Autonomous vehicles generate more than 40 terabytes of data per hour, processing inputs from cameras, LIDAR, radar, and other sensors. A car traveling at highway speed covers about 30 meters per second. At 100ms latency, the car travels three meters before a response arrives. At 10ms, it travels 30 centimeters. For vehicle-to-vehicle communication enabling autonomous truck platooning, that difference determines whether the system can react in time to prevent collisions.

In healthcare, edge computing enables real-time patient monitoring that can save lives. Hospital-based edge systems process data from wearable sensors and bedside monitors locally, detecting anomalies and alerting medical staff within milliseconds. When a patient's vitals begin deteriorating, waiting even a second for data to round-trip to a distant cloud could be fatal.

"Edge computing creates a competitive moat by delivering sub-5ms latency, making cloud-level delays unacceptable to customers."

— Industry analysis from edge computing research

Financial markets have understood latency for decades. High-frequency trading firms locate their servers in the same buildings as stock exchanges, paying millions for the privilege. Edge computing democratizes this advantage beyond Wall Street. Payment processing, fraud detection, and real-time pricing can now happen at the network edge, reducing transaction latency from seconds to milliseconds.

Gaming and augmented reality applications depend on imperceptible lag. Cloud gaming services struggle when latency exceeds 20-30ms, creating noticeable input delay that destroys immersion. AR applications overlaying digital information on the physical world need even tighter latency budgets—human perception can detect delays above 20ms, breaking the illusion. Edge computing brings the rendering and processing close enough that the digital and physical worlds can synchronize seamlessly.

Edge computing isn't a single technology but a spectrum of deployment models. At one end sits the "far edge"—individual devices like smartphones, IoT sensors, or industrial equipment performing local processing. In the middle, "near edge" infrastructure includes cellular base stations, local access points, and regional micro-data centers. At the other end, "edge cloud" deployments leverage Points of Presence (PoPs) in major metropolitan areas, still geographically distributed but with more robust compute capabilities.

Modern edge architectures typically involve three layers. The device layer handles immediate data collection and preliminary filtering—think sensors discarding obviously irrelevant data. The edge layer performs the latency-critical processing, running AI inference models, executing business logic, or caching frequently accessed content. The cloud layer stores historical data, trains machine learning models, and handles workloads that don't require real-time response.
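
The three-layer split can be expressed as a simple placement rule: send each workload to the most centralized layer that still meets its deadline. The sketch below is one way to write that down, not a standard algorithm. The per-layer response times are assumed round numbers, and `place_workload` is a hypothetical helper.

```python
from enum import Enum
from typing import Optional


class Tier(Enum):
    DEVICE = "device"
    EDGE = "edge"
    CLOUD = "cloud"


# Assumed typical response times per layer, in milliseconds (illustrative only).
TYPICAL_LATENCY_MS = {Tier.CLOUD: 100.0, Tier.EDGE: 10.0, Tier.DEVICE: 1.0}


def place_workload(deadline_ms: Optional[float]) -> Tier:
    """Pick the most centralized layer that still meets the deadline."""
    if deadline_ms is None:  # no real-time requirement, e.g. model training
        return Tier.CLOUD
    for tier in (Tier.CLOUD, Tier.EDGE, Tier.DEVICE):
        if TYPICAL_LATENCY_MS[tier] <= deadline_ms:
            return tier
    return Tier.DEVICE  # nothing meets the deadline, so stay as local as possible


print(place_workload(None).value)   # historical analytics
print(place_workload(10.0).value)   # latency-critical inference
print(place_workload(2.0).value)    # on-sensor filtering
```

Preferring the most centralized layer that works reflects the trade-off in the text: centralized capacity is easier to manage, so a workload moves outward only when its latency requirement forces it to.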

Content delivery networks pioneered many edge techniques. CDN edge servers cache static content globally, reducing load times from seconds to milliseconds by serving files from nearby servers rather than origin servers continents away. Modern edge computing extends this principle beyond static files to dynamic computation—running code, processing data, and making decisions at the network periphery.

The technical implementation varies by provider. AWS's edge infrastructure includes CloudFront locations for content delivery, Lambda@Edge for serverless compute at CDN points, and Local Zones for low-latency access in major metros. Cloudflare Workers runs JavaScript at over 300 global edge locations, executing custom code within 10-50ms of users worldwide. These platforms abstract away the complexity of distributed deployment while providing the latency benefits.

Edge computing sounds magical until you confront the operational reality. Distributing compute across hundreds or thousands of locations introduces management complexity that centralized cloud avoids. How do you deploy updates atomically? How do you debug issues occurring only at specific edge nodes? How do you ensure consistent behavior when code runs on heterogeneous hardware?

Container orchestration helps but introduces its own challenges. Docker containers add minimal CPU overhead on edge devices (about 0.56% for CPU-bound workloads), but container startup latency ranges from 100 to 500ms. A cold start on the request path therefore blows through a sub-10ms budget many times over. The solution often involves keeping containers pre-warmed or using lightweight alternatives such as unikernels, some of which boot in 20-50ms, or Firecracker microVMs, which AWS describes as starting in as little as 125ms.
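
The pre-warming idea can be sketched as a small pool that pays the startup cost ahead of time. This is a toy model rather than a real container runtime integration: `WarmPool` and `Worker` are hypothetical names, no containers are started, and the latency constants are assumptions (the cold-start value sits inside the 100-500ms range quoted above).

```python
from collections import deque
from dataclasses import dataclass
from itertools import count

COLD_START_MS = 300.0    # assumed, within the 100-500ms range quoted above
WARM_DISPATCH_MS = 0.5   # assumed cost of handing a request to a running worker


@dataclass
class Worker:
    worker_id: int


class WarmPool:
    """Keeps a number of already-started workers ready for incoming requests."""

    def __init__(self, size: int) -> None:
        self._ids = count(1)
        # Startup cost is paid here, before any request arrives.
        self._idle = deque(self._start_worker() for _ in range(size))

    def _start_worker(self) -> Worker:
        # Stand-in for starting a real container or microVM.
        return Worker(next(self._ids))

    def acquire(self):
        """Return a worker and the startup latency the request observes."""
        if self._idle:
            return self._idle.popleft(), WARM_DISPATCH_MS
        return self._start_worker(), COLD_START_MS  # pool exhausted: cold start

    def release(self, worker: Worker) -> None:
        """Return a finished worker to the idle queue for reuse."""
        self._idle.append(worker)


pool = WarmPool(size=2)
first, first_ms = pool.acquire()
second, second_ms = pool.acquire()
third, third_ms = pool.acquire()   # no idle worker left
pool.release(first)
fourth, fourth_ms = pool.acquire()
print(first_ms, second_ms, third_ms, fourth_ms)
```

The third request is the one that hurts: once the pool is exhausted, the cold start lands on the request path. Sizing the pool for peak concurrency avoids that, at the price of idle workers.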

Cost calculations shift dramatically. Traditional cloud computing follows a simple model: pay for what you use, with the ability to scale capacity up or down instantly. Edge deployments require hardware at every location, often with dedicated GPU accelerators for AI workloads. Without dynamic scaling across locations, peak capacity must be provisioned everywhere, leading to underutilized resources during off-peak hours.
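
A small calculation shows why provisioning for peak everywhere hurts utilization. The hourly demand profile below is made up for illustration (in arbitrary "GPU units"), and the second site is simply the first shifted by twelve hours to stand in for a different time zone.

```python
def average_utilization(hourly_demand):
    """Mean demand divided by the peak capacity that must be provisioned."""
    peak = max(hourly_demand)
    return sum(hourly_demand) / (len(hourly_demand) * peak)


# Hypothetical 24-hour demand for one edge site: quiet night, busy evening.
site_a = [1] * 8 + [4] * 8 + [10] * 4 + [4] * 4
# A second site with the same shape, peaking twelve hours later.
site_b = site_a[12:] + site_a[:12]

# Edge: each site is sized for its own peak.
edge_utilization = (sum(site_a) + sum(site_b)) / (24 * (max(site_a) + max(site_b)))

# Pooled: one shared capacity sized for the combined peak.
combined = [a + b for a, b in zip(site_a, site_b)]
pooled_utilization = average_utilization(combined)

print(f"per-site provisioning: {edge_utilization:.0%}")
print(f"pooled provisioning:   {pooled_utilization:.0%}")
```

In this toy scenario the separately provisioned sites average 40% utilization, while a shared pool serving both reaches about 73%, because the peaks don't coincide. That gap is the price paid for keeping capacity close to users.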

Security concerns multiply when your attack surface spans hundreds of distributed nodes. Each edge location becomes a potential entry point. Physical security becomes relevant again—edge servers might sit in cell towers, retail locations, or industrial facilities rather than hardened data centers. Managing credentials, encryption keys, and access controls across distributed infrastructure requires different tooling than centralized cloud security.

Data sovereignty and compliance introduce geographic complexity. Processing data locally can help satisfy regulations requiring data to remain in specific jurisdictions, but it also means ensuring every edge location complies with local laws. A global edge deployment might operate under dozens of different regulatory frameworks simultaneously.

Few organizations go all-in on edge computing. The real-world pattern involves hybrid architectures that split workloads intelligently between edge, cloud, and on-premises infrastructure. The key question isn't "should we use edge?" but "which workloads benefit enough to justify the complexity?"

Interactive user experiences make excellent edge candidates. Form validation, authentication checks, and UI rendering can happen at the edge, providing instant feedback while background processes sync to the cloud. E-commerce sites use edge computing for catalog browsing and product searches while keeping inventory and payment processing centralized.

IoT deployments often adopt a tiered approach. Sensors perform basic filtering locally. Edge gateways aggregate data from multiple sensors, run anomaly detection, and trigger immediate responses. Only aggregated or anomalous data travels to the cloud for analysis and long-term storage. This architecture reduces bandwidth costs while maintaining real-time responsiveness.
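
The tiered flow described above can be sketched as a gateway function that reduces a window of raw readings to a compact summary plus any out-of-range values. Only that result would travel upstream. The function name, the temperature-style readings, and the normal range are all assumptions for illustration.

```python
from statistics import mean


def summarize_window(readings, low, high):
    """Reduce raw sensor readings to a summary and a list of anomalies.

    `None` entries stand for failed reads and are dropped, mimicking the
    basic filtering a sensor or gateway might do locally.
    """
    valid = [r for r in readings if r is not None]
    anomalies = [r for r in valid if not (low <= r <= high)]
    return {
        "count": len(valid),
        "mean": mean(valid) if valid else None,
        "anomalies": anomalies,
        "alert": bool(anomalies),  # would trigger an immediate local response
    }


# Hypothetical window of temperature readings with one failed read and one spike.
window = [20.0, 21.0, None, 22.0, 95.0]
summary = summarize_window(window, low=15.0, high=30.0)
print(summary)
```

Five raw values become one small record, and the alert decision is made locally without waiting on a round trip to the cloud.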

AI inference particularly benefits from edge deployment. Training large models requires massive compute best handled in the cloud, but running inference on those models can happen locally. An autonomous vehicle doesn't train its neural networks while driving—it loads pre-trained models and executes them locally with sub-10ms latency.

Edge computing adoption varies dramatically by geography, driven by infrastructure availability, regulatory environment, and use case priorities. China leads in edge deployment for smart cities and industrial IoT, with massive investments in 5G infrastructure creating the connectivity foundation edge computing requires.

Europe focuses heavily on data sovereignty aspects of edge computing. GDPR and similar regulations make processing data locally attractive, keeping personally identifiable information within jurisdictional boundaries. European telecommunications providers build out edge computing capabilities as value-added services beyond basic connectivity.

"Edge devices can operate autonomously even when network access is restricted or very limited, ensuring uninterrupted operations and mitigating the risks associated with network outages or latency issues."

— SUSE Edge Computing Analysis

The United States sees edge adoption driven primarily by content delivery, gaming, and financial services—industries where latency directly impacts competitive advantage. Major cloud providers race to deploy edge infrastructure, but fragmented telecommunications infrastructure creates challenges compared to markets with more centralized network operators.

Developing nations face different trade-offs. Limited backbone connectivity makes edge computing potentially transformative—local processing could enable services impossible with unreliable internet access. But the capital costs of distributed edge infrastructure compete with basic connectivity needs. Innovative deployments use existing infrastructure creatively, turning retail locations with reliable power into neighborhood edge nodes.

As organizations adopt edge computing, they're discovering a talent shortage that spans multiple disciplines. Traditional backend developers understand centralized systems but may lack experience with distributed architectures. Network engineers understand connectivity but not application deployment. DevOps teams skilled in cloud orchestration face new challenges with physical hardware management.

The most valuable skill set combines expertise across domains: understanding network topology and latency characteristics, container orchestration and microservices architecture, embedded systems and resource-constrained computing, and security models for distributed systems. This rare combination commands premium salaries.

Educational institutions lag behind industry needs. Computer science curricula still emphasize either cloud computing or embedded systems as separate tracks, rarely integrating them the way edge computing requires. Bootcamps and online courses focus on cloud-native development, leaving edge computing as an advanced specialization rather than core curriculum.

The trajectory seems clear: more computation will migrate to the edge over the next decade. Not because centralized cloud computing fails, but because certain workloads demand latencies cloud architectures can't deliver. As 5G expands and eventually transitions to 6G, the wireless infrastructure will support even more sophisticated edge deployments.

AI advances will accelerate adoption. Today's edge deployments often run relatively simple models on specialized hardware. As inference becomes more efficient and model compression techniques improve, edge devices will handle increasingly sophisticated AI workloads. Imagine every security camera running real-time object recognition, every industrial sensor performing predictive maintenance analysis, or every vehicle participating in city-wide traffic optimization—all with sub-10ms latency.

The environmental implications deserve attention. Distributed edge computing could reduce the massive energy footprint of hyperscale data centers by processing data where it's generated rather than transmitting it globally. But it could also increase overall energy use if edge nodes operate inefficiently. The outcome depends on architectural choices made today.

For technical professionals evaluating edge computing, the question isn't whether it's faster—the physics guarantees that. The relevant questions are: Do your users or applications need sub-10ms latency? Can your organization manage distributed infrastructure? Does the performance improvement justify the operational complexity and cost? For an expanding set of use cases, the answer increasingly becomes yes.

Ten milliseconds has emerged as a psychological and practical threshold. It's fast enough that humans perceive responses as instantaneous. It's slow enough that modern hardware and networks can reliably achieve it with proper architecture. And it's the boundary separating applications that work from applications that feel magical.

We're entering an era where billions of devices will expect sub-10ms responses not as a luxury but as a baseline requirement. The infrastructure enabling that future already exists in early form. Research shows that designing systems with 10ms budgets forces architectural discipline that benefits all users, even those with slower connections.

The transformation ahead isn't just technical—it's experiential. Just as broadband internet enabled streaming video and mobile networks enabled ubiquitous apps, sub-10ms latency will enable applications we haven't imagined yet. The companies and engineers mastering edge computing now are building the foundation for whatever comes next.

The speed of light remains constant, but the distance data travels keeps shrinking. Edge computing represents the latest chapter in computing's ongoing race against latency—a race where the winners won't just be faster, but capable of entirely new possibilities. In a world measured in milliseconds, 10ms might be the most important number in technology.

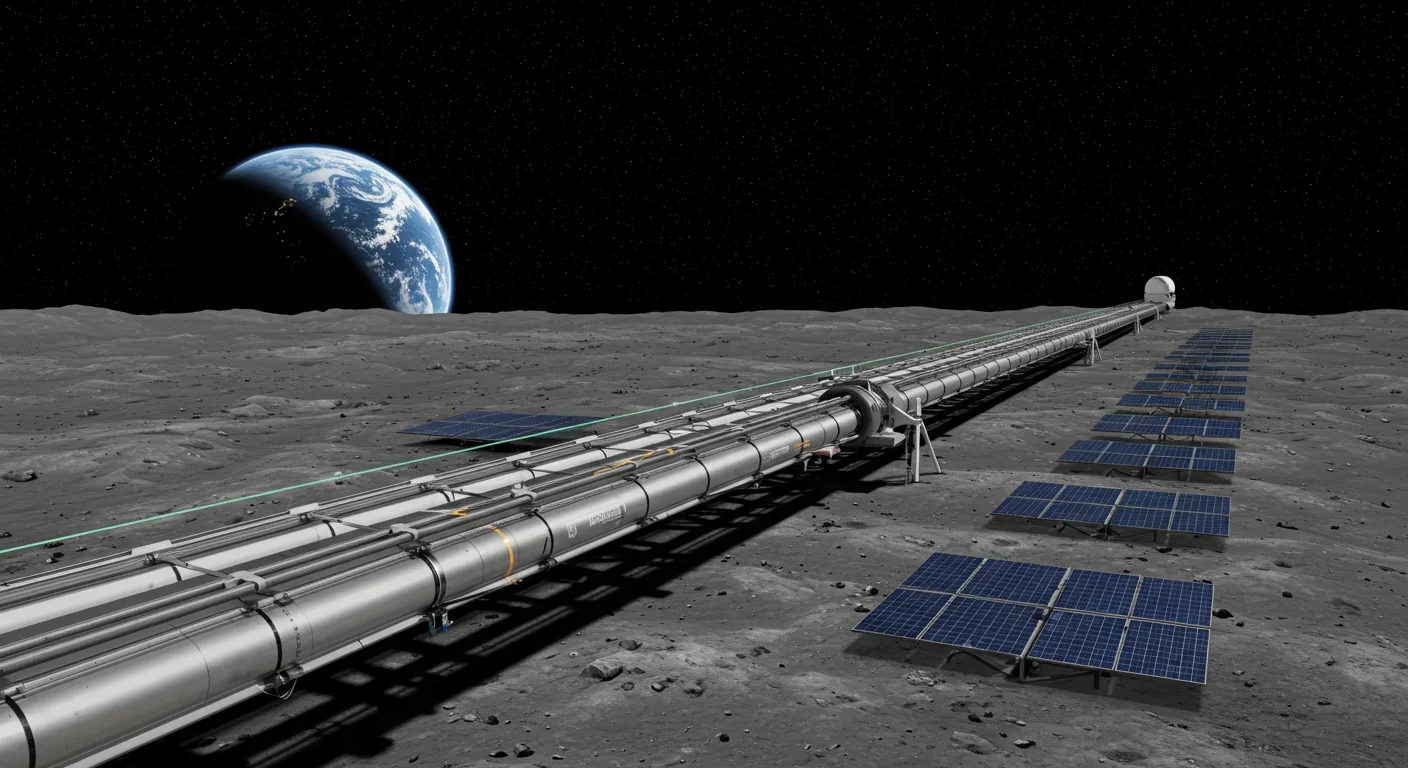

Lunar mass drivers—electromagnetic catapults that launch cargo from the Moon without fuel—could slash space transportation costs from thousands to under $100 per kilogram. This technology would enable affordable space construction, fuel depots, and deep space missions using lunar materials, potentially operational by the 2040s.

Ancient microorganisms called archaea inhabit your gut and perform unique metabolic functions that bacteria cannot, including methane production that enhances nutrient extraction. These primordial partners may influence longevity and offer new therapeutic targets.

CAES stores excess renewable energy by compressing air in underground caverns, then releases it through turbines during peak demand. New advanced adiabatic systems achieve 70%+ efficiency, making this decades-old technology suddenly competitive for long-duration grid storage.

Human children evolved to be raised by multiple caregivers—grandparents, siblings, and community members—not just two parents. Research shows alloparenting reduces parental burnout, improves child development, and is the biological norm across cultures.

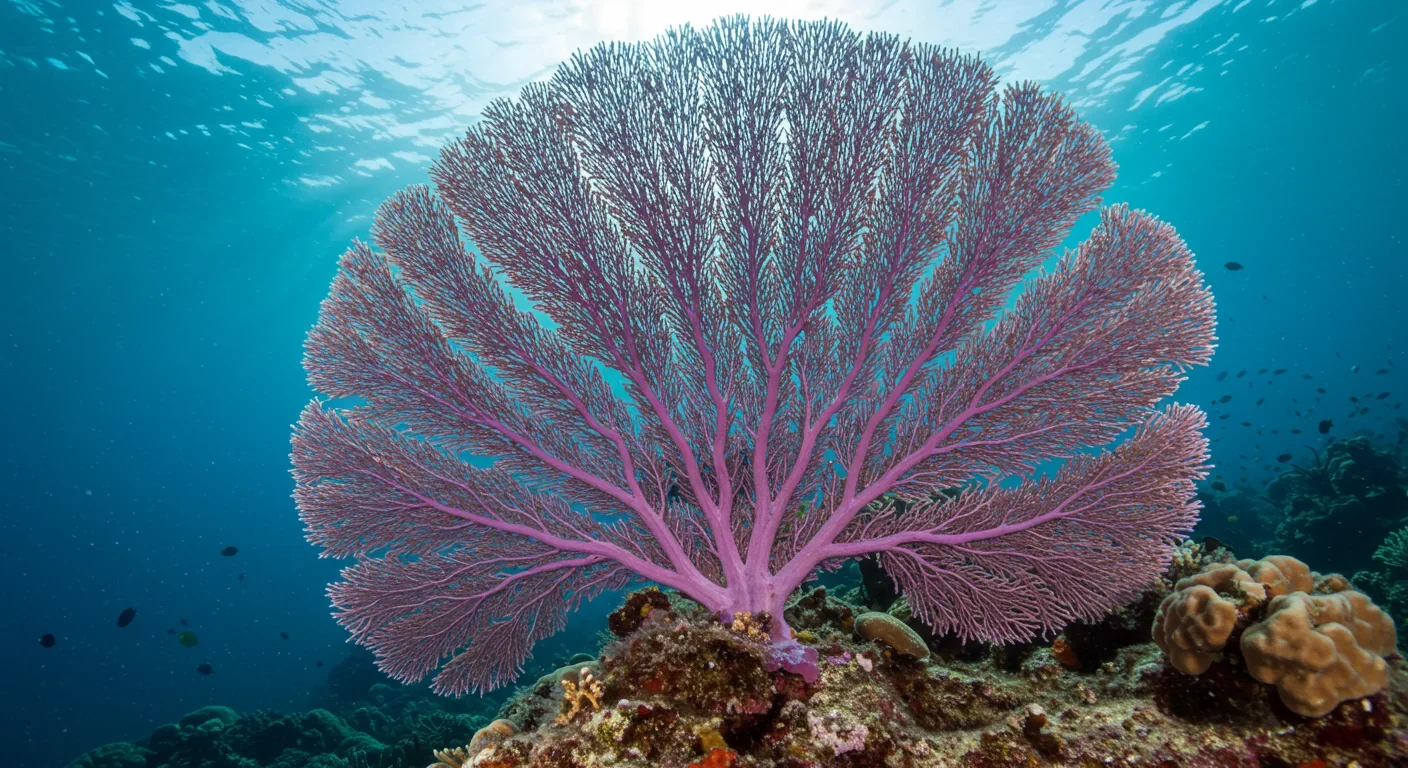

Soft corals have weaponized their symbiotic algae to produce potent chemical defenses, creating compounds with revolutionary pharmaceutical potential while reshaping our understanding of marine ecosystems facing climate change.

Generation Z is the first cohort to come of age amid a polycrisis - interconnected global failures spanning climate, economy, democracy, and health. This cascading reality is fundamentally reshaping how young people think, plan their lives, and organize for change.

Zero-trust security eliminates implicit network trust by requiring continuous verification of every access request. Organizations are rapidly adopting this architecture to address cloud computing, remote work, and sophisticated threats that rendered perimeter defenses obsolete.