Photonic Processors: Inside the Race for Light-Speed Computing

TL;DR: AlphaGo revolutionized AI by defeating world champion Lee Sedol through reinforcement learning and neural networks. Its successor, AlphaGo Zero, learned purely through self-play, discovering strategies superior to millennia of human knowledge—opening new frontiers in AI applications across healthcare, robotics, and optimization.

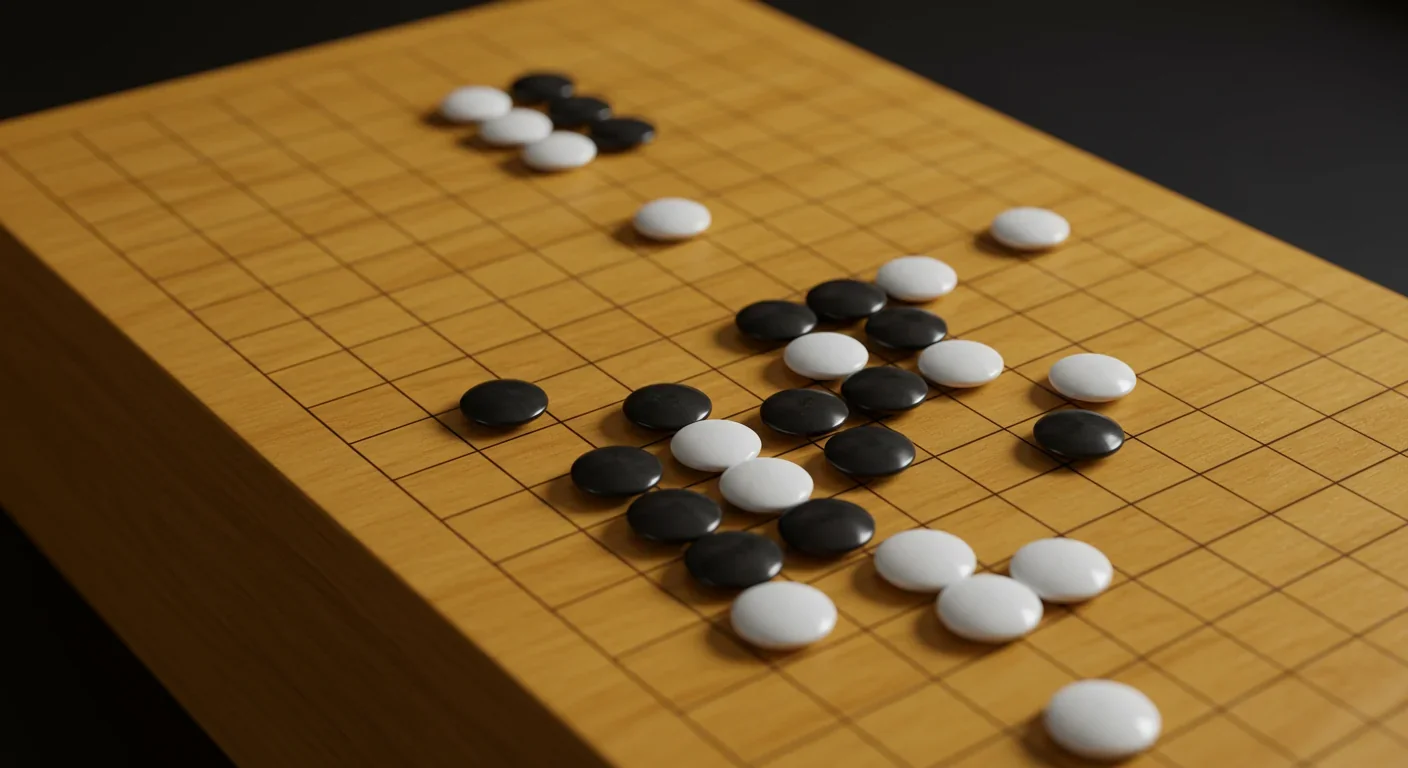

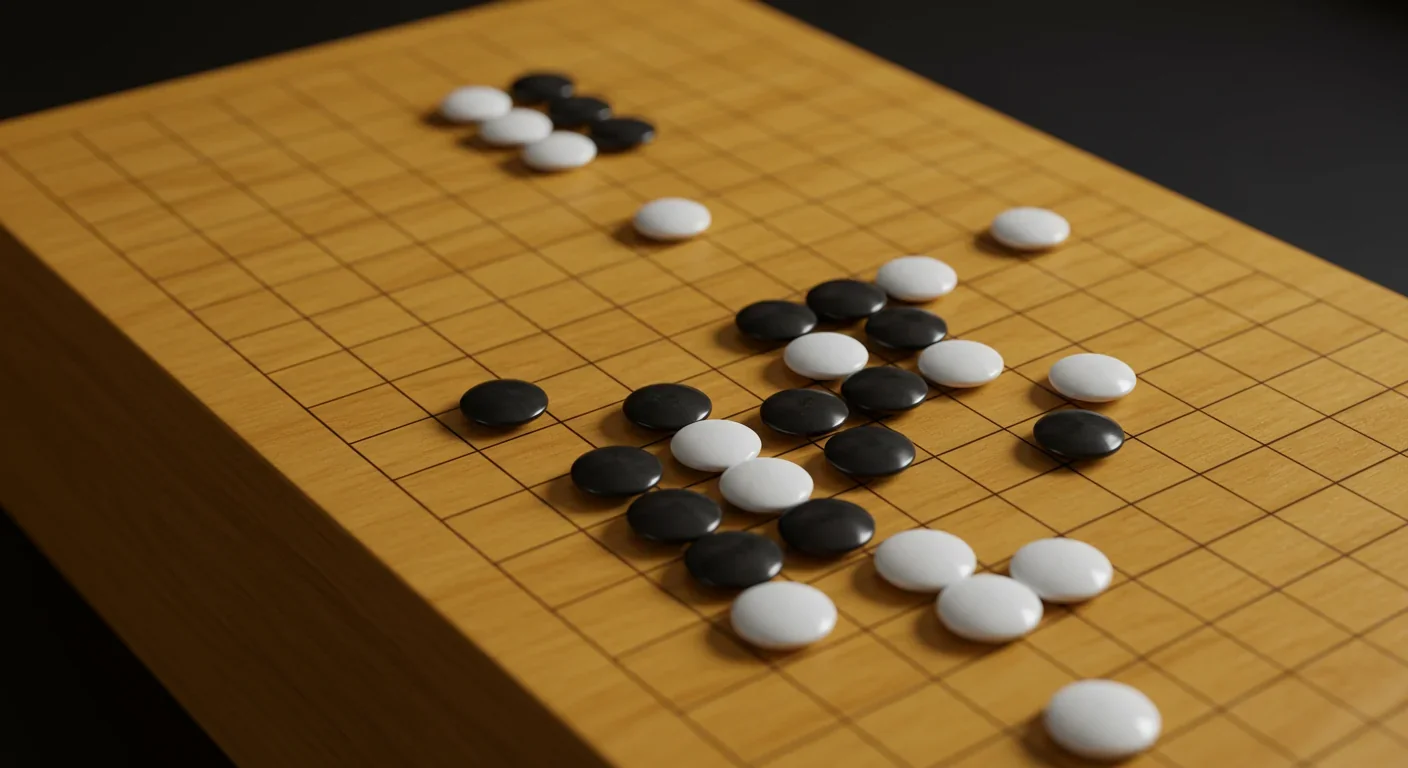

In March 2016, something extraordinary happened in Seoul. A machine defeated one of humanity's greatest Go players 4-1, and the world suddenly realized we'd crossed a threshold nobody saw coming. Lee Sedol, a living legend with 18 international titles, sat across from a cluster of computers running DeepMind's AlphaGo. When the final stone was placed, it wasn't just a game that ended—it was an entire era of human cognitive supremacy in one of our oldest and most complex strategic pursuits.

Go seemed impossible for machines. Not difficult. Impossible. While IBM's Deep Blue had conquered chess back in 1997, Go stood as the unconquerable fortress. The numbers tell you why: chess has roughly 10^47 possible positions. Go has roughly 10^170. That's far more than the number of atoms in the observable universe. A typical Go position offers around 250 legal moves, compared to chess's average of 35. Traditional AI approaches built on brute-force search, the kind that worked for chess by examining enormous numbers of move sequences, simply couldn't work. The computational requirements would outlive the universe itself.
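To get a feel for why exhaustive search fails, a back-of-the-envelope estimate helps. The sketch below approximates game-tree size as b^d, using the branching factors quoted above (35 for chess, 250 for Go). The game lengths of 80 and 150 moves are assumptions based on commonly cited estimates, not figures from this article.

```python
import math

def log10_tree_size(branching_factor: float, depth: int) -> float:
    """Return log10 of branching_factor ** depth, a crude game-tree size estimate."""
    return depth * math.log10(branching_factor)

# Branching factors come from the text; game lengths are assumed typical values.
chess_exponent = log10_tree_size(35, 80)
go_exponent = log10_tree_size(250, 150)

print(f"chess game tree: about 10^{chess_exponent:.1f} move sequences")
print(f"go game tree:    about 10^{go_exponent:.1f} move sequences")
```

Even under these rough assumptions, the Go exponent is nearly three times the chess exponent, which is why searching deeper or faster was never going to be enough on its own.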

So how did AlphaGo crack what seemed fundamentally uncrackable?

Go originated in China over 2,500 years ago, and its elegant simplicity masks staggering complexity. Two players place black and white stones on a 19x19 grid, attempting to surround territory and capture opponent stones. The rules fit on a single page. Mastering the game takes a lifetime—or several.

The challenge for AI wasn't just the astronomical number of positions. It was the nature of Go itself. Chess programs could evaluate positions by counting material: a queen is worth nine points, a pawn one point. But in Go? Territory value depends on context that cascades across the entire board. A stone placed in one corner can influence fighting in the opposite corner ten moves later. Human experts describe Go intuition as something felt rather than calculated—exactly the kind of understanding that eluded machines.

By 2014, the best Go programs played at strong amateur level but couldn't challenge professionals. Most experts predicted it would take another decade, maybe two, before AI could compete with the world's best. They were spectacularly wrong.

DeepMind's approach wasn't to build a better calculator—it was to teach a machine to learn like humans do, but faster and more thoroughly. The breakthrough came from combining three key technologies: deep neural networks, reinforcement learning, and Monte Carlo tree search.

First, AlphaGo needed to understand what good moves looked like. The team trained a "policy network" on 30 million moves from 160,000 human games. This supervised learning phase taught AlphaGo to predict what a strong human player might do in any position. Think of it as learning by watching strong players across more than a hundred thousand games.

But humans, for all their brilliance, have limitations. So DeepMind didn't stop there. They set AlphaGo loose to play against itself—millions of times. This reinforcement learning phase let the system discover strategies no human had ever tried. Every game refined its understanding. Win, and the moves that led to victory got reinforced. Lose, and alternative strategies got explored. It's the same principle that teaches children through trial and error, compressed into computational timescales.
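The "reinforce the winner's moves" idea can be illustrated on a much smaller game. The sketch below is a toy, not AlphaGo's training procedure: it uses a lookup table instead of a neural network, a simple win/loss update instead of a policy gradient, and the game of Nim (take 1 to 3 stones, whoever takes the last stone wins) instead of Go. All names and parameters here are hypothetical.

```python
import math
import random

def sample_move(prefs, stones, rng):
    """Sample a legal move (take 1-3 stones) via softmax over learned preferences."""
    moves = [m for m in (1, 2, 3) if m <= stones]
    top = max(prefs[stones][m] for m in moves)  # subtract max for numerical stability
    weights = [math.exp(prefs[stones][m] - top) for m in moves]
    return rng.choices(moves, weights=weights)[0]

def self_play_train(start=10, games=3000, lr=0.1, seed=0):
    """Toy self-play on Nim: both sides share one preference table."""
    rng = random.Random(seed)
    prefs = {s: {m: 0.0 for m in (1, 2, 3) if m <= s} for s in range(1, start + 1)}
    for _ in range(games):
        stones, player, history = start, 0, []
        while stones > 0:
            move = sample_move(prefs, stones, rng)
            history.append((player, stones, move))
            stones -= move
            player = 1 - player
        winner = 1 - player  # the player who just moved took the last stone
        for who, state, move in history:
            # Moves made by the winner are reinforced, the loser's are discouraged.
            prefs[state][move] += lr if who == winner else -lr
    return prefs

prefs = self_play_train()
best_at_two = max(prefs[2], key=prefs[2].get)
print("preferred move with 2 stones left:", best_at_two)
```

With two stones left, taking both always wins and taking one always loses, so the table ends up preferring the winning move without ever being told the strategy.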

The second neural network, the "value network," learned to evaluate positions—to look at any board state and estimate the probability of winning from that position. While the policy network suggested moves, the value network judged outcomes.

AlphaGo combined supervised learning from human games with reinforcement learning through self-play, creating a system that could learn from humanity's best strategies and then surpass them by discovering its own.

The final piece was Monte Carlo tree search, a technique that simulates thousands of possible future games from each position. But instead of randomly exploring like earlier MCTS implementations, AlphaGo's search was guided by its neural networks. The policy network suggested which branches to explore, and the value network evaluated whether those branches looked promising.
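One common way to express "guided" search is a PUCT-style selection rule, which scores each candidate move as its average value so far plus an exploration bonus proportional to the policy network's prior. The sketch below is a minimal illustration under assumptions: the `Edge` class, the constant `c_puct`, and the `+ 1` inside the square root (so priors matter before any visits) are illustrative choices, not details taken from this article.

```python
import math
from dataclasses import dataclass

@dataclass
class Edge:
    prior: float            # policy network's probability for this move
    visits: int = 0         # how often the search has followed this move
    value_sum: float = 0.0  # sum of value estimates backed up through it

    def mean_value(self) -> float:
        return self.value_sum / self.visits if self.visits else 0.0

def select_move(edges: dict, c_puct: float = 1.5) -> str:
    """Pick the move maximizing mean value plus a prior-weighted exploration bonus."""
    total_visits = sum(e.visits for e in edges.values())

    def score(edge: Edge) -> float:
        bonus = c_puct * edge.prior * math.sqrt(total_visits + 1) / (1 + edge.visits)
        return edge.mean_value() + bonus

    return max(edges, key=lambda move: score(edges[move]))

edges = {"a": Edge(prior=0.6), "b": Edge(prior=0.3), "c": Edge(prior=0.1)}
first_choice = select_move(edges)  # with no visits yet, the highest prior wins

# Suppose "a" was explored ten times and looked bad on average.
edges["a"].visits, edges["a"].value_sum = 10, -5.0
second_choice = select_move(edges)
print(first_choice, second_choice)
```

The bonus shrinks as a move accumulates visits, so the search starts where the policy network points and shifts elsewhere once the evidence turns against a move.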

This wasn't just incremental improvement—it was a fundamentally different architecture. The hardware behind it was substantial: 1,920 CPUs and 280 GPUs humming away during the Lee Sedol match. But the real innovation was the approach.

The second game of the Lee Sedol match produced what Go players now call simply "Move 37."

AlphaGo placed a stone on the fifth line, a position so unconventional that commentators initially thought it was a mistake. AlphaGo's own policy network had estimated only a 1-in-10,000 chance that a human player would choose that move. Fan Hui, the European Go champion who'd been training with AlphaGo, called it "not a human move." Lee Sedol left the room.

But Move 37 wasn't a mistake. It was brilliant. The move's purpose revealed itself dozens of turns later, as its strategic value cascaded through the game. AlphaGo had calculated something human intuition had missed for 2,500 years. It had explored beyond the boundaries of established theory and found something new.

That single move redefined what the game meant. Go professionals worldwide began analyzing their own games differently. Some adopted strategies AlphaGo pioneered. Others re-examined classical positions that had been considered "solved" for centuries. The student had become the teacher.

"Even if you took all the computers in the world, and ran them for a million years, it wouldn't be enough to compute all possible variations in the game of Go."

— Demis Hassabis, DeepMind CEO

Lee Sedol did win one game—Game 4—with his own brilliant move that exposed a weakness in AlphaGo's play. But the 4-1 final score told the story. Humanity's stronghold had fallen.

If the original AlphaGo was impressive, what came next was genuinely mind-bending.

In October 2017, DeepMind announced AlphaGo Zero, and it changed everything we thought we understood about AI learning. Zero started with nothing—no human games, no historical data, no conventional wisdom. Just the rules of Go and the directive to play against itself.

Three days of self-play later, Zero beat the version of AlphaGo that defeated Lee Sedol. Beat it 100 games to zero. In 40 days, it became the strongest Go player in history, with an Elo rating of 5,185—hundreds of points beyond any previous version.
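To put an Elo gap of "hundreds of points" in perspective, the standard Elo formula converts a rating difference into an expected score. The sketch below uses Zero's reported rating of 5,185; the opponent ratings are hypothetical values chosen only to illustrate the formula.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score for player A against player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

zero_rating = 5185  # from the text
for gap in (100, 300, 500):  # hypothetical rating gaps
    opponent = zero_rating - gap
    print(f"gap {gap}: expected score {expected_score(zero_rating, opponent):.3f}")
```

Under this model, a 400-point advantage corresponds to an expected score of about 0.91 per game, so a lead of several hundred points means losses become rare.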

Zero's architecture was actually simpler than the original AlphaGo. It used a single neural network instead of separate policy and value networks. It required less computational power. It learned faster. And it played better—discovering strategies that the human-trained version, constrained by human gameplay patterns, had never explored.

Think about what that means. We fed AlphaGo Zero nothing but rules and computing time, and it not only matched but surpassed the collective strategic wisdom of humanity accumulated over millennia. It independently rediscovered classical strategies—the opening patterns, the standard responses, the proven endgames—then pushed beyond them.

Some of Zero's novel strategies have been analyzed by professional players and integrated into high-level play. The machine wasn't just beating humans; it was teaching them. Centuries of Go theory were being rewritten by an algorithm that learned in weeks.

The story doesn't end with Go. In December 2017, DeepMind unveiled AlphaZero, which generalized the same approach to chess and shogi (Japanese chess). Starting from zero knowledge of each game—just rules—AlphaZero taught itself to superhuman level in hours.

In chess, it demolished Stockfish, the world's leading chess engine. Stockfish relied on decades of human chess knowledge, hand-tuned evaluation functions, and the ability to analyze 70 million positions per second. AlphaZero analyzed just 80,000 positions per second but chose better. It didn't need to look at everything—it understood which variations mattered.

What made this transition remarkable was how little the team had to change. The same fundamental architecture—neural networks plus guided tree search plus reinforcement learning—conquered three completely different games. They didn't engineer a Go solution or a chess solution. They engineered a solution to the entire class of perfect-information games.

The implications rippled far beyond board games. If this approach could master Go, chess, and shogi without domain-specific engineering, what else could it tackle?

AlphaGo Zero learned purely through self-play—no human games, no historical data. It defeated the version that beat Lee Sedol 100-0 after just three days of training, proving that machines can discover strategies superior to millennia of human knowledge.

The reinforcement learning techniques pioneered in AlphaGo are now reshaping fields that have nothing to do with board games.

DeepMind carried the same deep learning toolkit over to protein folding with AlphaFold, cracking a problem that had stymied biologists for decades. Understanding how proteins fold is crucial for drug discovery, disease research, and synthetic biology. AlphaFold can predict in hours protein structures that would take experimental methods months or years to determine.

In robotics, reinforcement learning is teaching machines to grasp objects, walk, and manipulate tools—tasks that seemed to require human-like intuition. The same principle applies: let the system explore, reward success, and learn from millions of attempts compressed into simulation time.

Energy grid optimization, traffic flow management, resource allocation: anywhere you have complex systems with delayed rewards and uncertain futures, these techniques find applications. Google has reported that machine learning control of data center cooling cut the energy used for cooling by up to 40%.

Financial trading systems use similar approaches to develop strategies. Chemical process optimization applies the same principles. Even dialogue systems like ChatGPT incorporate reinforcement learning from human feedback to align their behavior with what humans find useful.

The pattern is consistent: traditional optimization struggles with complexity, delayed rewards, and massive search spaces. Reinforcement learning combined with neural networks excels precisely where traditional methods fail.

Understanding AlphaGo's success requires looking at how its components fit together.

The policy network takes a board position as input—represented as a 19x19x48 tensor capturing stone positions, liberties, and game history. Thirteen convolutional layers process this information, looking for patterns like edges, formations, and strategic structures. The output is a 19x19 grid of probabilities—how likely each possible move is to be strong.
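The final step, turning raw network outputs into a 19x19 grid of move probabilities, can be sketched in a few lines. This is an illustration under assumptions: the logits are made up rather than produced by convolutional layers, and masking illegal points before normalizing is a common practice assumed here, not a detail stated in this article.

```python
import math

BOARD_SIZE = 19

def move_probabilities(logits, legal):
    """Softmax over board points, giving zero probability to illegal points."""
    top = max(l for l, ok in zip(logits, legal) if ok)  # for numerical stability
    exps = [math.exp(l - top) if ok else 0.0 for l, ok in zip(logits, legal)]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores: one point (row 3, column 15) looks better than the rest.
logits = [0.0] * (BOARD_SIZE * BOARD_SIZE)
favored = 3 * BOARD_SIZE + 15
logits[favored] = 2.0

# Pretend the top-left corner is already occupied and therefore illegal.
legal = [True] * (BOARD_SIZE * BOARD_SIZE)
legal[0] = False

probs = move_probabilities(logits, legal)
grid = [probs[r * BOARD_SIZE:(r + 1) * BOARD_SIZE] for r in range(BOARD_SIZE)]
best_index = max(range(len(probs)), key=probs.__getitem__)
print("most likely point:", divmod(best_index, BOARD_SIZE))
```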

The value network has a similar architecture but outputs a single number: the estimated probability of winning from this position. Training this network required 30 million positions from AlphaGo's self-play games, each labeled with the eventual outcome.
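Training on positions "labeled with the eventual outcome" is a regression problem: nudge the prediction toward +1 for positions that led to a win and -1 for those that led to a loss. The sketch below is a deliberately tiny stand-in, assuming a single linear layer with a tanh output and two hand-made feature vectors in place of a deep network and real board positions. A win probability can be read off as (v + 1) / 2.

```python
import math

def predict(weights, features):
    """Value estimate in (-1, 1): positive favors the player to move."""
    return math.tanh(sum(w * x for w, x in zip(weights, features)))

def sgd_step(weights, features, outcome, lr=0.1):
    """One gradient step on the squared error (prediction - outcome) ** 2."""
    v = predict(weights, features)
    # Chain rule: d/dw (v - z)^2 = 2 (v - z) (1 - v^2) x, since d tanh(u)/du = 1 - tanh(u)^2
    scale = 2 * (v - outcome) * (1 - v * v)
    return [w - lr * scale * x for w, x in zip(weights, features)]

# Hypothetical training set: (features, eventual game outcome).
positions = [([1.0, 0.0], +1.0), ([0.0, 1.0], -1.0)]

weights = [0.0, 0.0]
for _ in range(200):
    for features, outcome in positions:
        weights = sgd_step(weights, features, outcome)

win_value = predict(weights, [1.0, 0.0])
loss_value = predict(weights, [0.0, 1.0])
print(f"winning-type position: {win_value:.2f}, losing-type position: {loss_value:.2f}")
```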

During actual gameplay, AlphaGo runs thousands of simulated playouts using MCTS. But instead of exploring randomly, the policy network biases exploration toward promising moves, and the value network provides fast position evaluations, reducing how many simulations need to play all the way to the end.
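Two small pieces make this concrete: scoring a leaf position and passing that score back up the tree. The sketch below assumes the leaf score is a weighted blend of the value network's estimate and a single playout result, with an illustrative weight of 0.5, and that values flip sign at each ply because the players alternate. The function names and the dictionary layout are hypothetical.

```python
def evaluate_leaf(value_estimate: float, rollout_result: float, mix: float = 0.5) -> float:
    """Blend a learned value estimate with one playout outcome (both in [-1, 1])."""
    return (1 - mix) * value_estimate + mix * rollout_result

def backup(path_stats: list, leaf_value: float) -> None:
    """Propagate a leaf value from leaf to root along the visited moves.

    leaf_value is from the perspective of the player to move at the leaf,
    so the move that led into the leaf (played by the opponent) gets the
    negated value, and the sign alternates further up the path.
    """
    value = leaf_value
    for stats in reversed(path_stats):
        value = -value
        stats["visits"] += 1
        stats["value_sum"] += value

# Two moves were followed from the root to reach the leaf.
path = [{"visits": 0, "value_sum": 0.0}, {"visits": 0, "value_sum": 0.0}]
leaf = evaluate_leaf(value_estimate=0.2, rollout_result=1.0)
backup(path, leaf)
print(leaf, path)
```

Setting `mix` to 0 would rely on the value estimate alone and skip playouts entirely, which is the direction the text describes: fewer simulations that need to run to the end of the game.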

This combination of learning and search proved more powerful than either approach alone. Pure neural networks can evaluate positions but struggle with tactical calculation. Pure search is thorough but prohibitively slow. Together, they complement each other's weaknesses.

AlphaGo Zero simplified this further, using a single neural network with shared layers feeding into separate policy and value "heads." This architecture was more efficient and learned better representations, since the same features useful for predicting moves also proved useful for evaluating positions.
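The shared-trunk idea can be sketched with a toy network: one set of features is computed once, then read by both a policy head and a value head. This is only a structural illustration, assuming a single dense layer with random weights and a 3x3 board in place of a deep convolutional trunk on a 19x19 board. The class and parameter names are hypothetical.

```python
import math
import random

def linear(weights, vector):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

class TwoHeadedNet:
    def __init__(self, n_inputs, n_hidden, n_moves, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.trunk = init(n_hidden, n_inputs)       # shared layers
        self.policy_head = init(n_moves, n_hidden)  # one score per move
        self.value_head = init(1, n_hidden)         # one scalar evaluation

    def forward(self, board):
        # Shared features are computed once and reused by both heads.
        hidden = [max(0.0, h) for h in linear(self.trunk, board)]
        policy = softmax(linear(self.policy_head, hidden))
        value = math.tanh(linear(self.value_head, hidden)[0])
        return policy, value

net = TwoHeadedNet(n_inputs=9, n_hidden=16, n_moves=9)
board = [0.0] * 4 + [1.0] + [0.0] * 4  # a single stone in the center
policy, value = net.forward(board)
print(len(policy), round(sum(policy), 6), value)
```

Because both heads train through the same trunk, gradients from move prediction and position evaluation shape the same features, which is the efficiency the paragraph above describes.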

Go isn't just a game in East Asia—it's culture, art, and philosophy. Professional Go players dedicate their lives to the game, starting training in childhood. The top players command celebrity status. Matches are broadcast on television. Critical games get analyzed for decades.

When AlphaGo defeated Lee Sedol, it wasn't comparable to a chess computer winning. It was more like an AI writing better poetry than Shakespeare or composing more moving symphonies than Mozart. Go represented the pinnacle of human strategic thought, the game where intuition and creativity supposedly mattered more than raw calculation.

The reaction mixed shock, fascination, and for some, genuine grief. Lee Sedol retired from professional Go in 2019, partly because AI had changed what the game meant to him. "With the debut of AI in Go games," he said, "I've realized that I'm not at the top even if I become the number one through frantic efforts."

But the story isn't just about displacement. Many professionals describe AlphaGo as liberating. For centuries, Go strategy was passed down through rigid patterns and established theory. Students learned conventional moves because that's what masters taught. AlphaGo demonstrated that many "fundamental" principles were just conventions—effective but not optimal.

Young players especially embraced this freedom. They experimented with unorthodox openings, questioned traditional responses, and found that some moves dismissed as weak were actually undervalued. The game became more creative, more diverse, more exploratory. Professional commentary changed, with analysts explicitly discussing "human moves" versus "AI moves."

For all its achievements, AlphaGo had boundaries. It mastered perfect-information games—games where both players see everything and randomness plays no role. Poker, with hidden cards and bluffing, requires different techniques. The real world, with imperfect information and continuously changing rules, demands yet more innovation.

AlphaGo also consumed enormous computational resources. The system that played Lee Sedol ran on hardware worth millions. Subsequent versions became more efficient, but we're still far from pocket-sized superhuman Go players, let alone general problem solvers.

And there's a deeper question about what these systems understand. AlphaGo can play brilliant Go, but does it understand the game the way humans do? Can it explain its strategy in words? Does it appreciate beauty in a well-played game? These philosophical questions matter as we contemplate what machine intelligence means.

Current research explores several frontiers. Multi-agent reinforcement learning investigates how algorithms can learn to cooperate or compete with multiple parties. Transfer learning asks whether systems can apply knowledge from one domain to another, the way humans do. Efficient learning research pursues algorithms that need less data and computation.

DeepMind's follow-up work includes MuZero, which reached top-level play without being given the rules, learning its own model of each game's dynamics from experience, and Agent57, which outperformed the human benchmark on all 57 games in the standard Atari suite, including ones that resisted previous approaches. Each advance pushes the boundary of what reinforcement learning can handle.

The most profound lesson from AlphaGo isn't about games—it's about the nature of expertise and learning itself.

For decades, AI researchers assumed that human expertise was the ceiling. You'd train systems on human data, try to capture human intuition in code, and hope to approximate human performance. AlphaGo Zero demolished that assumption. Not only could machines learn without human data, they could exceed what human knowledge makes possible.

This has huge implications. In domains where we lack expert human data—predicting molecular interactions, optimizing supply chains, managing complex ecosystems—we might not need experts to train AI. We might just need good simulation environments and reward signals. The AI can discover solutions we've never imagined.

It also suggests that many fields we consider mature might have undiscovered territory. If Go, studied intensively for 2,500 years, still had better strategies waiting to be found, what about economics? Medicine? Engineering? How many "established principles" are really just the best humans have discovered, not the best that's discoverable?

There's a humbling and exciting aspect to this. We're not teaching machines to think like us. We're creating systems that learn differently—through massive parallelism, perfect memory, and tireless iteration—and discover their own paths to competence.

If you're wondering how these techniques might affect your industry or career, the pattern is becoming clear. Reinforcement learning excels when:

1. The problem has clear metrics for success (winning games, energy efficiency, profit)

2. Trial and error is possible, especially in simulation

3. The solution space is too large for human analysis or traditional optimization

4. Long-term strategy matters more than immediate optimization

These conditions appear in manufacturing (optimizing production schedules), healthcare (personalized treatment plans), finance (portfolio management), logistics (routing and scheduling), and countless other domains.

The skills that matter in this landscape aren't about competing with AI at calculation or pattern matching. They're about defining problems well, interpreting results, and understanding contexts that machines miss. The most valuable professionals will be those who can collaborate with AI systems—using them as tools that amplify human judgment rather than replacements for it.

For developers and engineers, understanding reinforcement learning is becoming as fundamental as understanding databases or networking. You don't need to derive the mathematical foundations, but you should understand when and how to apply these techniques, just as earlier generations learned when to use caching or parallelism.

Seven years after AlphaGo defeated Lee Sedol, the techniques pioneered for that match have become foundational. Every major tech company now invests heavily in reinforcement learning research. The approach has moved from academic curiosity to production systems handling billions of dollars in value.

But we're still in early days. AlphaGo conquered a specific, bounded domain. General intelligence—the ability to learn diverse tasks, transfer knowledge across domains, adapt to novel situations, and understand context the way humans do—remains elusive. Current systems are narrow experts, not broad thinkers.

The next frontiers involve scaling these techniques to messier domains: partial information, multiple agents, continuous learning, and real-world physics. Researchers are exploring how reinforcement learning can work with smaller amounts of data, how to make training more stable, and how to align AI objectives with human values.

Some speculate that general artificial intelligence will emerge from scaling up these methods. Others argue we need fundamental algorithmic breakthroughs. The honest answer is we don't know—but AlphaGo proved that our intuitions about what's possible are often dramatically wrong.

What we do know is that the barrier between "human-only" and "machine-achievable" keeps shifting. Tasks that seemed to require human intuition, creativity, and judgment fall to algorithms with increasing regularity. Not through brute force calculation, but through learning systems that discover patterns we never noticed and strategies we never imagined.

The game of Go still captivates millions. Human players still compete, still innovate, still find joy in a well-placed stone. AlphaGo didn't make the game obsolete—it revealed depths we hadn't seen. That might be the pattern for AI more broadly. Not replacing human endeavor, but expanding the frontier of what's possible and teaching us to see our own domains with fresh eyes.

The machine learned to play. In doing so, it taught us how much we still have to learn.

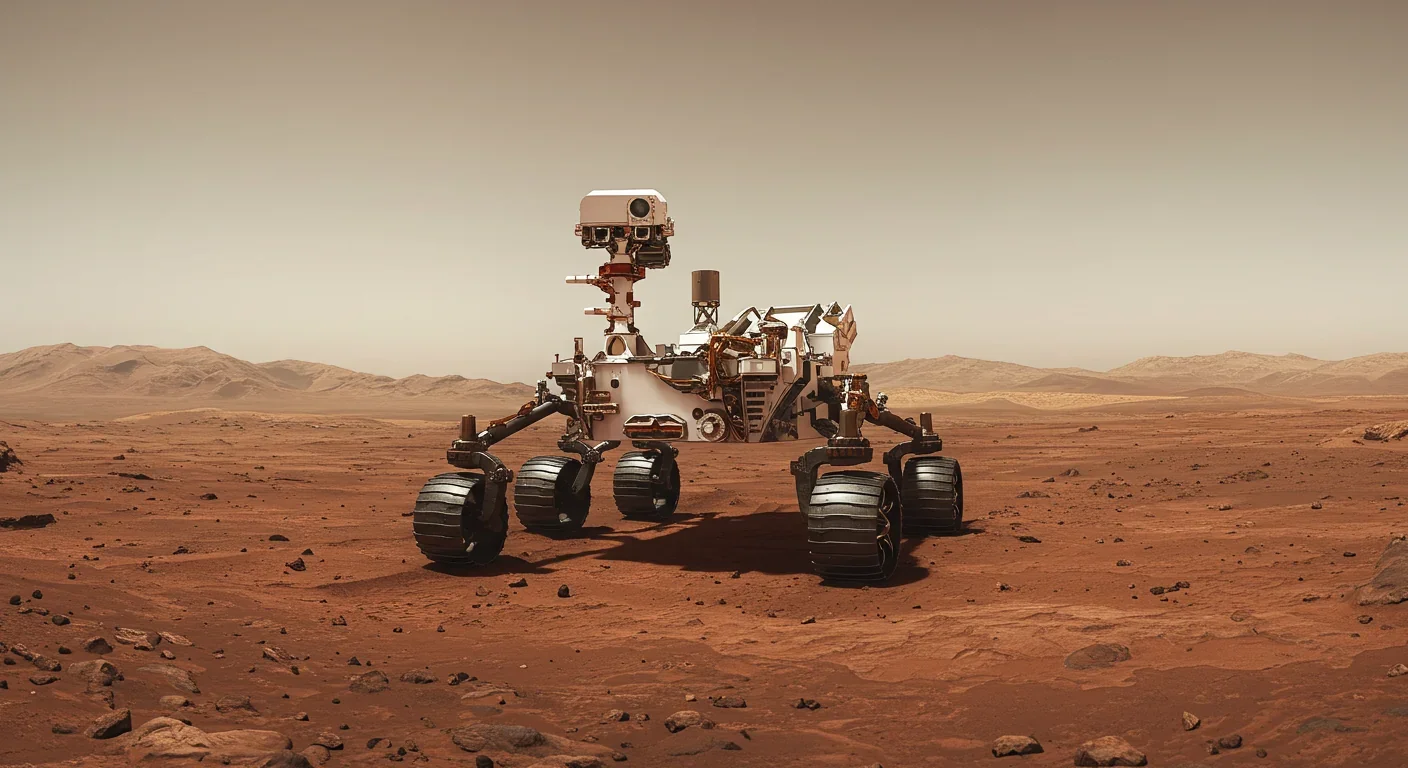
