Why Your Brain Sees Gods and Ghosts in Random Events

TL;DR: The uncanny valley—the unsettling feeling triggered by almost-human robots, CGI characters, and digital avatars—isn't just an aesthetic problem. It's rooted in evolutionary threat detection, brain fear responses, and violated social expectations. As AI-powered digital humans become ubiquitous in gaming, virtual meetings, healthcare, and companionship, understanding why near-perfect realism triggers discomfort is critical. Designers can navigate the valley through strategic stylization, fluid motion, cultural sensitivity, and ethical transparency—or risk creating technologies that erode trust and connection. The future hinges on whether we can cross the valley without losing what makes humanity worth simulating.

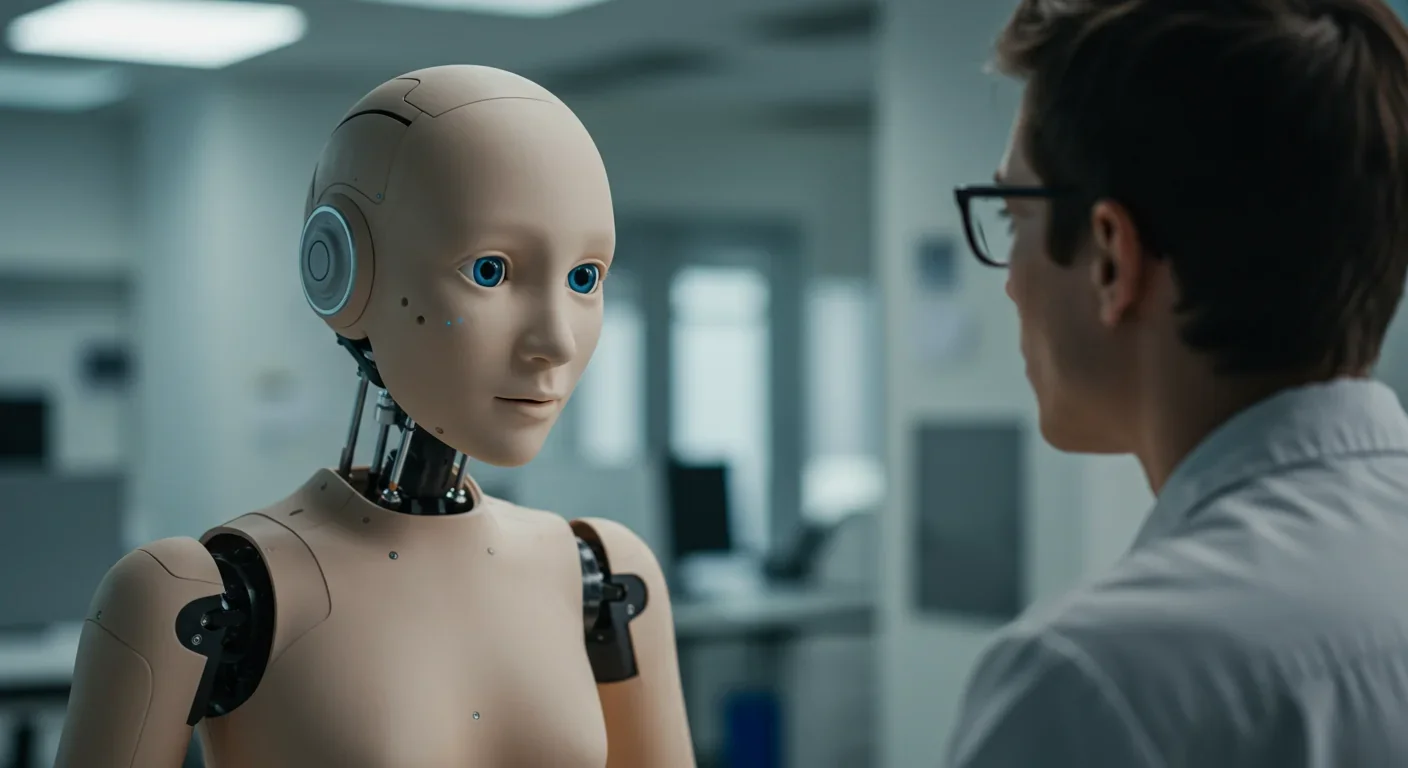

By 2030, it's estimated that more than half of your daily interactions may involve AI-powered digital humans—virtual assistants with lifelike faces, customer service avatars that mirror human emotion, and video game characters indistinguishable from real actors. But there's a catch that roboticists, animators, and AI developers have struggled with for decades: making artificial beings look almost human can trigger a profound psychological discomfort that researchers call the "uncanny valley." This phenomenon—where a nearly perfect simulation of humanity suddenly feels deeply wrong—isn't just an aesthetic quirk. It's a window into how our brains process reality, detect threats, and maintain social bonds. Understanding why we shiver at the sight of humanoid robots or CGI characters that are "too realistic" is becoming critical as designers race to create companions, coworkers, and entertainers from silicon and code.

In 1970, Japanese robotics engineer Masahiro Mori introduced a concept that would reshape how we think about artificial beings. Mori proposed that as robots become more human-like, our emotional response to them grows increasingly positive—until a threshold is crossed. At that point, when a robot or digital character is almost, but not quite, indistinguishable from a living person, affinity plummets into what he graphically depicted as a valley: the uncanny valley.

Mori's original graph charted "human likeness" on the horizontal axis and "affinity" on the vertical. A simple industrial robot arm sits comfortably at low affinity and low human likeness. A stuffed animal or cartoon character scores higher on both. But as you approach near-perfect human realism—think eerily lifelike androids, hyperrealistic CGI faces, or VR avatars with skin textures and eye movements meticulously rendered—affinity doesn't continue to rise. Instead, it crashes. The almost-human becomes unsettling, even repulsive. Only when realism becomes truly indistinguishable from a living human does affinity recover, climbing out of the valley.
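Mori drew the curve by hand and gave no equation for it, so the following is only a toy illustration of its shape: a steadily rising trend with a narrow dip subtracted near the almost-human end. The function name `toy_affinity` and every constant (where the dip sits, how wide and deep it is) are assumptions chosen for readability, not values taken from Mori or from later studies.

```python
import math


def toy_affinity(likeness: float,
                 dip_center: float = 0.85,   # assumed position of the valley
                 dip_width: float = 0.06,    # assumed width of the valley
                 dip_depth: float = 1.5) -> float:  # assumed depth of the valley
    """Illustrative affinity score for a human-likeness value in [0, 1]."""
    if not 0.0 <= likeness <= 1.0:
        raise ValueError("likeness must be between 0 and 1")
    # Affinity rises with likeness...
    rising_trend = likeness
    # ...except near dip_center, where a Gaussian-shaped dip is subtracted.
    valley = dip_depth * math.exp(
        -((likeness - dip_center) ** 2) / (2 * dip_width ** 2)
    )
    return rising_trend - valley


# Hypothetical likeness values for the examples named in the text.
examples = [
    ("industrial robot arm", 0.10),
    ("stuffed animal", 0.50),
    ("near-human android", 0.85),
    ("living human", 1.00),
]
for label, likeness in examples:
    print(f"{label:22s} likeness={likeness:.2f}  affinity={toy_affinity(likeness):+.2f}")
```

With these made-up parameters the near-human android is the only example that scores below zero, while the living human scores highest, which is the qualitative pattern the graph describes.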

Mori's hypothesis matters not only because it named a feeling millions have experienced while watching a CGI character in a blockbuster film or encountering a humanoid robot at a tech expo. The phenomenon has since drawn support from brain imaging, psychological studies, and real-world user reactions across industries, from gaming to virtual meetings to prosthetic design. Understanding the uncanny valley is no longer optional for anyone building the next generation of human-computer interaction.

The uncanny valley isn't new—it's as old as humanity's attempts to recreate itself. Ancient Greek myths told of Pygmalion's ivory statue brought to life, evoking both wonder and unease. In the 18th century, intricate automata—mechanical dolls that could write, play music, or mimic breathing—drew crowds but also whispered fears of soulless imitation. These creations sat at the edge of human likeness, oscillating between marvel and dread.

The 20th century accelerated this trajectory. Early prosthetic limbs were purely functional—hook hands and wooden legs that made no attempt to look real. But as materials science advanced, prosthetics incorporated skin-like coverings, articulated fingers, and realistic coloring. Some users reported a strange discomfort: the more lifelike the prosthetic appeared, the more its subtle wrongness—immobile joints, unnatural textures—triggered aversion rather than acceptance. This was the uncanny valley manifesting in medical technology long before Mori gave it a name.

In cinema, the valley yawned wide with early experiments in motion capture and CGI. The 2004 film The Polar Express became infamous for its dead-eyed, waxy characters that children found creepy rather than charming. Critics coined the term "Polar Express syndrome" to describe the revulsion audiences felt toward these near-humans. By contrast, fully stylized animated films like Toy Story avoided the valley entirely by embracing cartoonish abstraction—characters that were clearly toys, not people.

These historical stumbles taught designers a critical lesson: the path to realism isn't linear. There's a treacherous middle ground where the pursuit of human likeness backfires, and the cost is measured in user rejection, box office disappointment, and eroded trust in technology. The question became: how do we navigate this valley—or avoid it altogether?

Why does the almost-human trigger such visceral discomfort? Researchers have proposed multiple, overlapping explanations rooted in evolutionary psychology, neuroscience, and social cognition.

Threat Detection and Pathogen Avoidance: One leading theory suggests that the uncanny valley response evolved as a survival mechanism. Throughout human history, detecting subtle signs of illness, death, or deception was critical. A face that looks human but exhibits unnatural pallor, jerky movements, or empty stares might signal disease or a corpse. Our brains evolved to flag these anomalies as threats, triggering avoidance. When a robot or CGI character lands in that almost-human zone, our ancient threat-detection circuits fire, interpreting the mismatch as dangerous.

Brain imaging studies support this. Research by Rosenthal-von der Pütten et al. (2019) using fMRI found that as human likeness increases, activity in the temporoparietal junction (TPJ), a region involved in processing social cues and theory of mind, rises linearly. But the amygdala, often described as the brain's fear center, shows a different pattern: heightened activation when artifacts become too lifelike. This suggests that our brains simultaneously try to engage socially with near-human entities while triggering fear responses when subtle wrongness is detected.

Death Salience and Mortality Reminders: Mori himself hinted at another dimension: the uncanny valley may function as a death-salience cue. Encountering something that looks alive but isn't—or looks dead but moves—can remind us of our own mortality. This existential discomfort amplifies the emotional response, pushing near-human artifacts deep into the valley. It's why corpses, mannequins, and certain wax figures can evoke profound unease even when stationary. Adding movement to an already creepy object, Mori predicted, only amplifies its peculiarity—a prediction confirmed by studies showing that animated near-human faces are rated as more disturbing than static ones.

Violated Expectations and Cognitive Dissonance: Another explanation centers on violated expectations. When we perceive something as human, we automatically invoke a rich mental model: expectations about how it will move, emote, and respond. Near-human entities violate these expectations in subtle ways—an avatar's eyes don't track quite right, a robot's skin doesn't compress naturally, a CGI character's lip sync is milliseconds off. These micro-violations create cognitive dissonance, a mental friction that registers as discomfort. Intriguingly, expertise amplifies this effect. A study on 3D rendering found that trained observers—people skilled in visual arts or computer graphics—rated distorted, almost-real faces as more abnormal and less attractive than novices did. Familiarity with how the human face should look makes experts more sensitive to deviations, pushing them deeper into the valley.

Gaze and Social Dynamics: Even the mechanics of eye contact matter. Recent analysis of virtual meeting platforms like Zoom revealed that camera angles and screen layouts can trigger uncanny responses. Zoom's default camera close-crop places participants' faces in a mugshot-style view—overly intimate and unnatural. Meanwhile, because people look at the on-screen face rather than the camera, gaze alignment is off by several degrees, creating a disjointed "Zoom-room of direct stares" where everyone seems to be looking at you but not quite. This subtle misalignment mimics the eerie feeling of interacting with an android whose eyes don't track yours, amplifying discomfort during what should be natural social interaction.
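To see how "several degrees" can arise, here is a back-of-the-envelope sketch of the angle between the webcam and the point on screen the speaker is actually looking at. The function name and the example measurements (a face displayed 10 cm below the camera, viewed from 60 cm away) are illustrative assumptions, not figures from the analysis described above.

```python
import math


def gaze_offset_degrees(camera_to_face_cm: float, viewing_distance_cm: float) -> float:
    """Angle between looking at the camera and looking at the on-screen face.

    camera_to_face_cm: distance on the screen plane from the camera lens to
        the eyes of the displayed face (assumed measurement).
    viewing_distance_cm: distance from the viewer's eyes to the screen.
    """
    if viewing_distance_cm <= 0:
        raise ValueError("viewing distance must be positive")
    return math.degrees(math.atan2(camera_to_face_cm, viewing_distance_cm))


# Hypothetical laptop setup: face shown 10 cm below the lens, viewer 60 cm away.
offset = gaze_offset_degrees(10, 60)
print(f"{offset:.1f} degrees")  # prints "9.5 degrees"
```

Under these assumptions, shrinking the on-screen gap between camera and face is what reduces the offset, which is why camera placement and window layout matter.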

The uncanny valley isn't confined to research labs or sci-fi films—it's reshaping industries worth trillions of dollars.

Robotics and Companion AI: Social robots designed for elder care, education, and companionship face the valley head-on. Developers must decide: should robots look human, or embrace machine-like aesthetics? Japan's Hiroshi Ishiguro, creator of hyperrealistic androids like Geminoid and the Ito Mancio android unveiled at Expo 2025 Osaka, intentionally pushes into the valley to study how humans respond. His androids feature silicone skin, realistic hair, and lifelike facial movements. Yet public reactions remain mixed—some visitors are fascinated, others deeply unsettled. Ishiguro argues that only by confronting the valley can we understand how to design robots that will eventually be "accessible to everybody."

Meanwhile, studies on robot height, gaze, and movement reveal subtle design factors that influence compliance and comfort. One experiment found that a robot's height significantly impacted how many questions participants agreed to answer—shorter robots (perceived as less threatening) elicited more cooperation. This suggests that physical design choices—not just facial realism—modulate uncanny valley effects.

Gaming and Virtual Worlds: Video game developers have pioneered strategies to avoid or exploit the valley. NVIDIA's ACE (Avatar Cloud Engine) suite uses generative AI to create digital humans with realistic speech, facial animation, and behavior. By combining automatic speech recognition (Riva), neural animation (Audio2Face), and cloud-based rendering, ACE enables non-player characters (NPCs) that respond naturally in real time. Developers like Convai leverage ACE to build lifelike NPCs with "low latency response times and high fidelity natural animation," aiming to deliver characters that sit comfortably beyond the valley.

Yet even cutting-edge tools face challenges. As one designer noted after testing multiple conversational avatars, results ranged from "jaw-dropping and convincing" to "clunky and robotic." The difference often comes down to motion fluidity, voice modulation, and micro-expressions—tiny details that, when wrong, plunge characters into the valley.

Film and Animation: Hollywood learned its lesson from The Polar Express. Subsequent films employing performance capture, like Avatar (2009), succeeded by keeping characters distinctly non-human (blue-skinned Na'vi) while capturing nuanced human performance. By contrast, films that pursued strict photorealism, such as The Lion King (2019) with its highly realistic animal faces, drew criticism for feeling emotionally flat: a valley-like effect where realism came at the cost of expressive warmth.

Virtual Meetings and Telepresence: The COVID-19 pandemic thrust millions into prolonged virtual interaction, and the uncanny valley emerged in unexpected ways. Platforms like Zoom created new forms of social discomfort: continuous direct gaze, abrupt transitions into breakout rooms, and the unnerving experience of seeing your own face for hours. Apple's Vision Pro headset attempted to mitigate "tech encroachment" by projecting the wearer's eyes outward—a feature widely received as dystopian and uncanny. These examples illustrate that the valley isn't limited to robots or CGI; it infiltrates any technology attempting to mediate human presence.

AI Companions and Attachment: In 2024, 16 of the top 100 AI apps focused on companionship. Users reported feeling less lonely and receiving emotional support from chatbots and avatars they perceived as "real persons." Yet researchers warn of a double-edged dynamic: anthropomorphism increases attachment up to a point, but when AI responses become too human-like yet occasionally unnatural, the attachment system breaks down. This disruption, which occurs when users expect human empathy but receive algorithmic responses, can trigger grief, disappointment, and uncanny discomfort. Mark Zuckerberg's 2025 suggestion that AI friends could fill social needs was met with backlash, highlighting societal unease with replacing human connection with near-human simulations.

Successfully crossing the uncanny valley unlocks transformative possibilities.

Enhanced Communication: Digital humans that feel genuinely present can revolutionize telemedicine, remote education, and global collaboration. Imagine a virtual doctor whose empathetic facial expressions put anxious patients at ease, or a language tutor whose natural gestures and expressions make learning intuitive.

Accessibility and Inclusion: Realistic avatars can give voice and presence to individuals with disabilities. Custom digital humans can represent users in virtual spaces, enabling participation in social and professional environments previously inaccessible.

Creative and Economic Opportunities: Generative AI-powered digital actors can reduce production costs, enable personalized storytelling, and create interactive narratives where characters remember and respond to player choices. As Kylan Gibbs, CEO of Inworld AI, noted, combining NVIDIA ACE with the Inworld AI Engine "enables developers to create digital characters that can drive dynamic narratives, opening new possibilities for how gamers can decipher, deduce and play."

Emotional and Psychological Support: Thoughtfully designed AI companions can provide consistent, non-judgmental support for individuals experiencing loneliness, anxiety, or social challenges. When designed ethically—avoiding manipulation and maintaining transparency—these companions can complement human relationships rather than replace them.

Yet the uncanny valley also reveals profound risks.

Manipulation and Deception: Hyperrealistic avatars can be weaponized for misinformation, deepfakes, and social engineering. Grok avatars, for example, use "subtle voice modulation, expressive facial cues, and even flirtation" to draw users into longer interactions. While this gamification boosts engagement, it raises ethical concerns about emotional manipulation—designing avatars that exploit attachment vulnerabilities for profit or influence.

Erosion of Trust: As digital humans become indistinguishable from real ones, distinguishing authentic human interaction from AI simulation grows harder. This erosion of trust could destabilize social norms, online discourse, and even legal proceedings where video evidence becomes unreliable.

Inequality and Access: Advanced digital human technology requires significant computational resources. NVIDIA's ACE microservices run on high-end GPUs and cloud infrastructure, accessible primarily to well-funded studios and enterprises. This risks creating a digital divide where only wealthy organizations can deploy convincing, trustworthy avatars, while lower-budget creators produce uncanny, off-putting alternatives.

Psychological Harm: Over-reliance on AI companions can disrupt real-world relationships. When users form attachments to AI entities, the abrupt termination of service—whether due to subscription lapses, platform shutdowns, or algorithm changes—can trigger grief akin to losing a loved one. Furthermore, if avatars fail to maintain consistent, human-like behavior, the resulting dissonance can amplify feelings of loneliness rather than alleviate them.

Cultural Homogenization: Most digital human research originates in Western or East Asian contexts. Cultural differences in emotional expression, eye contact norms, and social cues mean that avatars designed for one culture may trigger uncanny responses in another. The debate at Expo 2025 Osaka involving Italian, Asian, and Japanese perspectives on the Ito Mancio android underscored this: different cultures hold divergent expectations about robot-human relationships, divine analogies, and ethical boundaries. Designers must resist one-size-fits-all approaches or risk alienating global audiences.

The uncanny valley is not experienced uniformly across cultures. In Japan, where Shinto beliefs sometimes attribute spirits to inanimate objects, androids may evoke different emotional responses than in Western societies rooted in Judeo-Christian distinctions between animate and inanimate. At the Italy Pavilion during Expo 2025 Osaka, speakers including Rev. Soeda Ryusho and Professor Giorgio Metta discussed whether robots could possess souls, how humans and machines might coexist, and the ethical visions shaping different societies.

These dialogues reveal that navigating the uncanny valley requires more than technical prowess—it demands cross-cultural sensitivity. A robot designed to comfort elderly Japanese patients may need different facial expressiveness, vocal tone, and physical proximity than one serving European or American users. Emerging research hints that cultural exposure to robots and digital media may shift valley thresholds: societies with early, widespread adoption of humanoid technology may develop greater tolerance for near-human entities.

International cooperation is essential. Standards for transparency, consent, and ethical AI deployment must emerge from inclusive conversations, not Silicon Valley boardrooms alone. Otherwise, the technology risks exacerbating global inequalities and cultural tensions.

Designers, engineers, and product developers can employ evidence-based strategies to minimize uncanny valley effects.

Embrace Strategic Stylization: Rather than chasing photorealism, consider stylized designs that maintain expressive warmth. Pixar and Nintendo have mastered this—characters with exaggerated features that feel emotionally authentic without triggering realism-based discomfort.

Prioritize Motion Quality Over Skin Texture: Studies show that jerky, unnatural movement amplifies uncanniness more than imperfect textures. Invest in fluid animation, realistic eye tracking, and natural gestures. NVIDIA's Audio2Face and similar tools can generate facial animations that sync seamlessly with speech, reducing micro-violations of expectation.

Manage Gaze and Eye Contact: Eyes are the most scrutinized feature. Ensure avatars track the user's gaze accurately, blink naturally, and exhibit micro-movements (pupil dilation, subtle shifts) that signal life. In video calls, advocate for camera placements and interface designs that align gaze with screen position.
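One simple way to avoid a metronome-like blink is to randomize the gap between blinks. The sketch below does that; the function name and the default timings (roughly one blink every four seconds, varied by a second or two) are placeholder assumptions that would need tuning against real reference footage.

```python
import random
from typing import List, Optional


def blink_times(duration_s: float,
                mean_interval_s: float = 4.0,   # assumed average gap between blinks
                jitter_s: float = 1.5,          # assumed spread around that average
                min_interval_s: float = 1.0,    # never blink more often than this
                seed: Optional[int] = None) -> List[float]:
    """Return timestamps (in seconds) at which an avatar should blink."""
    rng = random.Random(seed)  # a seed makes the schedule reproducible for testing
    times: List[float] = []
    t = 0.0
    while True:
        # Draw a gap from a normal distribution, clamped to the minimum interval.
        gap = max(min_interval_s, rng.gauss(mean_interval_s, jitter_s))
        t += gap
        if t >= duration_s:
            return times
        times.append(t)


schedule = blink_times(duration_s=30.0, seed=42)
print([round(t, 2) for t in schedule])
```

The same pattern (a target average plus bounded randomness) could be applied to other micro-movements such as small gaze shifts, though each would need its own timing assumptions.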

Leverage Incremental Exposure: Repeated exposure to near-human entities can reduce discomfort over time. Introduce users gradually to more realistic avatars, allowing their perceptual systems to recalibrate. This "habituation" effect suggests that the valley may erode as digital humans become ubiquitous.

Balance Realism with Function: Match the avatar's appearance to its role. A customer service bot doesn't need hyperrealistic skin; clarity and friendliness suffice. Reserve high realism for contexts—like virtual actors or telepresence—where presence and emotional connection are paramount.

Incorporate User Control: Let users adjust avatar realism. Some prefer cartoon-like simplicity; others crave lifelike detail. Modular systems like NVIDIA ACE's microservice architecture enable rapid iteration, allowing developers to test and tune realism levels.
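The role-matching and user-control suggestions above can be combined into one small rule: start from a default that fits the avatar's job, and let an explicit user preference override it. The sketch below is a hypothetical settings model; the `Realism` levels, the role names, and the `AvatarSettings` class are all invented for illustration and do not correspond to any NVIDIA ACE interface.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Realism(Enum):
    STYLIZED = "stylized"
    BALANCED = "balanced"
    LIFELIKE = "lifelike"


# Assumed defaults: simple roles get stylized avatars, presence-heavy roles get realism.
ROLE_DEFAULTS = {
    "customer_service": Realism.STYLIZED,
    "telepresence": Realism.LIFELIKE,
    "virtual_actor": Realism.LIFELIKE,
}


@dataclass(frozen=True)
class AvatarSettings:
    role: str
    user_choice: Optional[Realism] = None  # None means "no preference set"

    def effective_realism(self) -> Realism:
        """User preference wins; otherwise fall back to the role default."""
        if self.user_choice is not None:
            return self.user_choice
        return ROLE_DEFAULTS.get(self.role, Realism.BALANCED)


print(AvatarSettings("customer_service").effective_realism().value)
print(AvatarSettings("customer_service", Realism.LIFELIKE).effective_realism().value)
```

Keeping the preference separate from the role default means a product team can change defaults later without overwriting choices users have already made.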

Ethical Transparency: Clearly signal when interactions involve AI, not humans. Avoid designing avatars to deceive users into believing they're engaging with real people. Trust erodes rapidly when users discover manipulation.

Cultural Adaptation: Test avatars across diverse user groups. Facial expressions, vocal cadence, and social cues vary widely. An avatar that feels natural in Tokyo may feel uncanny in Rome—and vice versa.

Emerging technologies promise to reshape—or dissolve—the uncanny valley.

Photorealistic AI and Real-Time Rendering: Advances in neural rendering and generative models are closing the realism gap. NVIDIA's RTX ray tracing and AI-driven rendering deliver lifelike lighting, reflections, and textures in real time. As computational power grows, the distinction between digital and real may vanish, lifting digital humans out of the valley.

Neural Interfaces and Brain-Computer Integration: Future interfaces that communicate directly with neural pathways could bypass visual and auditory uncanniness altogether, transmitting presence and emotion without relying on fragile, near-human appearances.

Adaptive AI Personalities: AI that learns individual user preferences, adapting its behavior, appearance, and communication style in real time, may sidestep the valley by optimizing for each person's comfort zone.

Societal Normalization: Just as audiences who once recoiled at early moving images now take CGI for granted, prolonged exposure to digital humans may normalize near-human entities. Within the next decade, you'll likely interact daily with AI assistants, virtual coworkers, and digital influencers, with each encounter training your brain to accept them as part of the social landscape.

Yet challenges remain. Even if technology achieves perfect visual realism, the valley may persist in subtler dimensions: conversational timing, emotional authenticity, or the indefinable "spark" of consciousness. Philosophers and cognitive scientists debate whether any simulation, no matter how perfect, can ever fully escape the valley without genuine sentience.

The uncanny valley is more than a quirk of perception—it's a mirror reflecting our deepest anxieties about identity, mortality, and what it means to be human. As we race to populate our world with digital companions, robotic caregivers, and AI-generated influencers, understanding why we shiver at the almost-human becomes essential.

Designers who respect the valley's lessons—who prioritize fluid motion, cultural sensitivity, ethical transparency, and user agency—will craft technologies that enhance human flourishing. Those who ignore it risk creating a future where distrust and discomfort fracture the very connections these technologies promise to strengthen.

The valley isn't a barrier to overcome through brute-force realism. It's a signal from our evolutionary past, a reminder that humanity is defined not by flawless appearance but by the warmth, unpredictability, and authentic presence that no algorithm has yet replicated. The question isn't whether we can escape the uncanny valley—it's whether, in crossing it, we'll remember what made the human side worth reaching for in the first place.

Within the next decade, you'll decide whether to embrace digital humans as collaborators, companions, or cautionary tales. The choice begins now—with every avatar you design, every interaction you code, and every moment you pause to ask: does this bring us closer together, or does it leave us shivering on the edge of something almost, but not quite, human?
