TL;DR: Algorithms that make decisions about jobs, loans, and justice are finally facing mandatory audits. From New York City to the EU, regulators are requiring companies to test their systems for bias, backed by fines that can reach billions of dollars for the largest firms.

By 2027, AI systems used in the European Union to hire you, approve your mortgage, or help a court decide your case will face mandatory scrutiny. What started as scattered activist campaigns has become a coordinated global push to crack open the algorithmic black boxes that increasingly shape people's lives. From New York to Brussels, a diverse coalition of regulators, researchers, and civil rights groups is no longer just asking for transparency. It is demanding it, backed by regulations with teeth sharp enough to make tech giants nervous.

The stakes couldn't be higher. Algorithms already make consequential decisions about billions of people, often without oversight or accountability. But the algorithm audit movement is forcing a fundamental shift: the era of "trust us, it's just math" is ending.

Amazon's experimental AI recruiting tool is one cautionary tale. The company's own engineers found that the system discriminated against women. It had been trained on résumés submitted over a 10-year period, most of them from men, and it learned to penalize applications containing words like "women's." Amazon scrapped the project, and the bias became public only through a 2018 Reuters report. How many similar systems are running right now, unchecked?

The COMPAS recidivism prediction algorithm provides another cautionary tale. A ProPublica investigation revealed that Black defendants were twice as likely as white defendants to be incorrectly classified as high risk for violent reoffending. The tool did not take race as an input, yet its errors fell unevenly along racial lines. Meanwhile, its scores were helping determine how long people would spend behind bars.

Facial analysis technology presents perhaps the most visceral example. Joy Buolamwini and Timnit Gebru's landmark Gender Shades study examined commercial gender classification systems. It found error rates as high as 35% for darker-skinned women, compared with less than 1% for lighter-skinned men. Misidentifications by face recognition systems have since led to multiple wrongful arrests of Black men, upending their lives. A commonly cited cause of such gaps is training data that contains disproportionately few images of people who look like them.

In content moderation, Meta's automated systems have shown troubling patterns. Cases reviewed by Meta's Oversight Board reveal both over-enforcement and under-enforcement, with uneven results across languages and countries. An educational post about breast cancer awareness was automatically removed because the system couldn't recognize the Portuguese text overlaid on the image. Meanwhile, deepfake intimate images of an Indian public figure weren't added to Meta's removal database until the Board specifically asked why.

These aren't isolated glitches. They're symptoms of a systemic problem: algorithms trained on biased data, designed without diverse input, and deployed without adequate oversight.

Each discovery validates what the audit movement has argued from the start—you can't just trust the math.

Algorithm audits combine technical analysis, statistical testing, and domain expertise to evaluate whether automated systems produce fair outcomes. Auditors employ several methodologies, each designed to reveal different types of problems.

Discrimination testing involves feeding the algorithm carefully constructed test cases to see if it treats similar candidates differently based on protected characteristics. Auditors change only one variable at a time, for example swapping a name commonly associated with Black applicants for one commonly associated with white applicants. This lets them isolate discriminatory effects.
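A paired (counterfactual) test like this can be sketched in a few lines of Python. This is a minimal illustration, not any auditor's actual tooling. `paired_test` and `toy_screener` are hypothetical names, and the scorer is a deliberately biased stand-in for a vendor model. The two names are simply placeholders for the kind of swap an auditor might make.

```python
from typing import Callable, Dict, List

Applicant = Dict[str, str]

def paired_test(score: Callable[[Applicant], float], applicants: List[Applicant],
                field: str, value_a: str, value_b: str) -> List[float]:
    """Score each applicant twice, changing only `field`; return the score gaps."""
    gaps = []
    for base in applicants:
        version_a = {**base, field: value_a}  # identical except for one field
        version_b = {**base, field: value_b}
        gaps.append(score(version_a) - score(version_b))
    return gaps

def toy_screener(app: Applicant) -> float:
    """Hypothetical, deliberately biased scorer standing in for a vendor model."""
    points = float(app["years_experience"])
    if app["first_name"] == "Emily":
        points += 1.0  # the hidden bias the audit should surface
    return points

applicants = [{"first_name": "", "years_experience": "3"},
              {"first_name": "", "years_experience": "7"}]
gaps = paired_test(toy_screener, applicants, "first_name", "Emily", "Lakisha")
print(gaps)  # [1.0, 1.0]
```

A real audit would run many such pairs and check whether the average gap differs from zero by more than chance.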

Disparate impact analysis examines outcomes across demographic groups. If an AI hiring tool rejects women at twice the rate it rejects men with equivalent qualifications, that's evidence of disparate impact, which can violate civil rights law even if the algorithm never explicitly considers gender.
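The arithmetic behind disparate impact analysis is simple. The sketch below computes each group's selection rate and divides it by the highest group's rate. It flags any ratio below 0.8, the "four-fifths" rule of thumb from U.S. federal employee selection guidelines. The counts are invented. This raw comparison does not control for qualifications, so an auditor would typically stratify by job-relevant factors before drawing conclusions.

```python
from typing import Dict, Tuple

def impact_ratios(counts: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """counts maps group -> (number selected, number of applicants)."""
    rates = {group: selected / total for group, (selected, total) in counts.items()}
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}

# Invented numbers: women are rejected at twice the rate of men (80% vs 40%).
counts = {"men": (60, 100), "women": (20, 100)}
ratios = impact_ratios(counts)
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(flagged)  # ['women']
```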

Explainability techniques try to peer inside the black box. Tools like LIME and SHAP help auditors understand which features most influence an algorithm's decisions. If a lending algorithm heavily weights zip code—which correlates with race—that's a red flag for proxy discrimination, where neutral-seeming variables encode protected characteristics.
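LIME and SHAP have their own libraries and APIs. Rather than reproduce them, the sketch below uses a simpler model-agnostic technique, permutation importance, to convey the same idea. It shuffles one feature at a time and measures how often the model's decisions change. The lending model, its weights, and the zip code flag are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Model = Callable[[Row], int]

def accuracy(model: Model, X: List[Row], y: Sequence[int]) -> float:
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)

def permutation_importance(model: Model, X: List[Row], y: Sequence[int],
                           repeats: int = 20, seed: int = 0) -> List[float]:
    """Average drop in agreement with y when each feature column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and each row
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(base - accuracy(model, shuffled, y))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical lending model; features are [income scaled 0-1, zip_flag, unused noise].
def toy_lender(x: Row) -> int:
    return int(0.5 * x[0] + 0.5 * x[1] > 0.6)

rng = random.Random(42)
X = [[rng.random(), float(rng.random() < 0.5), rng.random()] for _ in range(500)]
y = [toy_lender(x) for x in X]  # audit the model against its own decisions
scores = permutation_importance(toy_lender, X, y)
print([round(s, 2) for s in scores])
```

Here, shuffling the zip code flag changes more decisions than shuffling income. That is the kind of pattern that would prompt a closer look for proxy discrimination. The unused feature scores exactly zero.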

Causality analysis takes this further, attempting to trace exactly how input features flow through a model to produce outcomes. Recent research frameworks apply causal inference methods to determine whether an algorithm's decisions stem from legitimate factors or impermissible proxies.
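Causal reasoning can be illustrated with a toy structural causal model. In the sketch below, every variable and coefficient is an assumption chosen for illustration. The protected attribute A shifts a neighborhood feature, and the model scores applicants using that feature but never A itself. The sketch intervenes on A, setting it to 0 or 1 for everyone, and compares average scores. This reveals the effect that flows through the proxy.

```python
import random

def mean_score_under_do(a_value: int, n: int = 10_000, seed: int = 0) -> float:
    """Average model score when the protected attribute A is set to a_value.

    Toy structural causal model (all coefficients hypothetical):
        skill    ~ N(0, 1)                    legitimate factor, independent of A
        zip_risk = 0.8 * A + N(0, 1)          proxy feature shaped by A
        score    = 1.0 * skill - 0.5 * zip_risk   the model never reads A directly
    """
    rng = random.Random(seed)  # same seed -> identical noise draws in both arms
    total = 0.0
    for _ in range(n):
        skill = rng.gauss(0.0, 1.0)
        zip_risk = 0.8 * a_value + rng.gauss(0.0, 1.0)
        total += 1.0 * skill - 0.5 * zip_risk
    return total / n

total_effect = mean_score_under_do(1) - mean_score_under_do(0)
print(round(total_effect, 6))  # -0.4
```

The model has no direct dependence on A, yet the total effect is -0.4. That is the product of the two path coefficients (0.8 × -0.5). Reusing the same seed for both interventions keeps the noise identical, so the difference isolates the effect of A.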

But here's the catch: all these techniques require access to the algorithm, its training data, and detailed documentation. That access is exactly what companies have historically refused to provide, citing trade secrets and proprietary concerns.

The audit movement doesn't have a single headquarters. It's a distributed network of researchers, nonprofits, government agencies, and advocacy groups pushing from different angles.

AI Now Institute, founded by NYU researchers, has published influential frameworks for algorithmic impact assessments. Their work laid intellectual groundwork for policies adopted by cities and states across the United States.

AlgorithmWatch, a European nonprofit, conducts public interest research. They've pushed for transparency requirements in the EU and documented how automated systems affect fundamental rights.

The European Union Agency for Fundamental Rights has produced comprehensive reports on algorithmic bias, providing evidence that informed the EU AI Act's development.

In the United States, civil rights organizations have been crucial players. The Lawyers' Committee for Civil Rights filed formal complaints against Facebook's advertising algorithms, alleging they enabled housing and employment discrimination. Those complaints led to significant policy changes and a civil rights audit of Facebook's entire platform.

Academic institutions have built specialized audit infrastructure. Northeastern University's algorithm audit research center is developing new methodologies and tools, while computer science and law schools have launched interdisciplinary programs focused on algorithmic accountability.

Professional audit firms have emerged to meet regulatory demand. Companies like BABL AI and Eticas provide commercial audit services, helping organizations comply with new laws. Their existence signals that algorithm auditing has matured from activist research project to established professional practice.

Even when auditors get access, the work is extraordinarily difficult. Modern machine learning systems are genuinely opaque, containing millions of parameters adjusted through training processes that even their creators struggle to explain.

Trade secrecy creates the most obvious barrier. Companies claim that revealing how their algorithms work would expose competitive advantages. This tension between transparency and trade protection has become a central battleground, with new AI regulations trying to balance both interests.

But even with full access, algorithmic complexity itself impedes understanding. A systematic review of audit processes found that the sheer intricacy of modern AI systems creates interpretation challenges. When an algorithm weighs thousands of features through nonlinear interactions, isolating the cause of a biased outcome becomes like tracing a single drop of water through a waterfall.

Data access poses another challenge. To properly test for discrimination, auditors need representative datasets, but privacy regulations like GDPR restrict how personal data can be shared. This creates a paradox: protecting individual privacy can make it harder to detect systematic discrimination against groups.

Proxy discrimination presents an especially insidious technical challenge. An algorithm might never explicitly consider race, yet still discriminate because it heavily weights variables that correlate with race. Zip code, name patterns, school attended—all can act as proxies. Detecting this requires sophisticated analysis that goes beyond checking whether protected characteristics appear in the model.
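One simple way to gauge proxy strength is to ask how well a feature alone predicts the protected attribute. The sketch below compares two ways of guessing each person's group: using the overall majority, and using the majority within their feature value. The zip code labels and counts are invented for illustration.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Hashable, List, Tuple

def proxy_strength(pairs: List[Tuple[Hashable, str]]) -> Tuple[float, float]:
    """pairs are (feature_value, protected_group) for each person.

    Returns (baseline_accuracy, proxy_accuracy) for guessing the group.
    """
    overall = Counter(group for _, group in pairs)
    baseline = overall.most_common(1)[0][1] / len(pairs)
    by_value: DefaultDict[Hashable, Counter] = defaultdict(Counter)
    for value, group in pairs:
        by_value[value][group] += 1
    # Guess the most common group within each feature value.
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return baseline, correct / len(pairs)

pairs = ([("zip_1", "group_x")] * 45 + [("zip_1", "group_y")] * 5
         + [("zip_2", "group_x")] * 10 + [("zip_2", "group_y")] * 40)
baseline, proxy_acc = proxy_strength(pairs)
print(baseline, proxy_acc)  # 0.55 0.85
```

Zip code lifts accuracy from 55% to 85%, so it carries substantial information about group membership. This in-sample measure overstates proxy strength for features with many distinct values. A more careful check would use held-out data and pair the result with evidence that the model actually relies on the feature.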

Then there's the dynamic problem: algorithms change. Companies continuously retrain models, tweak parameters, and adjust systems based on feedback. An audit that certifies an algorithm as fair today might be obsolete next month. This creates demand for continuous monitoring frameworks rather than one-time audits.

Tech companies haven't exactly rolled out the welcome mat for auditors. Their resistance takes multiple forms, from legal challenges to technical obfuscation.

The most direct approach is simply refusing access. Without legal mandates, companies face little pressure to voluntarily submit to external scrutiny. When researchers have tried to audit systems through reverse engineering—creating test accounts and systematically probing for bias—platforms have threatened legal action, claiming terms of service violations.

Some companies have embraced "algorithmic auditing" but defined it on their own terms. They hire auditors, control the scope, and decide what findings to publish. Critics point out that these industry-sponsored audits lack independence, raising credibility questions.

The trade secrets argument has proven particularly effective at blocking meaningful oversight. In criminal justice, this has created bizarre situations where defendants can't effectively challenge the algorithms used against them because the code is proprietary.

For years, the audit movement relied on persuasion and public pressure. That's changing as regulators worldwide write mandatory algorithmic accountability into law.

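New York City fired the first major shot with Local Law 144, which took effect January 1, 2023, with enforcement beginning July 5, 2023. The law requires employers using automated employment decision tools to have an independent auditor conduct a bias audit within the year before use, and to make a summary of the results publicly accessible. Employers must also notify candidates that such a tool is being used and explain how to request an alternative selection process or accommodation. Violations carry civil penalties of up to $500 for a first violation and $500 to $1,500 for each subsequent one. Each day a noncompliant tool is used counts as a separate violation.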

The scope is significant. Any tool that substantially assists or replaces human decision-making in hiring or promotion falls under the law—résumé screening software, interview analysis platforms, candidate ranking systems. The requirement for independent auditors represents a crucial accountability mechanism.
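Some tools output scores rather than yes/no decisions. As we understand the city's implementing rules, audits of such tools compare "scoring rates": the share of each category that scores above the sample's median. Each category's rate is then divided by the highest category's rate. The sketch below follows that reading. The function name and the data are hypothetical.

```python
from statistics import median
from typing import Dict, List, Tuple

def scoring_rate_impact_ratios(scored: List[Tuple[str, float]]) -> Dict[str, float]:
    """scored holds (category, score) pairs for every candidate the tool assessed."""
    cutoff = median([score for _, score in scored])
    totals: Dict[str, int] = {}
    above: Dict[str, int] = {}
    for category, score in scored:
        totals[category] = totals.get(category, 0) + 1
        if score > cutoff:  # "scoring rate" counts scores above the median
            above[category] = above.get(category, 0) + 1
    rates = {c: above.get(c, 0) / n for c, n in totals.items()}
    top = max(rates.values())
    if top == 0:
        raise ValueError("no candidate scored above the median")
    return {c: rate / top for c, rate in rates.items()}

scored = [("group_a", 90), ("group_a", 80), ("group_a", 70),
          ("group_b", 60), ("group_b", 85), ("group_b", 50)]
print(scoring_rate_impact_ratios(scored))  # {'group_a': 1.0, 'group_b': 0.5}
```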

The European Union's AI Act, which entered into force on August 1, 2024, takes an even broader approach. It classifies AI systems by risk level and imposes proportionate requirements. High-risk systems face strict obligations. These include systems used in employment, education, law enforcement, and credit scoring. The obligations are conformity assessments, risk management, data governance, transparency requirements, human oversight, and accuracy standards.

Non-compliance carries severe penalties. The steepest fines, reserved for prohibited AI practices, reach €35 million or 7% of worldwide annual turnover, whichever is higher. Violations of most other obligations, including those for high-risk systems, can draw up to €15 million or 3%. For major tech companies, either percentage could mean billion-dollar fines. Obligations phase in through 2027, giving organizations time to achieve compliance while making clear that the accountability era has arrived.
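The penalty ceilings reduce to a simple comparison. The sketch below encodes three tiers as we read Article 99 of the AI Act:

- €35 million or 7% of worldwide annual turnover for prohibited practices.
- €15 million or 3% for most other obligations.
- €7.5 million or 1% for supplying incorrect or misleading information.

The higher amount applies to most firms, and the lower one to SMEs and start-ups. The tier labels are our own. These figures are ceilings, not the fines regulators actually impose.

```python
# Fine ceilings per tier: (fixed amount in EUR, share of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000.0, 0.07),
    "other_obligation": (15_000_000.0, 0.03),
    "misleading_information": (7_500_000.0, 0.01),
}

def max_fine_eur(worldwide_turnover_eur: float, tier: str, is_sme: bool = False) -> float:
    """Upper bound of the fine for one tier; regulators set the actual amount."""
    fixed, share = FINE_TIERS[tier]
    turnover_based = share * worldwide_turnover_eur
    # Most firms face whichever amount is higher; SMEs and start-ups whichever is lower.
    return min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)

print(max_fine_eur(200e9, "prohibited_practice"))  # about 14 billion
```

For a company with €200 billion in annual turnover, the 7% tier works out to about €14 billion. That is where the billion-dollar figures come from.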

The Digital Services Act, also from the EU, requires very large online platforms to undergo annual independent audits. They must also give vetted researchers access to data for studying systemic risks, including risks from content moderation and recommendation systems. This directly addresses the access problem that has stymied independent researchers.

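In the United States, multiple states are considering or have passed algorithmic accountability measures. Colorado, for example, enacted a law in 2024 requiring developers and deployers of high-risk AI systems to guard against algorithmic discrimination. More state-level AI regulation is likely as legislators grapple with these technologies.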

Beyond mandates, voluntary standards are emerging. ISO/IEC 42001 provides a structured framework for AI management systems that can support compliance with the EU AI Act's requirements. Organizations implementing it create audit-ready documentation, risk assessments, and transparency disclosures.

As audit requirements take effect, we're seeing the first wave of systematic findings. The picture is mixed—some systems perform better than feared, while others confirm the worst suspicions.

Several NYC Local Law 144 audits have revealed selection rate disparities between demographic groups. In these cases, AI hiring tools advanced men or white candidates at meaningfully higher rates than women or candidates of color. Companies have responded by adjusting algorithms, though questions remain about whether adjustments address root causes or just mask symptoms.

In content moderation, analyses of the Digital Services Act Transparency Database, where platforms log their moderation decisions, show patterns in how platforms enforce rules. Preliminary findings suggest that automated systems struggle with context, nuance, and cultural variation. The breast cancer awareness case wasn't isolated.

Academic researchers conducting fairness audits over time have found that algorithms can become more biased as they learn from biased human decisions. This feedback loop effect means that without intervention, discriminatory patterns can amplify rather than diminish.

Recidivism prediction audits have revealed that even algorithms achieving similar false positive rates across racial groups can still produce racial disparities because of differences in base rates and system usage. Fairness doesn't have a single mathematical definition—different fairness metrics can conflict, forcing difficult normative choices.
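A small worked example shows why fairness metrics can conflict. The sketch below applies the same classifier, with identical true and false positive rates, to two hypothetical groups whose base rates differ (50% versus 20%). All counts are invented.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    tp: int
    fp: int
    fn: int
    tn: int

    @property
    def fpr(self) -> float:  # false positive rate
        return self.fp / (self.fp + self.tn)

    @property
    def fnr(self) -> float:  # false negative rate
        return self.fn / (self.fn + self.tp)

    @property
    def ppv(self) -> float:  # share of "high risk" flags that are correct
        return self.tp / (self.tp + self.fp)

def apply_classifier(positives: int, negatives: int, tpr: float, fpr: float) -> Confusion:
    tp = round(positives * tpr)
    fp = round(negatives * fpr)
    return Confusion(tp=tp, fp=fp, fn=positives - tp, tn=negatives - fp)

group_a = apply_classifier(positives=50, negatives=50, tpr=0.8, fpr=0.2)
group_b = apply_classifier(positives=20, negatives=80, tpr=0.8, fpr=0.2)
print("A", group_a.fpr, group_a.fnr, group_a.ppv)  # A 0.2 0.2 0.8
print("B", group_b.fpr, group_b.fnr, group_b.ppv)  # B 0.2 0.2 0.5
```

Error rates match across groups. Yet a "high risk" flag is correct 80% of the time in group A and only 50% of the time in group B. When base rates differ and the classifier is imperfect, equal error rates and equal predictive value generally cannot both hold. That is why choosing a metric is a normative decision.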

Financial algorithm audits have documented how seemingly neutral credit scoring systems perpetuate historical discrimination. A mortgage algorithm study found that minority borrowers were charged higher interest rates than other borrowers for comparable loans, a differential that algorithms inherited from past human lending decisions.

The audit movement has scored major victories, but it's entering a new phase. Regulation is in place; now comes implementation, enforcement, and evolution.

Certification programs for algorithm auditors are proliferating. Organizations like BABL AI and Tonex offer training in audit methodologies, regulatory frameworks, and technical tools. As the profession matures, expect standardized credentials, ethical guidelines, and quality standards. Eventually there may even be an auditor licensing regime similar to the one for financial auditors.

Continuous monitoring will likely supplement or replace periodic audits. Given how quickly algorithms change, annual reviews can't catch problems emerging in real time. Researchers are developing continuous audit infrastructure that monitors systems as they operate, flagging potential fairness issues before they affect thousands of people.
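Continuous monitoring can be as simple as recomputing an impact ratio over a sliding window of recent decisions. The sketch below defines a hypothetical `ImpactRatioMonitor` class. The window size, the 0.8 threshold, and the minimum group size are illustrative assumptions, not regulatory requirements. The simulated decision stream is fair for 200 decisions; then a model update lowers one group's selection rate.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple

class ImpactRatioMonitor:
    """Hypothetical sliding-window monitor for selection-rate disparities."""

    def __init__(self, window: int = 500, threshold: float = 0.8, min_per_group: int = 30):
        self.decisions: Deque[Tuple[str, bool]] = deque(maxlen=window)
        self.threshold = threshold
        self.min_per_group = min_per_group

    def record(self, group: str, selected: bool) -> Optional[Dict[str, float]]:
        """Log one decision; return impact ratios only when a group falls below threshold."""
        self.decisions.append((group, selected))  # oldest decision drops out when full
        totals: Dict[str, int] = {}
        hits: Dict[str, int] = {}
        for g, s in self.decisions:
            totals[g] = totals.get(g, 0) + 1
            hits[g] = hits.get(g, 0) + int(s)
        rates = {g: hits[g] / n for g, n in totals.items() if n >= self.min_per_group}
        if len(rates) < 2 or max(rates.values()) == 0:
            return None  # too little data (or no selections) to compare groups
        best = max(rates.values())
        ratios = {g: r / best for g, r in rates.items()}
        return ratios if min(ratios.values()) < self.threshold else None

monitor = ImpactRatioMonitor()
alerts = []
for i in range(600):
    group = "a" if i % 2 == 0 else "b"
    if i < 200:
        selected = (i // 2) % 2 == 0  # both groups selected about half the time
    else:
        selected = group == "a" or (i // 2) % 4 == 0  # after an update, b drops to ~25%
    if monitor.record(group, selected) is not None:
        alerts.append(i)
print("first alert at decision", alerts[0] if alerts else None)
```

A production system would keep running counts instead of rescanning the window, and it would add significance tests. The principle is the same, though: fairness is measured on live decisions rather than certified once a year.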

The scope of auditing will expand beyond bias detection. Emerging frameworks examine accuracy, robustness, security, privacy, interpretability, and environmental impact. Comprehensive algorithmic accountability means evaluating systems across multiple dimensions.

International coordination is becoming necessary. Algorithms operate globally, but regulations remain national or regional. Companies face a patchwork of requirements, while auditors need cross-border cooperation. The GDPR created a template for globally influential regulation; algorithmic accountability may follow a similar pattern.

Public access to audit results will become a battleground. Current regulations mostly require audits to be conducted, but don't always mandate that detailed findings be public. Civil society groups are pushing for transparency, arguing that people affected by algorithms deserve to know how those systems have been evaluated.

Unless you're living off the grid, algorithms already make decisions about your life. The audit movement matters because it determines whether those decisions are fair, accurate, and subject to accountability.

When you apply for a job, there's an increasing chance that AI will screen your résumé, analyze your video interview, or rank you against other candidates. Under laws like Local Law 144, employers in New York City must now disclose that such tools are being used and have them audited for bias. You have more visibility and, in some places, a clearer path to request an alternative to automated evaluation.

If you're on social media, algorithms determine what content you see and whether your posts get removed. Audit requirements are forcing platforms to be more transparent about these decisions and to build appeals processes. While content moderation will never be perfect, external oversight is pushing systems toward greater accuracy and fairness.

For lending, employment, housing, education, and criminal justice decisions, algorithmic accountability creates legal mechanisms to challenge discrimination. If you're rejected for a loan or job and suspect algorithmic bias, audit results can provide evidence for complaints or lawsuits.

More broadly, the audit movement establishes a principle: automated systems that affect human welfare should be subject to scrutiny. It rejects the idea that "the computer said so" is an acceptable justification for consequential decisions. As AI becomes more powerful and pervasive, that principle becomes more important.

The algorithm audit movement isn't about stopping automation or innovation. It's about ensuring that as we build systems to assist or replace human judgment, we build in accountability mechanisms from the start. The black boxes are being opened, one audit at a time, and what we're finding inside is reshaping how society governs its increasingly algorithmic infrastructure.

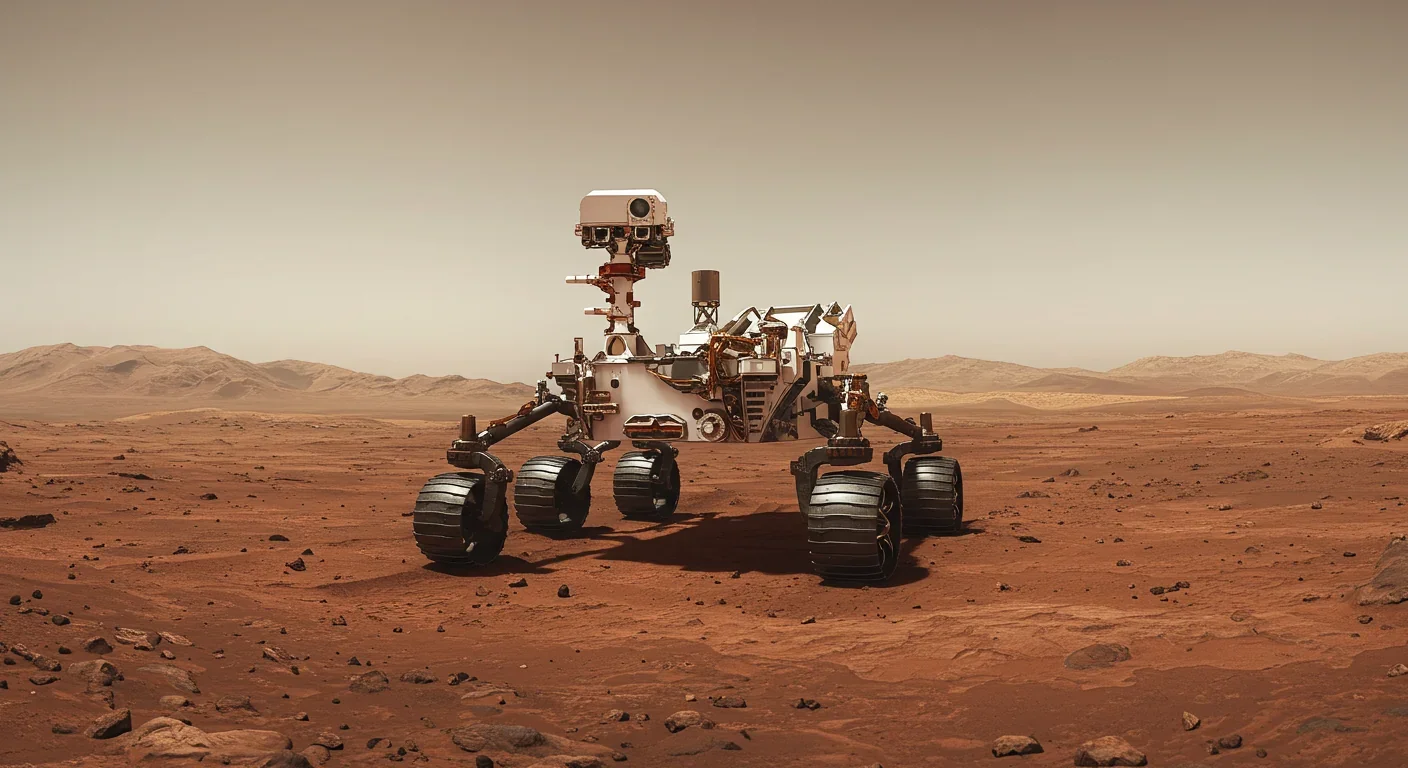

Curiosity rover detects mysterious methane spikes on Mars that vanish within hours, defying atmospheric models. Scientists debate whether the source is hidden microbial life or geological processes, while new research reveals UV-activated dust rapidly destroys the gas.

CMA is a selective cellular cleanup system that targets damaged proteins for degradation. As we age, CMA declines—leading to toxic protein accumulation and neurodegeneration. Scientists are developing therapies to restore CMA function and potentially prevent brain diseases.

Intercropping boosts farm yields by 20-50% by growing multiple crops together, using complementary resource use, nitrogen fixation, and pest suppression to build resilience against climate shocks while reducing costs.

The Baader-Meinhof phenomenon explains why newly learned information suddenly seems everywhere. This frequency illusion results from selective attention and confirmation bias—adaptive evolutionary mechanisms now amplified by social media algorithms.

Plants and soil microbes form powerful partnerships that can clean contaminated soil at a fraction of traditional costs. These phytoremediation networks use biological processes to extract, degrade, or stabilize toxic pollutants, offering a sustainable alternative to excavation for brownfields and agricultural land.

Renters pay mortgage-equivalent amounts but build zero wealth, creating a 40x wealth gap with homeowners. Institutional investors have transformed housing into a wealth extraction mechanism where working families transfer $720,000+ over 30 years while property owners accumulate equity and generational wealth.

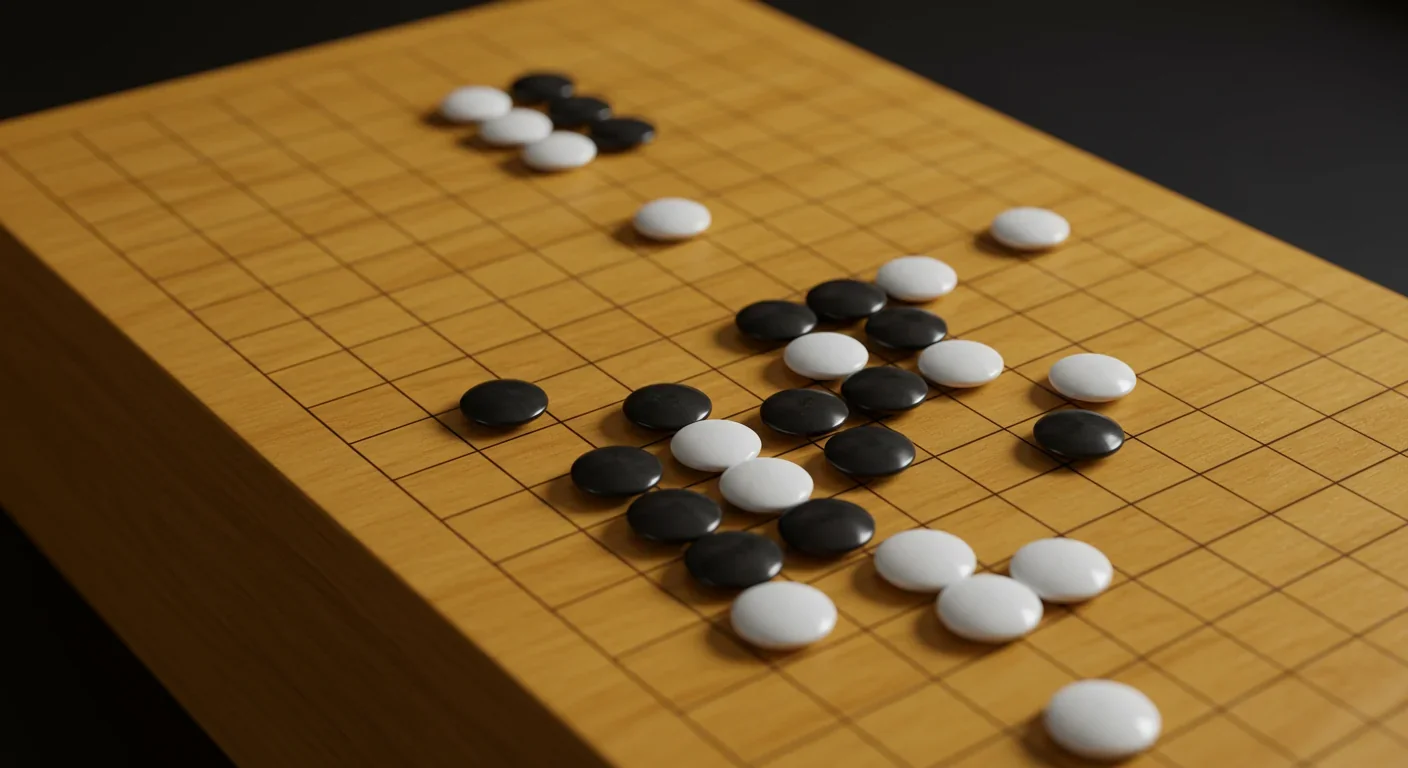

AlphaGo revolutionized AI by defeating world champion Lee Sedol through reinforcement learning and neural networks. Its successor, AlphaGo Zero, learned purely through self-play, discovering strategies superior to millennia of human knowledge—opening new frontiers in AI applications across healthcare, robotics, and optimization.