Care Worker Crisis: Low Pay & Burnout Threaten Healthcare

TL;DR: Generation Alpha (born 2010-2025) is the first generation developing cognitively alongside AI. Smart toys and adaptive platforms deliver personalized learning that, by some estimates, boosts achievement by up to 54%, yet these children also face possible risks to memory and attention, along with unprecedented data surveillance. With 86% of students using AI tools but only 32% of teachers trained on them, and with wealthier schools accessing cutting-edge platforms while underfunded schools struggle, society faces four urgent imperatives: teach cognitive complementarity (knowing when to use AI and when to build internal knowledge), make AI literacy as fundamental as reading, establish ethical guardrails that protect privacy and prevent bias, and ensure that members of Generation Alpha become critical evaluators rather than passive users of the technology reshaping their minds.

In the spring of 2024, researchers in Japan recruited 96 adolescents for a mental health study involving AI chatbots. Within weeks, every participant had withdrawn, not because the technology failed but because it felt irrelevant, intrusive, and disconnected from their digital reality. The episode hints at a larger truth: Generation Alpha, the roughly two billion children born between 2010 and 2025, aren't just using AI; they're being shaped by it in ways we're only beginning to understand.
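The size of the cohort can be sanity-checked with simple arithmetic. This sketch assumes, as a round illustrative figure rather than an official statistic, about 140 million births worldwide per year, and computes both an upper bound for births from 2010 through 2025 and the annual births a cohort of 2.8 billion would require.

```python
# ASSUMPTION: about 140 million births worldwide per year, a round figure
# chosen for illustration; substitute current UN estimates for precision.
BIRTHS_PER_YEAR = 140e6

# Birth years 2010 through 2025, counted inclusively.
BIRTH_YEARS = 2025 - 2010 + 1

# Multiplying births by years ignores child mortality, so this is an upper bound.
upper_bound = BIRTHS_PER_YEAR * BIRTH_YEARS

# Annual births that a 2.8 billion cohort would require over the same span.
births_needed_for_2_8bn = 2.8e9 / BIRTH_YEARS

print(f"Upper bound on cohort size: {upper_bound / 1e9:.2f} billion")
print(f"Births per year needed for 2.8 billion: {births_needed_for_2_8bn / 1e6:.0f} million")
```

Under that assumption the cohort can be at most about 2.24 billion, and reaching 2.8 billion would take roughly 175 million births every year, which is consistent with estimates of around two billion.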

While parents debated screen time limits, something far more consequential was happening in playrooms and classrooms worldwide. The Smart AI Toys Market is projected to grow from $11.62 billion in 2025 to $21.72 billion by 2033, a compound annual growth rate of roughly 8% that reflects not just consumer enthusiasm but a transformation of childhood itself. These aren't simple gadgets; they're personalized learning companions that recognize voices, read facial expressions, adapt to emotional states, and deliver educational content that evolves with each interaction.
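The growth rate implied by the two market-size endpoints can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. This minimal sketch applies it to the figures for 2025 and 2033 and, for comparison, to a six-year window.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Smart AI toy market endpoints, in billions of US dollars.
START_2025 = 11.62
END_2033 = 21.72

rate_8y = cagr(START_2025, END_2033, 2033 - 2025)  # the stated 2025-2033 span
rate_6y = cagr(START_2025, END_2033, 6)            # same growth squeezed into six years

print(f"8-year CAGR: {rate_8y:.2%}")
print(f"6-year CAGR: {rate_6y:.2%}")
```

The eight-year span works out to about 8.13% a year; a rate of about 10.99% would require the same growth to happen in six years.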

Consider what this means for a five-year-old in 2025. Her AI-powered plush bear tailors bedtime stories to her exact vocabulary level, gradually introducing complex words as she masters simpler ones. Her smart tablet uses adaptive algorithms that track not just her answers but her hesitation patterns, attention drift, and frustration thresholds. By the time she enters first grade, she's already experienced thousands of hours of machine-personalized instruction—more one-on-one tutoring than any previous generation received in their entire childhood.

The social and behavioral implications are significant. Research tracking 388 undergraduates over a year found that frequent AI interaction was linked to two pathways at once: more prosocial behavior, operating through a heightened need for affiliation, and more problematic device dependency, operating through loneliness. Those participants were young adults rather than Gen Alpha children, but for Generation Alpha this kind of interaction isn't occasional; it's the architecture of daily life. Over 80% of students now report regular AI tool usage, and 53 million American homes contain voice-activated smart speakers that children engage with as naturally as earlier generations played with blocks.

Researcher Barbara Oakley and her colleagues have described what they call the Memory Paradox: as AI tools become more capable of storing and retrieving information, people may be exercising their own memory systems less. The reversal of the Flynn effect across advanced nations is often cited in support. IQ scores, which rose steadily throughout the 20th century, have plateaued or declined in the US, UK, France, and Norway. The timing matters, though: some of these declines began before smartphones and cloud computing became ubiquitous, so technology's role in the reversal remains debated.

The proposed mechanism is cognitive offloading. When children know they can instantly retrieve any fact, they may allocate less mental effort to encoding and consolidation, the processes that build genuine understanding. A 2024 CDC study found that about half of adolescents aged 12-17 spend four or more hours daily on screens, and that this group reported higher rates of depression and anxiety symptoms. The concern goes beyond emotional health to how learning itself develops: if retrieval practice, spaced repetition, and step-by-step skill progression get less use, the memory systems those practices strengthen may develop differently in Gen Alpha.

Educators see this transformation firsthand. "When children come into organized childcare, it's difficult for them to follow instructions," explains Kisha Lee, describing attention challenges that were rare a generation ago. The problem isn't screen time duration alone—research in JAMA distinguishes between total hours and addictive use patterns involving compulsion, withdrawal, and loss of control. Gen Alpha is experiencing both: unprecedented exposure and fundamentally altered usage patterns that shape neural development during critical windows.

Yet this same technology is producing remarkable learning gains. Adaptive AI platforms boost achievement by up to 54% compared to traditional instruction, with students reporting 10 times higher engagement. The AI math tutor Skye improved learner accuracy from 34% to 92% within a single session in one UK study. Singapore's Nanyang Primary School documented 27% comprehension improvements after implementing personalized learning algorithms. The technology isn't simply good or bad—it's creating cognitive trade-offs we're only beginning to map.
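Claims like "up to 54%" and "27% comprehension improvements" are easier to compare once it is clear whether they describe percentage points or relative change. This sketch computes both for the Skye figures; the function name is illustrative.

```python
def percentage_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    points = after_pct - before_pct
    relative = points / before_pct * 100
    return points, relative


# Skye accuracy figures from the UK study: 34% before, 92% after one session.
points, relative = percentage_change(34, 92)
print(f"{points:.0f} percentage points ({relative:.0f}% relative improvement)")
```

For the Skye study, the jump from 34% to 92% accuracy is 58 percentage points, or about a 171% relative improvement, so the same result can be reported very differently depending on the measure.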

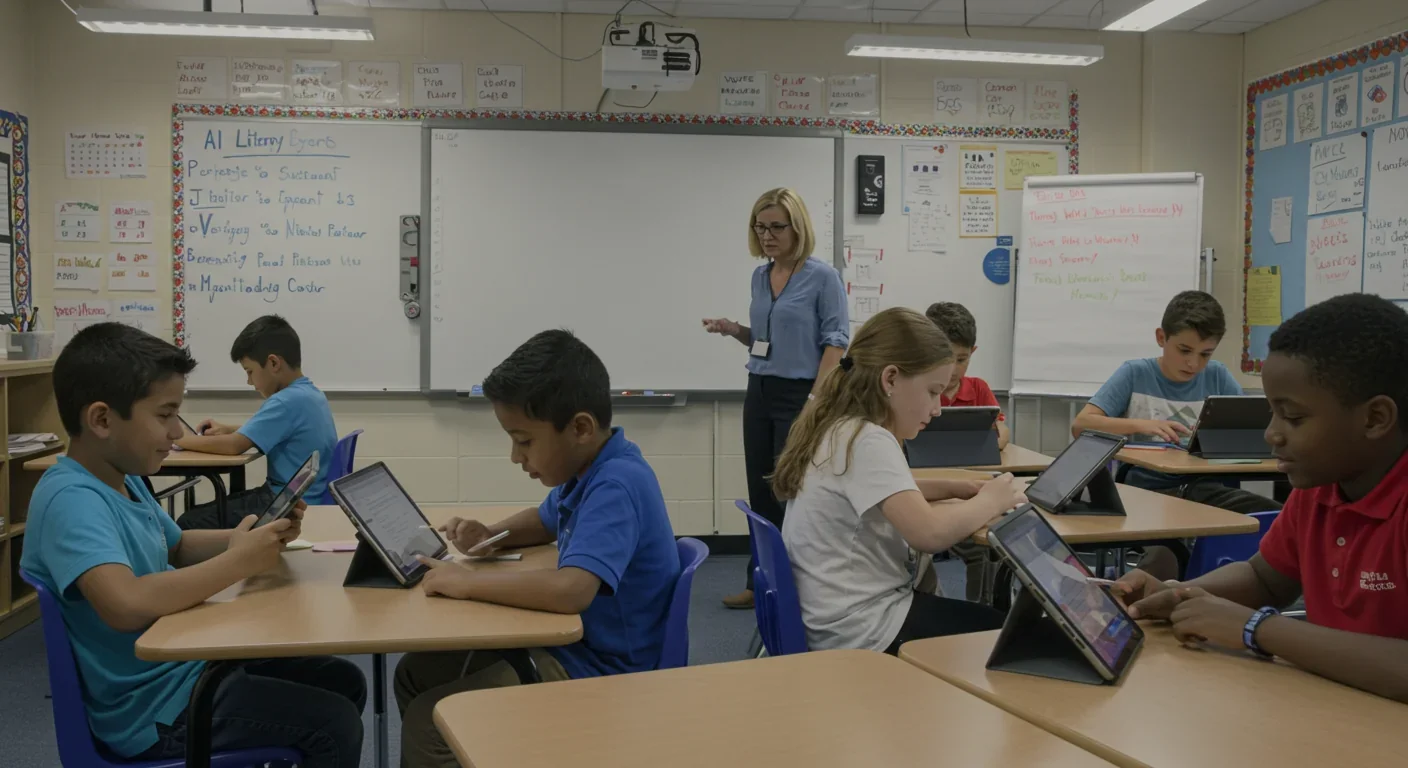

Walk into a Michigan elementary school today, and you'll witness a seismic shift that data confirms: 43.9% of teachers now use AI in their classrooms, nearly double the 22.9% from just one year earlier. Over 80% of building administrators report AI usage in their schools, with 100% planning further integration. This isn't gradual adoption—it's a tidal wave driven by compelling evidence that AI fundamentally improves teaching efficiency.

Teachers using AI grading assistants, lesson planners, and content generators report saving 44% of their work hours, roughly six hours weekly that previously went to repetitive tasks. A McKinsey analysis estimates that AI and related automation could save teachers about 13 hours per week in content creation and administrative work. This efficiency isn't an abstract benefit; it's the difference between exhausted educators grading papers until midnight and those with time for one-on-one student mentorship.
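The two figures for teacher time savings can be checked against each other. If six hours a week equals 44% of the relevant hours, the baseline works out to about 13.6 hours, far less than a full teaching week, so the percentage plausibly describes time on the specific tasks AI takes over rather than total working time. The 50-hour week used for comparison below is a hypothetical assumption, not a figure from the source.

```python
HOURS_SAVED_PER_WEEK = 6.0  # "roughly six hours weekly"
SHARE_OF_HOURS = 0.44       # "44% of their work hours"

# If both figures describe the same baseline, the baseline must be:
implied_baseline = HOURS_SAVED_PER_WEEK / SHARE_OF_HOURS

# HYPOTHETICAL comparison point: a 50-hour teaching week (an assumption).
ASSUMED_WORK_WEEK = 50.0
share_of_full_week = HOURS_SAVED_PER_WEEK / ASSUMED_WORK_WEEK

print(f"Implied baseline: {implied_baseline:.1f} hours/week")
print(f"Six hours as a share of a 50-hour week: {share_of_full_week:.0%}")
```

With the assumed 50-hour week, six hours is 12% of working time.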

But here's where the story gets complicated: only 32% of teachers have received formal training on these tools. They're navigating powerful systems—predictive analytics, automated grading algorithms, adaptive assessment engines—with minimal guidance on ethical use, bias detection, or privacy protection. The gap between adoption and understanding is widening dangerously fast.

Meanwhile, students are racing ahead, though surveys measure their AI use differently. One estimate puts AI tool use at 86% of students across all levels; others find regular use among 90% of undergraduates but only 26% of high schoolers. One analysis of 200 million assignments found machine-generated content in about 10% of submissions. Students, including the oldest members of Gen Alpha now entering high school, aren't asking permission; they're integrating AI into homework, creative projects, and research as naturally as previous generations used calculators and spell-checkers.

The inequality dimension is particularly stark. Well-resourced institutions experiment with cutting-edge offerings, such as MIT's LLM-based physics tutors that provide guided practice problems and Bowdoin College's Digital Excellence Commitment, which gives every student MacBook Pros and iPads regardless of financial need. Underfunded schools struggle to access any AI tools, and schools with larger disadvantaged cohorts are measurably less likely to teach how AI works or to offer computer science courses. High usage statistics hide a growing digital divide that could shape Gen Alpha's economic trajectories.

What competencies will Generation Alpha need for careers that don't yet exist? The question haunts educators, parents, and policymakers alike. Traditional answers—memorization, calculation, information retrieval—are precisely what AI excels at. The skills that matter are shifting toward what machines can't easily replicate: critical evaluation, ethical reasoning, creative synthesis, and adaptive problem-solving.

AI literacy has emerged as fundamental as reading and writing. The OECD and European Commission are developing an AI Literacy Framework defining the technical knowledge, durable skills, and future-ready attitudes required to thrive in AI-influenced environments. The framework emphasizes four pillars: understanding how AI works, recognizing ethical implications, using AI safely, and judging AI outputs critically.

Countries are responding with varying urgency. China launched AI curricula in 40 schools in 2018, introduced 14 approved AI textbooks, trained 5,000 specialized teachers, and now mandates AI instruction in all schools. Germany is investing €5 billion in digital infrastructure to support AI integration. The 2025 US Federal AI Education Initiative explicitly calls for schools to prepare students for an AI-powered world. Yet surveys in Hungary and the Netherlands found that not a single participating student reported receiving instruction in basic digital literacy skills like password protection, safe email use, or source evaluation.

The curriculum challenge is immense. Effective AI literacy requires spiraled instruction—introducing concepts early and building complexity over time—integrated into existing subjects rather than isolated modules. Google's AI Quests program invites students ages 11-14 to step into researchers' shoes, applying AI to real challenges like flood forecasting, diabetic retinopathy detection, and brain connectomics. Each quest includes video messages from actual scientists explaining responsible AI use. Minecraft Education's "Reed Smart: AI Detective" teaches children to spot misinformation and question digital content through immersive gameplay.

But the most critical skill may be cognitive complementarity: understanding when to use AI and when to build internal knowledge foundations. As researcher Carl Hendrick observes: "The most advanced AI can simulate intelligence, but it cannot think for you. That task remains, stubbornly and magnificently, human." Teaching students to delay offloading, practice retrieval, and solidify understanding before relying on AI tools is essential for building durable mental skills.
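Retrieval practice with spacing can be made concrete with the Leitner system, a classic flashcard scheme swapped in here for illustration; the article does not prescribe it. This sketch assumes doubling review intervals (1, 2, 4, 8, 16 days) and made-up cards: each successful unaided recall pushes a card's next review further out, and each failure sends it back to the shortest interval.

```python
from dataclasses import dataclass

# Days to wait before the next review in each Leitner box.
# ASSUMPTION: doubling intervals chosen for illustration, not a prescribed schedule.
INTERVALS = [1, 2, 4, 8, 16]


@dataclass
class Card:
    prompt: str
    answer: str
    box: int = 0      # index into INTERVALS; new cards start in the first box
    due_day: int = 0  # day number on which the card is next due


def review(card: Card, recalled: bool, today: int) -> None:
    """Reschedule a card after the learner tries to recall the answer unaided."""
    if recalled:
        # Successful retrieval: wait longer before the next review.
        card.box = min(card.box + 1, len(INTERVALS) - 1)
    else:
        # Failed retrieval: return to the shortest interval.
        card.box = 0
    card.due_day = today + INTERVALS[card.box]


def due_cards(cards: list[Card], today: int) -> list[Card]:
    """Cards whose scheduled review day has arrived."""
    return [c for c in cards if c.due_day <= today]


deck = [Card("7 x 8", "56"), Card("Capital of France", "Paris")]
review(deck[0], recalled=True, today=0)   # moves to box 1, due on day 2
review(deck[1], recalled=False, today=0)  # stays in box 0, due on day 1
print([c.prompt for c in due_cards(deck, today=1)])
```

The scheme only works if the learner attempts recall before seeing the answer; looking it up first, from a card or an AI tool, skips the retrieval step the schedule is built around.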

Generation Alpha's relationship with AI creates what researchers call a "double-edged sword effect." Longitudinal data following undergraduates for a year revealed that AI interaction frequency shapes both prosocial behavior and problematic phone use through intertwined pathways involving affiliation needs and loneliness.

Here's the mechanism: Students who frequently interact with AI systems report an increased need for human connection; one interpretation is that the technology heightens awareness of what it can't provide. That heightened need is in turn linked to prosocial behaviors like helping classmates and community engagement. However, peer support moderates this effect: students with strong social networks show smaller increases in prosocial behavior, suggesting AI fills gaps rather than enhancing already-robust relationships.

Simultaneously, frequent AI interaction correlates with problematic mobile phone use—compulsive checking, anxiety when separated from devices, and difficulty regulating usage. The pathway runs through feelings of loneliness: AI interactions that fail to satisfy genuine connection needs can intensify isolation, driving more screen time in a self-reinforcing cycle.
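The pathways described here are what researchers usually call mediation (an effect of one variable on another that runs through an intermediate variable) and moderation (an effect whose size depends on a third variable). The study's exact model is not specified, so the following is a generic regression-style sketch with intercepts omitted. X is AI interaction frequency, M_1 need for affiliation, Y_1 prosocial behavior, M_2 loneliness, Y_2 problematic phone use, and W peer support; placing the moderation on the first stage of the affiliation pathway is an assumption made for illustration, and it could equally act on the second stage.

```latex
\begin{aligned}
M_1 &= (a_1 + a_3 W)\,X + a_2 W + \varepsilon_{M_1}
  && \text{affiliation need, moderated by peer support} \\
Y_1 &= c_1' X + b_1 M_1 + \varepsilon_{Y_1}
  && \text{prosocial behavior} \\
M_2 &= a_4 X + \varepsilon_{M_2}
  && \text{loneliness} \\
Y_2 &= c_2' X + b_2 M_2 + \varepsilon_{Y_2}
  && \text{problematic phone use} \\[4pt]
\text{indirect}_1(W) &= (a_1 + a_3 W)\, b_1, \qquad
\text{indirect}_2 = a_4 b_2
\end{aligned}
```

In this notation, smaller increases in prosocial behavior among students with strong peer support correspond to the indirect effect shrinking as W grows, that is, a_3 b_1 < 0 when a_1 b_1 > 0.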

For Gen Alpha, these patterns are forming during critical developmental windows. The average Gen Z individual already spends 9 hours daily on screens, and Gen Alpha's usage appears to be tracking higher. International averages have reached 6 hours 45 minutes daily, rising to 9 hours 24 minutes in countries like South Africa. These aren't occasional indulgences; they're a primary environment in which identity formation, social learning, and emotional regulation are occurring.

Mental health implications are profound. Heavy screen time has been associated with binge eating disorders and suicidal behaviors. Reviews in medical journals document negative impacts on sleep quality, emotional regulation, language development, and executive functioning. A CDC study found that adolescents with four or more hours of daily screen time report substantially higher rates of depression than peers with less exposure.

Yet screen time duration alone doesn't tell the full story. Research distinguishes between total hours and addictive use patterns—it's the compulsion, withdrawal symptoms, and loss of control that predict harm, not the raw minutes. Parents and educators must look beyond timers to usage quality: Is the child zoning out passively or actively problem-solving? Does screen removal trigger meltdowns suggesting dependency? Are real-world relationships and activities being displaced?

Every interaction Generation Alpha has with AI toys, educational platforms, and voice assistants generates data. Voice samples. Facial scans. Emotional state assessments. Learning progress metrics. Behavioral patterns. This information, aggregated and analyzed, creates detailed psychological profiles of children—often stored on corporate servers under privacy policies few parents read and even fewer understand.

Compliance with regulations like GDPR and COPPA is mandatory but insufficient. These laws address consent and data minimization; they don't prevent the fundamental transformation of childhood into a surveilled, quantified, optimized experience. AI toys integrate with smart home ecosystems, enabling parents to monitor usage remotely and set learning goals via apps—a degree of oversight previous generations never experienced.
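Data minimization, one of the principles the GDPR sets out, means collecting only what a stated purpose requires. As an illustration only, this sketch uses a hypothetical AI-toy telemetry record (the ToyEvent class and every field name are invented) and an allow-list filter that keeps just the fields a progress-tracking feature needs, discarding voice, face, and emotion data before anything is stored. It shows the idea, not a compliance recipe.

```python
from dataclasses import asdict, dataclass


@dataclass
class ToyEvent:
    # HYPOTHETICAL telemetry record from an AI toy; field names are illustrative only.
    child_id: str
    timestamp: str
    voice_clip: bytes
    face_embedding: list[float]
    inferred_emotion: str
    lesson_id: str
    correct: bool


# Fields a (hypothetical) progress-tracking feature actually needs.
ALLOWED_FIELDS = {"child_id", "lesson_id", "correct"}


def minimize(event: ToyEvent) -> dict:
    """Keep only allow-listed fields, dropping raw voice, face, and emotion data before storage."""
    return {k: v for k, v in asdict(event).items() if k in ALLOWED_FIELDS}


event = ToyEvent(
    child_id="c-17",
    timestamp="2025-01-06T08:00:00",
    voice_clip=b"\x00\x01",
    face_embedding=[0.12, -0.40],
    inferred_emotion="frustrated",
    lesson_id="phonics-3",
    correct=True,
)
print(minimize(event))
```

An allow-list is used rather than a deny-list so that any new field added to the record is excluded by default until someone justifies collecting it.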

Educators echo these concerns. In Michigan's 2025 survey, 94% of district administrators identified AI literacy as a priority, yet many simultaneously cited data privacy as a primary barrier to adoption. Only 50.7% of districts have formal AI policies, leaving ethical decisions to individual teachers who often lack training or guidance. Research from Carnegie Mellon and Microsoft lends weight to concerns about over-reliance: in a survey of knowledge workers, greater confidence in generative AI was associated with less critical thinking. Yet schools face immense pressure to deploy these tools to remain competitive.

The ethical dimension extends beyond privacy to algorithmic bias. Automated grading systems have documented biases against certain writing styles and dialects. Predictive analytics used for behavior monitoring disproportionately flag students from marginalized communities. Content recommendation algorithms can create filter bubbles that limit exposure to diverse perspectives. For Generation Alpha, these systems are shaping not just what they learn but how they see themselves and their possibilities.
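One simple first check for the kind of disparity described here is to compare how often a system flags students in each group. This sketch computes per-group flag rates and the ratio of the highest to the lowest rate. The data is hypothetical, and a high ratio signals the need for closer review rather than proving bias on its own, since groups can differ in base rates and in how outcomes were labeled.

```python
from collections import Counter


def flag_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of students flagged in each group; each record is (group, was_flagged)."""
    totals = Counter()
    flagged = Counter()
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Highest group flag rate divided by the lowest (1.0 means parity)."""
    lowest = min(rates.values())
    return float("inf") if lowest == 0 else max(rates.values()) / lowest


# HYPOTHETICAL data: 10 students per group, 2 flagged in group A and 5 in group B.
records = [("A", i < 2) for i in range(10)] + [("B", i < 5) for i in range(10)]
rates = flag_rates(records)
print(rates, disparity_ratio(rates))
```

Here group B is flagged 2.5 times as often as group A, the kind of gap that should prompt a closer look at the features and labels driving the system.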

Ethical leadership frameworks are emerging in response. The EU Artificial Intelligence Act entered into force in August 2024 and becomes generally applicable 24 months later, in August 2026, while transparency and other obligations for general-purpose AI models apply from August 2025. AI systems used in education, such as those that evaluate learning outcomes or monitor students during tests, are classified as high-risk, triggering strict compliance obligations. But legislation lags reality: Gen Alpha is already immersed in AI environments while regulatory structures play catch-up.

How societies prepare Generation Alpha for AI-integrated adulthood varies dramatically across cultures, revealing competing visions of technology's role in development.

In East Asia, AI adoption is aggressive and comprehensive. Japan's Smart AI Toys Market is expected to reach $700 million by 2028, growing at 14% annually, the highest regional rate globally. China's educational AI mandate aims for universal exposure, and 85% of institutions already use some form of AI. Singapore's National AI Strategy explicitly positions AI literacy as a competitive advantage, with schools like Nanyang Primary documenting measurable learning gains from adaptive platforms.

European approaches emphasize ethical guardrails. The EU's AI Literacy Framework, developed collaboratively by the OECD and European Commission, prioritizes democratic citizenship and child rights alongside technical competency. European families show divergent attitudes: Dutch and German parents actively use AI tools to support learning, while Italian and Hungarian parents remain skeptical or uninformed. Surveys reveal that no participating students in Hungary or the Netherlands received instruction in basic digital skills—a gap families are attempting to fill themselves.

North America presents a fragmented landscape. Canada's Smart AI Toys Market is projected to reach $500 million by 2028, growing at 12% annually. The 2025 US Federal AI Education Initiative signals national recognition of urgency, but implementation remains decentralized. Adoption rates are climbing—56% of K-12 schools now use AI tutoring solutions, 40% of universities deploy AI for scheduling and enrollment—yet training and policy lag dangerously behind.

Across regions, three tensions emerge:

Efficiency versus equity: AI promises personalized learning at scale, but wealthier schools access superior tools, widening achievement gaps.

Innovation versus protection: Early AI exposure may confer cognitive advantages, but developmental risks—attention deficits, social skill delays, privacy violations—remain poorly understood.

Autonomy versus standardization: AI enables individualized learning paths, yet also enables unprecedented surveillance and behavioral control.

These tensions will define Generation Alpha's relationship with technology, work, and power structures. The decisions being made now—in classrooms, boardrooms, and policy chambers—are setting trajectories that will shape society for decades.

The challenge of supporting Generation Alpha falls primarily on adults who didn't grow up with AI—a profound reversal of historical learning dynamics. Three stakeholder groups face distinct imperatives:

Parents must become frontline digital literacy educators, often without formal training themselves. Surveys show that 87% of children exceed recommended screen time limits, even though 60% of parents attempt restrictions. Effective strategies go beyond timers: modeling screen hygiene (devices off two hours before bed, no screens at meals), practicing gradual reduction rather than cold-turkey elimination, distinguishing between passive consumption and active creation, and maintaining open dialogue about AI capabilities and limitations.

The most critical parental role is teaching cognitive complementarity: when to engage with AI and when to build internal knowledge. This requires understanding that offloading too early can short-circuit the encoding, retrieval, and consolidation processes that build genuine mastery. Children need space to struggle, fail, and develop problem-solving resilience before AI tools become crutches.

Educators need urgent professional development. Only 32% have received AI tool training despite 43.9% already using these technologies in classrooms. Effective programs provide hands-on, low-pressure workshops building confidence before deployment. Teachers require not just technical skills but ethical frameworks—identifying algorithmic bias, protecting student privacy, maintaining authentic relationships while integrating AI assistants.

The pedagogical shift is profound. Teachers must transition from information delivery to mentorship, using AI to handle routine tasks while focusing on higher-order interactions. Research shows schools where teachers thoughtfully implement AI see improvements not just in academic outcomes but in student engagement and teacher effectiveness. The key is positioning AI as enhancement rather than replacement—Jonathan Wharmby's framing of AI as "a teaching assistant in every teacher's pocket" offering real-time tailored feedback captures the ideal.

Policymakers face the most complex challenge: creating governance structures for rapidly evolving technologies affecting vulnerable populations. Current efforts are piecemeal, prioritizing hardware and connectivity over pedagogical reforms. AI policy adoption in Michigan schools rose from 31.7% in 2024 to 50.7% in 2025, with an additional 26.0% drafting guidelines. That is progress, but it still leaves nearly a quarter of districts (about 23%) with neither a formal policy nor a draft in progress.

Effective policy must address four dimensions: Technical infrastructure (ensuring equitable access), Pedagogical integration (embedding AI literacy across curricula), Ethical governance (protecting privacy, preventing bias, maintaining transparency), and Community engagement (involving families in digital citizenship education).

The UK's emerging AI empowerment framework offers a promising model. Rather than treating AI literacy as isolated technical training, it embeds ethical awareness into existing digital citizenship programs. Dr. Caitlin Bentley of King's College London defines AI empowerment as positioning students as "active agents of change" who question AI outputs, understand limitations, and recognize embedded perspectives. This approach cultivates not just users but critical evaluators.

By 2028, the first wave of Generation Alpha—those born in 2010—will set foot on college campuses. By 2033, they'll be entering the workforce en masse. By 2045, they'll hold leadership positions in governments, corporations, and institutions worldwide. The cognitive architectures, ethical frameworks, and technological relationships they develop now will shape humanity's next chapter.

The stakes are immense. AI tutors have demonstrated the power to reduce dropout rates by up to 20% through real-time feedback and targeted practice—potentially transforming economic mobility. Conversely, excessive AI reliance risks creating a generation with diminished memory, attention, and critical thinking capabilities. The same technology enabling unprecedented personalized learning also enables unprecedented surveillance, behavioral manipulation, and inequality amplification.

Generation Alpha didn't choose this experiment. They were born into it, digital natives in the most literal sense—their cognitive development inseparable from AI systems. The question isn't whether AI will shape them—that's inevitable—but how we guide that shaping.

Three principles should anchor our approach:

Cognitive sovereignty: Children must develop robust internal knowledge and critical thinking before offloading to AI, ensuring they remain masters of technology rather than servants to it.

Ethical awareness: AI literacy must include understanding bias, privacy implications, and power structures embedded in algorithms, cultivating informed democratic participation.

Human connection: The prosocial impulses AI interaction can paradoxically strengthen must be channeled into genuine relationships, community engagement, and empathetic collaboration that no machine can replicate.

The research is clear: AI in education isn't inherently good or bad—it's a multiplier of existing pedagogical approaches, institutional priorities, and societal values. Used thoughtfully, with adequate training, ethical guardrails, and equity considerations, it can enhance learning, free educators for meaningful interactions, and prepare students for technological futures. Used carelessly, it widens inequality, erodes cognitive capabilities, and reduces humans to data points in optimization algorithms.

Generation Alpha will inherit the world these choices create. They'll also, eventually, judge the adults who made them. Our responsibility is ensuring that when they look back on this pivotal moment—when AI became inseparable from childhood—they see not reckless enthusiasm or fearful retreat, but thoughtful stewardship that centered their flourishing above all else.

The children surrounded by AI-powered toys, taught by adaptive algorithms, and connected through voice-activated assistants are being shaped in ways we're only beginning to understand. The question that will define this generation—and the society they build—is whether we give them the tools not just to use AI, but to question it, shape it, and ultimately, transcend it.
