How Transformers Replaced Static Word Vectors in NLP

TL;DR: Transformer architectures with self-attention have largely replaced static word vectors like Word2Vec in NLP. They generate contextual embeddings that change with a word's surrounding context, and this has driven large performance gains across most language understanding tasks.

Around 2018, a paradigm shift transformed the way machines process human language. Static word vectors like Word2Vec (2013) and GloVe (2014), which had dominated natural language processing for roughly five years, were rapidly eclipsed. Transformer architectures, powered by self-attention mechanisms and contextual embeddings, swept through the field with startling speed. By 2024, transformers had become the backbone of virtually every major language AI system, from BERT to ChatGPT. This wasn't just an incremental improvement. It was a complete rethinking of how machines represent meaning.

For years, Word2Vec and GloVe defined the state of the art in language representation. These models learned to map each word to a fixed-length vector that captured semantic relationships. Words with similar meanings clustered together in vector space, and you could perform algebraic operations like "king - man + woman ≈ queen": the vector closest to the result is the one for "queen."
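The analogy arithmetic can be sketched in a few lines. This is a minimal illustration, assuming hand-picked three-dimensional toy vectors; the `vectors` table and the `analogy` helper are hypothetical, not part of any Word2Vec library. Real embeddings have hundreds of learned dimensions, and the answer is found by nearest-neighbour search under cosine similarity, excluding the three input words.

```python
import math

# Hypothetical 3-dimensional toy vectors chosen by hand for illustration;
# real Word2Vec/GloVe vectors have hundreds of learned dimensions.
vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a, b, c, vocab):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vocab[a], vocab[b], vocab[c])]
    candidates = (w for w in vocab if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vocab[w], target))


print(analogy("king", "man", "woman", vectors))  # queen
```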

The breakthrough was real. These embeddings captured word relationships far better than earlier one-hot encoding schemes, and they worked across countless NLP tasks: sentiment analysis, named entity recognition, machine translation. But they had a fundamental flaw.

Each word got exactly one vector representation. The word "bank" always mapped to the same point in vector space, whether you meant a financial institution or the edge of a river. Static embeddings couldn't handle polysemy—the linguistic reality that most words carry multiple meanings depending on context.

Natural language is inherently contextual. We disambiguate meaning through surrounding words, grammatical structure, and broader discourse. Word2Vec and GloVe sidestepped this: during training they pooled every context a word appeared in, producing a single "best guess" vector that blends all of the word's senses. This worked surprisingly well for many tasks, but it hit a ceiling.

Consider these sentences:

"The bank approved my loan application."

"We sat by the river bank watching the sunset."

"The pilot had to bank the aircraft sharply."

Static embeddings assigned identical representations to all three instances of "bank," forcing downstream models to re-learn disambiguation from scratch—a fundamental bottleneck for complex language understanding tasks.
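To make the limitation concrete, here is a sketch of a static lookup, assuming a hypothetical hand-written `static_table` in place of trained Word2Vec or GloVe vectors. Because the table is keyed only by the word itself, all three sentences above receive exactly the same "bank" vector.

```python
# Hypothetical static embedding table: one fixed vector per word type.
static_table = {
    "bank": [0.42, -0.17, 0.88],
    "loan": [0.51, -0.02, 0.30],
    "river": [-0.33, 0.61, 0.12],
    "aircraft": [0.05, 0.74, -0.40],
}

sentences = [
    "the bank approved my loan application",
    "we sat by the river bank watching the sunset",
    "the pilot had to bank the aircraft sharply",
]


def embed_static(sentence, table):
    """Look up each known token; the surrounding words play no role in the result."""
    return [(tok, table[tok]) for tok in sentence.split() if tok in table]


bank_vectors = [dict(embed_static(s, static_table))["bank"] for s in sentences]
print(all(v == bank_vectors[0] for v in bank_vectors))  # True
```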

The solution required a fundamental architectural change. Instead of learning one vector per word, systems needed to generate different representations based on the specific context in which each word appeared.

The 2017 paper "Attention Is All You Need" by Vaswani and colleagues introduced a radical architecture that solved the context problem elegantly. Instead of relying on recurrent neural networks (RNNs) or convolutional layers, transformers used a mechanism called self-attention.

Self-attention allows every word in a sentence to "look at" every other word and determine which ones are most relevant for understanding its meaning. When processing the word "bank" in "The bank approved my loan," the model learns to pay attention to words like "loan" and "approved," which provide strong clues about the financial meaning.
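The core computation behind self-attention is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, as defined in "Attention Is All You Need." The sketch below implements it in plain Python for readability. The projection matrices `w_q`, `w_k`, and `w_v` would normally be learned; here they are stand-in identity matrices chosen only for illustration, and the input values are arbitrary.

```python
import math


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # scores[i][j]: relevance of token j to token i
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy input: three tokens with 2-dimensional embeddings (arbitrary values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projection matrices
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])  # each row is a probability distribution over tokens
```

Each output row is a weighted average of the value vectors, with the weights set by how strongly that token's query matches every key.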

The transformer architecture stacks layers that each combine self-attention with a position-wise feed-forward network, joined by residual connections and layer normalization. Each layer refines the representation. Probing studies suggest a rough division of labor: lower layers tend to capture syntax and word order, middle layers sense distinctions, and higher layers more abstract semantic relationships, although these roles overlap.

The mathematical elegance was striking. By computing attention scores between all pairs of words, transformers could model long-range dependencies that RNNs struggled with. They could process entire sentences in parallel rather than sequentially, making training dramatically faster.

Transformers generate what researchers call contextual embeddings. Unlike static vectors, these representations change based on surrounding words. Each occurrence of "bank" receives a unique vector that reflects its specific meaning in that context.
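A toy version of this effect: run one parameter-free self-attention pass (queries, keys, and values all equal to the input vectors) over two sentences that share the same static "bank" vector. This is a deliberate simplification, assuming hypothetical two-dimensional vectors in `table`; real transformers add learned projections, multiple heads, and many stacked layers. The output for "bank" is pulled toward its neighbours, so it differs between the financial sentence and the river sentence.

```python
import math

# Hypothetical 2-d static vectors; the same "bank" vector is used in both sentences.
table = {
    "bank": [0.5, 0.5], "approved": [1.0, 0.0], "loan": [0.9, 0.1],
    "river": [0.0, 1.0], "sunset": [0.1, 0.9],
}


def contextualize(tokens):
    """One parameter-free self-attention pass (Q = K = V = the static vectors)."""
    xs = [table[t] for t in tokens]
    d = len(xs[0])
    out = []
    for q in xs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Each output is a weighted average of all vectors in the sentence.
        out.append([sum(w * v[i] for w, v in zip(weights, xs)) for i in range(d)])
    return dict(zip(tokens, out))


finance = contextualize(["bank", "approved", "loan"])["bank"]
nature = contextualize(["river", "bank", "sunset"])["bank"]
print([round(v, 3) for v in finance], [round(v, 3) for v in nature])  # [0.8, 0.2] [0.2, 0.8]
```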

BERT (Bidirectional Encoder Representations from Transformers), released by Google in October 2018, demonstrated the power of this approach. By pre-training on massive text corpora using transformers, BERT learned rich contextual representations that could be fine-tuned for specific tasks with minimal additional training.
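BERT's main pre-training objective was masked language modeling: hide some input tokens and train the model to recover them from context on both sides. Below is a minimal sketch of the masking step, following the proportions described in the BERT paper (15% of tokens selected; of those, 80% replaced by `[MASK]`, 10% by a random token, 10% left unchanged). The helper name `mask_tokens` is hypothetical, and sampling each position independently is a simplification of the original implementation.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """BERT-style masking sketch: each position is selected with probability mask_prob.
    Selected positions become prediction targets; 80% are replaced by [MASK],
    10% by a random vocabulary token, and 10% are left unchanged."""
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction target at this position
        labels[i] = tok  # the model is trained to predict the original token here
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)
        # otherwise keep the original token in the input
    return inputs, labels


rng = random.Random(0)  # fixed seed so the example is repeatable
sentence = "the bank approved my loan application".split()
# The sentence doubles as a toy vocabulary for random replacements.
inputs, labels = mask_tokens(sentence, vocab=sentence, rng=rng, mask_prob=0.5)
print(inputs)
print(labels)
```

The usage line raises `mask_prob` to 0.5 only so that a short sentence shows a few masked positions.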

"BERT achieved state-of-the-art results on 11 different NLP benchmarks upon release. Tasks that previously required custom architectures and extensive feature engineering suddenly became solvable with a single pre-trained model."

— Research findings from BERT evaluation studies

Several key innovations make transformers superior to static embeddings:

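Bidirectional Context Processing: Earlier language models read text left-to-right or right-to-left (ELMo combined two such one-directional passes). Encoder transformers such as BERT instead attend to context on both sides of every word at once, so when interpreting a word they consider the complete sentence. Decoder models such as GPT deliberately mask out future words so they can generate text one token at a time.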

Scalability: The transformer architecture parallelizes well. RNNs must process sequences step by step, creating a training bottleneck. During training, transformers compute all positions simultaneously, allowing them to scale to billions of parameters and train on massive datasets.

Positional Encoding: To handle word order without recurrence, transformers add a positional encoding to each token's embedding. The original transformer used fixed sine and cosine functions of the position; BERT and many later models learn position embeddings instead. Either way, word-order information is preserved while computation stays parallel.

Subword Tokenization: Modern transformer models use tokenization schemes like WordPiece or Byte-Pair Encoding that break words into subword units. This handles rare words, misspellings, and morphological variations far better than fixed vocabularies used with Word2Vec.

Multi-Head Attention: Instead of learning a single attention pattern, transformers use multiple attention "heads" that capture different types of relationships simultaneously. One head might focus on syntactic dependencies, while another captures semantic similarity.
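The sinusoidal positional encodings from the original transformer paper, mentioned in the list above, can be written out directly: even dimensions use sin(pos / 10000^(2i/d_model)) and odd dimensions the matching cosine. The sketch below builds that table in plain Python; `sinusoidal_positions` is a hypothetical helper name, and models such as BERT replace this fixed table with learned position embeddings.

```python
import math


def sinusoidal_positions(seq_len, d_model):
    """Fixed positional encodings from the original transformer paper:
    PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(same angle)."""
    table = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2  # dimensions 2i and 2i+1 share one frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = sinusoidal_positions(seq_len=4, d_model=6)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```

Each row is added elementwise to the embedding of the token at that position before the first attention layer.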

The empirical evidence is overwhelming. Across virtually every NLP benchmark, transformer-based models outperform static embedding approaches by substantial margins.

On the GLUE (General Language Understanding Evaluation) benchmark, a suite covering nine language understanding tasks, BERT pushed the overall score to 80.5, a 7.7-point absolute improvement over the previous state of the art. That might sound incremental, but on a mature benchmark a jump of that size represents a substantial gain in capability.

Reading comprehension tasks show similar gains. On SQuAD (the Stanford Question Answering Dataset) v1.1, where systems are scored by exact match and token-level F1 rather than plain accuracy, BERT's best ensemble reached a test F1 of 93.2, above the reported human F1 of 91.2. Earlier systems built on static embeddings, such as BiDAF with GloVe vectors, scored well below that.
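SQuAD results are normally reported as exact match and token-level F1 rather than plain accuracy. The sketch below is a simplified version of token-level F1, modeled on the answer normalization in the official SQuAD v1.1 evaluation script (lowercasing, stripping punctuation and the articles "a," "an," and "the"); the function names are hypothetical.

```python
import collections
import re
import string

PUNCTUATION = set(string.punctuation)


def normalize_answer(text):
    """Lowercase, drop punctuation and English articles, and collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def token_f1(prediction, ground_truth):
    """Harmonic mean of token-level precision and recall after normalization."""
    pred = normalize_answer(prediction).split()
    gold = normalize_answer(ground_truth).split()
    common = collections.Counter(pred) & collections.Counter(gold)  # shared tokens
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


print(token_f1("the Denver Broncos", "Denver Broncos"))  # 1.0
print(token_f1("Broncos", "Denver Broncos"))  # partial credit
```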

Machine translation saw similar leaps. Google reported that its 2016 switch from phrase-based statistical translation to neural machine translation reduced translation errors by roughly 60% on several major language pairs, and transformer-based systems later improved quality further. The quality improvement was perceptible to end users, not just measurable in academic metrics.

The transition happened with startling speed:

2017: The original "Attention Is All You Need" paper appeared. Initial reactions were skeptical—the architecture seemed too simple to beat established RNN approaches.

2018: BERT's release proved transformers worked. GPT (Generative Pre-trained Transformer) demonstrated impressive text generation. The NLP research community pivoted hard toward transformers.

2019-2020: A Cambrian explosion of transformer variants emerged. XLNet, RoBERTa, ALBERT, T5—each pushed performance higher through architectural tweaks and larger-scale pre-training.

2021-2022: Transformers jumped from NLP to computer vision, audio processing, protein folding prediction, and other domains. The architecture proved remarkably general.

2023-2024: Models like GPT-4, Claude, and Gemini, all built on transformer architectures, achieved capabilities that would have been out of reach for static embeddings. They handled multi-step reasoning, creative writing, code generation, and cross-lingual tasks, in some evaluations at or near human level.

Today, static word vectors have largely become historical artifacts. Academic papers that use Word2Vec or GloVe are typically working on resource-constrained scenarios or conducting historical comparisons.

The transition wasn't instantaneous. ELMo (Embeddings from Language Models), released in 2018 just before BERT, represented a crucial intermediate step. ELMo used bidirectional LSTMs (a type of RNN) to generate contextual embeddings, demonstrating that context-dependent representations dramatically improved performance.

ELMo showed the NLP community what was possible with contextual embeddings, but it retained the computational bottlenecks of recurrent architectures. BERT proved you could get even better contextual representations with transformers' parallelizable architecture.

This transition period was essential. It validated the core insight—that context-dependent representations were necessary—while creating demand for more efficient implementations. By the time BERT appeared, the research community was primed to embrace it.

The transformer revolution wasn't confined to academic benchmarks. Industry adoption happened remarkably fast.

Search Engines: Google began using BERT in Search in 2019 to better understand conversational and long-tail queries. At launch, Google said the change affected about one in ten English-language searches in the U.S.

Virtual Assistants: Voice assistants such as Siri, Alexa, and Google Assistant have increasingly adopted transformer-based language understanding, improving their handling of complex, multi-turn conversations and ambiguous requests.

Healthcare: Clinical NLP systems using transformers extract information from medical records, identify patient cohorts for clinical trials, and help support diagnosis by analyzing symptom descriptions.

Legal Tech: Document review, contract analysis, and legal research all benefit from transformers' ability to understand complex, domain-specific language and identify subtle patterns across massive document collections.

Content Moderation: Social media platforms use transformer models to detect hate speech, misinformation, and harmful content across dozens of languages, adapting to rapidly evolving slang and coded language.

This revolution came with costs. Transformers are computationally expensive. Self-attention has quadratic complexity in sequence length: every token scores every other token, so doubling the length of the input quadruples the number of attention scores.
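Counting attention scores makes the scaling concrete. This sketch assumes a single attention head and counts only query-key scores, ignoring the feed-forward layers, whose cost grows linearly. For contrast, it also counts a hypothetical sliding window of 64 positions on each side, one of the sparse patterns used to tame this cost.

```python
def full_attention_scores(n):
    """Full self-attention: every query scores every key, giving n * n scores."""
    return n * n


def sliding_window_scores(n, w):
    """Local attention sketch: each query scores only keys within w positions of itself."""
    return sum(min(n - 1, i + w) - max(0, i - w) + 1 for i in range(n))


for n in (512, 1024, 2048):
    print(n, full_attention_scores(n), sliding_window_scores(n, w=64))
# 512 262144 61888
# 1024 1048576 127936
# 2048 4194304 260032
```

The full-attention column quadruples each time the length doubles, while the windowed count only roughly doubles.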

Training large transformer models demands massive resources. One widely cited estimate puts GPT-3's training at about 1,287 MWh of electricity and roughly 550 metric tons of CO₂-equivalent emissions. The environmental and financial costs are non-trivial.

Inference costs matter too. Serving a BERT-sized model in real-time, high-volume applications requires significant hardware, creating barriers for researchers and companies with limited resources. This has spawned an entire subfield focused on model compression, distillation, and efficient architectures.

Static word vectors had one major advantage: efficiency. A Word2Vec model can be trained on a single ordinary machine, and once trained, retrieving a word's vector is a simple table lookup that takes a fraction of a millisecond. For simple tasks or resource-constrained environments, static vectors were often good enough.

The research community has attacked the efficiency problem from multiple angles.

Distillation: Models like DistilBERT compress full-sized transformers into smaller versions. DistilBERT retains about 97% of BERT's language-understanding performance while running 60% faster with 40% fewer parameters.

Sparse Attention: Instead of computing attention between all word pairs, sparse attention patterns focus on local neighborhoods or specific patterns, reducing computational complexity from quadratic to linear or near-linear in sequence length.

Retrieve-and-Rerank Pipelines: Hybrid approaches use fast, cheap retrieval (for example, with static embeddings) to narrow a large collection to a few candidates, then apply a transformer only to rerank those candidates, getting most of the accuracy at a fraction of the computational cost.

Quantization and Pruning: Techniques that reduce model precision and remove redundant parameters allow transformers to run on mobile devices and edge hardware.
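Quantization can be illustrated with the simplest scheme, symmetric per-tensor int8 quantization: scale all weights so the largest magnitude maps to 127, round to integers, and multiply back by the scale at inference time. This is a minimal sketch with made-up weights; production toolkits add per-channel scales, calibration, and integer arithmetic kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 for all-zero input
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [42, -127, 0, 90]
```

Each weight now needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight.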

These optimizations make transformers increasingly practical for deployment, though the fundamental trade-off between model capacity and computational cost remains.

If you're building NLP systems today, the choice is clear. Start with transformers. The ecosystem has matured to the point where using pre-trained models from Hugging Face or similar libraries requires minimal expertise.

For most applications, fine-tuning an existing model like BERT or RoBERTa on your specific dataset will outperform custom architectures built from scratch. Transfer learning—leveraging the contextual understanding learned during pre-training—has become the standard workflow.

That said, understand your constraints. If you're building systems for deployment on smartphones, IoT devices, or environments with strict latency requirements, you may need specialized compact models or alternative architectures.

For students and researchers, the shift means different priorities. Understanding attention mechanisms, positional encodings, and the mathematics of transformers is now foundational knowledge. The era when you could be an NLP expert without deep learning expertise is over.

Transformers continue to evolve. Current research explores several frontiers:

Longer Context Windows: Extending transformers to handle documents, books, and conversation histories spanning millions of tokens rather than thousands.

Multimodal Integration: Models that apply transformer architectures across text, images, audio, and video simultaneously, learning unified representations across modalities.

Improved Efficiency: Novel attention mechanisms and architectural innovations that maintain transformers' performance advantages while reducing computational costs.

Interpretability: Better techniques for understanding what attention heads learn and why models make specific predictions, addressing the "black box" criticism.

Domain Adaptation: Methods for efficiently adapting pre-trained transformers to specialized fields like law, medicine, and scientific research without requiring complete retraining.

"Some researchers argue we've reached a plateau. GPT-4 and Claude show impressive capabilities, but scaling alone may not reach artificial general intelligence. The next breakthrough might require fundamentally different architectures or training paradigms."

— Perspective from recent AI research literature

Others believe we're still in the early stages. If transformers can revolutionize language understanding in seven years, what might another seven years of research, larger datasets, and more computational power achieve?

The replacement of static word vectors by transformers follows a familiar pattern in technology. Superior approaches don't win immediately—they win when the ecosystem aligns.

Word2Vec and GloVe remained state-of-the-art for years because they solved real problems and the infrastructure to use them was mature. Transformers needed cloud computing infrastructure, specialized hardware (GPUs and TPUs), and frameworks like PyTorch and TensorFlow to become practical.

Once those pieces fell into place, adoption accelerated. Within two years of BERT's release, static embeddings had gone from ubiquitous to niche. The same pattern played out when deep learning displaced traditional machine learning, when smartphones replaced feature phones, when cloud computing displaced on-premises servers.

The lesson: technical superiority alone doesn't drive adoption. It requires supporting infrastructure, ecosystem buy-in, and applications that demonstrate tangible value. Transformers checked all those boxes.

The transformer revolution extends beyond technical circles. These models power technologies billions of people use daily, often invisibly.

When you search Google, transformers help interpret your query. When you ask a voice assistant a question, transformer models increasingly parse your intent. Automated customer-service replies are often generated by transformers, and social platforms rely on them to flag content for moderation.

This raises important questions. Who controls these powerful language models? How do we ensure they work fairly across different languages, dialects, and cultural contexts? What happens when automated systems can generate convincing misinformation at scale?

The computational costs create centralization pressures. Only a handful of organizations can afford to train models at the frontier. This concentrates power in ways that static word vectors—which any researcher could train on a desktop—never did.

At the same time, the democratization of pre-trained models means small teams can build applications that would have required significant resources just years ago. A startup can fine-tune BERT for a specialized domain and achieve professional-grade NLP capabilities.

Static word vectors taught us that geometric relationships in high-dimensional space could capture semantic meaning. They showed that unsupervised learning from massive text corpora could produce useful representations. They made modern NLP possible.

But they were always a stepping stone. Transformers and contextual embeddings represent a more sophisticated understanding of how language works. Meaning isn't fixed—it's constructed through context, and models that recognize this fundamental truth outperform those that don't.

The transition from static vectors to transformers is essentially complete. The question now isn't whether to adopt these architectures but how to use them responsibly, efficiently, and in service of applications that genuinely improve human capabilities. The technology that dethroned word vectors will itself eventually be surpassed. That's the nature of progress. But for now, transformers dominate natural language understanding, and the future they're building is just beginning to unfold.

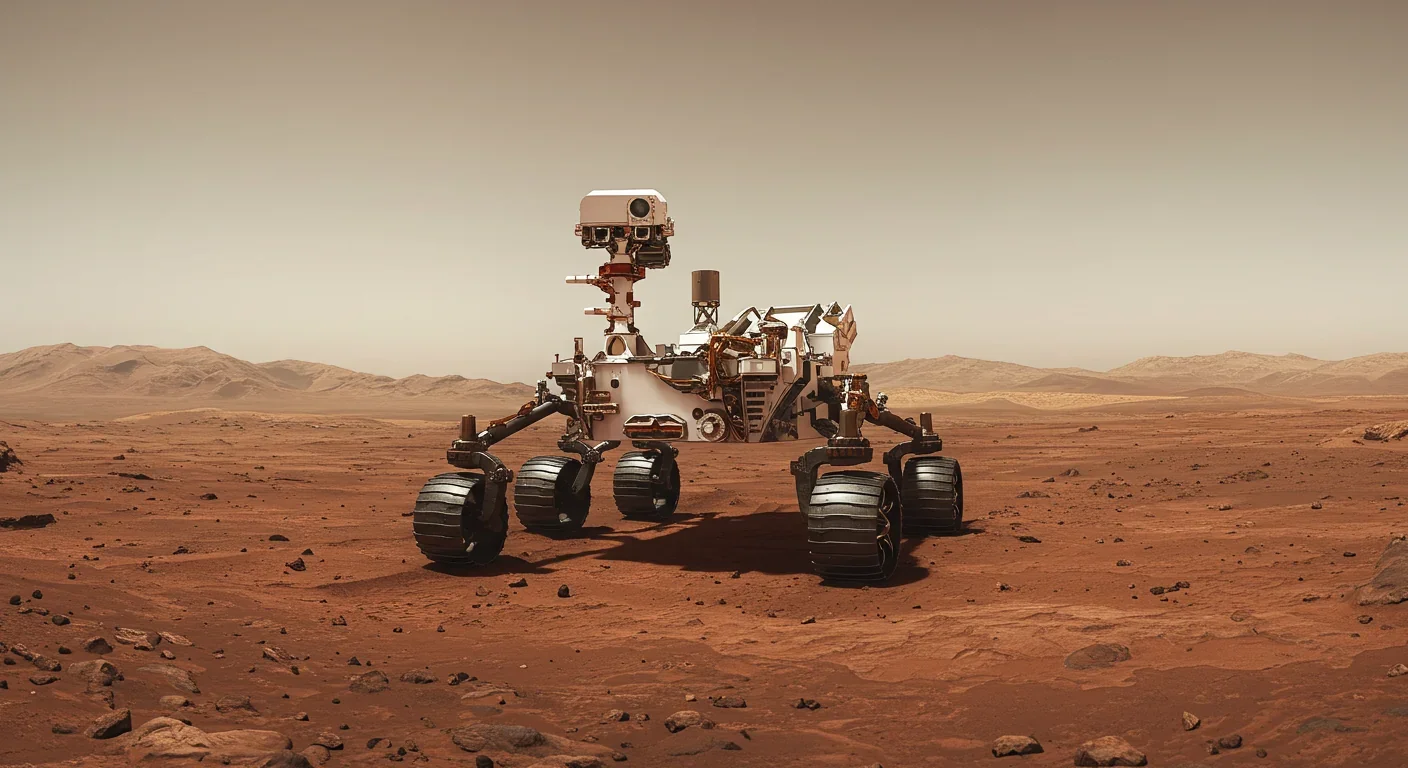
