The Hidden Memory Trap: Why Your Ideas Aren't Original

TL;DR: The curse of knowledge is a cognitive bias where experts, having internalized their expertise, can't imagine not knowing what they know. This makes brilliant specialists terrible teachers, leading to communication breakdowns in education, business, and product design. The article explores the neuroscience behind the curse and provides evidence-based strategies for experts to bridge the gap.

You've mastered your field. You can solve problems in your sleep. But when you try to explain something simple to a colleague or student, they stare at you blankly. You wonder: how do they not understand this? The answer isn't that they're slow—it's that you've fallen victim to the curse of knowledge, a cognitive bias so powerful it can turn the brightest experts into the worst teachers.

The curse of knowledge is a psychological trap where knowing something makes it impossible to imagine not knowing it. Once information enters your brain and becomes internalized, you lose access to your former ignorant state. You can't un-see what you've learned. This phenomenon, first named by economists in a 1989 study, affects everyone from professors to product designers, creating a systematic breakdown in communication between those who know and those who need to learn.

In 1990, Stanford psychology researcher Elizabeth Newton designed an experiment that would become the definitive demonstration of this curse. She divided participants into two groups: "tappers" and "listeners." The tappers received a list of 25 well-known songs like "Happy Birthday" and "The Star-Spangled Banner." Their job was to tap out the rhythm of a chosen song on a table while listeners tried to guess the tune.

Before starting, Newton asked the tappers to predict how many songs listeners would correctly identify. The tappers estimated that listeners would guess correctly about 50% of the time—a reasonable assumption if you're hearing the melody in your head as you tap.

The actual results were stunning. Out of 120 songs tapped, listeners correctly identified only 3—a success rate of 2.5%, not 50%. The tappers were off by a factor of 20.

What happened? When tappers tapped, they heard the full song playing in their minds—melody, harmony, lyrics, everything. To them, the taps were obviously "Happy Birthday." But listeners heard nothing but disconnected tapping, like Morse code without a key. The tappers, cursed by their knowledge of the melody, couldn't conceive how ambiguous their taps sounded. They couldn't unhear the music.

This experiment reveals the curse's core mechanism: once you possess knowledge, you automatically process information through that lens. You can't voluntarily suppress what you know to adopt a beginner's perspective. Your brain won't let you.

The curse of knowledge isn't laziness or arrogance—it's a byproduct of how expertise fundamentally changes your cognitive architecture. When you first learn a skill, every step requires conscious attention. You think about each movement when learning to drive, each word when learning a language, each concept when studying physics.

But as you practice, something remarkable happens: your brain automates the process. Neural pathways strengthen. Tasks that once demanded deliberate thought become unconscious. This automation is what separates experts from novices—experts have compressed thousands of hours of learning into instant, intuitive responses.

The problem emerges when experts try to teach. Because the knowledge has become automatic, experts lose conscious access to the intermediate steps. They skip foundational explanations because those steps no longer exist in their conscious awareness. It's like trying to remember how you learned to walk—the knowledge is there, but the learning process has been erased from conscious memory.

"Once we know something, we find it hard to imagine what it was like not to know that information."

— Gentelligence Research

Research on hindsight bias confirms this mechanism. When people learn the outcome of an event, they instantly feel they "knew it all along," even when they couldn't have predicted it beforehand. The new information rewrites their memory, making the outcome seem inevitable. Similarly, experts can't accurately reconstruct what it felt like to not understand their field because their brains have been permanently altered by learning it.

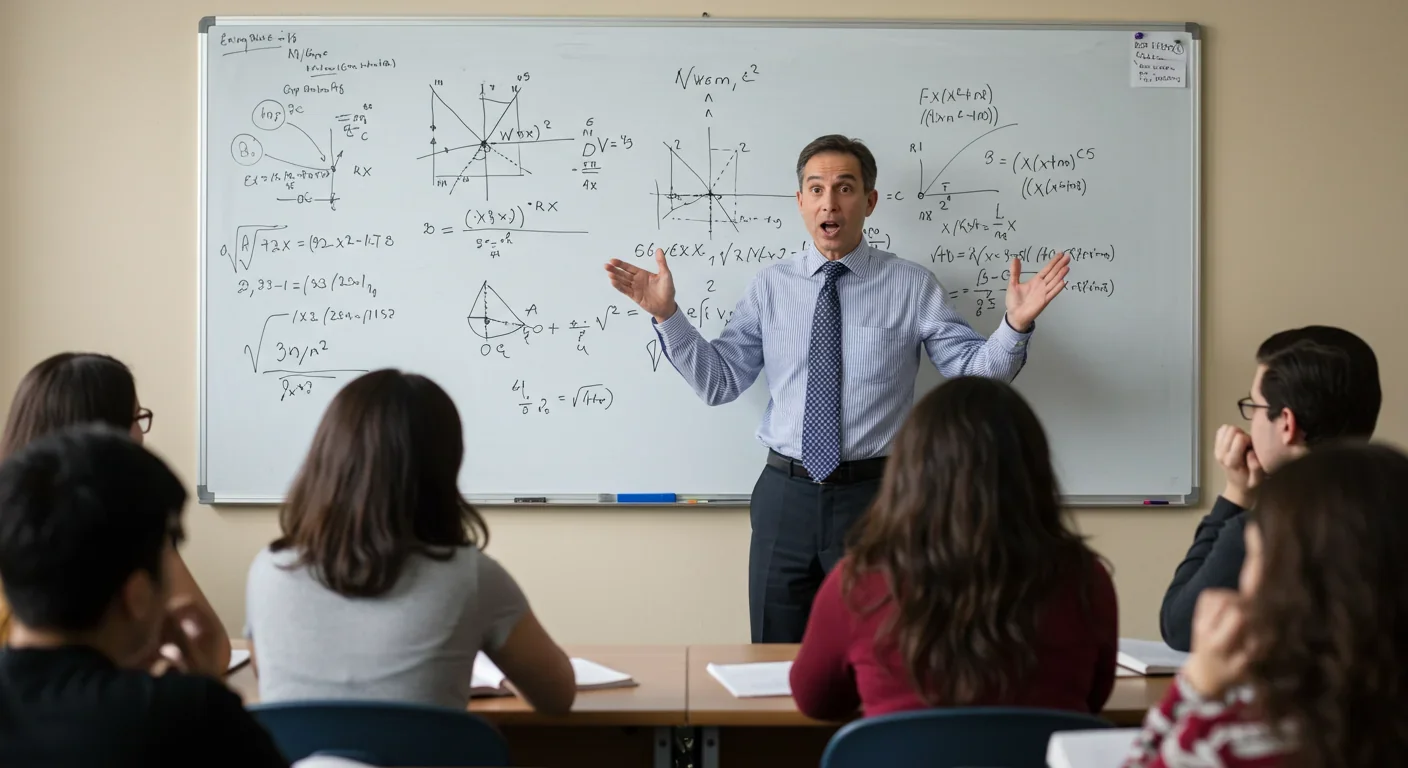

Walk into any university lecture hall, and you'll witness the curse in action. A physics professor breezes through a derivation, skipping steps that seem "obvious" to her but leave students frantically copying symbols they don't understand. A computer science instructor uses terminology like "polymorphism" and "dependency injection" without pausing to define them, assuming students share his conceptual framework.

Studies of instructional effectiveness reveal that experts consistently overestimate how much students understand, leading to what educators call "instructional scaffolding failures." When teachers skip crucial intermediate steps, students can't build the conceptual foundation needed for advanced concepts. The knowledge gap compounds, creating students who can memorize formulas but can't apply them creatively.

One professor reflected on his own blind spot: "I realize my students don't see the world the way I do." He had spent decades immersed in his discipline, developing what cognitive scientists call "chunked knowledge," the ability to see patterns and connections instantly. His students, lacking that expertise, needed explicit guidance to recognize what seemed obvious to him.

The consequences extend beyond frustration. When students repeatedly fail to understand expert explanations, they often blame themselves, assuming they lack intelligence rather than recognizing they lack appropriate instruction. This dynamic particularly harms students from non-traditional backgrounds who haven't been exposed to discipline-specific ways of thinking.

Educational researchers have developed the "Decoding the Disciplines" framework to combat this curse. The method forces experts to explicitly articulate their tacit knowledge—the unspoken mental moves they make when analyzing problems. By making the implicit explicit, experts can identify and teach the invisible skills that separate novices from practitioners.

The curse of knowledge wreaks havoc in business, particularly in product design and marketing. Engineers build features they find intuitive, forgetting that customers lack their technical knowledge. Marketing teams write copy saturated with industry jargon, assuming prospects understand terminology that's only familiar to insiders.

Consider the classic example of software documentation. Developers, who know exactly how their product works, write help files that skip basic concepts and use technical terms without definition. To them, these shortcuts make sense—why explain what everyone knows? But new users, confronting the interface for the first time, find the documentation impenetrable. The gap between expert assumption and user reality kills adoption.

One particularly effective strategy that UserTesting recommends: during an employee's first two weeks at a company, have them document everything in customer-facing materials that confuses them. Fresh employees still remember what it's like to be an outsider. After a few months, they'll be cursed too, unable to see the product with beginner's eyes. That two-week window captures invaluable perspective before the curse sets in.

The 1989 study that coined the term "curse of knowledge" analyzed economic negotiations where one party had privileged information. Researchers Colin Camerer, George Loewenstein, and Martin Weber found that better-informed parties consistently overestimated what their counterparts knew, leading to negotiation breakdowns. In bargaining experiments, informed participants couldn't ignore their advantage, even when trying to be fair. Their knowledge anchored their expectations, preventing them from accurately modeling their partner's perspective.

This dynamic plays out in workplace intergenerational conflicts. Older employees expect face-to-face meetings because that's how business has always been done—an assumption so obvious they don't articulate it. Younger employees default to Slack messages and expect rapid digital responses, a norm equally obvious to them. Neither side recognizes their assumptions until conflict arises, at which point both feel the other is being unreasonable. The curse makes each generation's "common sense" invisible to them and incomprehensible to others.

Understanding the curse requires examining how brains represent knowledge. When you learn something new, your brain creates neural connections linking concepts, facts, and procedures. As you practice, these connections strengthen through a process called long-term potentiation. Eventually, the knowledge becomes consolidated into your brain's architecture.

This consolidation is remarkably durable. You can't simply deactivate these neural pathways to simulate ignorance. Research on memory reconsolidation shows that even when we try to forget information, the pathways remain, influencing our thinking unconsciously. This is why telling experts to "think like a beginner" doesn't work: their brains are structurally different from beginners' brains.

The curse is particularly strong for procedural knowledge—skills like riding a bike, playing an instrument, or debugging code. Unlike factual knowledge (which can be consciously retrieved), procedural knowledge operates below conscious awareness. You can't verbally explain all the micro-adjustments you make when riding a bike because those adjustments happen faster than conscious thought. Similarly, expert programmers make dozens of intuitive decisions per minute that they can't consciously articulate because the knowledge has been compiled into unconscious competence.

"You can't unlearn what you've learned, and you can't see it with fresh eyes anymore."

— UserTesting Research

Mental models compound the curse. Experts develop sophisticated frameworks for understanding their domains—interconnected webs of concepts that let them quickly categorize problems and apply solutions. These models are incredibly efficient for expert thinking but nearly impossible to transfer intact. When experts try to explain their reasoning, they describe the conclusions their models produce, not the underlying structure. Beginners hear the conclusions but lack the model to generate similar insights themselves.

Breaking the curse requires systematic effort and specific techniques. The first step is awareness—recognizing the warning signs that you've slipped into cursed thinking. If you find yourself frustrated by questions that seem "basic," if you're using jargon without pausing to define it, if you're saying "obviously" or "clearly" frequently, you're probably cursed.

Once aware, experts can deploy several evidence-based strategies:

Seek fresh perspectives actively. Expose your explanations to genuine novices and watch where they stumble. Their confusion reveals the gaps in your explanation that the curse made invisible. Don't dismiss their struggles as stupidity—treat confusion as data about what needs better explanation.

Make the implicit explicit. Force yourself to articulate every step, even ones that feel "obvious." Use phrases like: "Let me back up and explain why this matters," or "What I'm assuming you already know is..." These phrases signal you're about to provide foundational context, inviting beginners to ask questions if that context isn't clear.

Use analogies from the learner's domain. Instead of explaining new concepts in terms of other new concepts, connect them to knowledge the learner already possesses. A computer science professor might explain recursion by comparing it to Russian nesting dolls rather than using more programming examples. The analogy bridges the knowledge gap by building on stable ground.
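
To make the nesting-doll analogy concrete, here is a minimal Python sketch. The `Doll` type and the `count_dolls` and `innermost` functions are hypothetical names chosen for illustration. Each call opens one doll and hands the smaller doll inside to the same function. The solid innermost doll plays the role of the base case, the point where the unpacking stops.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Doll:
    """A hypothetical nesting doll: `inner` is the next smaller doll, or None."""
    name: str
    inner: Optional["Doll"] = None


def count_dolls(doll: Optional[Doll]) -> int:
    """Count dolls by opening this one and handing the rest of the job to the same function."""
    if doll is None:  # base case: nothing left to open
        return 0
    return 1 + count_dolls(doll.inner)  # this doll plus every doll inside it


def innermost(doll: Doll) -> str:
    """Keep opening until reaching the solid doll that does not open."""
    if doll.inner is None:  # base case: the smallest doll
        return doll.name
    return innermost(doll.inner)  # same problem, one size smaller


nested = Doll("large", Doll("medium", Doll("small", Doll("tiny"))))
print(count_dolls(nested))  # 4
print(innermost(nested))    # tiny
```

A learner who has opened a set of nesting dolls already understands both halves of recursion: a task that repeats on a smaller version of itself, and a smallest case where the repetition ends.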

Teach backwards from problems to solutions. Experts often teach by building up from fundamentals—first principles, then applications. But beginners learn better when starting with concrete problems that illustrate why the fundamentals matter. Show the confusing situation first, then explain the concept that resolves the confusion. This sequence mirrors how experts originally learned: through encountering and solving problems.

Document your thinking process, not just your conclusions. When creating documentation or tutorials, narrate the reasoning behind each step. Don't just say what to do—explain why you're doing it and what alternatives you considered. This gives learners access to the expert thought process, not just expert outputs.

Use cognitive task analysis. This technique, borrowed from cognitive psychology, involves experts performing a task while verbalizing every decision, no matter how trivial it seems. Recording and reviewing these verbalizations reveals the hidden steps experts take unconsciously. These steps can then be explicitly taught to beginners.

Not everyone can fix the curse from the expert side. If you're a learner struggling with a cursed expert, you're not helpless—there are strategies to extract better explanations.

First, recognize that confusion is a signal, not a failure. When an expert explanation doesn't make sense, the problem usually lies in missing prerequisites, not in your intelligence. Instead of nodding along pretending to understand, explicitly identify where you lost the thread: "I understood everything up until you mentioned X. Can you explain what that is?"

Second, ask for examples before theory. When experts launch into abstract explanations, interrupt politely and request a concrete case: "Can you show me an example of that in practice?" Examples make the abstract tangible, providing scaffolding for understanding the general principle.

Third, paraphrase back what you think you heard. Say "So what you're saying is..." and restate the concept in your own words. This forces the expert to verify whether you've understood correctly and reveals misconceptions before they compound.

Fourth, seek multiple sources. If one expert's explanation doesn't work, find another. Different experts skip different steps based on their particular path to expertise. One explanation that's opaque might become clear when presented from another angle.

As society becomes more complex and specialized, the curse of knowledge grows more dangerous. We need experts to translate specialized knowledge into public understanding—climate scientists explaining climate change, economists explaining policy, doctors explaining health risks. When these translations fail due to the curse, public understanding suffers, leading to poor decisions and decreased trust in expertise.

Some promising developments suggest ways forward. AI language models, trained on vast corpora of text written at many levels of sophistication, might help bridge expertise gaps by translating expert language into beginner-friendly explanations. Because they are not locked into a single expert's perspective, they can generate explanations at different levels of complexity by pattern-matching against their training data.

Educational technology is also evolving to combat the curse. Adaptive learning systems monitor where students struggle and provide supplementary explanations automatically. Intelligent tutoring systems can scaffold instruction in ways human experts, blinded by their curse, might miss.
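
The monitoring idea can be illustrated with a toy Python sketch. The `StruggleMonitor` class, its threshold of two consecutive misses, and the hint texts are all hypothetical, and real adaptive learning systems use far richer models of the student. The sketch shows only the basic loop: track where a learner keeps answering incorrectly, then offer a supplementary explanation at that point.

```python
from collections import defaultdict
from typing import Dict, Optional

DEFAULT_HINT = "Let's back up and explain why this matters."


class StruggleMonitor:
    """Toy adaptive tutor: count misses per concept and offer extra help when a learner is stuck."""

    def __init__(self, hints: Dict[str, str], threshold: int = 2) -> None:
        self.hints = hints          # hypothetical mapping: concept -> supplementary explanation
        self.threshold = threshold  # hypothetical: consecutive misses before stepping in
        self.misses: Dict[str, int] = defaultdict(int)

    def record(self, concept: str, correct: bool) -> Optional[str]:
        """Log one answer; return a supplementary explanation once the learner is stuck."""
        if correct:
            self.misses[concept] = 0  # progress resets the struggle counter
            return None
        self.misses[concept] += 1
        if self.misses[concept] >= self.threshold:
            self.misses[concept] = 0  # reset so the hint is not repeated on every miss
            return self.hints.get(concept, DEFAULT_HINT)
        return None


monitor = StruggleMonitor({"fractions": "3/4 means 3 of 4 equal slices of one whole."})
print(monitor.record("fractions", correct=False))  # None: a single miss is not yet a pattern
print(monitor.record("fractions", correct=False))  # second miss in a row triggers the hint
```

A fixed threshold is the simplest possible trigger. It stands in for whatever model of student difficulty an actual system uses to decide when a learner needs more scaffolding.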

Perhaps most importantly, we're developing better frameworks for teaching teachers. Programs that train graduate students, subject matter experts, and technical professionals in pedagogy increasingly emphasize the curse of knowledge explicitly. By naming the bias and providing tools to counteract it, we can create a generation of experts who know how to escape their own blind spots.

The curse of knowledge isn't going away—it's a fundamental feature of how expertise works. But by understanding its mechanisms and deploying systematic strategies to counteract it, we can dramatically improve how knowledge flows from expert to beginner. In an age where complex problems require both deep expertise and broad public understanding, breaking this curse isn't optional—it's essential.

The gap between what experts know and what they can communicate may never fully close. But recognizing the curse is the first step toward bridging it. When you next find yourself baffled by someone's inability to grasp what seems obvious, pause and remember: the problem isn't their ignorance. It's your curse. And fortunately, curses can be broken—if you know they're there.
