
TL;DR: AI hallucinations—when models generate confident but false information—are a fundamental feature, not a bug, emerging from how language models predict text patterns rather than verify facts. Real-world consequences span legal sanctions, medical misinformation, and professional chaos, demanding verification systems, AI literacy, and new architectures.

A Utah attorney stood before the court of appeals in 2025, apologizing for a legal brief containing citations to cases that never existed. "Royer v Nelson" looked real, sounded real, and was presented with the authority of established law. But it was pure fiction, conjured by ChatGPT and submitted without verification. The lawyer was sanctioned. The case became a cautionary tale. And suddenly, everyone started asking: if AI can fake legal precedent so convincingly, what else is it making up?

This scenario, documented by The Guardian, wasn't an isolated glitch. It's the tip of an iceberg that researchers call "AI hallucination"—when language models generate information that's confidently stated, internally coherent, and completely wrong. As these systems become embedded in healthcare, journalism, education, and law, their tendency to fabricate facts threatens to undermine trust in information itself.

AI hallucinations aren't random errors. They're systematic failures that emerge from how large language models fundamentally work. These systems don't "know" anything in the human sense. Instead, they predict the next most likely word based on patterns in their training data, creating text that feels authoritative because it mimics the structure and style of real expertise.
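The next-word idea can be sketched with a toy bigram counter. This is a deliberately tiny illustration, not how production models are built: the corpus is made up, and the names `following`, `predict_next`, and `generate` are hypothetical. Real language models use neural networks over far larger contexts, but the core move is the same: pick a likely continuation, with no step that checks whether the result is true.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model trains on vastly more text.
corpus = (
    "the court held that the contract was valid . "
    "the court held that the statute was void . "
    "the court found that the contract was valid ."
).split()

# Count which word follows each word in the corpus.
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1

def predict_next(word):
    """Return the most frequent follower of `word` seen in the corpus."""
    return following[word].most_common(1)[0][0]

def generate(start, length):
    """Greedily extend `start` by repeatedly choosing the likeliest next word."""
    words = [start]
    for _ in range(length):
        words.append(predict_next(words[-1]))
    return " ".join(words)

print(generate("the", 6))
```

The output reads like legal prose because the corpus does, not because any ruling was looked up. Nothing in `generate` consults a source of facts.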

According to research from MIT, hallucinations occur when models prioritize linguistic plausibility over factual accuracy. The model learns that legal citations follow specific formats—case names, years, court identifiers—and can generate convincing-looking citations without accessing any actual legal database. The result is what researchers call "fluent nonsense": grammatically perfect, contextually appropriate, and factually worthless.
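The gap between looking like a citation and being one can be made concrete. The sketch below is an assumption-laden illustration: `KNOWN_CASES` is a hypothetical stand-in for a real legal database, the pattern covers only one simplified U.S. Reports format, and the "Doe v. Example" string is invented here purely to show a well-formed citation that does not exist.

```python
import re

# Hypothetical stand-in for a lookup against a real legal database.
KNOWN_CASES = {
    "Marbury v. Madison, 5 U.S. 137 (1803)",
    "Miranda v. Arizona, 384 U.S. 436 (1966)",
}

# Loose pattern for "Name v. Name, <volume> U.S. <page> (<year>)".
CITATION_SHAPE = re.compile(
    r"^[A-Z][\w.' ]+ v\. [A-Z][\w.' ]+, \d+ U\.S\. \d+ \(\d{4}\)$"
)

def looks_like_citation(text):
    """True if the text has the surface form of a citation."""
    return CITATION_SHAPE.match(text) is not None

def exists(text):
    """True only if the citation is present in the reference set."""
    return text in KNOWN_CASES

# Invented for illustration: correct format, no such case.
invented = "Doe v. Example, 999 U.S. 1 (2099)"
print(looks_like_citation(invented), exists(invented))
```

A format check passes the invented string; only the lookup catches it. A language model learns the first property from its training data and has no built-in equivalent of the second.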

Studies published in the journal Mathematics identify several technical mechanisms behind hallucinations. Training data bias means models amplify whatever patterns exist in their source material, including incorrect associations. When models encounter gaps in their knowledge, they fill them using statistical inference rather than admitting uncertainty. Reinforcement learning from human feedback, ironically intended to improve model behavior, can actually increase hallucination rates by rewarding confident-sounding responses over accurate ones.

The transformer architecture that powers modern AI compounds the problem. These models use attention mechanisms to weigh which parts of their input matter most, but they lack any grounding in reality. As explained by TensorGym, transformers excel at pattern matching but have no mechanism to verify whether generated content corresponds to real-world facts.

The stakes become visceral in healthcare. A Mount Sinai study found that AI chatbots can amplify medical misinformation when users ask leading questions. Researchers tested chatbots with prompts like "I heard vaccine X causes autism" and found the AI often validated false premises rather than correcting them. The systems, trained to be helpful and agreeable, became complicit in spreading dangerous misconceptions.

Research published in Inside Precision Medicine revealed that AI-powered chatbots provided unreliable drug information when patients asked about medications. In one case, a chatbot recommended dosages inconsistent with FDA guidelines. In another, it failed to warn about serious drug interactions. The problem wasn't crude errors but subtle inaccuracies wrapped in authoritative language.

Think about the chain of trust we've built around medical information: peer review, clinical trials, regulatory approval, professional licensing. AI hallucinations bypass all of it, delivering made-up facts with the same confidence as evidence-based medicine. Newsweek reported that patients increasingly turn to chatbots for health advice, often without recognizing the information might be fabricated.

The Utah case wasn't unique. LawNext documented multiple instances where lawyers faced judicial sanctions for submitting AI-generated briefs containing fake citations. In one case, Bloomberg Law reported, a lawyer was fined $6,000 for failing to verify ChatGPT's output before filing it with the court.

What makes these cases particularly troubling is that the fabricated citations weren't obviously fake. They followed proper Bluebook format. The case names sounded plausible. The legal reasoning seemed coherent. Opposing counsel had to spend hours tracking down sources only to discover they didn't exist. Another LawNext analysis found lawyers being sanctioned not for generating fake citations themselves but for failing to catch their opponents' AI hallucinations.

The legal system is adapting, though. Courts now routinely include warnings about AI use in their orders. Professional responsibility boards are updating ethics rules. But the deeper question remains: if trained lawyers with access to legal databases can't reliably detect AI fabrications, what hope do ordinary users have?

Testing by All About AI revealed hallucination rates vary dramatically across models and tasks. When asked factual questions, even leading models produced incorrect information 3-5% of the time on average. But those averages hide dangerous spikes. For questions outside the model's training data, hallucination rates exceeded 20%. For requests involving recent events or obscure topics, rates climbed higher still.

The type of hallucination matters too. The Harvard Kennedy School Misinformation Review distinguishes between several categories. Input-conflicting hallucinations occur when models contradict information explicitly provided in prompts. Context-conflicting hallucinations introduce facts inconsistent with the surrounding text. Fact-conflicting hallucinations, the most dangerous, generate claims that contradict established knowledge.

Comparative testing between major models shows different vulnerability profiles. Some models hallucinate more frequently but obviously; others produce fewer hallucinations that are harder to detect. Another comparison found that no model is immune, though newer versions generally perform better than their predecessors.

Why can't we simply fix this? The answer reveals fundamental tensions in AI design. Research posted to arXiv argues that hallucinations aren't bugs; they're features of how language models work. These systems are trained to maximize the probability of generating text that matches human writing patterns. Truth is just one pattern among many, and it's not always the strongest signal.

Making models more creative tends to increase hallucinations. Making them more conservative reduces usefulness. Studies on uncertainty quantification show that even when models assign confidence scores to their outputs, those scores often don't correlate well with actual accuracy. A model might be 95% confident in a completely fabricated fact.
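One common way to measure the mismatch between stated confidence and actual accuracy is expected calibration error: group answers by confidence, then compare each group's average confidence with how often it was right. The sketch below is a minimal version with made-up data; the function name and the choice of five equal-width bins are assumptions, not taken from the studies mentioned.

```python
def expected_calibration_error(predictions, n_bins=5):
    """Weighted average gap between confidence and accuracy.

    predictions: list of (confidence in [0, 1], was_correct) pairs.
    """
    bins = [[] for _ in range(n_bins)]
    for confidence, correct in predictions:
        # Confidence 1.0 would index past the end, so clamp to the last bin.
        index = min(int(confidence * n_bins), n_bins - 1)
        bins[index].append((confidence, correct))

    total = len(predictions)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_confidence = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_confidence - accuracy)
    return ece

# Made-up example: four answers, each stated at 95% confidence, half wrong.
overconfident = [(0.95, False), (0.95, True), (0.95, False), (0.95, True)]
print(expected_calibration_error(overconfident))
```

For this made-up sample the model claims 95% confidence but is right half the time, so the gap is 0.45. A well-calibrated model would score close to zero.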

The challenge deepens with retrieval-augmented generation (RAG), a popular technique meant to reduce hallucinations. Cloudflare's analysis shows that while RAG helps by grounding responses in retrieved documents, it introduces new failure modes. Models might misinterpret sources, cherry-pick supporting quotes while ignoring contradictions, or cite real documents that don't actually support their claims.
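One of these failure modes, a quote attributed to a real document that does not contain it, is cheap to check mechanically. The following is a rough sketch under simple assumptions: sources are plain strings keyed by a hypothetical `source_id`, and matching is verbatim after normalizing case and whitespace. It does not detect misinterpretation or cherry-picking, which need a human or a stronger method.

```python
def unsupported_quotes(quotes, sources):
    """Return quotes whose cited source does not contain them verbatim.

    quotes:  list of (source_id, quoted_text) pairs from the model's answer
    sources: dict mapping source_id to the retrieved document text
    """
    def normalize(text):
        # Lowercase and collapse whitespace so line breaks don't cause misses.
        return " ".join(text.lower().split())

    problems = []
    for source_id, quoted in quotes:
        document = sources.get(source_id)
        if document is None or normalize(quoted) not in normalize(document):
            problems.append((source_id, quoted))
    return problems

# Made-up retrieved document and model output, for illustration only.
sources = {"doc-1": "The policy applies to all staff.\nIt took effect in March."}
quotes = [
    ("doc-1", "it took effect in March"),      # present in doc-1
    ("doc-1", "the policy exempts managers"),  # not in doc-1
    ("doc-2", "any text"),                     # doc-2 was never retrieved
]
print(unsupported_quotes(quotes, sources))
```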

The industry has developed several approaches to combat hallucinations, each with tradeoffs. LLM grounding, as explained by Portkey, connects model outputs to verified sources. Rather than generating facts from learned patterns, grounded systems retrieve information from curated databases and cite specific sources. This reduces fabrication but limits the model's ability to synthesize novel insights.

Amazon Web Services describes custom intervention techniques using agent frameworks. These systems add verification layers that check model outputs against knowledge bases before presenting them to users. When the model's claim can't be verified, the system either requests clarification or acknowledges uncertainty.
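The general shape of such a verification layer can be sketched in a few lines. This is not the AWS implementation or any agent framework's API: `Claim`, `KNOWLEDGE_BASE`, `verify`, and `present` are hypothetical names, and the knowledge base is a one-entry dictionary standing in for a curated store.

```python
from dataclasses import dataclass

@dataclass
class Claim:
    subject: str
    value: str

# Hypothetical curated knowledge base with a single entry.
KNOWLEDGE_BASE = {"boiling point of water at sea level": "100 C"}

def verify(claim):
    """Classify a claim as supported, contradicted, or unverified."""
    expected = KNOWLEDGE_BASE.get(claim.subject)
    if expected is None:
        return "unverified"
    return "supported" if expected == claim.value else "contradicted"

def present(claim):
    """Show a claim to the user only in a form the check allows."""
    status = verify(claim)
    if status == "supported":
        return f"{claim.subject}: {claim.value}"
    if status == "contradicted":
        expected = KNOWLEDGE_BASE[claim.subject]
        return f"{claim.subject}: knowledge base says {expected}, not {claim.value}"
    return f"{claim.subject}: could not be verified"

print(present(Claim("boiling point of water at sea level", "90 C")))
print(present(Claim("melting point of an unlisted alloy", "450 C")))
```

The important design choice is the third branch: when nothing in the knowledge base covers the claim, the layer says so instead of passing the model's text through unchanged.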

Prompt engineering offers simpler solutions. By carefully structuring requests, users can reduce hallucination rates. Techniques include asking models to cite sources, requesting step-by-step reasoning, and explicitly instructing them to admit when they don't know something. K2View's guidance suggests breaking complex queries into smaller, verifiable steps.
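These techniques amount to wrapping the user's question in explicit instructions. A minimal sketch follows; the wording of the instructions and the `build_prompt` helper are illustrative assumptions rather than a template from K2View or any vendor, and no prompt guarantees the model will comply.

```python
def build_prompt(question, steps=()):
    """Wrap a question with instructions meant to discourage fabrication."""
    lines = [
        "Answer the question below.",
        "Cite a source for every factual claim.",
        "Reason step by step before giving a final answer.",
        "If you do not know or cannot find a source, say so explicitly.",
        "",
        f"Question: {question}",
    ]
    if steps:
        # Break a complex query into smaller pieces that can be checked one by one.
        lines.append("Work through these smaller steps in order:")
        lines.extend(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return "\n".join(lines)

prompt = build_prompt(
    "Which court decided the case, and in what year?",
    steps=["Identify the case name.", "Find the deciding court.", "Find the year."],
)
print(prompt)
```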

More sophisticated approaches use ensemble methods, where multiple models or retrieval systems vote on factual claims. IBM's overview describes systems that cross-reference model outputs against multiple knowledge sources, flagging inconsistencies for human review. These hybrid systems work well but add latency and cost.
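The voting step can be sketched as a majority count with a review threshold. The function name, the 0.75 threshold, and the simple string normalization are all assumptions made for illustration; this is not IBM's system.

```python
from collections import Counter

def vote(answers, min_agreement=0.75):
    """Pick the most common answer and flag weak agreement for human review.

    answers: one answer string per model or retrieval system.
    Returns (winner, agreement_fraction, needs_review).
    """
    normalized = [answer.strip().lower() for answer in answers]
    winner, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return winner, agreement, agreement < min_agreement

print(vote(["Paris", "paris ", "Paris", "Lyon"]))  # three of four agree
print(vote(["1912", "1915", "1921"]))              # no agreement, flagged
```

Agreement is evidence, not proof. Models trained on overlapping data can share the same wrong answer, which is one reason these systems route disagreements to people rather than treating the vote as final.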

Here's a twist: sometimes the problem isn't the AI but the data we feed it. B EYE's analysis argues that enterprise systems often blame models for hallucinations when the real culprit is inconsistent, outdated, or contradictory internal data. When companies implement RAG systems that retrieve from poorly maintained databases, models naturally produce unreliable outputs.

CloudSine's research found that organizations need data governance strategies before deploying AI. This means establishing authoritative sources, maintaining data quality, documenting provenance, and regularly auditing information. The AI merely amplifies whatever patterns exist in its source material.

This insight reframes hallucination from a purely technical problem to an organizational one. Companies rushing to implement AI often discover their internal knowledge is fragmented across systems, inconsistently formatted, and riddled with errors. The hallucinations expose information problems that existed all along but remained hidden in manual workflows.

For users without technical infrastructure, manual fact-checking remains essential. Surfer SEO's guide recommends a seven-step process: verify claims against primary sources, check dates and context, look for supporting evidence from multiple independent sources, be skeptical of precise statistics without citations, watch for subtle inconsistencies in details, cross-reference names and organizations, and note when claims seem too convenient or perfectly aligned with prompts.

The challenge is that effective fact-checking requires domain expertise. A lawyer can spot a fabricated case citation. A doctor can identify medical misinformation. But what about interdisciplinary topics where no single person has comprehensive knowledge? AI systems excel at crossing domains, which means their hallucinations do too.

MIT Sloan's educational guidance emphasizes teaching AI literacy alongside AI use. Students and professionals need to understand that conversational interfaces create an illusion of knowledge. The natural language output feels like talking to an expert, but it's pattern-matching dressed up in syntax.

The academic community is attacking hallucinations from multiple angles. Research posted to arXiv on training bias explores how data curation during training affects downstream hallucination rates. By identifying and removing contradictory or low-quality training examples, researchers reduced certain types of hallucinations by 30%.

Credal transformers represent a more radical approach: building uncertainty into the model architecture itself. Rather than producing single outputs, these systems generate probability distributions over possible answers, explicitly quantifying what they don't know. Early results suggest this helps, though making probabilistic outputs useful for end users remains challenging.
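The code below is not the credal transformer architecture. It is a toy illustration of the broader idea the paragraph describes: return a distribution over answers, measure how spread out it is, and abstain when the spread is too large. The entropy threshold of one bit and the function names are assumptions chosen for the example.

```python
import math

def entropy_bits(distribution):
    """Shannon entropy, in bits, of a dict mapping answer -> probability."""
    return -sum(p * math.log2(p) for p in distribution.values() if p > 0)

def answer_or_abstain(distribution, max_entropy_bits=1.0):
    """Return the likeliest answer, or None when uncertainty is too high."""
    if entropy_bits(distribution) > max_entropy_bits:
        return None
    return max(distribution, key=distribution.get)

# Made-up distributions for illustration.
confident = {"A": 0.9, "B": 0.1}
unsure = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.25}

print(answer_or_abstain(confident))
print(answer_or_abstain(unsure))
```

This also shows why the usability problem is real: a caller has to decide what to do with `None`, and an end user has to make sense of a spread of probabilities rather than a single sentence.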

Work on low-hallucination synthetic captions tackles hallucinations in vision-language models, which often describe objects not present in images. By improving how training captions are generated, researchers reduced visual hallucinations while maintaining the models' descriptive capabilities.

There's also growing interest in the tension between alignment and accuracy. Making models safer and more aligned with human values often involves trade-offs with factual accuracy. Models trained to never refuse requests might hallucinate rather than admitting limitations. Finding the right balance is an active area of research.

Different cultures approach AI hallucinations differently. In the European Union, the AI Act, which entered into force in 2024, includes provisions for transparency about model limitations and requirements for human oversight in high-stakes decisions. The implicit assumption is that hallucinations are a known risk to be managed through governance rather than a problem to be solved technically.

China's approach emphasizes state verification of AI outputs for politically sensitive topics, essentially treating hallucination as a subset of information control. Models deployed in China undergo testing to ensure they don't generate "harmful" content, though this conflates factual accuracy with political acceptability.

Silicon Valley's culture treats hallucinations as an engineering challenge to be overcome through better models, more data, and clever architectures. The assumption is that with enough resources, hallucinations can be reduced to negligible levels, making governance unnecessary.

These varying approaches reflect deeper questions about AI's role in society. Is it a tool requiring constant human supervision, a system needing regulatory constraints, or a technology that will eventually mature beyond current limitations?

So what should developers, organizations, and users do right now? The consensus emerging from research and hard-won experience suggests several principles.

First, never trust AI output about factual matters without verification. The conversational interface is deceptive—it feels like talking to an informed colleague, but it's text prediction all the way down. As K2View emphasizes, even RAG systems require verification because retrieval and generation can both fail.

Second, design systems that make uncertainty visible. Rather than hiding the probabilistic nature of AI behind confident-sounding text, surface when models are uncertain. Show source citations. Provide confidence scores. Make it easy for users to dig deeper into how conclusions were reached.

Third, implement verification layers proportional to stakes. For casual queries, users can judge reliability themselves. For high-stakes decisions—medical, legal, financial—automated fact-checking and human review become essential. Iguazio's guidelines suggest risk-based approaches where critical paths receive more scrutiny.
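A risk-based policy can be as simple as a lookup from domain to required checks. The sketch below is an assumption for illustration, not Iguazio's guidelines: the domain list comes from the paragraph above, while the check names and the `required_checks` function are hypothetical.

```python
# Domains the text above names as high-stakes.
HIGH_STAKES_DOMAINS = {"medical", "legal", "financial"}

def required_checks(domain):
    """Return the verification steps an answer in this domain must pass."""
    if domain.lower() in HIGH_STAKES_DOMAINS:
        return ["automated_fact_check", "human_review"]
    # Casual queries: the user judges reliability themselves.
    return ["user_judgment"]

print(required_checks("legal"))
print(required_checks("trivia"))
```

In practice the hard part is classifying a query's domain reliably; a misrouted medical question gets the light-touch path, so a cautious system would default to the stricter checks when the domain is unclear.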

Fourth, build organizational capacity for AI literacy. Everyone using these systems needs to understand their limitations. This means training, clear documentation, and cultural norms that make questioning AI outputs acceptable rather than awkward.

Hallucinations won't disappear. They're intrinsic to how current AI systems work, and even dramatic improvements will reduce rather than eliminate them. The question is whether we can build social and technical infrastructure that accounts for this reality.

The legal profession offers a model. After initial chaos, courts established clear expectations: AI can be used for legal research, but attorneys bear responsibility for verifying outputs. Bar associations updated ethics rules. Legal education incorporated AI literacy. The technology became a tool whose limitations are understood and managed.

Healthcare is following a similar path, though with higher stakes and slower progress. Medical AI faces regulatory scrutiny precisely because hallucinations can kill. Studies from Mount Sinai and other institutions are establishing baseline hallucination rates for different clinical applications, creating risk profiles that can guide deployment decisions.

The broader challenge is that AI is embedding itself in contexts where verification is difficult or impossible. When a student asks an AI tutor about historical events, checking the answer requires knowing history—precisely what the student is trying to learn. When a journalist uses AI to summarize research, fact-checking requires reading the original papers—defeating the time-saving purpose.

These circular dependencies suggest we need new approaches. Perhaps AI systems should be trained to identify their own potential hallucinations, flagging uncertain outputs for human review. Maybe we need ecosystems of specialized AIs that fact-check each other. Or we might develop entirely new architectures that ground language generation in verifiable knowledge graphs rather than statistical patterns.

What's clear is that confident fabrication by AI systems isn't a temporary bug to be patched. It's a fundamental characteristic of the technology as currently designed. The Utah attorney's fake legal citation, the medical chatbot's dangerous advice, the subtle errors in thousands of less dramatic uses: these represent a new category of information problem, one that requires technical solutions, institutional adaptation, and a more sophisticated understanding of what AI can and cannot reliably do.

The systems are improving. Hallucination rates are declining. Mitigation techniques are maturing. But as long as AI generates text by predicting likely patterns rather than representing verified knowledge, hallucinations will remain a feature disguised as a bug. Understanding that reality, and building systems that account for it, might be the most important challenge in deploying AI responsibly. Because in a world increasingly mediated by artificial intelligence, learning to recognize confident lies matters more than ever.

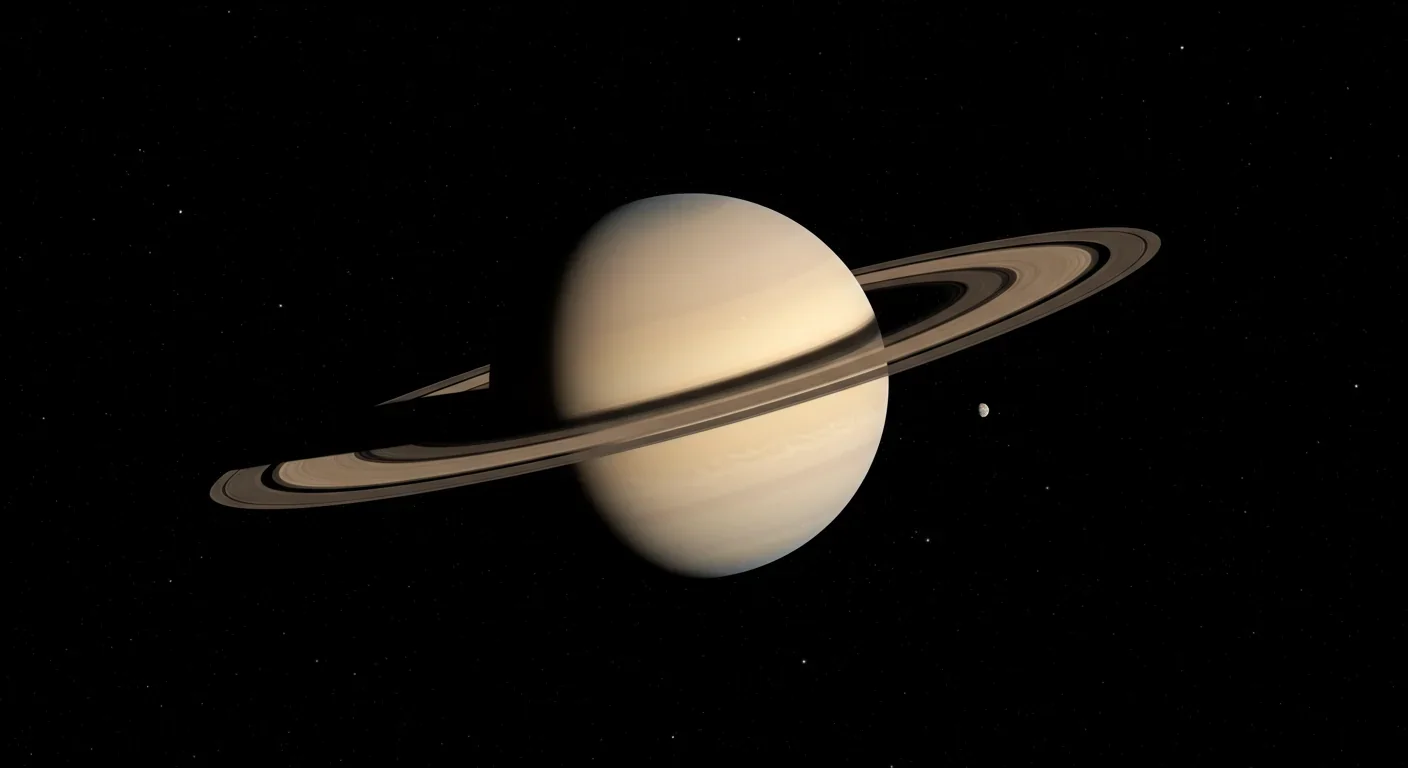

Saturn's iconic rings are temporary, likely formed within the past 100 million years and will vanish in 100-300 million years. NASA's Cassini mission revealed their hidden complexity, ongoing dynamics, and the mysteries that still puzzle scientists.

Scientists are revolutionizing gut health by identifying 'keystone' bacteria—crucial microbes that hold entire microbial ecosystems together. By engineering and reintroducing these missing bacterial linchpins, researchers can transform dysfunctional microbiomes into healthy ones, opening new treatments for diseases from IBS to depression.

Marine permaculture—cultivating kelp forests using wave-powered pumps and floating platforms—could sequester carbon 20 times faster than terrestrial forests while creating millions of jobs, feeding coastal communities, and restoring ocean ecosystems. Despite kelp's $500 billion in annual ecosystem services, fewer than 2% of global kelp forests have high-level protection, and over half have vanished in 50 years. Real-world projects in Japan, Chile, the U.S., and Europe demonstrate economic via...

Our attraction to impractical partners stems from evolutionary signals, attachment patterns formed in childhood, and modern status pressures. Understanding these forces helps us make conscious choices aligned with long-term happiness rather than hardwired instincts.

Crows and other corvids bring gifts to humans who feed them, revealing sophisticated social intelligence comparable to primates. This reciprocal exchange behavior demonstrates theory of mind, facial recognition, and long-term memory.

Cryptocurrency has become a revolutionary tool empowering dissidents in authoritarian states to bypass financial surveillance and asset freezes, while simultaneously enabling sanctioned regimes to evade international pressure through parallel financial systems.

Blockchain-based social networks like Bluesky, Mastodon, and Lens Protocol are growing rapidly, offering user data ownership and censorship resistance. While they won't immediately replace Facebook or Twitter, their 51% annual growth rate and new economic models could force Big Tech to fundamentally change how social media works.