
TL;DR: Companies use sophisticated algorithms to charge different prices to different customers based on browsing history, device type, location, and behavioral patterns. While legal in most cases, these practices raise ethical concerns, and regulatory scrutiny is increasing.

You're shopping for the same flight, from the same airport, on the same day. Your friend pulls up the airline's website and sees $247. You check your phone and see $312. Same flight, same seat, same everything. What gives?

Welcome to the world of algorithmic pricing discrimination, where invisible systems decide how much you're worth and how much you should pay. These aren't random fluctuations or lucky timing; they're calculated decisions made by machine learning models that can analyze thousands of data points about you in milliseconds. Your browsing history, device type, location, purchase patterns, and even the time of day you typically shop all factor into a personalized price tag that might be higher or lower than the one shown to the person sitting next to you.

This isn't science fiction. It's happening right now, every time you book a ride, reserve a hotel, or add something to your cart. Companies call it "dynamic pricing" or "revenue optimization." Consumer advocates call it price discrimination, and it's reshaping the fundamental nature of commerce. The invisible hand of the market has been replaced by the invisible algorithm of profit maximization.

Algorithmic pricing systems work by constantly adjusting prices based on real-time data. Many aren't simple formulas; they're machine learning models, sometimes neural networks, trained on millions of transactions and learning patterns that even their creators don't fully understand. The systems ingest data from dozens of sources: your browsing behavior, competitor prices, inventory levels, weather forecasts, local events, economic indicators, and predictive models of future demand.

The sophistication is staggering. Machine learning models can identify micro-segments of customers, each with different price sensitivities. A model might learn, for example, that someone searching from an iPhone in an affluent zip code tends to pay more than someone on an Android device in a middle-income area. These systems can also track how many times you've visited a product page, since repeated visits signal higher intent and willingness to pay.
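To make micro-segmentation concrete, here is a minimal Python sketch. It assumes a simple rule-based model with made-up multipliers for device, an income tier inferred from zip code, and repeat visits. Real systems typically learn such effects from data, and none of these numbers or names (`personalized_price`, `Shopper`) come from any actual company.

```python
from dataclasses import dataclass

# Hypothetical multipliers for illustration only; a real system would learn
# effects like these from transaction data rather than hard-code them.
DEVICE_MULTIPLIER = {"ios": 1.05, "android": 1.00, "desktop": 1.00}
INCOME_TIER_MULTIPLIER = {"high": 1.08, "middle": 1.00, "low": 0.97}
REPEAT_VISIT_STEP = 0.02  # +2% per prior visit to the same product page
REPEAT_VISIT_CAP = 0.10   # repeat visits add at most +10%


@dataclass
class Shopper:
    device: str
    income_tier: str   # inferred from zip code, never asked directly
    prior_visits: int


def personalized_price(base_price: float, shopper: Shopper) -> float:
    """Scale a base price by the shopper's segment multipliers."""
    multiplier = DEVICE_MULTIPLIER.get(shopper.device, 1.0)
    multiplier *= INCOME_TIER_MULTIPLIER.get(shopper.income_tier, 1.0)
    multiplier *= 1.0 + min(shopper.prior_visits * REPEAT_VISIT_STEP, REPEAT_VISIT_CAP)
    return round(base_price * multiplier, 2)


iphone_affluent = Shopper(device="ios", income_tier="high", prior_visits=3)
android_middle = Shopper(device="android", income_tier="middle", prior_visits=0)
print(personalized_price(247.00, iphone_affluent))  # 296.9
print(personalized_price(247.00, android_middle))   # 247.0
```

The same $247 fare becomes roughly $297 for the first shopper purely because of who the model thinks they are.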

Airlines were pioneers here. American Airlines introduced yield management in the 1980s, and its then-president, Robert Crandall, claimed it generated an extra $500 million annually. Back then, the logic was relatively simple: charge more for last-minute bookings, when business travelers had no choice. Today's systems are far more complex, adjusting prices thousands of times per day based on seat availability, competitor actions, historical patterns, and individual customer profiles.

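The technology has become remarkably efficient at extracting consumer surplus, the economic term for the gap between what you're willing to pay and what you actually pay. Research on airline markets estimates that current pricing practices achieve approximately 89% of the theoretical maximum welfare, a figure that would have seemed impossible a generation ago. Welfare here means total surplus, shared between travelers and airlines, so the figure measures how efficiently seats are allocated rather than how much of that surplus stays with you. Increasingly, what's left in your pocket is there by design, not by accident.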
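A small worked example shows what extracting consumer surplus means. The sketch below assumes five hypothetical travelers with made-up willingness-to-pay values and zero marginal cost, and compares a single posted price with perfect (first-degree) price discrimination, where each traveler pays exactly their maximum.

```python
# Hypothetical willingness-to-pay (WTP) values for five travelers, in dollars.
WTP = [400, 320, 250, 180, 120]


def uniform_price_outcome(price: int, wtp: list[int]) -> tuple[int, int]:
    """Everyone whose WTP is at least the posted price buys at that price.
    Returns (seller revenue, total consumer surplus)."""
    buyers = [w for w in wtp if w >= price]
    revenue = price * len(buyers)
    consumer_surplus = sum(w - price for w in buyers)
    return revenue, consumer_surplus


def perfect_discrimination_outcome(wtp: list[int], marginal_cost: int = 0) -> tuple[int, int]:
    """First-degree price discrimination: each buyer pays exactly their WTP,
    so the seller collects all of it and consumer surplus is zero."""
    buyers = [w for w in wtp if w >= marginal_cost]
    return sum(buyers), 0


print(uniform_price_outcome(250, WTP))      # (750, 220)
print(perfect_discrimination_outcome(WTP))  # (1270, 0)
```

Under the uniform $250 price, two travelers don't fly and the three who do keep $220 of surplus. Under perfect discrimination everyone flies, so total surplus rises from $970 to $1,270, yet consumers keep nothing. A high welfare figure on its own therefore says little about how much of the surplus reaches travelers.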

Ride-sharing apps brought algorithmic pricing to the masses, making it visible and controversial. Uber's surge pricing multiplier became infamous, turning routine trips into expensive surprises during peak demand. The company justified it as necessary to bring more drivers onto the road, balancing supply and demand in real time.
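Uber does not publish its surge formula, so the following is only a toy illustration of the supply-and-demand idea the company describes: the multiplier rises with the ratio of open ride requests to available drivers, is floored at 1.0x, and is capped. The 3.0x cap, the rounding to one decimal place, and the function name `surge_multiplier` are all assumptions.

```python
def surge_multiplier(open_requests: int, available_drivers: int,
                     cap: float = 3.0) -> float:
    """Toy surge rule (hypothetical, not Uber's formula): the multiplier tracks the
    demand/supply ratio, never drops below 1.0x, never exceeds `cap`, and is
    rounded to one decimal place for display (e.g. 1.3x)."""
    if available_drivers <= 0:
        return cap  # no supply at all: charge the maximum
    ratio = open_requests / available_drivers
    return round(max(1.0, min(ratio, cap)), 1)


print(surge_multiplier(10, 20))  # 1.0
print(surge_multiplier(26, 20))  # 1.3
print(surge_multiplier(90, 20))  # 3.0
```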

But the system may do more than respond to aggregate demand. It can also learn individual patterns: an algorithm could learn whether you typically accept surge prices or wait them out, or that some riders are price-insensitive during emergencies or after nights out. Studies of Uber's surge pricing strategy describe a sophisticated balancing act designed to maximize revenue while keeping just enough drivers on the road to avoid a complete market failure.

The psychological impact is significant. Unlike airline tickets purchased weeks in advance, surge pricing hits you in the moment when you're tired, stressed, or stranded. You can see the price climbing in real time, creating pressure to book before it gets worse. The algorithm understands this urgency and exploits it.

What's less visible is that ride-sharing apps may also adjust base prices based on your profile. Such personalized pricing would extend beyond surge multipliers to subtle adjustments in regular fares based on your history, device, and predicted price sensitivity, so two riders requesting the same trip at the same time might see different base prices before any surge is applied.

Online shopping has become a minefield of dynamic pricing, with retailers constantly testing your willingness to pay. Amazon, the e-commerce giant, has faced intense scrutiny for pricing practices that some customers describe as discriminatory. The company adjusts prices millions of times per day based on competitor pricing, inventory levels, and customer behavior.

E-commerce pricing strategies have become incredibly sophisticated. Retailers track your entire customer journey: what you searched for, what you clicked on, how long you lingered on product pages, what you added and removed from your cart, and what you ultimately purchased. This behavioral data feeds algorithms that predict your maximum willingness to pay.

The practice of showing different prices to different users based on their profile isn't just theory. Investigations have documented instances where personal data is used to set individualized consumer prices that generate higher profits for companies. The FTC has launched a study of these "surveillance pricing" practices, focusing on the firms that sell pricing tools to retailers, and its preliminary findings indicate that details such as a shopper's location, demographics, and browsing history can be used to target individualized prices.

Shopping cart abandonment emails offer a window into this system. That 10% discount code that arrives an hour after you leave items in your cart? The algorithm calculated that you need a small incentive but not a large one. Someone else might get 20% off, or no offer at all if the system predicts they'll return and purchase anyway.
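The "small incentive but not a large one" logic can be framed as an expected-revenue calculation. This sketch assumes the retailer already has a model predicting how likely a shopper is to complete the purchase at each discount level; the probabilities below are invented for illustration, and the function ignores product cost and margin.

```python
# Hypothetical model outputs: predicted probability that each shopper completes
# the purchase if sent a code for the given discount (0.10 = 10% off).
typical_shopper = {0.00: 0.30, 0.10: 0.55, 0.20: 0.60}
price_sensitive = {0.00: 0.05, 0.10: 0.15, 0.20: 0.50}
will_return_anyway = {0.00: 0.80, 0.10: 0.85, 0.20: 0.90}


def best_discount(cart_value: float, conversion: dict[float, float]) -> float:
    """Choose the discount that maximizes expected revenue,
    cart_value * (1 - discount) * P(purchase | discount).
    Ignores product cost, so it optimizes revenue rather than profit."""
    return max(conversion, key=lambda d: cart_value * (1 - d) * conversion[d])


for name, model in [("typical", typical_shopper),
                    ("price-sensitive", price_sensitive),
                    ("will return anyway", will_return_anyway)]:
    print(name, best_discount(100.0, model))
```

With these made-up numbers, the typical shopper gets 10% off, the price-sensitive shopper gets 20%, and the shopper predicted to return anyway gets no offer at all.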

Hotels and booking platforms have created perhaps the most opaque pricing ecosystem. The same room on the same night can appear at wildly different prices depending on where you look and who you are. Shopping around reveals multiple layers of price discrimination: rates vary by booking platform, loyalty status, device type, geographic location, and browsing history.

The hotel industry pioneered revenue management alongside airlines, but the online booking revolution added new complexity. Now you're not just dealing with the hotel's pricing algorithm but also the algorithms of Booking.com, Expedia, Hotels.com, and dozens of other platforms, each with their own optimization strategies.

These platforms use dynamic pricing models that consider hundreds of variables: local events, competitor pricing, weather forecasts, historical patterns, and your individual likelihood of booking. The system knows that someone searching for a hotel two hours from now will pay more than someone planning a trip three months out. It knows that mobile users book more impulsively than desktop users.

What's particularly insidious is the way these platforms obscure true availability and pricing. That message saying "only 2 rooms left at this price" might be algorithmically generated pressure rather than actual scarcity. The countdown timer isn't necessarily tied to real inventory; it's a psychological tool to push you toward an immediate decision before you can comparison shop.

The legal landscape around algorithmic pricing is murky and rapidly evolving. Traditional price discrimination has long been legal in most contexts; businesses have always charged different customers different prices. But personalized pricing based on surveillance of individual behavior raises new questions about fairness, transparency, and consumer protection.

The FTC has increasingly focused on these practices. Recent actions against major retailers have highlighted concerns about how personal data is weaponized to extract maximum revenue from each customer. The agency argues that when companies use detailed surveillance to identify vulnerable consumers or exploit moments of desperation, they cross ethical and potentially legal lines.

European regulators have gone further, with GDPR providing some protections against automated decision-making that significantly affects consumers. The regulation requires transparency about algorithmic decisions and gives consumers rights to contest them. However, enforcement remains spotty, and companies have become adept at obscuring their pricing logic behind trade secret claims.

Research into price discrimination in international airline markets demonstrates the global nature of these practices. Airlines operating across jurisdictions face different regulatory environments, leading to a patchwork of protections. What's legal in the United States might violate European consumer protection laws, creating complexity for both companies and consumers.

The fundamental legal question is whether algorithmic pricing constitutes unfair discrimination when it's based on protected characteristics or proxies for them. If an algorithm learns that certain demographic groups are more price-sensitive and systematically charges them less, is that efficiency or illegal discrimination? Courts haven't definitively answered this question.

Consumers aren't entirely powerless, and awareness is the first defense. Several telltale signs suggest you're seeing a personalized price rather than a universal one. The most obvious is price variation across devices or browsers. If you see different prices when checking from your phone versus your laptop, the price may be targeted at you, although caching, app-only deals, and A/B tests can also produce differences.

Incognito mode booking has become popular wisdom for avoiding price manipulation. The theory is that browsing in private mode prevents companies from tracking your interest and inflating prices on repeat visits. Reality is more complicated. While clearing cookies can help, sophisticated systems use device fingerprinting, IP addresses, and other identifiers that persist across private browsing.
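Device fingerprinting explains why private browsing is a weak defense. The sketch below, with hypothetical attribute names and values, hashes a handful of browser and network attributes into an identifier. Because no cookie is an input, a private window on the same machine produces the same fingerprint. Real fingerprinting scripts collect many more signals, and some browsers now try to randomize them.

```python
import hashlib
import json


def device_fingerprint(attributes: dict[str, str]) -> str:
    """Hash a canonical (key-sorted) JSON encoding of device attributes into a short ID.
    Cookies are not an input, so clearing them does not change the result."""
    canonical = json.dumps(attributes, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical attributes a site script might read; values are made up.
attributes = {
    "user_agent": "ExampleBrowser/1.0 (Macintosh)",
    "screen": "2560x1440",
    "timezone": "America/Chicago",
    "language": "en-US",
    "ip_prefix": "203.0.113",  # documentation-only address range
}

normal_session = {"cookie_id": "c-81f2", "attributes": dict(attributes)}
private_session = {"cookie_id": None, "attributes": dict(attributes)}  # cookies cleared

print(device_fingerprint(normal_session["attributes"])
      == device_fingerprint(private_session["attributes"]))  # True
```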

Price comparison tools offer some protection by aggregating options from multiple sources, but they have limitations. Many booking platforms pay comparison sites for prominent placement, biasing the results you see. Additionally, some businesses have learned to game these comparison tools, showing attractive rates that disappear during the booking process or come with hidden fees.

Timing your purchases strategically can help. Many systems assume you'll pay more when you're in a hurry, so booking well in advance generally yields better prices. However, AI-powered pricing systems are learning to counter this, using predictive analytics to identify early bookers who are price-conscious versus those who are simply organized planners willing to pay premium rates.

Creating multiple accounts or using different email addresses can sometimes reveal price variations, though this is time-consuming and potentially violates terms of service. Some consumers have reported success with VPN services to appear in different geographic locations, though companies are increasingly detecting and blocking this tactic.

The market has responded to consumer concerns with tools designed to level the playing field. Browser extensions like Honey and Capital One Shopping promise to find better prices and apply coupon codes automatically. While helpful, these tools also collect data on your shopping behavior, potentially feeding the same algorithmic systems they claim to counter.

Price tracking apps and services monitor products over time, alerting you when prices drop. CamelCamelCamel for Amazon and similar services for other retailers can help you avoid buying at peak prices. However, sophisticated e-commerce pricing strategies now account for these trackers, sometimes maintaining artificially high prices that occasionally drop to create the illusion of deals.
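Price trackers are useful partly because they let you measure a discount against what an item has actually sold for, rather than against the seller's "was" price. A minimal sketch, assuming a hypothetical list of previously observed prices for one product:

```python
from statistics import median


def claimed_discount(current: float, was_price: float) -> float:
    """Discount the seller advertises, relative to its own 'was' price (percent)."""
    return round(100 * (was_price - current) / was_price, 1)


def discount_vs_history(current: float, history: list[float]) -> float:
    """Discount relative to the median price actually observed (percent);
    a negative value means today's price is above the typical price."""
    typical = median(history)
    return round(100 * (typical - current) / typical, 1)


# Hypothetical tracker observations for one product.
history = [104.99, 99.99, 109.99, 104.99, 104.99]

print(claimed_discount(99.99, was_price=149.99))  # 33.3
print(discount_vs_history(99.99, history))        # 4.8
```

The caveat in the paragraph above still applies: if a seller keeps its everyday price artificially high, the history itself is inflated, and this comparison overstates the deal too.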

Credit cards with price protection offer some recourse, refunding the difference if prices drop shortly after purchase. But many issuers have scaled back these benefits, likely in part because honoring them is costly in dynamic pricing environments where prices fluctuate constantly.

Community-driven platforms where users share prices they're seeing can help identify discrimination. Reddit threads and specialized forums discuss pricing anomalies, though this approach requires significant effort and only works for purchases where you have time to research.

The most powerful tool might be collective action. When enough consumers become aware of pricing discrimination and change their behavior, companies face reputational risks. Social media has amplified stories of particularly egregious pricing practices, forcing some companies to walk back aggressive algorithms after public backlash.

Policymakers worldwide are grappling with how to regulate algorithmic pricing without stifling beneficial innovation. The challenge is distinguishing between efficiency-improving dynamic pricing and exploitative surveillance-based discrimination. FTC studies on surveillance pricing have documented the extent of data collection but haven't yet resulted in comprehensive regulations.

Proposed regulations focus on transparency and consumer rights. One approach would require companies to disclose when they're using personalized pricing and what data informs those decisions. Critics argue this is insufficient: algorithmic systems are so complex that disclosure would be meaningless to most consumers. Others advocate for a right to see and challenge the price you're offered, similar to credit report disputes.

Some economists argue for baseline price requirements, where companies must offer a reference price available to all consumers, with personalized adjustments disclosed as premiums or discounts from that baseline. This would make discrimination visible rather than hidden. The challenge is enforcement: companies could game the system by setting high baselines and offering most customers "discounts."
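The baseline-price proposal, and its weakness, can be sketched as a simple data structure. The class name and fields are hypothetical; the point is that the same personalized price can be disclosed as a premium or as a discount depending entirely on where the company sets the baseline.

```python
from dataclasses import dataclass


@dataclass
class DisclosedQuote:
    """Hypothetical disclosure format: a public baseline plus a personalized price."""
    baseline: float      # reference price that must be offered to everyone
    personalized: float  # price this particular customer is shown

    def adjustment_pct(self) -> float:
        """Positive = premium over baseline, negative = discount from it."""
        return round(100 * (self.personalized - self.baseline) / self.baseline, 1)

    def label(self) -> str:
        pct = self.adjustment_pct()
        if pct > 0:
            return f"{pct}% premium over baseline"
        if pct < 0:
            return f"{-pct}% discount from baseline"
        return "baseline price"


honest = DisclosedQuote(baseline=200.0, personalized=220.0)
gamed = DisclosedQuote(baseline=260.0, personalized=220.0)  # inflated baseline
print(honest.label())  # 10.0% premium over baseline
print(gamed.label())   # 15.4% discount from baseline
```

The customer pays $220 either way; only the framing changes.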

European approaches emphasize data protection and algorithmic accountability. GDPR's restrictions on automated decision-making provide more consumer control than U.S. laws, but the complexity of modern pricing systems makes it difficult for individuals to exercise these rights effectively. Most consumers don't even know to ask about algorithmic pricing, much less how to contest it.

The most aggressive proposals would ban certain types of personalized pricing altogether, particularly in essential services or when based on protected characteristics. However, defining what constitutes an essential service in the digital economy is contentious, and proving that algorithms discriminate based on protected characteristics is technically challenging when systems use hundreds of correlated variables.
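A toy example shows how a pricing rule can produce group-level disparities without ever using a protected characteristic. Everything here is hypothetical: the rule prices only by zip code, the groups "A" and "B" stand in for a protected class that an auditor knows but the pricing rule never sees, and the customer list is invented.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rule: prices depend only on zip code, never on a protected attribute.
ZIP_MARKUP = {"11111": 1.10, "22222": 1.00}


def quote(base: float, zip_code: str) -> float:
    return round(base * ZIP_MARKUP.get(zip_code, 1.0), 2)


# Hypothetical customers. "group" stands in for a protected characteristic;
# the auditor knows it, but quote() above never receives it.
customers = [
    {"zip": "11111", "group": "A"}, {"zip": "11111", "group": "A"},
    {"zip": "22222", "group": "A"}, {"zip": "11111", "group": "B"},
    {"zip": "22222", "group": "B"}, {"zip": "22222", "group": "B"},
]


def mean_price_by_group(customers: list[dict[str, str]], base: float) -> dict[str, float]:
    """Outcome audit: average quoted price per group."""
    prices: dict[str, list[float]] = defaultdict(list)
    for c in customers:
        prices[c["group"]].append(quote(base, c["zip"]))
    return {group: round(mean(p), 2) for group, p in prices.items()}


print(mean_price_by_group(customers, base=100.0))  # {'A': 106.67, 'B': 103.33}
```

With a single proxy the source of the gap is easy to spot; with hundreds of correlated inputs it becomes much harder to attribute.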

While personal strategies help, systemic change requires collective and political action. Consumer advocacy groups are pushing for algorithmic transparency laws that would require companies to explain their pricing systems in plain language. The goal isn't to expose trade secrets but to make the basic logic and data sources visible to regulators and consumers.

Competition policy offers another avenue. If algorithmic pricing allows companies to segment markets perfectly and extract maximum surplus from each customer, it could reduce the competitive pressure that traditionally protects consumers. Antitrust authorities are exploring whether pricing algorithms facilitate implicit collusion, where competitors' systems learn to avoid price wars even without explicit communication.
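A toy simulation illustrates why even simple price-matching bots can sustain high prices without any communication. It assumes two sellers, a made-up cost and demand, and a rival bot that matches any lower price one period later; the cheaper seller takes the whole market and ties split it. Real pricing algorithms are learning systems, so this only shows the underlying incentive, not how any particular system behaves.

```python
def seller_a_profit(a_undercuts: bool, periods: int = 10, high: float = 100.0,
                    low: float = 89.0, cost: float = 50.0, demand: int = 100) -> float:
    """Toy duopoly. Seller B runs a bot that matches any lower price it sees,
    effective the next period. The cheaper seller serves all demand; equal
    prices split it. Returns seller A's total profit over `periods`."""
    a_price = low if a_undercuts else high
    b_price = high
    total = 0.0
    for _ in range(periods):
        if a_price < b_price:
            a_share = 1.0
        elif a_price == b_price:
            a_share = 0.5
        else:
            a_share = 0.0
        total += (a_price - cost) * demand * a_share
        b_price = min(b_price, a_price)  # B's bot matches a lower rival price
    return total


print(seller_a_profit(a_undercuts=False))  # 25000.0
print(seller_a_profit(a_undercuts=True))   # 21450.0
```

Over ten periods, holding at $100 earns seller A $25,000, while undercutting to $89 earns $21,450: the one-period gain is wiped out once the rival matches. With a single period, undercutting would pay, which is why fast, automatic matching matters.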

Class action lawsuits have emerged as a tool for challenging the most aggressive practices. Recent settlements with major e-commerce platforms have forced some changes in pricing disclosure and data use, though companies typically don't admit wrongdoing. The legal theory is still evolving: plaintiffs must prove harm in environments where they can't see what price they should have received.

Labor organizing also plays a role, particularly in the gig economy. Drivers for ride-sharing platforms have pushed for transparency about how fare algorithms work and how their compensation is calculated. While these efforts focus on worker pay rather than consumer prices, they challenge the black-box nature of algorithmic systems more broadly.

Education matters too. As more consumers understand how algorithmic pricing works, they can make more informed decisions and demand better practices. Financial literacy programs increasingly include content on digital pricing discrimination, helping people recognize and respond to manipulative tactics.

The future of pricing sits at the intersection of technology, economics, and ethics. Algorithmic systems aren't inherently good or bad; they're tools that reflect the incentives and constraints we place on them. The question is whether we'll allow these systems to operate in the shadows or demand that they serve broader social goals beyond profit maximization.

Some technologists envision "algorithmic transparency certifications," where independent auditors verify that pricing systems don't discriminate improperly. This would be similar to how financial audits work, providing assurance without exposing competitive details. The challenge is developing standards and finding auditors with sufficient technical expertise.

Blockchain and decentralized systems offer alternative models where pricing is more transparent and harder to manipulate. While these technologies have their own issues, they demonstrate that the current paradigm of opaque, centralized pricing algorithms isn't the only option. As consumers become more sophisticated, demand may grow for platforms that prove their pricing fairness through verifiable systems.

Artificial intelligence could ironically be part of the solution. Personal AI assistants that negotiate on your behalf, comparing prices across platforms and identifying discrimination, could restore some balance to the relationship between consumers and sellers. These systems could operate at the same speed and sophistication as corporate pricing algorithms, leveling the playing field.

The fundamental choice before us is what kind of market we want. Pure efficiency, where prices extract maximum surplus from each consumer? Perfect transparency, where everyone pays the same? Or something in between that balances innovation with fairness? These aren't just technical questions; they're decisions about the society we're building.

What's certain is that algorithmic pricing isn't going away. The economic incentives are too strong, and the technology is too powerful. But that doesn't mean we're powerless. Through awareness, action, and advocacy, consumers can shape how these systems evolve. The algorithms may be invisible, but their impact on your wallet is very real. Understanding them is the first step toward making sure they serve you, not just the companies deploying them.

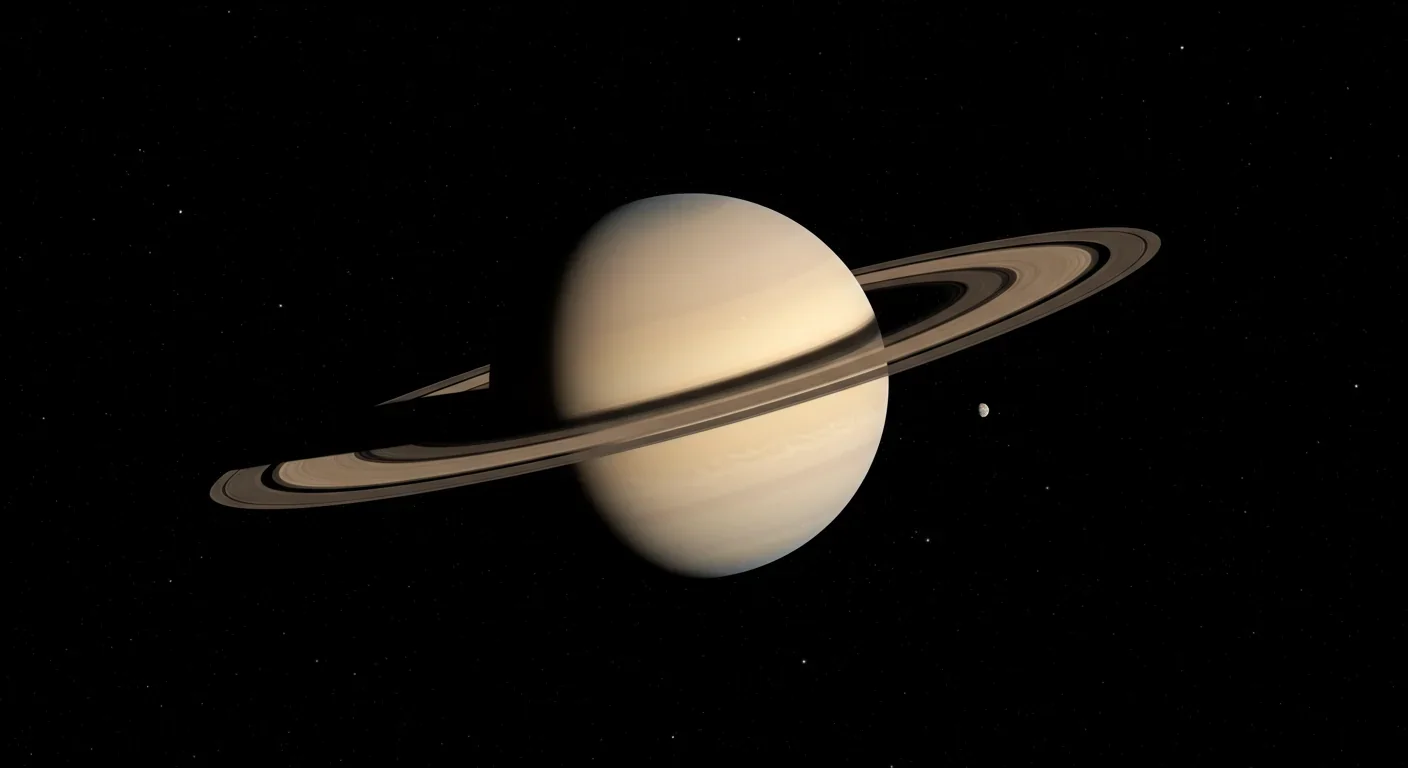

Saturn's iconic rings are temporary, likely formed within the past 100 million years and will vanish in 100-300 million years. NASA's Cassini mission revealed their hidden complexity, ongoing dynamics, and the mysteries that still puzzle scientists.

Scientists are revolutionizing gut health by identifying 'keystone' bacteria—crucial microbes that hold entire microbial ecosystems together. By engineering and reintroducing these missing bacterial linchpins, researchers can transform dysfunctional microbiomes into healthy ones, opening new treatments for diseases from IBS to depression.

Marine permaculture—cultivating kelp forests using wave-powered pumps and floating platforms—could sequester carbon 20 times faster than terrestrial forests while creating millions of jobs, feeding coastal communities, and restoring ocean ecosystems. Despite kelp's $500 billion in annual ecosystem services, fewer than 2% of global kelp forests have high-level protection, and over half have vanished in 50 years. Real-world projects in Japan, Chile, the U.S., and Europe demonstrate economic via...

Our attraction to impractical partners stems from evolutionary signals, attachment patterns formed in childhood, and modern status pressures. Understanding these forces helps us make conscious choices aligned with long-term happiness rather than hardwired instincts.

Crows and other corvids bring gifts to humans who feed them, revealing sophisticated social intelligence comparable to primates. This reciprocal exchange behavior demonstrates theory of mind, facial recognition, and long-term memory.

Cryptocurrency has become a revolutionary tool empowering dissidents in authoritarian states to bypass financial surveillance and asset freezes, while simultaneously enabling sanctioned regimes to evade international pressure through parallel financial systems.

Blockchain-based social networks like Bluesky, Mastodon, and Lens Protocol are growing rapidly, offering user data ownership and censorship resistance. While they won't immediately replace Facebook or Twitter, their 51% annual growth rate and new economic models could force Big Tech to fundamentally change how social media works.