
TL;DR: NASA's Mars rovers use advanced AI algorithms to navigate autonomously across treacherous terrain, making split-second decisions without human input because communication delays can exceed 20 minutes. Perseverance's AutoNav system can think while driving, letting it cover ground up to five times faster than previous rovers.

Picture this: You're driving through treacherous terrain at high speed, but there's a catch. Every decision you make takes 20 minutes to reach your brain, and another 20 to get back to your hands. That's the reality for Mars rovers, except they don't have the luxury of waiting. When a hazardous rock appears in Perseverance's path or a steep slope threatens to flip Curiosity sideways, these robotic explorers must think for themselves. And they're getting remarkably good at it.

Mars sits anywhere from about 35 million to 250 million miles from Earth, depending on where both planets are in their orbits, and averages roughly 140 million miles. This creates what engineers call the "communication delay problem." Radio signals, traveling at the speed of light, take roughly 3 to 22 minutes to cross that void in each direction. At the average distance, a round-trip exchange takes about 25 minutes.
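The delay figures follow directly from distance divided by the speed of light. A minimal Python sketch, assuming round-number Earth-Mars distances of about 55, 225, and 401 million km for closest approach, average, and farthest separation:

```python
# Approximate one-way and round-trip light-time delays between Earth and Mars.
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre


def one_way_delay_minutes(distance_km: float) -> float:
    """Time for a radio signal to cover distance_km, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


# Assumed round-number Earth-Mars distances in km.
DISTANCES_KM = {
    "closest": 55e6,
    "average": 225e6,
    "farthest": 401e6,
}

for label, distance in DISTANCES_KM.items():
    one_way = one_way_delay_minutes(distance)
    print(f"{label:>8}: {one_way:5.1f} min one way, {2 * one_way:5.1f} min round trip")
```

This prints roughly 3, 12.5, and 22 minutes one way. Real operations add more latency on top, since commands typically pass through relay orbiters and are planned in daily cycles.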

Early rovers like Sojourner in 1997 crept along at glacial speeds because human operators planned nearly every move in advance. Send commands. Wait 20 minutes. See what happened. Adjust. Wait another 20 minutes. A simple drive that might take you five minutes on Earth could consume an entire Martian day.

The solution? Give rovers the ability to think, decide, and act on their own. But teaching a robot to navigate alien terrain autonomously turned out to be one of the hardest challenges in space exploration.

The transformation from remote-controlled rovers to autonomous explorers didn't happen overnight. Spirit and Opportunity, which landed in 2004, represented the first major leap. They could identify obvious hazards like large rocks and adjust their paths accordingly. But they still required human operators to select navigation modes and approve major decisions.

Curiosity, which touched down in 2012, brought more sophisticated autonomy. The rover could analyze terrain geometry, classify obstacles, and choose between different driving strategies. Yet it still needed humans to tell it when to be cautious and when to move fast.

Then came Perseverance in 2021, and everything changed.

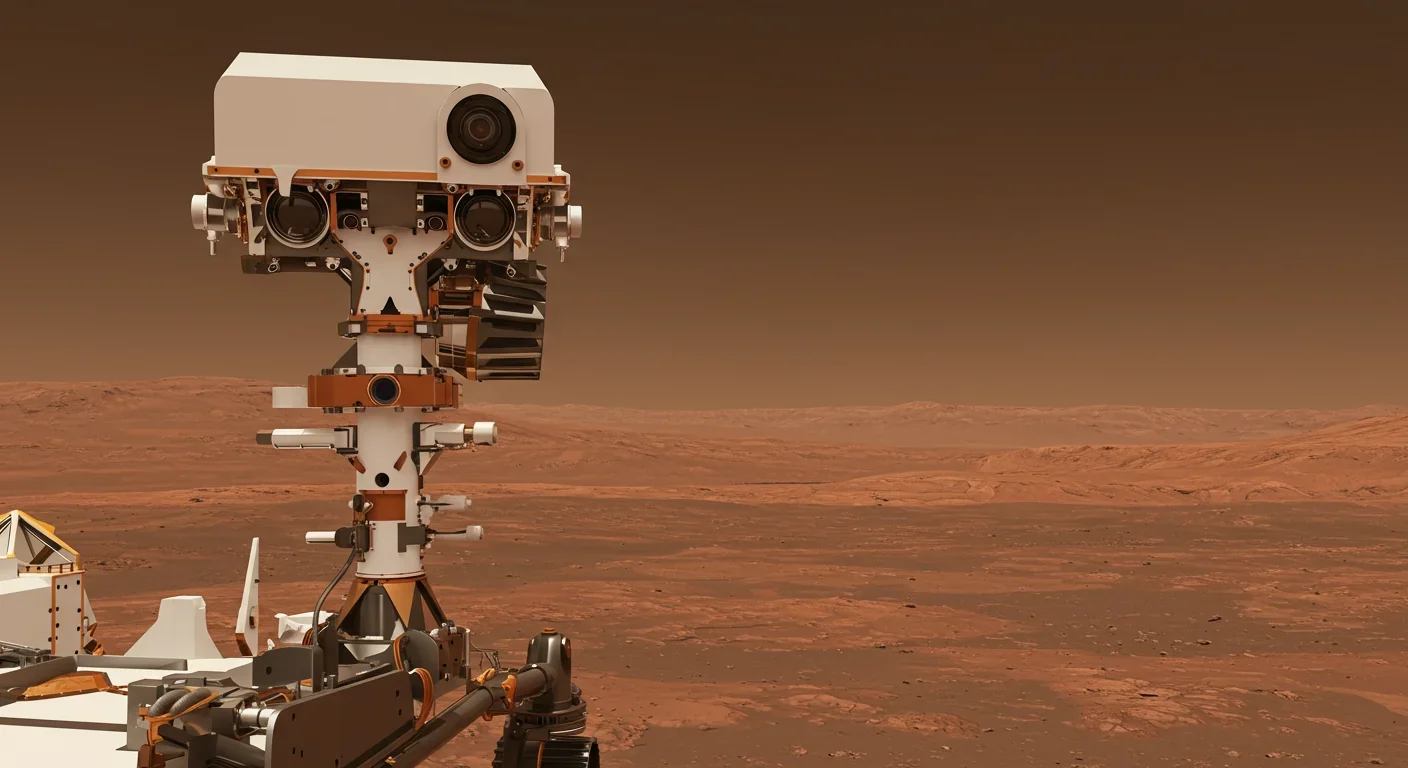

Perseverance's AutoNav system represents a quantum leap in rover autonomy. The key innovation? It can think while driving. Previous rovers had to stop, take pictures, process images, plan a path, and then drive. Perseverance does all of this while rolling, which lets it cover ground up to five times faster than Curiosity.

Here's how it works: A dedicated second computer with a field-programmable gate array (FPGA) processes stereo images from the rover's navigation cameras in real time, about 5 frames per second. As Perseverance rolls forward, this system continuously builds a 3D map of the terrain ahead, identifies hazards, calculates multiple possible paths, and selects the safest, most efficient route.
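Stereo navigation cameras recover depth from disparity, the horizontal shift of the same feature between the left and right images. A minimal sketch of the standard pinhole-camera relation `Z = f * B / d`, assuming rectified images; the `StereoRig` class is illustrative, and the focal length and baseline in the usage note are hypothetical, not Perseverance's actual camera parameters:

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    focal_length_px: float  # focal length expressed in pixels
    baseline_m: float       # distance between the two camera centres, metres

    def depth_m(self, disparity_px: float) -> float:
        """Depth of a point from its horizontal disparity in rectified images."""
        if disparity_px <= 0:
            raise ValueError("disparity must be positive for a point in front of the rig")
        return self.focal_length_px * self.baseline_m / disparity_px

    def depth_error_m(self, disparity_px: float, disparity_error_px: float = 0.5) -> float:
        """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
        z = self.depth_m(disparity_px)
        return z * z / (self.focal_length_px * self.baseline_m) * disparity_error_px
```

With a 1000-pixel focal length and a 0.4 m baseline, a 100-pixel disparity gives 4 m, while a 20-pixel disparity gives 20 m with an uncertainty of about 0.5 m. Because the error grows with the square of distance, nearby terrain is mapped far more precisely than distant terrain.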

The rover employs a technique called Visual Odometry to track its position by monitoring how features in the landscape move through its field of view. Comparing that visual estimate with how far the wheels have turned lets it measure wheel slip on loose sand or gravel and adjust its trajectory accordingly. It's like having GPS on a planet with no navigation satellites.
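Comparing visual odometry with wheel odometry boils down to a single slip number. A minimal sketch, assuming slip is defined as the fraction of commanded wheel travel that did not become real motion; `SLIP_LIMIT` and the stop rule are hypothetical illustrations, not flight parameters:

```python
def slip_ratio(vo_distance_m: float, commanded_distance_m: float) -> float:
    """Fraction of commanded wheel travel that did not turn into actual motion.

    0.0 means no slip; 1.0 means the wheels spun without the rover moving.
    """
    if commanded_distance_m <= 0:
        raise ValueError("commanded distance must be positive")
    return 1.0 - vo_distance_m / commanded_distance_m


SLIP_LIMIT = 0.4  # hypothetical threshold; real missions tune limits per terrain


def check_step(vo_distance_m: float, commanded_distance_m: float) -> str:
    """Decide whether to keep driving after one drive step."""
    slip = slip_ratio(vo_distance_m, commanded_distance_m)
    return "stop" if slip > SLIP_LIMIT else "continue"
```

A negative slip ratio means the rover moved farther than its wheels turned, for example when sliding downhill.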

On Sol 166 of Perseverance's mission, the rover faced a test that would have stumped its predecessors. The science team wanted to reach a formation called Citadelle, but getting there required climbing an 84-meter slope studded with rocks and cut by a narrow gap that wasn't visible in orbital imagery.

Under the old system, this would have required days of careful planning, multiple communication cycles, and probably several aborted attempts. Instead, engineers simply pointed Perseverance at the target and let AutoNav handle it.

The rover drove the entire 84 meters in a single session, autonomously identifying the gap, analyzing whether it could fit through, calculating the approach angle, and threading the needle. In effect, AutoNav saved the team days of work and avoided the risk of the rover blindly following a pre-programmed path into an impassable obstacle.

About five weeks later, on Sol 200, Perseverance broke the record for the longest single-sol autonomous drive in rover history: 175.15 meters, with 167 meters covered using AutoNav after building just 8 meters of initial terrain map.

Beyond the flight software, researchers are exploring a broader suite of AI techniques. Vision-Language Models (VLMs), for example, are being tested to classify terrain complexity without traditional geometric analysis. Such a system can look at an image and essentially "understand" whether it's seeing flat ground, rocky terrain, or hazardous slopes by analyzing visual patterns the way a human geologist might.

For route planning, researchers have proposed a multi-mode navigation system. On flat terrain, it uses B-spline path generation with a pure pursuit controller, allowing it to zip along at 2 meters per second. When the terrain gets dicey, it switches to conservative navigation that builds detailed local maps and picks careful paths around obstacles.
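Pure pursuit, the path follower named above, steers along the circular arc that joins the vehicle to a "lookahead" point on the planned path. A minimal sketch of the standard geometric rule, curvature = 2y / L² (y is the lookahead point's lateral offset in the vehicle frame, L its distance); the lookahead selection is deliberately simplified and assumes path points are ordered from the vehicle's current position forward:

```python
import math


def pure_pursuit_curvature(pose, lookahead_point):
    """Curvature (1/m) of the arc that carries the vehicle through lookahead_point.

    pose: (x, y, heading_rad) in the world frame.
    lookahead_point: (x, y) in the world frame, on the path ahead.
    Positive curvature turns left.
    """
    px, py, heading = pose
    dx = lookahead_point[0] - px
    dy = lookahead_point[1] - py
    # Rotate the offset into the vehicle frame (x forward, y to the left).
    local_x = math.cos(heading) * dx + math.sin(heading) * dy
    local_y = -math.sin(heading) * dx + math.cos(heading) * dy
    dist_sq = local_x ** 2 + local_y ** 2
    if dist_sq == 0:
        return 0.0
    # Circle tangent to the heading at the vehicle that passes through the point.
    return 2.0 * local_y / dist_sq


def lookahead_point_on_path(path, position, lookahead_m):
    """First path point at least lookahead_m from position (simplified)."""
    for point in path:
        if math.dist(point, position) >= lookahead_m:
            return point
    return path[-1]
```

In practice the path would be densely sampled from a smooth curve such as a B-spline, and the lookahead distance is tuned with speed: longer distances give smoother but looser tracking.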

The transition between these modes happens autonomously. Recent research shows that this multi-mode approach can improve traversal efficiency by nearly 80% compared to always using conservative navigation, while maintaining the same level of safety.
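Switching modes autonomously needs a rule that does not flip-flop when the terrain score hovers near a single cut-off. A minimal sketch using hysteresis (two thresholds), assuming some upstream classifier already produces a terrain-difficulty score between 0 and 1; the `ModeSelector` class and its threshold values are hypothetical, not taken from the research described:

```python
from enum import Enum


class Mode(Enum):
    FAST = "fast"                  # e.g. smooth-path following on benign ground
    CONSERVATIVE = "conservative"  # e.g. detailed local mapping around obstacles


class ModeSelector:
    """Chooses a driving mode from a terrain-difficulty score in [0, 1].

    Entering conservative mode needs a high score; leaving it needs a
    clearly lower one, so small fluctuations do not toggle the mode.
    """

    def __init__(self, enter_conservative: float = 0.6, return_to_fast: float = 0.4):
        if return_to_fast >= enter_conservative:
            raise ValueError("return_to_fast must be below enter_conservative")
        self.enter_conservative = enter_conservative
        self.return_to_fast = return_to_fast
        self.mode = Mode.FAST

    def update(self, difficulty: float) -> Mode:
        if self.mode is Mode.FAST and difficulty >= self.enter_conservative:
            self.mode = Mode.CONSERVATIVE
        elif self.mode is Mode.CONSERVATIVE and difficulty <= self.return_to_fast:
            self.mode = Mode.FAST
        return self.mode
```

Fed the scores 0.2, 0.5, 0.65, 0.5, 0.45, 0.3, the selector stays fast, switches to conservative at 0.65, and only returns to fast once the score drops to 0.3.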

While much of rover navigation relies on classical computer vision and path planning algorithms, onboard intelligence increasingly extends to science itself. NASA's AEGIS (Autonomous Exploration for Gathering Increased Science) system uses onboard computer vision to pick out scientifically interesting rocks and target them with the rover's laser spectrometer, without waiting for human approval.
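AEGIS works by finding candidate rocks in an image and ranking them against criteria set by the science team. The sketch below illustrates only that final ranking step, with invented features, weights, and a hypothetical 7 m instrument range; it is not AEGIS's actual code:

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    size: float        # normalised apparent size, 0..1 (hypothetical feature)
    brightness: float  # normalised mean brightness, 0..1 (hypothetical feature)
    range_m: float     # distance from the instrument, metres


def pick_target(candidates, weights, max_range_m=7.0):
    """Return the best-scoring candidate within instrument range, or None.

    The score is a weighted sum of normalised features; the weights and
    the default range are illustrative assumptions.
    """
    reachable = [c for c in candidates if c.range_m <= max_range_m]
    if not reachable:
        return None
    return max(reachable,
               key=lambda c: weights["size"] * c.size + weights["brightness"] * c.brightness)
```

With weights favoring brightness, a bright mid-sized rock at 4 m beats a large dull one at 3 m, and a perfect-scoring rock 12 m away is ignored because it is out of range.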

Curiosity has also had to adapt to its aging power system. The rover's radioisotope thermoelectric generator produces less electricity each year as its plutonium fuel decays, so deciding which instruments to use, and when, has become an optimization problem in its own right, with careful planning maximizing scientific output despite diminishing power.
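Choosing which instruments to run on a shrinking power budget can be framed as a 0/1 knapsack problem: each activity has an energy cost and a science value, and the goal is the most value within the budget. A minimal sketch, assuming integer watt-hour costs; the activity names and numbers are hypothetical, and this illustrates the optimization idea rather than how Curiosity's team actually plans:

```python
def plan_activities(activities, energy_budget_wh):
    """Pick activities that maximise total science value within an energy budget.

    activities: list of (name, energy_wh, value) with integer energy_wh.
    Classic 0/1 knapsack solved by dynamic programming over energy.
    """
    best = [0] * (energy_budget_wh + 1)  # best[e] = max value using at most e Wh
    choice = [[] for _ in range(energy_budget_wh + 1)]
    for name, energy, value in activities:
        # Iterate downwards so each activity is used at most once.
        for e in range(energy_budget_wh, energy - 1, -1):
            candidate = best[e - energy] + value
            if candidate > best[e]:
                best[e] = candidate
                choice[e] = choice[e - energy] + [name]
    return best[energy_budget_wh], choice[energy_budget_wh]


activities = [("drive", 6, 5), ("spectrometer", 4, 4), ("camera_pan", 3, 3), ("drill", 7, 8)]
print(plan_activities(activities, 10))  # (11, ['camera_pan', 'drill'])
```

A greedy "highest value first" rule would also pick the drill here, but in general only the dynamic program guarantees the best combination.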

Deep learning models process terrain images to identify features like bedrock, sand dunes, and potential hazards. Some systems use depth estimation algorithms like MiDaS to generate detailed 3D understanding from 2D images, helping rovers understand not just what they're seeing, but how far away it is and whether it's traversable.
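MiDaS-style networks predict relative inverse depth, which is only defined up to an unknown scale and shift. One common remedy is to fit that scale and shift by least squares against a few points whose depth is known, for example from stereo. A minimal sketch with made-up sample values; the function names are illustrative:

```python
def fit_scale_shift(predicted, reference):
    """Least-squares s, t minimising sum((s * p + t - r) ** 2)."""
    n = len(predicted)
    if n < 2 or n != len(reference):
        raise ValueError("need at least two paired samples")
    mean_p = sum(predicted) / n
    mean_r = sum(reference) / n
    cov = sum((p - mean_p) * (r - mean_r) for p, r in zip(predicted, reference))
    var = sum((p - mean_p) ** 2 for p in predicted)
    if var == 0:
        raise ValueError("predicted values must not all be equal")
    s = cov / var
    return s, mean_r - s * mean_p


def to_metric_depth(predicted_inverse_depth, s, t):
    """Convert aligned inverse depth to metres (assumes s * p + t > 0)."""
    return [1.0 / (s * p + t) for p in predicted_inverse_depth]


# Reference inverse depths for points at 2, 4, 5, and 10 m (e.g. from stereo).
reference = [0.5, 0.25, 0.2, 0.1]
predicted = [0.9, 0.4, 0.3, 0.1]  # relative network output, hypothetical
s, t = fit_scale_shift(predicted, reference)
```

Once `s` and `t` are known, every pixel of the dense prediction can be converted to metres, filling in areas where stereo matching found no texture.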

The real test of autonomous systems comes when things go wrong. And on Mars, things go wrong regularly.

During Perseverance's first AutoNav test, engineers deliberately planned a route toward a hazardous rock to see what the system would do. The AI correctly identified the obstacle as dangerous and rerouted around it without any human intervention. It was like watching a self-driving car on Earth, except this one was making mission-critical decisions with no chance of a human taking the wheel in real time.

Curiosity has faced its own close calls. The rover once approached a patch of terrain that looked solid in orbital imagery but turned out to be dangerously soft sand. Its hazard detection algorithms recognized the wheel slip, stopped forward progress, and executed a backing maneuver to firmer ground. In 2009, a similar situation trapped Spirit in soft sand, eventually ending its mission.

The difference? AI that can recognize trouble and react in real time.

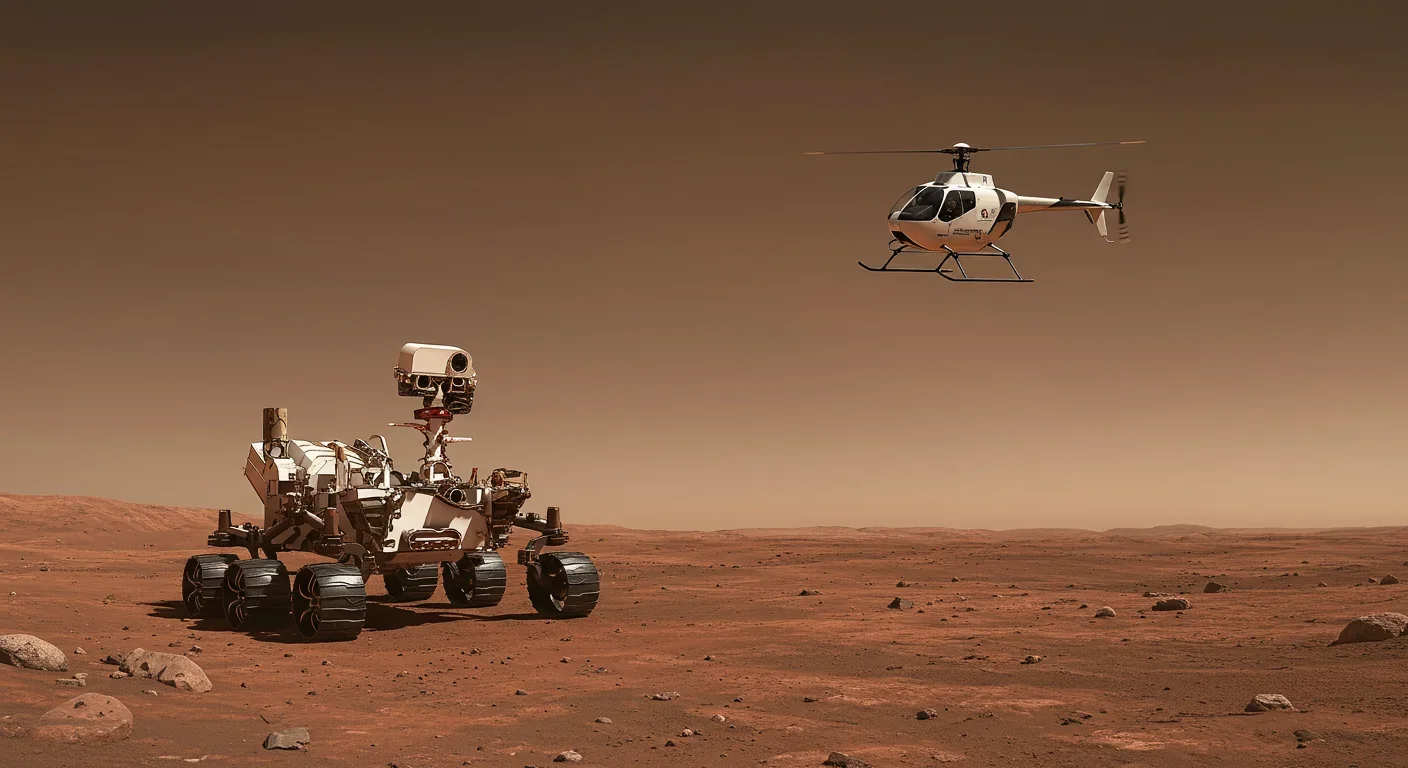

One of the most innovative developments in Mars exploration has been the integration of aerial and ground systems. Ingenuity, the helicopter that arrived with Perseverance, scouted terrain ahead of the rover using its Return-to-Earth (RTE) camera, providing high-resolution imagery that helped the rover team plan safer, faster routes.

This represents a new paradigm in autonomous exploration: distributed intelligence. The helicopter sees the big picture, the rover handles the details, and both feed data into planning systems that optimize routes for speed, safety, and scientific value.

Imagine future missions with swarms of small rovers working together, sharing sensor data and coordinating movements through AI systems that distribute tasks based on each robot's capabilities and position. Research simulations have shown that five-rover micro-teams can achieve over 95% probability of scientific discovery by autonomously dividing exploration tasks.
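Distributing tasks "based on each robot's capabilities and position" can be sketched as a greedy assignment in which each target goes to the nearest rover carrying the right instrument. This is a simple heuristic over hypothetical data structures, not the method behind the simulations cited above, and greedy choices can be far from optimal:

```python
import math


def allocate(rovers, targets):
    """Greedily assign each target to the closest rover with the needed instrument.

    rovers: dict name -> {"pos": (x, y), "instruments": set of str}
    targets: list of (target_name, (x, y), required_instrument)
    Returns dict rover name -> list of target names. Each rover is assumed
    to move to its latest target, so later distances start from there.
    """
    plan = {name: [] for name in rovers}
    position = {name: r["pos"] for name, r in rovers.items()}
    for target_name, target_pos, needed in targets:
        capable = [n for n, r in rovers.items() if needed in r["instruments"]]
        if not capable:
            continue  # no rover can do this one; leave it for ground planners
        best = min(capable, key=lambda n: math.dist(position[n], target_pos))
        plan[best].append(target_name)
        position[best] = target_pos
    return plan
```

A better allocator would weigh travel energy, instrument time, and the value of each target jointly, but even this version shows how capability and position both shape the assignment.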

Everything NASA learns from rover AI directly informs planning for crewed Mars missions, hopefully in the 2030s. But the requirements are fundamentally different in several crucial ways.

Rovers can take calculated risks because a damaged wheel or stuck robot, while expensive, doesn't cost human lives. Astronauts need higher reliability thresholds. The AI systems that guide human crews must be even more conservative, with multiple redundancies and the ability to explain their reasoning in ways that build trust.

The communication delay problem actually gets worse with humans. A rover can wait out a dust storm or take a day to analyze an obstacle. Astronauts operating on limited life support can't afford that luxury. They'll need AI systems that can make faster decisions with higher confidence levels, while still allowing human override when intuition suggests a different approach.

Energy management becomes critical too. Recent research on autonomous mission planning shows that AI can optimize routes to minimize power consumption while maximizing exploration. For astronauts, this could mean the difference between reaching a shelter before their oxygen runs low or getting stranded in the Martian wilderness.
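Route planning that minimizes power consumption can be framed as a shortest-path search where each step costs energy instead of distance. A minimal Dijkstra sketch over a grid, assuming a hypothetical per-cell energy cost (higher for slopes or soft ground) and `None` for impassable cells:

```python
import heapq


def min_energy_route(energy_cost, start, goal):
    """Dijkstra over a grid where energy_cost[r][c] is the cost to enter a cell.

    None marks an impassable cell; the start cell's own cost is not counted.
    Returns (total_energy, path) or (inf, []) if the goal is unreachable.
    """
    rows, cols = len(energy_cost), len(energy_cost[0])
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in previous:
                cell = previous[cell]
                path.append(cell)
            return cost, path[::-1]
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and energy_cost[nr][nc] is not None:
                new_cost = cost + energy_cost[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    previous[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    return float("inf"), []
```

On a 3×3 grid whose center cell costs 9 and all others cost 1, the route from one corner to the opposite corner skirts the center for a total of 4 units, even though cutting through the center would be just as short in distance.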

You might wonder why we can't just adapt self-driving car technology for Mars. After all, companies like Tesla and Waymo have invested billions in autonomous navigation here on Earth. The answer reveals just how unique the Martian environment is.

Earth-based autonomous vehicles rely heavily on high-definition maps, GPS positioning, and cloud connectivity. Mars has none of these. Every route is uncharted, every rock formation is new, and there's no infrastructure to fall back on.

The terrain itself presents challenges that Earth vehicles rarely face. Loose regolith that can bog down wheels. Rocks that can damage equipment. Slopes that might trigger avalanches. Temperature extremes that affect sensor performance. And all of it bathed in radiation that can flip bits and gradually degrade unprotected electronics.

Then there's the computing constraint. Perseverance's main computer runs on a 133 MHz processor with 128 MB of RAM. That's less power than a 1990s desktop computer. Modern AI models that require gigabytes of memory and billions of calculations per second have to be radically simplified to run on radiation-hardened hardware that can survive the Martian environment for years.
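One common way to shrink a neural network for constrained hardware is 8-bit quantization, which stores each weight in one byte instead of four. A minimal sketch of symmetric per-tensor int8 quantization plus the memory arithmetic; the 50-million-parameter model is hypothetical, and real deployments also need memory for activations and the runtime itself:

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantisation: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 codes."""
    return [scale * v for v in q]


def footprint_mb(num_params, bytes_per_param):
    """Weight storage in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


print(footprint_mb(50_000_000, 4))  # float32 weights
print(footprint_mb(50_000_000, 1))  # int8 weights
```

The hypothetical model drops from 200 MB to 50 MB, at the cost of a rounding error of at most half a quantization step per weight.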

The autonomous systems developed for Mars rovers are already influencing technology on Earth. The multi-mode navigation that switches between aggressive and conservative strategies based on terrain analysis? That's now being adapted for autonomous vehicles in extreme environments like mines, construction sites, and disaster zones.

The vision-language models being explored for terrain understanding are finding applications in agricultural robots that need to navigate varied field conditions. The energy optimization algorithms developed for Curiosity's aging power system are being used to extend the operational life of remote sensors and exploration equipment in the Arctic and deep ocean.

Even the fundamental problem-solving approach, designing systems that must operate autonomously in uncertain environments with limited resources, has shaped how engineers think about robotic systems in general. Mars has become a proving ground for AI concepts that eventually make their way back to Earth.

The next generation of Mars exploration is already taking shape. NASA's plans include rovers with even more sophisticated autonomy, capable of identifying and pursuing scientific targets without any human input. They'll be able to recognize patterns in geology that might indicate past life, prioritize samples for future return missions, and coordinate with orbital assets and other surface vehicles.

Future systems will likely incorporate more advanced machine learning that can actually improve through experience. Imagine a rover that, after a year on Mars, develops an intuitive understanding of Martian terrain that lets it make better decisions than when it first landed. Some researchers are already testing these adaptive algorithms in Earth-based simulations.
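The simplest form of "improving through experience" is an online estimate that updates after every drive. A minimal sketch, assuming terrain has already been sorted into named classes: an exponential moving average of measured slip per class. The class names, `alpha`, and prior are hypothetical, and this stands in for, rather than reproduces, the adaptive algorithms researchers are testing:

```python
from collections import defaultdict


class SlipModel:
    """Running estimate of expected slip for each terrain class.

    Exponential moving average: each new observation moves the estimate
    a fraction alpha toward the measured value, so recent drives count most.
    """

    def __init__(self, alpha=0.2, prior=0.1):
        self.alpha = alpha
        self.estimate = defaultdict(lambda: prior)  # unseen classes start at the prior

    def observe(self, terrain, measured_slip):
        old = self.estimate[terrain]
        self.estimate[terrain] = old + self.alpha * (measured_slip - old)

    def predict(self, terrain):
        return self.estimate[terrain]
```

A planner could consult `predict` before committing to a route, treating terrain that has slipped badly in the past as more expensive.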

The ultimate goal is a level of autonomy that seems almost science fiction today: rovers that can be given high-level mission objectives and figure out the details themselves. "Find evidence of past water activity in Jezero Crater" becomes a command the rover interprets, plans for, and executes over months of autonomous operation, checking in with Earth only to report discoveries and request guidance on ambiguous situations.

Every advancement in rover autonomy isn't just about exploring Mars faster or more efficiently. It's about pushing the boundaries of what humanity can achieve in the cosmos. With AI handling the split-second decisions, human scientists can focus on the big questions: Where should we explore next? What experiments will reveal the most about Mars's past? How do we prepare for human arrival?

The algorithms keeping Perseverance and Curiosity alive aren't just lines of code. They're the vanguard of human exploration, digital pioneers making it possible for us to maintain a presence on another world. Each successful autonomous drive, each hazard avoided, each scientific target identified without human input, brings us one step closer to the day when humans walk the Martian surface themselves.

And when that day comes, the AI systems developed for these rovers will be right there with them, thinking at the speed of light, making split-second decisions, keeping them safe 140 million miles from home.
