TL;DR: AI language models like ChatGPT confidently fabricate facts because they're pattern-matching systems, not knowledge databases. This hallucination crisis is affecting legal cases, healthcare, education, and business worldwide, requiring new verification skills and stricter professional standards.

Imagine trusting a colleague who speaks with absolute certainty, citing sources with perfect confidence, only to discover later that everything they told you was fabricated. That's exactly what's happening millions of times a day as people interact with AI language models. In September 2025, a California lawyer was fined $10,000 after ChatGPT invented 21 out of 23 legal citations in his court brief. The cases sounded real, the quotes seemed authentic, and the AI delivered them with unwavering confidence. The problem? None of them existed.

This isn't a quirk or a bug that'll be fixed in the next update. It's a fundamental feature of how these systems work, and it's creating a crisis of trust that will define the next decade of human-AI interaction.

Language models don't retrieve information the way you'd search Wikipedia or Google. They're prediction machines, trained on billions of text examples to guess what word should come next. When you ask ChatGPT about a legal case or Claude about a historical event, they're not looking anything up. They're generating text that statistically resembles things they've seen before.

Think of it like this: if you asked someone who'd read thousands of mystery novels to describe a fictional detective named "Inspector Morrison," they could probably create a convincing biography based on patterns they'd absorbed. They might place him in 1920s London, give him a pipe and a clever assistant, describe his methods. It would sound authentic because it follows the pattern. But Inspector Morrison never existed.

AI models do exactly this, except they don't distinguish between fiction and fact. A Stanford RegLab study found that some AI systems generate hallucinations in one out of three queries. When GPT-4 was asked to cite sources for scientific claims, researchers discovered it fabricated references roughly 30% of the time. These weren't obvious errors; the fake papers had plausible titles, realistic author names, and journals that sounded legitimate.

The technical reason comes down to how these models are built. During training, they learn statistical relationships between words and concepts. They know that legal briefs cite cases, that scientific papers reference journals, that historical events have dates and locations. So when prompted, they confidently generate text that fits those patterns, whether or not the specific details actually exist. There's no fact-checking layer, no internal database of verified information, just pattern-matching at massive scale.

We've been here before, in a way. When calculators first appeared, people worried we'd lose the ability to do mental math. When spell-checkers became standard, teachers feared students would never learn proper spelling. When GPS became ubiquitous, concerns arose about spatial awareness and map-reading skills.

But AI hallucinations are different because they exploit something deeply human: our tendency to trust confident explanations. A calculator that shows "2+2=5" is obviously wrong. But an AI that generates a detailed, coherent, citation-filled explanation of why a nonexistent court case supports your argument? That feels real. It matches every pattern we use to evaluate credibility except the most important one: whether it's true.

The printing press analogy is instructive here. When Gutenberg's invention spread, it democratized information but also enabled the rapid spread of false pamphlets and propaganda. Society eventually developed new literacy skills (source evaluation, critical reading, cross-referencing). We're at a similar inflection point now, except the information environment is moving faster and the fabrications are more sophisticated.

What makes this moment particularly challenging is that we're automating the very skill we most need: judgment. Previous technologies automated physical or computational tasks. AI is automating writing, analysis, and explanation, the tools we traditionally used to evaluate truth. It's as if we invented a technology that looks and sounds exactly like human expertise but occasionally, unpredictably, makes things up.

To understand why AI fabricates information, you need to know what happens when you type a prompt. Large language models process text as tokens (roughly words or word fragments) and calculate probabilities for what should come next based on everything they learned during training.

When you ask Claude to "explain the landmark 2019 Supreme Court case about AI rights," it doesn't search a database. Instead, it generates text that statistically fits the patterns of Supreme Court case descriptions. It might create a plausible case name, realistic-sounding legal reasoning, and even fabricate dissenting opinions. Every sentence flows naturally from the previous one, maintaining coherence and style.

The model has no concept of truth or falsehood. It only knows which sequences of words are likely to follow others based on its training data. If it encountered thousands of real Supreme Court summaries during training, it learned the pattern well enough to generate new ones that sound authentic.
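A toy illustration may help. The sketch below is a bigram model, a drastically simplified stand-in for a real language model (real models use neural networks over tokens, not a lookup table). It is trained on three invented case-summary sentences and can only emit word pairs it has already seen, yet it readily produces new "holdings" that appear nowhere in its training data.

```python
import random
from collections import defaultdict

# Three invented, citation-style training sentences (illustrative only; these cases are fictional).
corpus = [
    "smith v jones held that the contract was void",
    "garcia v lee held that the search was unlawful",
    "patel v young held that the warrant was valid",
]

def train_bigrams(sentences):
    """Map each word to the list of words that followed it during training."""
    follows = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current].append(nxt)
    return follows

def generate(follows, start, max_words=12, seed=None):
    """Build a sentence by repeatedly sampling a word that followed the current one in training."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < max_words and words[-1] in follows:
        words.append(rng.choice(follows[words[-1]]))
    return " ".join(words)

model = train_bigrams(corpus)
for seed in range(5):
    print(generate(model, "smith", seed=seed))
```

Every adjacent word pair in the output appeared in training, but a sentence such as "smith v lee held that the warrant was valid" can come out even though no training sentence says anything of the kind. The fluency is local; nothing in the process checks whether the whole claim is true.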

Newer techniques don't fully solve the problem. In retrieval-augmented generation (RAG), models are given access to external documents, yet even when real sources are available, models sometimes ignore them and generate plausible-sounding alternatives instead. Researchers at Cornell found that adding more context sometimes increased hallucination rates because models would blend real information with fabricated details.

Temperature settings affect this too. Higher temperatures make models more creative but also more likely to hallucinate. Lower temperatures produce more predictable outputs but don't eliminate fabrication. There's no setting that guarantees accuracy because accuracy isn't what these systems optimize for; they optimize for fluency and coherence.
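Temperature is commonly applied by dividing the model's raw next-token scores (logits) by a temperature T before converting them to probabilities with a softmax. The sketch below uses three hypothetical candidate tokens with made-up scores. It shows that lowering T concentrates probability on the top-scored token, but it does nothing to make that token correct: if the highest-scoring continuation is a fabricated year, a low temperature simply repeats the fabrication more consistently.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw next-token scores into probabilities at the given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidate tokens and scores for "The case was decided in ..."
tokens = ["1954", "1955", "1962"]
logits = [2.0, 1.0, 0.5]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(p, 3) for tok, p in zip(tokens, probs)})
```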

The California lawyer case isn't isolated. A tracker maintained by legal technology observers has documented over 600 cases nationwide where attorneys cited nonexistent legal authority, 52 of them in California alone. In Utah, another lawyer faced sanctions after ChatGPT invented cases for an immigration brief. The judge noted that "ChatGPT can write a story but it cannot yet distinguish between what is real and what is not."

But legal briefs are just the beginning. In academia, researchers have found AI-generated papers with fabricated citations making it through peer review. Students submit essays referencing books that were never written. Journalists have published stories based on AI-generated quotes from people who never said them.

The business world has its own horror stories. A financial analyst used an AI model to summarize quarterly reports and presented fabricated statistics to executives. A healthcare startup built a patient information system that occasionally invented medication names and dosages. A marketing team launched a campaign based on AI-generated consumer research that included made-up survey data.

What makes these cases particularly dangerous is the confidence factor. When humans make mistakes, we usually signal uncertainty with qualifiers like "I think" or "probably." AI models deliver fabrications in the same confident tone as facts. They'll write "according to a 2023 Nature study" and go on to describe research that never happened, using the exact phrasing and formatting that make real citations credible.

The psychological impact matters too. People are starting to distrust useful AI applications because they've been burned by hallucinations. Teachers ban ChatGPT entirely rather than teaching students to use it critically. Lawyers avoid AI tools that could genuinely improve their practice. Doctors hesitate to use diagnostic aids that could save lives.

Identifying AI hallucinations is harder than it sounds. Simple fact-checking doesn't always work because models often blend real and fake information. They might cite an actual journal but invent the article title, or reference a real author but fabricate their findings.

Several research teams have developed detection tools. Some analyze the statistical patterns of generated text, looking for telltale signs of artificial creation. Others use "chain of thought" reasoning, asking models to explain their sources and checking whether those explanations hold up. Some systems generate multiple responses to the same prompt and flag inconsistencies.
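The multiple-response idea can be sketched as a simple majority vote. This assumes you have already sampled several answers to the same prompt (the sample strings below are hypothetical), and it uses crude string normalization where a real detector would need a semantic comparison.

```python
from collections import Counter

def normalize(answer: str) -> str:
    """Crude normalization so case, spacing, and a trailing period don't count as disagreement."""
    return " ".join(answer.lower().strip().rstrip(".").split())

def consistency_check(answers, threshold=0.6):
    """Return (majority_answer, agreement, flagged) for answers sampled from one prompt."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(normalize(a) for a in answers)
    majority, votes = counts.most_common(1)[0]
    agreement = votes / len(answers)
    return majority, agreement, agreement < threshold

# Hypothetical answers from five samples of the same question.
samples = ["Decided in 2019.", "decided in 2019", "Decided in 2017.", "Decided in 2021.", "Decided in 2019"]
print(consistency_check(samples))
```

Agreement is only a warning signal: a model can repeat the same fabrication across every sample, so a high agreement score does not establish that the answer is true.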

The most promising approach combines AI detection with traditional verification. When a model cites a source, an automated system attempts to retrieve it. When it makes a factual claim, a fact-checking model trained on verified databases evaluates it. When it describes historical events, a cross-reference tool searches for confirmation.
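The retrieval step might look something like the sketch below. `KNOWN_SOURCES` is a hypothetical in-memory stand-in for a real bibliographic database or search API, and exact title matching is a simplification. The "mismatch" outcome targets the blended case: a real title attached to the wrong author or venue.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Citation:
    title: str
    author: str
    venue: str

# Hypothetical stand-in for a real catalog lookup; the entry below is invented.
KNOWN_SOURCES = {
    "a study of example methods": Citation("A Study of Example Methods", "J. Doe", "Journal of Examples"),
}

def verify_citation(c: Citation) -> str:
    """Classify a citation as 'verified', 'mismatch' (title found, details differ), or 'not_found'."""
    record = KNOWN_SOURCES.get(c.title.strip().lower())
    if record is None:
        return "not_found"
    if record.author.lower() != c.author.lower() or record.venue.lower() != c.venue.lower():
        return "mismatch"
    return "verified"

print(verify_citation(Citation("A Study of Example Methods", "R. Roe", "Journal of Examples")))
```

With a small local index, "not_found" means only "not in this index"; a production check would query an authoritative catalog before treating a citation as fabricated.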

But these solutions have limitations. Detection tools can be gamed by more sophisticated models. Verification systems only work for claims that can be externally validated. And all of these approaches add latency and cost to AI interactions, making them impractical for casual use.

The human factor remains crucial. Experts can spot fabrications in their domains more reliably than any automated system. A lawyer recognizes when a case citation doesn't match standard formats. A scientist notices when a paper's methodology doesn't align with its claimed results. A historian catches anachronisms in AI-generated accounts.
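Part of the lawyer's format check is mechanical. Below is a minimal sketch for U.S. reporter citations of the form "volume Reporter page (year)". The reporter list is a small illustrative subset, not a complete table, and a well-formed citation can still be invented, so this catches only the clumsiest fabrications.

```python
import re

# A small, illustrative subset of U.S. federal reporter abbreviations (not exhaustive).
KNOWN_REPORTERS = {"U.S.", "S. Ct.", "F.2d", "F.3d", "F.4th", "F. Supp. 3d"}

# volume, reporter abbreviation, first page, optional "(year)"
CITE_PATTERN = re.compile(r"^(\d{1,4}) (.+?) (\d{1,5})(?: \((\d{4})\))?$")

def check_reporter_format(cite: str) -> bool:
    """Return True if `cite` has the volume/reporter/page shape and a recognized reporter."""
    m = CITE_PATTERN.match(cite.strip())
    if not m:
        return False
    return m.group(2) in KNOWN_REPORTERS

for cite in ["410 U.S. 113 (1973)", "12 Fake Rptr. 9", "F.3d 456"]:
    print(cite, check_reporter_format(cite))
```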

The problem is scaling human expertise. We can't have expert fact-checkers reviewing every AI interaction. And as these models become more sophisticated at mimicking domain-specific patterns, even experts will struggle to distinguish real from fake without checking original sources.

OpenAI, Anthropic, Google, and other AI developers are well aware of the hallucination problem. They've implemented several mitigation strategies, with mixed results.

One approach is improved training data curation. By filtering out unreliable sources and emphasizing high-quality factual content, companies hope to reduce the frequency of fabrications. Google's Gemini, for instance, prioritizes content from verified sources and includes confidence indicators in some responses.

Retrieval-augmented generation (RAG) systems try to ground AI responses in real documents. When you ask a question, the system searches a knowledge base and instructs the model to answer based only on retrieved information. This works better for specific domains (a company's internal documents, medical literature) than for general knowledge.
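A bare-bones version of that flow is sketched below. Word-overlap ranking stands in for the embedding or search-index retrieval a real system would use, the two documents are hypothetical, and the sketch stops at building the prompt rather than calling any particular model API. Note that the "use only these sources" instruction is advisory: a model can still ignore it.

```python
import re

# Hypothetical knowledge base.
DOCS = {
    "policy-7": "A refund is available within 30 days of purchase.",
    "policy-9": "Shipping takes 5 to 7 business days.",
}

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Rank document IDs by word overlap with the query and keep the top k that overlap at all."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda doc_id: len(q & tokenize(documents[doc_id])), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(documents[d])]

def build_grounded_prompt(query: str, documents: dict[str, str], k: int = 2):
    """Return a prompt restricted to retrieved sources, or None if nothing relevant was found."""
    ids = retrieve(query, documents, k)
    if not ids:
        return None  # nothing relevant: abstaining beats letting the model improvise
    context = "\n".join(f"[{i}] {documents[i]}" for i in ids)
    return (
        "Answer using ONLY the sources below and cite them by ID. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"{context}\n\nQuestion: {query}"
    )

print(build_grounded_prompt("How many days do I have to request a refund?", DOCS))
```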

Some companies are adding uncertainty expressions. Models are trained to say "I don't know" or "I'm not certain" when appropriate. Claude, for instance, has been trained to acknowledge limitations more explicitly than earlier models. But this creates a tradeoff: users often prefer confident answers, even if some are wrong, over cautious responses that might be more accurate.
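One simple way for a system to say "I'm not certain" is post-hoc abstention on a confidence score. This is a separate technique from the training changes described above, not how any named model works. The sketch assumes you can obtain per-token log-probabilities for an answer (some model APIs can return them) and uses their geometric mean as a rough confidence. Token probabilities are often poorly calibrated, so the threshold is a tunable guess, and raising it trades wrong answers for more refusals.

```python
import math

def answer_or_abstain(answer: str, token_logprobs: list[float], threshold: float = 0.7) -> str:
    """Return the answer only if its geometric-mean token probability clears the threshold."""
    if not token_logprobs:
        return "I'm not certain."
    mean_logprob = sum(token_logprobs) / len(token_logprobs)
    confidence = math.exp(mean_logprob)  # geometric mean of the per-token probabilities
    if confidence < threshold:
        return f"I'm not certain (confidence {confidence:.2f})."
    return answer

print(answer_or_abstain("Paris", [math.log(0.95), math.log(0.9)]))
print(answer_or_abstain("1962", [math.log(0.3), math.log(0.4)]))
```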

Constitutional AI is another approach, where models are trained with explicit rules about truthfulness and accuracy. During training, they learn to evaluate their own outputs against principles like "be factual" and "cite real sources." This reduces hallucinations but doesn't eliminate them.

Bing search has been integrated into ChatGPT through OpenAI's partnership with Microsoft, allowing the model to retrieve current information, and OpenAI has since built out its own web search features. But even with internet access, models sometimes ignore retrieved information and generate plausible alternatives instead.

The most effective solution so far is combining multiple verification approaches. A model generates an answer, a retrieval system finds relevant sources, a fact-checking model evaluates claims, and a confidence scorer flags uncertain information. But this is computationally expensive and slower than users expect from AI interactions.
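The layered approach can be sketched as a list of independent checks run over a draft answer. Everything here is hypothetical: `Draft`, the source IDs, and the confidence value are placeholders for what a real retrieval system, fact-checking model, and confidence scorer would produce.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Draft:
    text: str
    sources: list[str]
    confidence: float  # assumed output of an upstream scorer, in [0, 1]

# A check returns a description of the problem, or None if it has no objection.
Check = Callable[[Draft], Optional[str]]

def has_sources(d: Draft) -> Optional[str]:
    return None if d.sources else "no sources cited"

def sources_resolve(known_ids: set[str]) -> Check:
    """Build a check that fails if any cited source ID is missing from `known_ids`."""
    def check(d: Draft) -> Optional[str]:
        missing = [s for s in d.sources if s not in known_ids]
        return f"unresolved sources: {missing}" if missing else None
    return check

def confident_enough(threshold: float) -> Check:
    def check(d: Draft) -> Optional[str]:
        return None if d.confidence >= threshold else f"low confidence ({d.confidence:.2f})"
    return check

def review(draft: Draft, checks: list[Check]) -> list[str]:
    """Run every check and collect the problems found."""
    return [p for p in (c(draft) for c in checks) if p]

checks = [has_sources, sources_resolve({"doc-1", "doc-2"}), confident_enough(0.8)]
draft = Draft("Revenue grew 4% [doc-1][doc-9].", ["doc-1", "doc-9"], 0.65)
print(review(draft, checks))
```

An empty result means only that no check objected, not that the answer is true. Each extra stage also adds latency and cost, which is the tradeoff described above.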

Until technology solves the hallucination problem (and there's no guarantee it will), users need defensive strategies. Here's what actually works:

Verify citations independently. If an AI cites a paper, case, or article, look it up yourself. Don't just trust that it exists. A search for the title, author, or publication in a search engine or the relevant database (a case-law service, a journal index, a library catalog) should turn up the actual source. If it doesn't turn up anywhere, treat it as probably fabricated.

Cross-check important claims. For any factual statement that matters to your work or decisions, find confirmation from established sources. Wikipedia, academic databases, official government sites, and reputable news outlets are more reliable than AI-generated content.

Look for red flags. Overly specific details can signal fabrication. If an AI provides an exact date, precise statistics, or detailed quotes without citing a source, be skeptical. Real information usually comes with some uncertainty and multiple perspectives.
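That red-flag rule can be roughed out as a heuristic: flag sentences that contain specifics (digits or quotation marks) but no citation marker. The citation conventions assumed here (bracketed numbers like "[2]" or a URL) are hypothetical, and the naive sentence splitter will mis-split abbreviations. Expect false positives and negatives, and remember that a citation marker does not make a claim real.

```python
import re

SPECIFIC = re.compile(r'\d|"')              # digits or quotation marks suggest a specific claim
CITED = re.compile(r"\[\d+\]|https?://")    # assumed citation conventions

def flag_unsourced_specifics(text: str) -> list[str]:
    """Return sentences that contain specific details but no recognizable citation."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if SPECIFIC.search(s) and not CITED.search(s)]

text = 'Sales rose 37.2% in March. Experts are divided on the cause. The CEO said "we expect growth" [2].'
print(flag_unsourced_specifics(text))
```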

Use AI for drafts, not finals. Treat AI-generated content as a starting point requiring verification, not a finished product. It's excellent for brainstorming, outlining, and exploring ideas, but every factual claim needs human review before you rely on it.

Ask for sources explicitly. When you want factual information, request specific citations in your prompt: "List your sources" or "Provide links to references." This doesn't eliminate hallucinations, but it makes them easier to spot because you can check whether the sources exist.

Develop domain expertise. The best defense against AI fabrication is knowing enough about a subject to recognize when something sounds wrong. You don't need to be an expert in everything, but having solid knowledge in your field helps you catch fabrications.

Use specialized tools. For critical applications like legal research or medical information, use purpose-built AI systems with verification layers rather than general chatbots. These tools are designed with higher accuracy standards and domain-specific safeguards.

The hallucination problem has different stakes depending on where AI is deployed. In some domains, the consequences of fabrication are catastrophic. In others, they're merely annoying.

Healthcare presents perhaps the highest risk. A doctor using an AI diagnostic aid that hallucinates drug interactions or invents treatment guidelines could harm patients. Several medical AI systems have been pulled from the market after generating dangerous recommendations. The FDA is now developing specific guidelines for AI in medical settings, with particular attention to hallucination risks.

Legal practice has been hit hard, as the California and Utah cases demonstrate. Beyond the obvious problem of fake case citations, AI can fabricate procedural rules, invent legal precedents, or misrepresent statutes. State bar associations are now requiring continuing education on AI limitations, and some jurisdictions mandate that lawyers verify any AI-generated legal content.

Education faces a different challenge. Students using AI to write papers with fabricated sources learn bad research habits. They're training themselves to trust unverified information and to view citation as a formality rather than a way to ground claims in evidence. Some educators argue that AI writing tools should be banned entirely until hallucinations are solved; others believe we need to teach critical evaluation skills explicitly.

Journalism and media are particularly vulnerable because fabricated quotes, invented statistics, and fake sources can spread rapidly before fact-checkers catch them. Several news outlets have published AI-assisted articles that later required corrections. The Society of Professional Journalists now recommends that any AI-generated content undergo the same verification process as human-written material.

Business intelligence and decision-making rely on accurate information. When executives make strategic choices based on AI-summarized reports that contain fabrications, the consequences ripple through entire organizations. Some companies now require dual verification: AI analysis must be checked against original documents by human analysts before informing major decisions.
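Part of that dual verification can be automated before a human analyst looks at it: list every number in the AI summary that does not appear anywhere in the source document. The sketch below is a simple string-level check under that assumption. It will flag legitimately derived figures and differently formatted values (such as "4.2 million" versus "4,200,000"), and a number that does appear may still be misattributed, so it narrows the analyst's work rather than replacing it.

```python
import re

NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")  # integers, decimals, thousands separators, percentages

def unsupported_numbers(summary: str, source: str) -> list[str]:
    """Return numbers that appear in the summary but nowhere in the source text."""
    source_numbers = set(NUMBER.findall(source))
    return [n for n in NUMBER.findall(summary) if n not in source_numbers]

source = "Q3 revenue was 4.2 million, up 8% from Q2."
summary = "Revenue reached 4.2 million, a 12% increase."
print(unsupported_numbers(summary, source))
```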

How societies are responding to AI hallucinations reveals deeper cultural differences about trust, verification, and the role of technology in decision-making.

In the United States, the approach has been reactive and legalistic. Courts impose sanctions on lawyers who rely on fabricated citations. Regulatory bodies issue guidelines. Professional associations develop standards. The emphasis is on individual responsibility: users should verify AI outputs, and those who don't face consequences.

European Union regulations take a more proactive approach. The AI Act includes specific provisions about accuracy and hallucinations, requiring companies to assess and mitigate fabrication risks before deployment. The emphasis is on preventing harm before it occurs, placing more responsibility on AI developers than end users.

China's approach combines technical solutions with social controls. Major AI companies are required to implement verification systems and report hallucination rates to regulators. Users are educated about AI limitations through government-coordinated campaigns. The goal is maintaining social stability by preventing the spread of AI-generated misinformation.

Japan has focused on industry self-regulation and collaborative standards development. Tech companies, academic researchers, and government officials work together to establish best practices. The cultural emphasis on collective responsibility means companies are expected to solve the problem collaboratively rather than competing for market advantage.

In developing countries, the hallucination problem is compounded by limited access to verification resources. A student in rural India or Kenya might lack reliable internet to fact-check AI claims. This creates new forms of information inequality, where wealthier users can verify AI outputs while others cannot.

Within the next decade, you'll likely interact with AI systems hundreds of times per day. Some will be obvious (chatbots, virtual assistants), others invisible (content recommendations, search results, automated decisions). The hallucination problem won't be solved by then. So how do we adapt?

First, we need to develop new forms of information literacy. Just as previous generations learned to evaluate print sources and internet content, the next generation must learn to assess AI-generated information. This means understanding how these systems work, recognizing their limitations, and maintaining healthy skepticism.

Second, we'll need better tools and infrastructure. Verification systems must become as ubiquitous and easy to use as AI itself. Imagine browser extensions that automatically fact-check AI-generated content, or communication platforms that flag unverified claims in real-time. These tools are beginning to emerge, but they need to scale dramatically.

Third, professional standards must evolve. Every field that adopts AI needs explicit guidelines about verification requirements. Lawyers must check citations. Doctors must confirm diagnoses. Journalists must verify facts. Teachers must educate students about AI limitations. These practices need to become as automatic as wearing seatbelts.

Fourth, we should demand transparency from AI companies. Models should indicate when they're uncertain, distinguish between retrieved facts and generated content, and provide audit trails for important decisions. Some of this is happening, but user pressure can accelerate progress.
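What "distinguishing retrieved facts from generated content" could mean in practice is sketched below as a data structure: each span of an answer carries its origin and, if retrieved, a source ID, which supports both labeled display and an audit log. The `Span` type, the "retrieved"/"generated" labels, and the source ID are hypothetical choices, not any vendor's format.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Span:
    text: str
    origin: str                      # "retrieved" or "generated" (assumed labels)
    source_id: Optional[str] = None  # set only for retrieved spans

def render_with_provenance(spans: list[Span]) -> str:
    """Label each span so readers can see which parts are grounded in a source."""
    parts = []
    for s in spans:
        if s.origin == "retrieved" and s.source_id:
            parts.append(f"{s.text} [from {s.source_id}]")
        else:
            parts.append(f"{s.text} [generated, unverified]")
    return " ".join(parts)

def audit_log(spans: list[Span]) -> list[tuple[int, str, Optional[str]]]:
    """Record, per span, where the content came from."""
    return [(i, s.origin, s.source_id) for i, s in enumerate(spans)]

spans = [
    Span("Refunds are available within 30 days.", "retrieved", "policy-7"),
    Span("Most customers find this generous.", "generated"),
]
print(render_with_provenance(spans))
```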

Fifth, we need to preserve and strengthen human expertise. AI works best as a tool to augment human judgment, not replace it. The temptation is to automate everything, but hallucinations prove that some tasks require human intelligence and accountability.

Finally, we should resist the urge to trust AI by default. The baseline assumption should be skepticism, with trust earned through verification. This might sound exhausting, but it's the only rational response to systems that confidently fabricate information.

The confidence crisis isn't just about fixing AI. It's about fundamentally rethinking our relationship with automated information systems. We're learning, painfully and expensively, that fluency and accuracy are not the same thing. A system that sounds intelligent isn't necessarily reliable. Confidence doesn't equal correctness.

The California lawyer's $10,000 fine is a relatively small price for that lesson. But the real cost is measured in eroded trust, wasted resources, and the growing gap between what AI seems capable of and what it can actually deliver reliably.

The machines will keep hallucinating. The question is whether we'll learn to see through their confidence before trusting them with decisions that matter. The next few years will determine if we develop the skills and systems to verify AI outputs effectively, or if we sleepwalk into a future where fabricated facts are indistinguishable from reality.

That's the real crisis: not that AI makes things up, but that we might stop caring whether what it tells us is true.

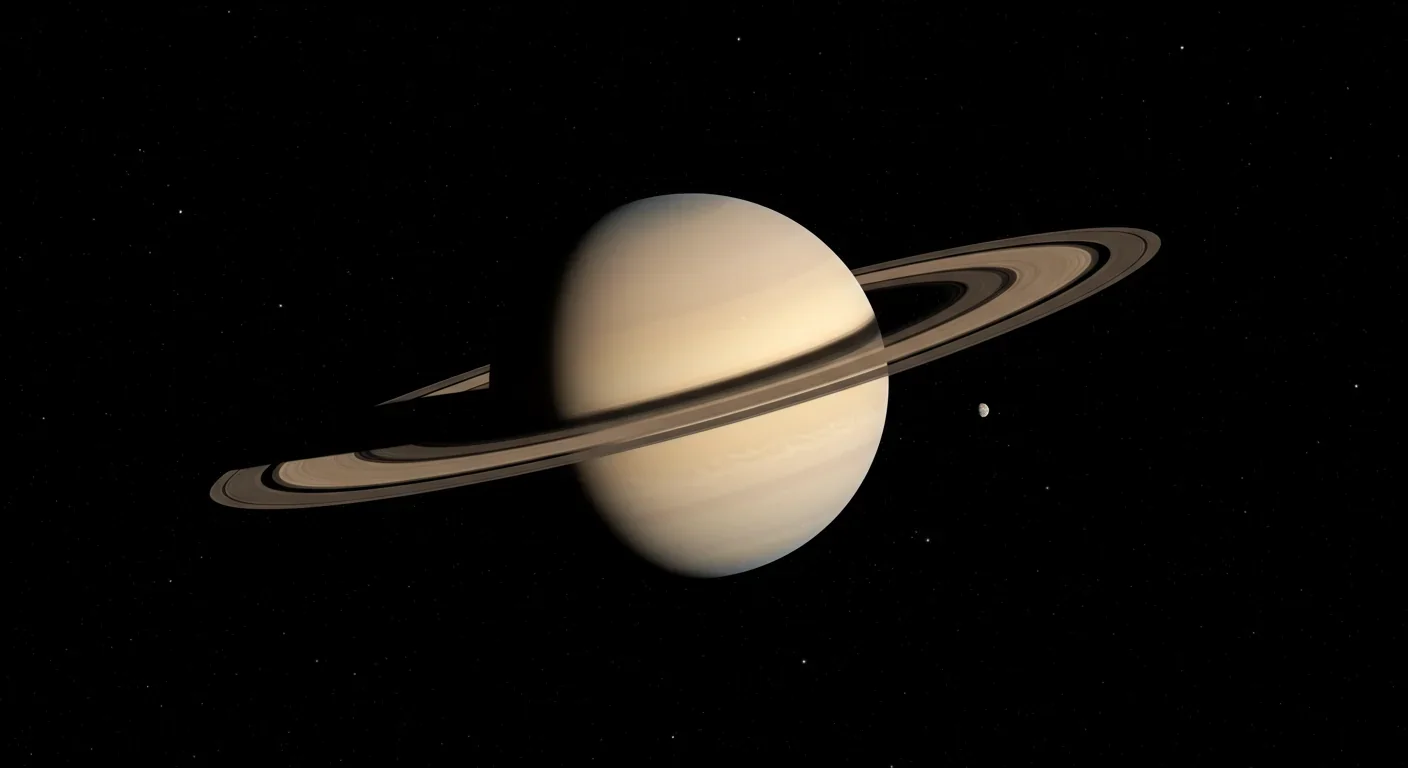

Saturn's iconic rings are temporary, likely formed within the past 100 million years and will vanish in 100-300 million years. NASA's Cassini mission revealed their hidden complexity, ongoing dynamics, and the mysteries that still puzzle scientists.

Scientists are revolutionizing gut health by identifying 'keystone' bacteria—crucial microbes that hold entire microbial ecosystems together. By engineering and reintroducing these missing bacterial linchpins, researchers can transform dysfunctional microbiomes into healthy ones, opening new treatments for diseases from IBS to depression.

Marine permaculture—cultivating kelp forests using wave-powered pumps and floating platforms—could sequester carbon 20 times faster than terrestrial forests while creating millions of jobs, feeding coastal communities, and restoring ocean ecosystems. Despite kelp's $500 billion in annual ecosystem services, fewer than 2% of global kelp forests have high-level protection, and over half have vanished in 50 years. Real-world projects in Japan, Chile, the U.S., and Europe demonstrate economic via...

Our attraction to impractical partners stems from evolutionary signals, attachment patterns formed in childhood, and modern status pressures. Understanding these forces helps us make conscious choices aligned with long-term happiness rather than hardwired instincts.

Crows and other corvids bring gifts to humans who feed them, revealing sophisticated social intelligence comparable to primates. This reciprocal exchange behavior demonstrates theory of mind, facial recognition, and long-term memory.

Cryptocurrency has become a revolutionary tool empowering dissidents in authoritarian states to bypass financial surveillance and asset freezes, while simultaneously enabling sanctioned regimes to evade international pressure through parallel financial systems.

Blockchain-based social networks like Bluesky, Mastodon, and Lens Protocol are growing rapidly, offering user data ownership and censorship resistance. While they won't immediately replace Facebook or Twitter, their 51% annual growth rate and new economic models could force Big Tech to fundamentally change how social media works.