TL;DR: Neural architecture search (NAS) is transforming deep learning by automating model design through algorithms that explore architecture spaces and optimize network structures. Leading AutoML platforms like Google AutoML, AutoKeras, and H2O.ai now embed NAS to democratize AI development, though challenges around computational cost, interpretability, and overfitting persist as the field moves toward hybrid human-AI workflows.

For decades, designing deep learning models felt like alchemy. You'd brew together layers, neurons, and activation functions based on intuition, papers from Stanford, or whatever worked last quarter. Sometimes you'd strike gold. More often, you'd waste weeks on architectures that barely outperformed a coin flip.

That era is ending. Neural architecture search (NAS) is fundamentally changing how we build AI, letting algorithms design networks that rival—and often surpass—what human experts create. What once took months of trial and error now happens in days or hours, thanks to AutoML platforms that embed NAS into user-friendly interfaces. The implications reach far beyond convenience: we're witnessing the democratization of AI expertise, where a data scientist in Lagos can access the same model-design capabilities as a researcher at Google.

But this transformation carries weight. As machines learn to build better machines, we're confronting questions about interpretability, computational costs, and the future role of human creativity in AI. The answers will shape not just how we deploy deep learning, but who gets to wield its power.

Traditional model design starts with a human making educated guesses. Should we use convolutional layers or transformers? How deep should the network go? What learning rate makes sense? Each choice multiplies into thousands of permutations, and testing them all would burn through computational budgets faster than you can say "hyperparameter tuning."

NAS flips this script. Instead of manually specifying architecture details, you define a search space (a set of possible operations such as convolutions, pooling layers, or skip connections) and let an algorithm explore it. The system evaluates candidate architectures against your objective function, learns which design patterns perform well, and iteratively refines its search. Depending on the method, that refinement is driven by reinforcement learning, evolutionary search, or gradient descent rather than by blind trial and error.
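To make the search-space idea concrete, here is a minimal sketch of the simplest possible search strategy: plain random search. It is a baseline, not one of the learned strategies real NAS systems use, and everything in it (the `SEARCH_SPACE` dictionary, the toy `evaluate` score) is a hypothetical stand-in. A real system would train each candidate and return its validation accuracy.

```python
import random

# Hypothetical search space: each key is a design decision, each list its options.
SEARCH_SPACE = {
    "num_layers": [2, 4, 8],
    "op": ["conv3x3", "conv5x5", "max_pool"],
    "skip_connection": [True, False],
}

def sample_architecture(rng):
    """Draw one candidate by picking an option for every decision."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def evaluate(arch):
    """Toy objective (an assumption). A real system would train the
    candidate network and return its validation accuracy."""
    score = 0.5 + 0.03 * arch["num_layers"]
    if arch["op"] == "conv3x3":
        score += 0.05
    if arch["skip_connection"]:
        score += 0.1
    return score

def random_search(num_trials, seed=0):
    """Sample candidates independently and keep the best one seen so far."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = evaluate(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score

best_arch, best_score = random_search(num_trials=50)
print(best_arch, round(best_score, 3))
```

Smarter strategies keep the same loop but replace `sample_architecture` with something that learns from earlier scores.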

The breakthrough came with differentiable approaches, particularly DARTS (Differentiable Architecture Search), which transformed NAS from a combinatorial nightmare into something tractable. Early methods based on reinforcement learning and evolutionary search required roughly 2,000 to 3,150 GPU days to find a single architecture. DARTS cut that to a few GPU days by relaxing the discrete choice of operations into a continuous space, enabling gradient-based optimization. Suddenly, NAS wasn't just a research curiosity; it became practical for industry use.
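The continuous relaxation is easier to see in code. The sketch below is a toy illustration, not the DARTS implementation: the candidate operations are hypothetical scalar functions standing in for convolutions and pooling, and the discretization step is simplified to keeping the single strongest operation. Because the output is a softmax-weighted sum, it is differentiable with respect to the architecture weights.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy candidate operations on a scalar input (stand-ins for conv, pooling, etc.).
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
}

def mixed_op(x, alphas):
    """Continuous relaxation: blend every candidate op, weighted by softmax(alphas)."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS.values()))

def discretize(alphas):
    """After search, keep only the op with the largest architecture weight."""
    names = list(CANDIDATE_OPS)
    best_index = max(range(len(alphas)), key=lambda i: alphas[i])
    return names[best_index]

uniform_alphas = [0.0, 0.0, 0.0]       # every op weighted equally at the start
print(mixed_op(3.0, uniform_alphas))   # mean of the three op outputs
print(discretize([0.1, 1.5, -0.3]))    # name of the op with the largest alpha
```

During search, gradient descent nudges the `alphas` toward operations that lower the loss, and the final architecture is read off with something like `discretize`.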

The shift from academic papers to commercial platforms happened faster than most anticipated. Today's AutoML solutions integrate NAS directly into their workflows, abstracting away the complexity while preserving the power.

Google AutoML leverages NAS to automatically design custom neural networks tailored to your dataset. Upload images for classification, and the platform searches for architectures optimized for your specific visual patterns, data volume, and latency requirements. It's running sophisticated search algorithms under the hood, but users interact through a point-and-click interface.

AutoKeras, an open-source alternative, uses NAS to handle everything from image classification to text analysis. A case study on text classification showed how developers with minimal deep learning expertise achieved competitive results in under an hour—a task that previously demanded weeks of architecture experimentation and tuning.

H2O.ai takes a different angle, emphasizing speed and interpretability. Their Driverless AI platform runs NAS alongside feature engineering and model selection, producing not just high-performing models but also explanations of why certain architectures work. This matters in regulated industries where you can't deploy a black box, no matter how accurate.

The common thread? All these platforms treat NAS as infrastructure rather than a science project. You specify your problem, constraints, and success metrics. The system handles the search, evaluation, and deployment.

Theory is elegant, but production results tell the real story. NAS-powered AutoML has accelerated development across domains that previously required deep specialist knowledge.

In medical imaging, researchers at multiple institutions used AutoML platforms with NAS to develop diagnostic models for cancer detection, reducing development time from months to weeks while matching or exceeding human radiologist performance. The key wasn't just speed—it was accessibility. Smaller hospitals without AI teams could deploy state-of-the-art models by leveraging automated architecture search.

Retail giants applied NAS to demand forecasting, where architecture choices significantly impact prediction accuracy. One case study demonstrated how AutoML reduced model development from three weeks to 15 minutes, letting teams iterate on business logic rather than network topology. The automated search discovered architectures with attention mechanisms that captured seasonal patterns human designers had overlooked.

Manufacturing quality control offers another compelling example. Vision systems detecting defects traditionally required painstaking architecture design to balance precision and inference speed. NAS-enabled platforms found lightweight architectures that maintained accuracy while meeting real-time latency constraints—architectures that likely wouldn't have emerged from manual design because they violated conventional wisdom about network depth and width.

The computational story of NAS is paradoxical. Yes, DARTS dropped search costs from thousands of GPU days to a handful. But "efficient" is relative: a few GPU days still represent significant resources, especially for organizations without cloud budgets.

Recent innovations address this through zero-shot NAS, which predicts architecture performance without full training, and weight-sharing techniques that train a supernet once and derive multiple architectures from it. These methods push search times down to hours or even minutes for certain tasks.
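The cost saving behind training-free scoring can be sketched as a two-stage filter: rank every candidate with a cheap score, then spend expensive training only on a shortlist. The code below is a toy under loud assumptions. `cheap_proxy` and `full_evaluate` are made-up functions, and real zero-shot proxies inspect properties of the untrained network rather than multiplying depth by width.

```python
from itertools import product

# Hypothetical candidates: (depth, width) pairs.
candidates = list(product([2, 4, 8], [16, 32, 64]))

calls = {"full": 0}  # counts how many expensive evaluations were run

def cheap_proxy(arch):
    """Made-up training-free score (assumption): larger networks rank higher."""
    depth, width = arch
    return depth * width

def full_evaluate(arch):
    """Stand-in for full training; the scoring formula is illustrative only."""
    calls["full"] += 1
    depth, width = arch
    return 1.0 - 0.05 * abs(depth - 4) - 0.002 * abs(width - 64)

def screen_then_train(cands, top_k):
    """Rank all candidates cheaply, then fully evaluate only the top_k."""
    shortlist = sorted(cands, key=cheap_proxy, reverse=True)[:top_k]
    scored = [(full_evaluate(c), c) for c in shortlist]
    best_score, best_arch = max(scored, key=lambda pair: pair[0])
    return best_arch, best_score

best_arch, best_score = screen_then_train(candidates, top_k=3)
print(best_arch, best_score, calls["full"])
```

Only three of the nine candidates are ever "trained" here. The risk is also visible: if the proxy ranks a strong architecture poorly, the shortlist never contains it.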

Yet efficiency gains come with trade-offs. Faster search often means narrower search spaces, which can miss unconventional architectures that outperform standard designs. Researchers have found that aggressive efficiency optimizations sometimes lead to architectures that overfit validation data or collapse into degenerate solutions dominated by skip connections—the neural network equivalent of taking shortcuts.

The cost equation extends beyond computation to interpretability. Human-designed architectures often reflect clear reasoning: "We added residual connections to address vanishing gradients." NAS-discovered architectures may work brilliantly but offer no intuitive explanation for why this particular arrangement of layers succeeds. When deployment requires regulatory approval or debugging, that opacity becomes a liability.

Automated doesn't mean foolproof. NAS carries specific failure modes that aren't immediately obvious.

Overfitting manifests differently in architecture search than in typical model training. The search process itself can overfit to validation data, discovering architectures that exploit peculiarities of the validation set rather than learning generalizable patterns. In differentiable methods, optimizing the architecture parameters (the alphas) on the same data used for weight training leads to "overfitting of alphas," causing performance to deteriorate on unseen data.
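One common guard is to keep three disjoint data subsets, each with a single job. The helper below is a minimal sketch. The function name, the split fractions, and the integer "examples" are all assumptions chosen for illustration.

```python
import random

def three_way_split(examples, seed=0, train_frac=0.6, val_frac=0.2):
    """Shuffle once, then carve out disjoint train / validation / test subsets."""
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

train, val, test = three_way_split(range(100))

# train: fit the network weights of each candidate
# val:   update architecture parameters or compare candidates
# test:  touched once, to report the final architecture's performance
print(len(train), len(val), len(test))
```

The search never sees `test`, so the final number reported on it is not inflated by the thousands of architecture comparisons made on `val`.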

Search space bias is subtler but equally consequential. Every NAS implementation defines a search space based on operations researchers think are promising. If your problem requires novel architectural elements outside that space, NAS will never find them. This creates a homogenization risk: as platforms standardize search spaces, we might converge on similar solutions even when diversity would serve us better.

Computational inequality looms large. While NAS democratizes access to good architectures, the ability to run NAS remains concentrated among well-resourced organizations. A startup with limited GPU budget may struggle to afford even "efficient" search, creating a two-tier system where some teams automate design while others resort to manual experimentation.

The most sophisticated practitioners aren't choosing between human design and automated search—they're blending both.

Hybrid approaches let human experts define high-level architectural motifs while NAS optimizes details. You might specify "this model needs attention mechanisms and some form of hierarchical feature extraction," then let search algorithms determine optimal layer arrangements, filter sizes, and connection patterns. This division of labor leverages human intuition about problem structure and machine efficiency at exploring combinatorial spaces.

Transfer learning amplifies this partnership. Recent work shows that NAS search results transfer across related tasks, meaning you can seed searches with architectures discovered for similar problems. A model designed for bird classification might inform the starting point for aircraft detection, dramatically reducing search time while incorporating domain knowledge.

Interactive NAS tools let designers guide searches in real-time, pruning unpromising branches or expanding interesting regions of the search space. This feels less like automation replacing humans and more like a sophisticated co-pilot that handles tedious exploration while deferring to human judgment on strategic direction.

While NAS made its name in computer vision, it's spreading to domains where architecture choice matters just as much but receives far less attention.

Natural language processing models benefit enormously from automated architecture search, particularly as transformer variants proliferate. NAS helps navigate the explosion of choices around attention mechanisms, layer normalization, and positional encodings. Research suggests that NAS often finds language-model architectures that balance accuracy and inference speed better than manual designs.

Time series forecasting presents unique challenges because temporal dependencies require architectures that capture both short-term fluctuations and long-term trends. NAS platforms are starting to search over recurrent, convolutional, and attention-based temporal models simultaneously, discovering hybrid architectures that outperform standard LSTM or Transformer baselines.

Multimodal learning, where models process vision, language, and audio together, explodes the architecture search space. How should different modalities interact? Where should fusion happen? NAS offers a systematic way to explore these questions rather than relying on architectural intuitions developed for single-modality tasks.

Edge deployment drives another NAS frontier: hardware-aware architecture search that optimizes not just for accuracy but for specific device constraints. Finding models that run efficiently on mobile phones or IoT sensors requires balancing memory footprint, inference latency, and energy consumption—exactly the kind of multi-objective optimization that NAS handles well.
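Multi-objective selection can be sketched as two steps: discard every candidate that another candidate beats on all objectives (leaving the Pareto front), then pick the most accurate survivor that fits the device budget. The candidate names and numbers below are invented for illustration, and energy consumption is left out to keep the example short.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float    # higher is better
    latency_ms: float  # lower is better
    memory_mb: float   # lower is better

def dominates(a, b):
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    no_worse = (a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
                and a.memory_mb <= b.memory_mb)
    better = (a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
              or a.memory_mb < b.memory_mb)
    return no_worse and better

def pareto_front(cands):
    """Keep only candidates that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]

def pick_for_device(cands, max_latency_ms, max_memory_mb):
    """Most accurate Pareto-optimal candidate within the device budget, or None."""
    feasible = [c for c in pareto_front(cands)
                if c.latency_ms <= max_latency_ms and c.memory_mb <= max_memory_mb]
    return max(feasible, key=lambda c: c.accuracy, default=None)

# Illustrative numbers only, not measurements.
candidates = [
    Candidate("A", accuracy=0.92, latency_ms=40.0, memory_mb=30.0),
    Candidate("B", accuracy=0.90, latency_ms=15.0, memory_mb=12.0),
    Candidate("C", accuracy=0.88, latency_ms=20.0, memory_mb=14.0),
    Candidate("D", accuracy=0.85, latency_ms=8.0, memory_mb=6.0),
]

front = pareto_front(candidates)
choice = pick_for_device(candidates, max_latency_ms=20.0, max_memory_mb=16.0)
print([c.name for c in front], choice.name)
```

Candidate C drops out because B is better on every axis. Hardware-aware NAS systems often fold the constraints into the search objective itself rather than filtering afterwards, but the trade-off being navigated is the same.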

As NAS-discovered architectures proliferate in production systems, the interpretability gap grows more urgent. It's one thing to deploy an inscrutable model for ad targeting; it's another when that model makes medical diagnoses or loan decisions.

Recent research distinguishes between interpretability—understanding how a model works internally—and explainability—providing post-hoc justifications for predictions. NAS architectures struggle on both fronts because they lack the design narrative that accompanies human-crafted networks.

Some platforms address this by constraining search spaces to architectures with known interpretable properties, like attention mechanisms that highlight relevant input features. Others generate automated documentation explaining discovered architectures in human-readable terms, though this risks conflating a description of what the architecture does with an explanation of why it works.

The tension is real: NAS finds better models precisely by exploring unconventional designs that violate our intuitions. Insisting on interpretability might mean sacrificing the performance gains that make NAS valuable. Reconciling these goals remains an open challenge, particularly as regulations around AI transparency tighten.

The trajectory points toward faster, cheaper, and more accessible architecture search. Emerging techniques like zero-shot NAS and neural predictor models that estimate architecture performance without training hint at a future where search happens in seconds rather than hours.

Lowering computational barriers matters immensely for democratization. If a researcher in a developing country can run NAS on a laptop rather than a GPU cluster, we unlock global participation in AI development. Early experiments with efficient AutoML show promise, but accessibility remains uneven.

The knowledge transfer problem also looms. Current platforms treat each search as independent, discarding the learned patterns once an architecture is found. Future systems might accumulate architectural knowledge across searches, building meta-models that predict which design patterns work for different problem classes. Imagine an AutoML platform that knows "for medical imaging with limited labeled data, architectures with these properties tend to excel" based on millions of previous searches.

Hybrid human-AI workflows will likely become standard practice. Rather than framing this as automation versus manual design, expect tools that let experts express high-level intentions and constraints while machines handle combinatorial optimization. The goal isn't to eliminate human creativity but to amplify it—letting domain experts focus on problem formulation while automation handles implementation details.

With NAS-powered AutoML maturing, selecting the right platform depends less on whether they use architecture search and more on how they implement it.

Consider computational budget: Google AutoML offers powerful search but requires cloud resources. Open-source AutoKeras runs locally, giving you control over costs but potentially limiting search scope.

Evaluate interpretability requirements: If you need to explain model decisions to regulators or stakeholders, platforms like H2O.ai that emphasize explainability make more sense than pure performance optimizers.

Assess domain fit: Some platforms specialize in vision, others in tabular data or NLP. Benchmark comparisons show significant performance variation across domains, so match the platform's strengths to your problem type.

Factor in expertise: AutoML promises to democratize deep learning, but platforms vary in how much ML knowledge they assume. Some abstract away all details; others expose hyperparameters for fine-tuning, which helps experts but overwhelms beginners.

Plan for iteration: The best results often come from multiple search runs with refined constraints. Platforms that enable quick iteration cycles and support transfer learning between searches accelerate this process.

Neural architecture search represents more than a technical advance—it's a shift in how humanity builds intelligence. We've moved from crafting models by hand to defining goals and letting algorithms discover solutions, sometimes surprising us with their creativity.

This transition raises profound questions. As machines become better at designing other machines, does human intuition about neural networks become obsolete, or does it evolve into higher-level strategic thinking? Will automated design concentrate AI capabilities in the hands of those with computational resources, or will efficiency gains genuinely democratize access?

The answers depend on choices being made right now: whether we prioritize interpretability alongside performance, whether we lower barriers to entry or accept a two-tier system, whether we view NAS as replacing human expertise or augmenting it.

What's clear is that AutoML platforms powered by NAS have already transformed what's possible for data scientists, engineers, and researchers. The question isn't whether to adopt these tools but how to wield them responsibly, ensuring that automation amplifies human capability rather than replacing human judgment. That balance—between the efficiency of algorithms and the wisdom of people—will determine whether NAS fulfills its democratizing promise or simply automates existing inequalities at scale.

The revolution in how we build AI is underway. The challenge now is making sure it's a revolution that lifts everyone.

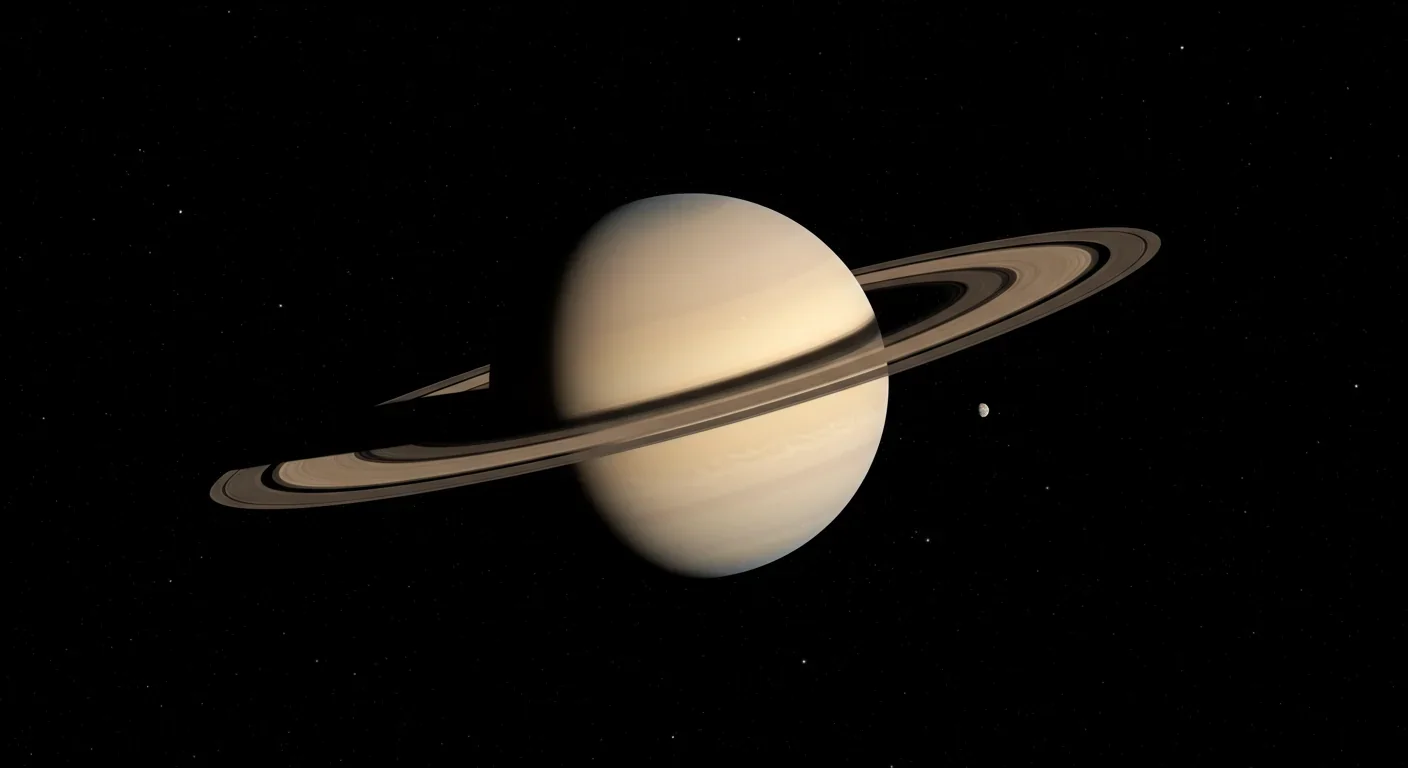

Saturn's iconic rings are temporary, likely formed within the past 100 million years and will vanish in 100-300 million years. NASA's Cassini mission revealed their hidden complexity, ongoing dynamics, and the mysteries that still puzzle scientists.

Scientists are revolutionizing gut health by identifying 'keystone' bacteria—crucial microbes that hold entire microbial ecosystems together. By engineering and reintroducing these missing bacterial linchpins, researchers can transform dysfunctional microbiomes into healthy ones, opening new treatments for diseases from IBS to depression.

Marine permaculture—cultivating kelp forests using wave-powered pumps and floating platforms—could sequester carbon 20 times faster than terrestrial forests while creating millions of jobs, feeding coastal communities, and restoring ocean ecosystems. Despite kelp's $500 billion in annual ecosystem services, fewer than 2% of global kelp forests have high-level protection, and over half have vanished in 50 years. Real-world projects in Japan, Chile, the U.S., and Europe demonstrate economic via...

Our attraction to impractical partners stems from evolutionary signals, attachment patterns formed in childhood, and modern status pressures. Understanding these forces helps us make conscious choices aligned with long-term happiness rather than hardwired instincts.

Crows and other corvids bring gifts to humans who feed them, revealing sophisticated social intelligence comparable to primates. This reciprocal exchange behavior demonstrates theory of mind, facial recognition, and long-term memory.

Cryptocurrency has become a revolutionary tool empowering dissidents in authoritarian states to bypass financial surveillance and asset freezes, while simultaneously enabling sanctioned regimes to evade international pressure through parallel financial systems.

Blockchain-based social networks like Bluesky, Mastodon, and Lens Protocol are growing rapidly, offering user data ownership and censorship resistance. While they won't immediately replace Facebook or Twitter, their 51% annual growth rate and new economic models could force Big Tech to fundamentally change how social media works.