The Authenticity Paradox in Social Media Explained

TL;DR: Social contagion spreads through networks using the same mathematical principles as disease outbreaks, with network structure, centrality, and reinforcement thresholds determining which behaviors go viral and which fizzle out.

Some researchers predict that within five years, forecasting viral trends will be as precise as forecasting the weather. Right now, most marketers still treat virality like magic, hoping their content catches lightning in a bottle. But scientists have spent decades mapping exactly how ideas, behaviors, and emotions spread through networks using the same mathematical models that track disease outbreaks. The difference? Understanding these patterns gives you the ability to design contagion instead of just hoping for it.

When epidemiologists model how flu spreads through a population, they use SIR models (Susceptible-Infected-Recovered) that track who's vulnerable, who's contagious, and who's immune. Social contagion works remarkably similarly. Instead of viruses jumping between hosts, we're watching memes, behaviors, and beliefs jump between minds through social networks and digital platforms.
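To make the SIR idea concrete, here is a minimal sketch of the model as a day-by-day update. The names `beta` (transmission rate) and `gamma` (recovery rate) and every number below are illustrative assumptions, not values taken from any study mentioned here.

```python
def simulate_sir(population, initial_infected, beta, gamma, days):
    """Discrete-time SIR model; returns one (S, I, R) tuple per day."""
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    history = [(s, i, r)]
    for _ in range(days):
        # New infections scale with contacts between susceptible and infected.
        new_infections = beta * s * i / population
        # A fixed fraction of the infected recover (or lose interest) each day.
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        history.append((s, i, r))
    return history


# Assumed toy values: 10,000 people, 10 initial "carriers" of an idea.
history = simulate_sir(population=10_000, initial_infected=10,
                       beta=0.3, gamma=0.1, days=160)
peak_day = max(range(len(history)), key=lambda day: history[day][1])
print(f"Infections peak on day {peak_day}")
```

In a social reading of the same code, "infected" means actively sharing and "recovered" means no longer passing the idea along.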

The math is nearly identical. Both biological and social contagion follow predictable curves, have tipping points where spread accelerates exponentially, and depend heavily on network structure. A single highly connected person, a "super-spreader" in epidemiological terms, can trigger cascades that reach millions. The key difference is that social contagion often requires reinforcement—you're more likely to adopt a behavior after seeing multiple friends do it, whereas one exposure to influenza is usually enough.

Recent studies using Independent Cascade models show that social influence spreads through opinion networks in waves, with early adopters playing the role of patient zero. The progression mirrors disease spread so closely that researchers now use the same simulation tools for both. When Facebook launched its News Feed in 2006, it essentially created the world's largest petri dish for studying social contagion.
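A rough sketch of an Independent Cascade run looks like this. The toy network, the node names, and the 0.5 activation probability are all hypothetical; real studies estimate these from data.

```python
import random
from collections import deque


def independent_cascade(graph, seeds, prob, rng):
    """Run one Independent Cascade: each newly active node gets a single
    chance to activate each inactive neighbor with probability `prob`."""
    active = set(seeds)
    frontier = deque(seeds)
    while frontier:
        node = frontier.popleft()
        for neighbor in graph[node]:
            if neighbor not in active and rng.random() < prob:
                active.add(neighbor)
                frontier.append(neighbor)
    return active


# Hypothetical toy network: node -> list of neighbors.
graph = {
    "ana": ["ben", "cho"],
    "ben": ["ana", "dev"],
    "cho": ["ana", "dev"],
    "dev": ["ben", "cho", "eli"],
    "eli": ["dev"],
}

rng = random.Random(42)  # fixed seed so repeated runs give the same result
runs = [len(independent_cascade(graph, ["ana"], 0.5, rng)) for _ in range(1000)]
average_reach = sum(runs) / len(runs)
print(f"Average reach from one early adopter: {average_reach:.2f} of {len(graph)}")
```

Because each run is random, a single cascade says little; averaging many runs gives the expected reach of a given starting point.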

Not all network positions are created equal. Your chances of starting or amplifying a viral trend depend almost entirely on where you sit in the social web. Researchers have identified specific structural features that predict contagion speed with startling accuracy.

Betweenness centrality—how often you bridge different groups—correlates more strongly with influence than raw follower count. Someone with 5,000 connections spanning diverse communities will outperform someone with 50,000 followers in a homogeneous bubble. The bridgers control the flow of information between clusters, acting as gatekeepers and amplifiers.
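For readers who want to see how bridging is measured, the sketch below computes unnormalized betweenness centrality with Brandes' algorithm on a small, made-up network: two tight friend groups joined by a single person. The graph and node names are assumptions for illustration only.

```python
from collections import deque


def betweenness_centrality(graph):
    """Brandes' algorithm for an unweighted, undirected graph (unnormalized)."""
    centrality = {node: 0.0 for node in graph}
    for source in graph:
        stack = []
        predecessors = {node: [] for node in graph}
        num_paths = {node: 0 for node in graph}
        num_paths[source] = 1
        distance = {source: 0}
        queue = deque([source])
        # Breadth-first search that counts shortest paths from `source`.
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if w not in distance:
                    distance[w] = distance[v] + 1
                    queue.append(w)
                if distance[w] == distance[v] + 1:
                    num_paths[w] += num_paths[v]
                    predecessors[w].append(v)
        # Walk back from the farthest nodes, accumulating path dependencies.
        dependency = {node: 0.0 for node in graph}
        while stack:
            w = stack.pop()
            for v in predecessors[w]:
                dependency[v] += num_paths[v] / num_paths[w] * (1 + dependency[w])
            if w != source:
                centrality[w] += dependency[w]
    # Each undirected pair was counted from both endpoints, so halve the totals.
    return {node: score / 2 for node, score in centrality.items()}


# Hypothetical network: two triangles of friends joined by one "bridge" person.
graph = {
    "a1": ["a2", "a3"],
    "a2": ["a1", "a3"],
    "a3": ["a1", "a2", "bridge"],
    "bridge": ["a3", "b1"],
    "b1": ["bridge", "b2", "b3"],
    "b2": ["b1", "b3"],
    "b3": ["b1", "b2"],
}

scores = betweenness_centrality(graph)
top_bridger = max(scores, key=scores.get)
print(top_bridger, scores[top_bridger])
```

In this toy graph the bridge has only two connections, fewer than several other nodes, yet it sits on every shortest path between the two groups.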

Star and line network motifs accelerate diffusion dramatically. When researchers analyzed network structures and diffusion speed, they found that star motif concentration correlated at 0.9447 with spread velocity, while line motifs correlated at 0.8345. These local patterns matter more than global metrics. A message passing through a star configuration, where one central node connects to many periphery nodes, spreads 40% faster than through random connections.

Hub-dominated networks create a vulnerability. While they're resilient to random failures, targeted removal of high-centrality nodes can abruptly halt contagion. This has major implications for stopping misinformation—you don't need to remove every false post, just the key amplifiers.
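A small sketch shows the asymmetry between random and targeted removal. The network here is hypothetical (one hub tied to eight accounts that are otherwise linked only in pairs), and the size of the largest connected component is used as a simple stand-in for how far a message could still travel.

```python
from collections import deque


def largest_component_size(graph, removed=frozenset()):
    """Size of the largest connected component after deleting `removed` nodes."""
    seen = set(removed)  # removed nodes are treated as already visited
    best = 0
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:
            node = queue.popleft()
            size += 1
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        best = max(best, size)
    return best


# Hypothetical hub-dominated network.
graph = {"hub": [f"user{i}" for i in range(8)]}
for i in range(8):
    partner = i + 1 if i % 2 == 0 else i - 1
    graph[f"user{i}"] = ["hub", f"user{partner}"]

intact = largest_component_size(graph)
without_random_user = largest_component_size(graph, {"user3"})
without_hub = largest_component_size(graph, {"hub"})
print(intact, without_random_user, without_hub)
```

Removing an ordinary account barely changes the reachable audience, while removing the hub splits the network into isolated pairs.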

Distance between influencers matters too. When selecting multiple seed nodes for a campaign, choosing people far apart in the network increases total reach. BCR (Basic Cycle Ratio), a novel metric that balances local cycle participation with global cohesion, consistently outperforms degree centrality by identifying influential nodes that don't overlap in their spheres of influence. This is why coordinated launches across different communities work better than concentrating all your influencers in one space.
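The overlap problem can be illustrated with a simple reach calculation. This is not the BCR metric itself, only a sketch of why spacing matters: it assumes each seed influences everyone within two hops, on a hypothetical chain of ten communities.

```python
from collections import deque


def reach_within(graph, seeds, max_hops):
    """Nodes within `max_hops` steps of any seed (multi-source BFS)."""
    distance = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the assumed sphere of influence
        for neighbor in graph[node]:
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    return set(distance)


# Hypothetical chain of ten communities, numbered 0 through 9.
chain = {i: [j for j in (i - 1, i + 1) if 0 <= j <= 9] for i in range(10)}

clustered = reach_within(chain, [4, 5], max_hops=2)   # seeds side by side
spread_out = reach_within(chain, [2, 7], max_hops=2)  # seeds far apart
print(len(clustered), len(spread_out))
```

With the same two seeds and the same per-seed radius, the spaced-out pair covers more of the chain because their spheres of influence do not overlap.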

We connect with people like us. This tendency, called homophily, shapes every network from Twitter to your workplace. Birds of a feather don't just flock together—they create the highways along which behaviors travel.

Homophily amplifies social contagion because similar people are more likely to trust each other's choices and adopt each other's behaviors. When you see someone demographically or ideologically similar trying a new fitness routine, you're far more likely to try it yourself than if the recommendation came from someone different. This creates feedback loops where behaviors become more entrenched within groups but struggle to cross demographic or ideological boundaries.

The downside is polarization. As groups self-segregate, they develop distinct information ecosystems. A message spreading in one cluster might never reach another, even though both exist on the same platform. This is why vaccine hesitancy spreads so effectively within certain communities but barely touches others. The network structure itself creates immune systems against outside ideas.

Breaking through homophilic barriers requires strategic bridge-building. Public health campaigns increasingly target connectors between communities, people who maintain ties across different groups. These boundary spanners are rare but incredibly valuable because they can carry behaviors from one cluster to another.

Unlike biological viruses, most social behaviors require multiple exposures before adoption. This is the threshold effect, and it fundamentally shapes how contagion unfolds.

Simple contagion, where one exposure is enough (like sharing a funny meme), spreads quickly but often dies out just as fast. Complex contagion, requiring multiple confirmations (like changing your diet or political beliefs), spreads more slowly but creates lasting change. Research on diffusion thresholds shows that behaviors requiring social validation need denser networks with overlapping connections.
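The contrast between simple and complex contagion can be sketched with a threshold rule: a person adopts once at least `threshold` of their neighbors have adopted. The ring-shaped network below, where everyone knows their two nearest neighbors on each side, is a hypothetical example chosen because its connections overlap.

```python
def threshold_cascade(graph, seeds, threshold):
    """Spread a behavior until no one else has `threshold` adopting neighbors."""
    adopted = set(seeds)
    changed = True
    while changed:
        changed = False
        for node, neighbors in graph.items():
            if node in adopted:
                continue
            exposures = sum(1 for n in neighbors if n in adopted)
            if exposures >= threshold:
                adopted.add(node)
                changed = True
    return adopted


# Hypothetical ring of 12 people, each tied to two neighbors on either side.
n = 12
ring = {i: {(i + d) % n for d in (-2, -1, 1, 2)} for i in range(n)}

simple = threshold_cascade(ring, {0}, threshold=1)             # one exposure is enough
complex_adjacent = threshold_cascade(ring, {0, 1}, threshold=2)  # seeds share neighbors
complex_scattered = threshold_cascade(ring, {0, 6}, threshold=2)  # seeds share none
print(len(simple), len(complex_adjacent), len(complex_scattered))
```

With a threshold of two, adjacent seeds reinforce each other and the behavior travels around the whole ring, while the same number of scattered seeds never gives anyone a second exposure.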

The Ice Bucket Challenge succeeded because it combined both kinds of contagion. Sharing a video was simple contagion, but actually dumping ice water on yourself required social reinforcement: seeing multiple friends do it lowered the threshold. The campaign raised $115 million for ALS research in 2014, showing how public commitment combined with peer pressure can build enormous momentum.

Fashion trends demonstrate threshold effects perfectly. Early adopters have low thresholds, willing to try new styles with minimal social proof. Mainstream consumers have higher thresholds, waiting until 20-30% of their network has adopted before following. Laggards have the highest thresholds, only adopting after behaviors become completely normalized. Successful campaigns sequence their targeting, moving from low-threshold to high-threshold populations as adoption builds.
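The sequencing logic can be sketched with a Granovetter-style threshold model, in which each person adopts once a certain number of others already have. The population of 100 and the specific thresholds are illustrative assumptions, not data about any real market.

```python
def final_adopters(thresholds):
    """Iterate adoption until it stops growing.

    `thresholds[k]` is how many prior adopters person k needs to see
    before adopting; a threshold of 0 marks an unconditional early adopter.
    """
    adopters = 0
    while True:
        new_total = sum(1 for t in thresholds if t <= adopters)
        if new_total == adopters:
            return adopters
        adopters = new_total


# Assumed population: thresholds 0, 1, 2, ..., 99, so each adopter tips the next.
smooth_ladder = list(range(100))

# Same population, except the person with threshold 1 now needs 2 adopters.
broken_ladder = [0, 2] + list(range(2, 100))

print(final_adopters(smooth_ladder), final_adopters(broken_ladder))
```

A single missing rung near the bottom stalls the whole cascade, which is one way to see why campaigns that skip low-threshold audiences can fail to reach the mainstream.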

Computational models of social reinforcement reveal that timing matters enormously. A message arriving when you've already seen two friends adopt a behavior has 5x more impact than the first exposure. This is why coordinated launches, where multiple influencers post simultaneously, create artificial reinforcement that accelerates spread.

In summer 2014, a simple challenge exploded across social media with unprecedented speed and reach. The formula seemed straightforward: record yourself dumping ice water on your head, challenge others, donate to ALS research. But the mechanics beneath the surface reveal why this campaign succeeded where thousands fail.

The challenge leveraged several contagion mechanisms simultaneously. First, it used nomination mechanics, creating direct peer pressure across network ties. When someone tags you by name, refusing becomes socially costly. Second, it required public performance, which activated social proof and status signaling. Third, it bundled entertainment with altruism, lowering psychological barriers to participation.

Network effects amplified quickly because the challenge crossed demographic boundaries. Unlike most viral content that remains trapped in specific clusters, the Ice Bucket Challenge spread from teenagers to celebrities to politicians to CEOs. This boundary-crossing happened because the core mechanic was universally accessible—anyone with a bucket and a phone could participate.

The timing was crucial but accidental. It launched during summer when people were outdoors, making the physical challenge practical. It hit peak velocity in August when social media engagement naturally spikes. And it occurred during a relatively quiet news cycle, reducing competition for attention.

From a contagion perspective, the campaign created a low-threshold simple behavior (sharing a video) that led to a higher-threshold complex behavior (donating money). About 17 million people posted videos, but only 2.5 million donated. That conversion rate would normally be considered poor, but the absolute numbers were staggering because the top-of-funnel spread was so explosive.

The campaign also benefited from what researchers call "costly signaling." Dumping ice water on yourself is mildly unpleasant, which paradoxically increased participation because it demonstrated genuine commitment rather than effortless virtue signaling. People who completed the challenge earned social currency.

The same mechanisms that spread the Ice Bucket Challenge also spread conspiracy theories, health misinformation, and harmful behaviors. The network doesn't distinguish between true and false—it only amplifies what resonates emotionally and socially.

Anti-vaccine misinformation spreads through tightly-knit communities using identical patterns. False claims about vaccine dangers circulate within homophilic clusters where members share similar values and distrust mainstream medicine. These networks have high clustering coefficients, meaning members' friends are also friends with each other, creating echo chambers where misinformation receives constant reinforcement.
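The clustering coefficient mentioned above can be computed directly: for one person, it is the share of pairs of their friends who are also friends with each other. The two small networks below are made up for contrast, a tight group of four and a hub whose contacts do not know one another.

```python
from itertools import combinations


def local_clustering(graph, node):
    """Fraction of a node's neighbor pairs that are themselves connected."""
    neighbors = graph[node]
    k = len(neighbors)
    if k < 2:
        return 0.0  # fewer than two neighbors means no pairs to check
    linked_pairs = sum(1 for a, b in combinations(neighbors, 2) if b in graph[a])
    return linked_pairs / (k * (k - 1) / 2)


# Hypothetical networks: a four-person clique and a four-person star.
graph = {
    "a": {"b", "c", "d"},
    "b": {"a", "c", "d"},
    "c": {"a", "b", "d"},
    "d": {"a", "b", "c"},
    "hub": {"x", "y", "z"},
    "x": {"hub"},
    "y": {"hub"},
    "z": {"hub"},
}

echo_chamber_score = local_clustering(graph, "a")
broadcaster_score = local_clustering(graph, "hub")
print(echo_chamber_score, broadcaster_score)
```

A score near 1 means any claim a member hears is likely to be repeated back by mutual friends, which is the reinforcement loop that complex contagion depends on.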

The challenge is that debunking doesn't spread as well as the original false claim. Corrections require complex contagion—people need multiple exposures to accurate information from trusted sources before updating their beliefs. Meanwhile, the misinformation benefits from simple contagion and emotional resonance.

Platform algorithms worsen the problem by optimizing for engagement rather than accuracy. Content that triggers strong emotions spreads faster, and misinformation often contains more emotionally charged language than factual reporting. This creates a structural advantage for false claims.

Effective counter-strategies focus on network structure rather than just content. Identifying and engaging high-betweenness nodes—people who bridge different communities—allows accurate information to cross cluster boundaries. Pre-bunking, where you inoculate networks with weakened versions of misinformation before the real thing arrives, builds resistance.

Another approach targets the reinforcement threshold. If you can interrupt the cascade early, before it achieves sufficient density for complex contagion, the behavior dies out. This is why rapid response teams monitoring emerging misinformation trends can be effective—they disrupt spread during the vulnerable early phase.

Understanding contagion mechanics transforms how you approach viral marketing, behavior change campaigns, or social movements. Instead of creating content and hoping it spreads, you engineer spread into the design.

Start with seed selection. Identify people with high betweenness centrality who bridge different communities. Research on influential spreaders shows that choosing five strategically positioned seeds outperforms one hundred random seeds. Tools now exist to map social networks and calculate centrality metrics automatically.

Design for progressive reinforcement. Create multiple touchpoints that build on each other. The first exposure should be simple, low-threshold, and shareable. Subsequent exposures should deepen engagement and commitment. Think of it as a funnel where each stage reinforces the next.

Build in nomination mechanics that leverage existing network ties. Challenges, referrals, and tagging systems all exploit social proof and peer pressure. When people make public commitments, they're more likely to follow through and more likely to influence others.

Optimize timing for cascade amplification. Launch strategies that coordinate multiple influencers create artificial reinforcement that kicks off exponential growth. Stagger releases across time zones to maintain momentum while appearing organic.

Reduce friction at every step. Every additional click, form field, or moment of confusion kills potential spread. The Ice Bucket Challenge succeeded partly because participation required minimal effort—record, post, tag. Compare that to campaigns requiring app downloads or account creation, which create bottlenecks that choke off contagion.

Create content that signals identity and values. People share things that help them present themselves to their network. Content that says "this is who I am" or "this is what I believe" spreads faster than purely informational content. The #MeToo movement spread because sharing your story was simultaneously an act of personal courage and collective solidarity.

Use emotional resonance strategically but ethically. Content triggering strong emotions spreads further, but manipulating fear or outrage has social costs. Positive emotions like awe and inspiration also drive sharing while building brand equity rather than burning it.

Machine learning models now forecast social contagion with increasing accuracy. By analyzing network structure, content features, and early adoption patterns, researchers can predict which trends will explode and which will fizzle hours or days before it becomes obvious.

These predictive models look for telltale signatures. Rapid growth in highly clustered networks suggests the contagion will peak quickly but die fast. Slower growth that bridges multiple clusters indicates lasting impact. Content spreading through high-betweenness nodes reaches more diverse populations. Early adoption by accounts with diverse follower bases predicts broad reach.

AI systems are being deployed to both amplify and suppress contagion. Platforms use them to identify emerging misinformation and reduce its spread before it reaches critical mass. Marketers use them to optimize campaign timing and targeting. Governments use them to forecast social movements and public opinion shifts.

The next frontier is real-time intervention. Imagine systems that detect a harmful behavior spreading through a network and automatically deploy targeted counter-messaging to high-centrality nodes, interrupting the cascade before it amplifies. Or imagine tools that identify the optimal moment to launch a product based on network readiness and clustering patterns.

We're also seeing the weaponization of contagion science. State actors and influence operations use these same principles to spread propaganda, suppress dissent, or manipulate elections. The same network analysis that helps public health officials fight disease can help authoritarian regimes identify and isolate dissidents.

This creates an arms race between those engineering contagion and those trying to control it. As models improve, both sides gain capability. The question becomes not just can we predict and control social contagion, but should we, and who gets to decide?

Just as populations develop immunity to biological diseases, social networks can develop resistance to certain types of contagion. Overexposed audiences become skeptical. Techniques that worked once stop working when they become familiar.

Consider viral marketing tactics from the early 2000s. Flash mobs, hidden camera pranks, and manufactured controversies all eventually lost their power because audiences learned to recognize the patterns. Each technique burned out its own effectiveness. This is the Red Queen problem—you need to keep evolving just to maintain the same level of impact.

Building resistance to harmful contagion requires a different approach. Media literacy programs aim to inoculate people against misinformation by teaching them to recognize manipulation tactics. Studies show this works, but it requires reaching people before they're exposed to false claims, and it needs reinforcement over time.

Network-level interventions may be more scalable. Adjusting algorithms to penalize sensationalism, rewarding accuracy, or making sharing slightly more effortful all reduce the spread of low-quality information without requiring individual users to change behavior. These structural interventions reshape the environment rather than trying to change every person.

The challenge is balancing control with freedom. Too much intervention and you risk censorship, echo chambers, and the loss of network value. Too little and you get runaway misinformation, harassment, and social harm. Finding that balance will define the next decade of platform governance.

Whether you're launching a campaign, fighting misinformation, or just trying to understand why everyone suddenly cares about something you'd never heard of yesterday, the same principles apply.

Pay attention to network structure, not just content quality. The best idea in the world dies if it launches in the wrong network configuration. Map your audience's connections before crafting your message.

Recognize that most behaviors require reinforcement. Plan multi-touch strategies that build momentum rather than one-shot gambits. Think in terms of cascades and thresholds, not individual conversions.

Understand the ethics of influence. These tools are powerful, which means they can cause harm. Using contagion mechanics to spread health information is different from manipulating people into buying things they don't need or believing things that aren't true.

Build for the long term. Viral moments fade, but lasting behavior change requires sustained engagement and reinforcement. The Ice Bucket Challenge raised millions in 2014, but ALS organizations struggled to maintain that momentum. One-time virality isn't a strategy; it's a lottery ticket.

Most importantly, remember that we're all part of these networks. Every time you share, like, or comment, you're either accelerating or dampening a contagion cascade. The behaviors we choose to spread shape the culture we live in. The science of social contagion gives us power, but power without wisdom is just noise.

The next time something goes viral, you'll know it's not magic or luck. It's math, psychology, and network structure combining in ways we're just beginning to understand and control. The question is what we'll do with that understanding.

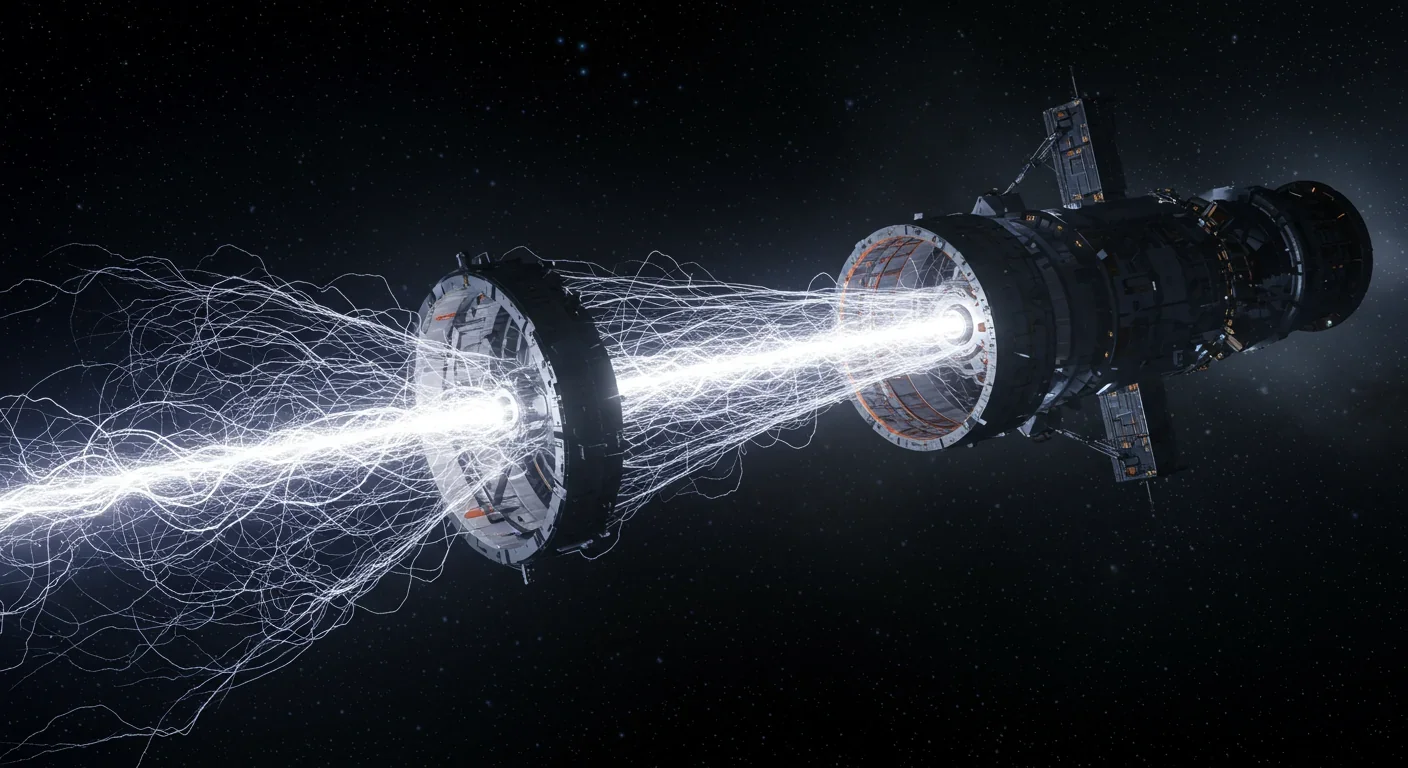

The Bussard Ramjet, proposed in 1960, would scoop interstellar hydrogen with a massive magnetic field to fuel fusion engines. Recent studies reveal fatal flaws: magnetic drag may exceed thrust, and proton fusion loses a billion times more energy than it generates.

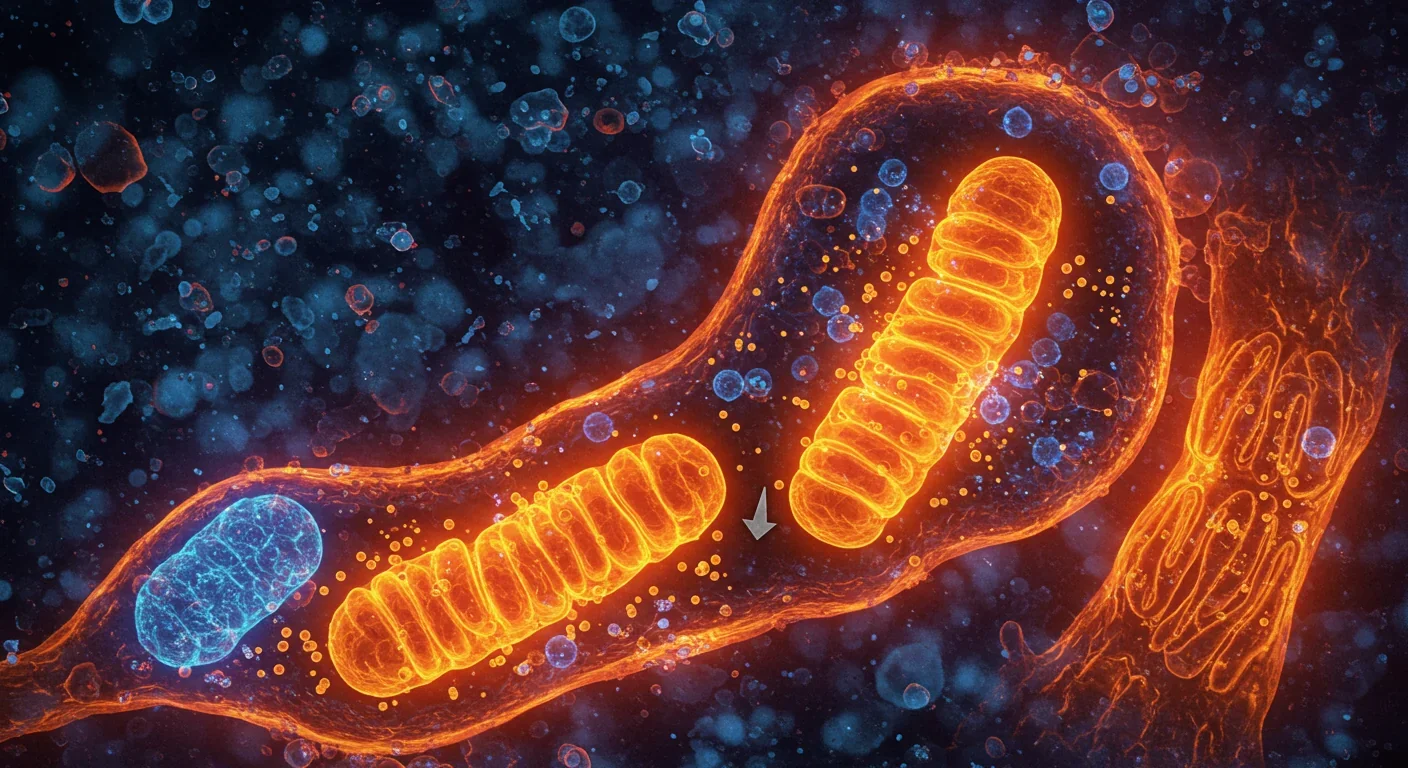

Mitophagy—your cells' cleanup mechanism for damaged mitochondria—holds the key to preventing Parkinson's, Alzheimer's, heart disease, and diabetes. Scientists have discovered you can boost this process through exercise, fasting, and specific compounds like spermidine and urolithin A.

Shifting baseline syndrome explains why each generation accepts environmental degradation as normal—what grandparents mourned, you take for granted. From Atlantic cod populations that crashed by 95% to Arctic ice shrinking by half since 1979, humans normalize loss because we anchor expectations to our childhood experiences. This amnesia weakens conservation policy and sets inadequate recovery targets. But tools exist to reset baselines: historical data, long-term monitoring, indigenous knowle...

Social media has created an 'authenticity paradox' where 5.07 billion users perform carefully curated spontaneity. Algorithms reward strategic vulnerability while psychological pressure to appear authentic causes creator burnout and mental health impacts across all users.

Scientists have decoded how geckos defy gravity using billions of nanoscale hairs that harness van der Waals forces—the same weak molecular attraction that now powers climbing robots on the ISS, medical adhesives for premature infants, and ice-gripping shoe soles. Twenty-five years after proving the mechanism, gecko-inspired technologies are quietly revolutionizing industries from space exploration to cancer therapy, though challenges in durability and scalability remain. The gecko's hierarch...

Cities worldwide are transforming governance through digital platforms, from Seoul's participatory budgeting to Barcelona's open-source legislation tools. While these innovations boost transparency and engagement, they also create new challenges around digital divides, misinformation, and privacy.

Every major AI model was trained on copyrighted text scraped without permission, triggering billion-dollar lawsuits and forcing a reckoning between innovation and creator rights. The future depends on finding balance between transformative AI development and fair compensation for the people whose work fuels it.