AI Training Data Copyright Crisis: Lawsuits & Solutions

TL;DR: Differential privacy and federated analytics are transforming how organizations extract insights from data. Differential privacy adds mathematical noise to protect individuals while preserving aggregate statistics; federated analytics keeps data local, moving computation to the data instead of centralizing raw records. Together, they enable compliance with GDPR, CCPA, and HIPAA, reduce breach risks, and unlock collaborative AI across healthcare, finance, and consumer tech. The global Privacy Enhancing Technologies market is projected to reach $28.4 billion by 2034, driven by regulatory pressure and public demand for privacy. However, challenges remain: privacy-utility trade-offs, budget exhaustion, implementation complexity, and risks of entrenching tech giants. Organizations must invest in privacy engineering skills, open-source tools, and governance to balance individual privacy, collective utility, and equity. The next decade will determine whether these techniques democratize data or consolidate power.

In 2020, the U.S. Census Bureau made a decision that would ripple through every dataset, boardroom, and regulatory framework in the years to come. For the first time in history, they released census statistics using differential privacy—a mathematical technique that adds carefully calibrated noise to data, protecting individual identities while preserving aggregate insights. By 2025, this approach has evolved from a research curiosity into a production reality, powering everything from Apple's emoji suggestions to Google's Chrome telemetry. Meanwhile, a parallel revolution—federated analytics—has emerged, enabling hospitals, banks, and tech giants to train AI models and extract insights without ever centralizing raw data. Together, these techniques are dismantling the long-held assumption that data must be centralized to be useful, and in doing so, they're redefining what privacy means in the age of AI.

The stakes couldn't be higher. Privacy concerns remain the top barrier to AI adoption, with 80% of organizations now dedicating entire risk functions to AI governance. Regulatory enforcement is intensifying: the California Privacy Protection Agency issued its first-ever enforcement advisory on data minimization, the FTC banned data brokers from selling sensitive location data, and the DOJ entered the arena with proposed rules on bulk data transfers to adversarial nations. At the same time, the global Privacy Enhancing Technologies (PETs) market is exploding—projected to grow from $3.17 billion in 2024 to $28.4 billion by 2034, a compound annual growth rate of 24.5%. This isn't hype; it's a structural shift. Organizations that master differential privacy and federated analytics will unlock competitive advantages, regulatory compliance, and customer trust. Those that don't risk fines, breaches, and obsolescence.

Differential privacy is not intuitive. It defies the conventional wisdom that privacy and utility are locked in zero-sum combat. At its core, differential privacy guarantees that the output of an analysis will be roughly the same whether any single individual's data is included or excluded. This is achieved by injecting noise (randomness drawn from distributions such as Laplace or Gaussian) into query results. The amount of noise is calibrated to the sensitivity of the query (how much one person's data can change the result) and the privacy budget, represented by epsilon (ε). Smaller epsilon values yield stronger privacy but lower accuracy. Values between 0.1 and 1 are commonly cited as strong-privacy targets, although some production systems report considerably larger budgets.
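
The "roughly the same" guarantee has a standard formal statement, given here for reference. The symbols M (the randomized mechanism), D and D' (two datasets differing in one individual's record), and S (any set of possible outputs) are notation introduced for this note, not taken from the article:

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[M(D') \in S]
$$

Since e^ε ≈ 1 + ε for small ε, a budget of ε = 0.1 means no outcome can become more than about 10.5% more likely because of any one person's data.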

The genius lies in the mathematics. Differential privacy provides provable guarantees that hold even when an adversary possesses unlimited auxiliary information. Traditional anonymization—removing names, addresses, Social Security numbers—crumbles under linkage attacks. In 1997, a graduate student re-identified Massachusetts Governor William Weld's medical records by linking anonymized hospital data to voter registration lists. Differential privacy renders such attacks moot. No matter what external datasets an adversary combines, the noise ensures that the presence or absence of any individual remains ambiguous.

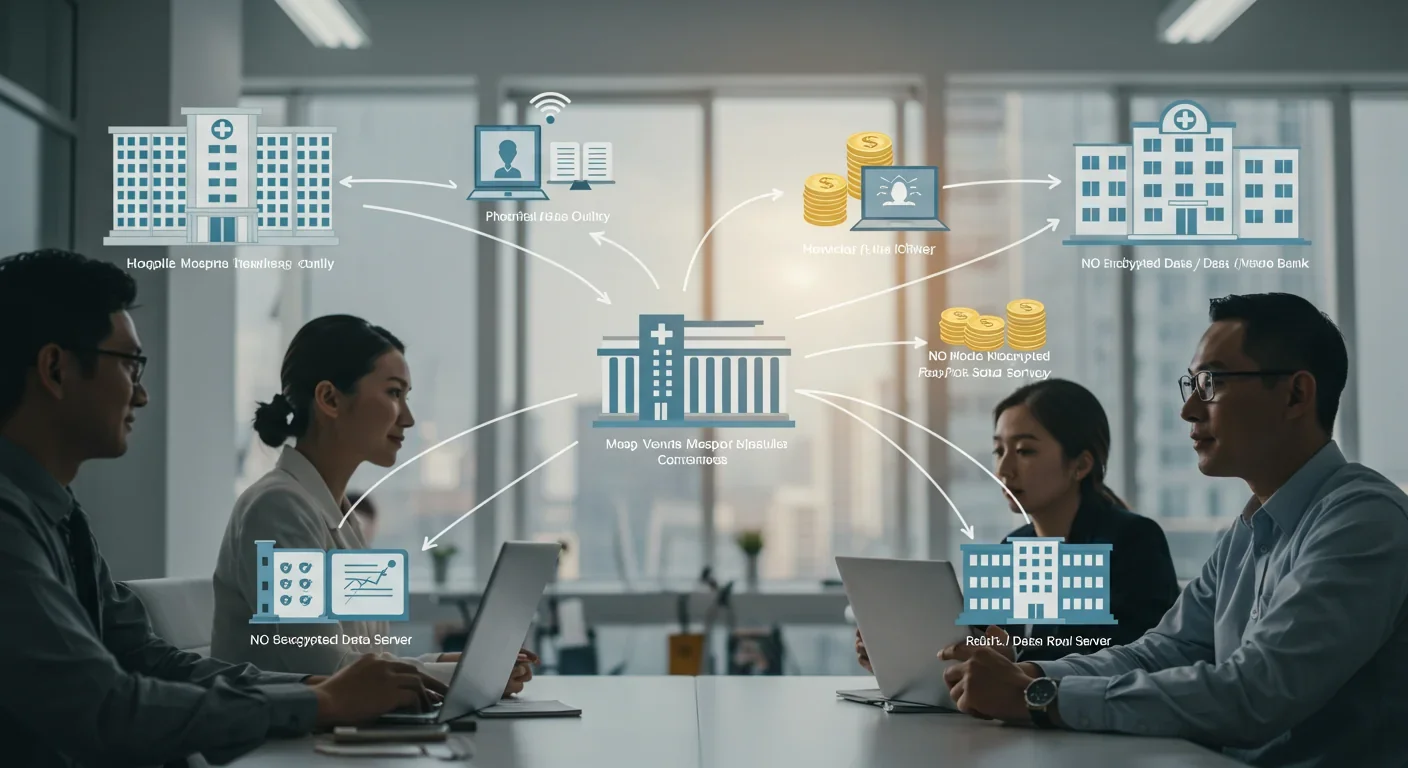

Federated analytics operates on a different principle: data locality. Instead of moving data to the computation, federated systems move the computation to the data. In federated learning—a subset of federated analytics—multiple devices or institutions train a shared machine learning model on their local data, then send only model updates (gradients) to a central server. The server aggregates these updates to improve the global model, but raw data never leaves its origin. This architecture inherently protects against data breaches that plague centralized repositories. If a server is compromised, attackers gain access to aggregated model parameters, not to millions of individual records.

The two techniques are complementary, not competing. Differential privacy excels when aggregate statistics or model outputs must be publicly released from a central dataset. Federated analytics thrives when raw data cannot be centrally stored due to legal, technical, or trust constraints. Increasingly, organizations layer both: federated learning keeps data local, while differential privacy adds noise to the model updates, creating a two-tier defense against inference attacks.

The story of privacy-preserving analytics is a history of breaches, scandals, and regulatory backlash. In the pre-digital era, privacy depended on obscurity—data existed, but was too fragmented and inaccessible to be weaponized. The internet changed that. By the 2000s, data brokers were aggregating billions of records, and researchers were demonstrating linkage attacks with alarming ease. The Weld incident in 1997 was a watershed. Netflix's 2006 Prize dataset—supposedly anonymized movie ratings—was quickly re-identified by cross-referencing with IMDb. Facebook's 2018 Cambridge Analytica scandal exposed how seemingly innocuous data could be repurposed for mass manipulation. Each breach eroded public trust and galvanized regulators.

Differential privacy emerged from academia in 2006, when cryptographers Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith published "Calibrating Noise to Sensitivity in Private Data Analysis." The paper introduced the Laplace mechanism—adding noise proportional to a query's sensitivity—and formalized the privacy guarantee. For nearly a decade, differential privacy remained a theoretical construct, confined to conferences and whitepapers. Then, in 2014, Google deployed RAPPOR (Randomized Aggregatable Privacy-Preserving Ordinal Response) in Chrome, using local differential privacy to collect telemetry without learning individual browsing habits. In 2016, Apple followed with iOS 10, applying local DP to emoji suggestions, QuickType, and Safari analytics. Both companies demonstrated that differential privacy could scale to billions of devices while preserving utility.

Federated learning took a different path. Google introduced the term in 2016, describing a system to improve Gboard's predictive text by training on users' smartphones without uploading keystrokes. The approach addressed a practical problem: transmitting gigabytes of raw text from millions of devices was bandwidth-prohibitive and privacy-invasive. By 2020, federated learning had expanded beyond mobile keyboards. Hospitals began using it to train diagnostic models on patient data siloed by HIPAA. Banks deployed it for fraud detection without sharing transaction records. The COVID-19 pandemic accelerated adoption; the COVID-19 Host Genetics Initiative used federated analytics to analyze genetic data from over 20,000 ICU patients across multiple countries, all while respecting data sovereignty laws.

The regulatory landscape has hardened in parallel. Europe's GDPR (2018) and California's CCPA (2020) imposed strict data minimization and purpose limitation requirements. In 2024, the California Privacy Rights Act (CPRA) expanded these mandates to educational data, and the California Privacy Protection Agency issued its first enforcement advisory, declaring data minimization a "foundational principle." Differential privacy and federated analytics have become compliance tools, not just privacy enhancements. Organizations adopt them to satisfy regulators, reduce breach liability, and signal trustworthiness to customers.

Differential Privacy Mechanisms

The Laplace mechanism is the workhorse of differential privacy. Given a query with sensitivity Δf (the maximum change in output when one record is added or removed), the mechanism adds noise drawn from a Laplace distribution with scale Δf/ε. For example, if a query counts the number of individuals with a certain attribute, and the sensitivity is 1 (adding one person changes the count by at most 1), then for ε = 0.1, the noise scale is 10. A true count of 1,000 might be reported as 1,002.09 or 997.32. The randomness obscures the contribution of any single individual.
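
The counting example above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library. The function names `laplace_noise` and `private_count` are made up for this sketch. Floating-point sampling like this is fine for building intuition but is exposed to the precision attacks described under implementation vulnerabilities, so it should not be treated as production-grade.

```python
import random


def laplace_noise(scale: float) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return random.expovariate(rate) - random.expovariate(rate)


def private_count(true_count: int, epsilon: float, sensitivity: float = 1.0) -> float:
    """Return the count plus Laplace noise with scale sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = sensitivity / epsilon  # e.g. 1 / 0.1 = 10
    return true_count + laplace_noise(scale)


if __name__ == "__main__":
    # Each run prints different values scattered around 1000.
    for _ in range(3):
        print(round(private_count(1000, epsilon=0.1), 2))
```

With ε = 0.1 and sensitivity 1, the typical absolute error is about 10, which is why a true count of 1,000 is usually reported within a few tens of the real value.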

The Gaussian mechanism provides (ε, δ)-differential privacy, where δ is a small probability of privacy failure (typically 10⁻⁵). It adds noise from a Gaussian distribution and is often used in machine learning, where gradients are clipped and noised during training. Differentially Private Stochastic Gradient Descent (DP-SGD) clips each example's gradient and injects Gaussian noise into the aggregated update, limiting the influence of any single training example. This technique powers privacy-preserving AI: Google uses it for federated learning in Gboard, and researchers have trained medical image classifiers with DP-SGD to prevent memorization of individual patients.
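
For a sense of scale, the classic calibration of the Gaussian mechanism (valid for 0 < ε < 1) sets the noise standard deviation from the query's L2 sensitivity Δ₂. The values ε = 0.5 and Δ₂ = 1 below are illustrative choices for this note, not figures from any deployment:

$$
\sigma \;\ge\; \frac{\Delta_2 \sqrt{2 \ln(1.25/\delta)}}{\varepsilon}
\qquad\Longrightarrow\qquad
\sigma \;\ge\; \frac{1 \cdot \sqrt{2 \ln(1.25 / 10^{-5})}}{0.5} \approx 9.69
$$

Tighter analyses exist and are generally preferred in practice, so this bound is best read as a conservative starting point.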

The privacy budget (ε) is consumed with each query. If an analyst runs ten queries on the same dataset, each with ε = 0.1, the total privacy loss is 1.0 (under basic composition). Advanced composition theorems can tighten this bound, but the fundamental truth remains: repeated queries deplete the budget. Apple addresses this by resetting its privacy budget daily, though critics note this reintroduces risk for users who contribute data every day. The U.S. Census Bureau allocated a fixed budget for its 2020 release, trading off geographic granularity for stronger privacy—a decision that sparked controversy when local governments complained about noisy block-level counts.
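
Basic composition amounts to simple bookkeeping, which the sketch below makes concrete. The class `PrivacyBudget` and the exception `BudgetExhausted` are hypothetical names for illustration. Real accountants typically use tighter composition bounds than this plain sum.

```python
class BudgetExhausted(Exception):
    """Raised when a query would push total privacy loss past the budget."""


class PrivacyBudget:
    """Tracks cumulative epsilon under basic (additive) composition."""

    def __init__(self, total_epsilon: float):
        self.total = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return max(0.0, self.total - self.spent)

    def spend(self, epsilon: float) -> None:
        """Record one query's epsilon, refusing it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so that ten charges of 0.1 fit in a budget of 1.0
        # despite floating-point rounding.
        if self.spent + epsilon > self.total + 1e-12:
            raise BudgetExhausted(f"only {self.remaining:.3f} epsilon left")
        self.spent += epsilon


if __name__ == "__main__":
    budget = PrivacyBudget(total_epsilon=1.0)
    for _ in range(10):
        budget.spend(0.1)  # ten queries at 0.1 use the whole budget
    try:
        budget.spend(0.1)  # the eleventh query is refused
    except BudgetExhausted as err:
        print("refused:", err)
```

Refusing the query rather than silently answering it is the design choice that forces analysts to prioritize, which is the practical meaning of a finite budget.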

Federated Analytics Architecture

Federated learning unfolds in four stages. First, a central server initializes a global model and distributes it to participating nodes (devices, hospitals, data centers). Second, each node trains the model locally on its private data, computing gradients or model updates. Third, nodes encrypt and upload only these updates—never raw data—to the server. Fourth, the server aggregates the updates (typically via Federated Averaging, FedAvg, which computes a weighted mean) to produce a new global model. The cycle repeats until convergence.
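
The four stages can be sketched with a toy one-parameter model (fitting y = w·x). This is a simplified illustration: the function names, learning rate, and data are invented for the example, and the encryption of uploads in the third stage is omitted for brevity.

```python
def local_train(w, data, lr=0.01, epochs=5):
    """Stage 2: a node refines the model on its own (x, y) pairs."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(updates):
    """Stage 4: average client weights, weighted by local example counts."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def run_rounds(client_data, rounds=20, w0=0.0):
    w = w0  # Stage 1: the server initializes the global model
    for _ in range(rounds):
        # Stages 2-3: each node trains locally and uploads only (weight, count)
        updates = [(local_train(w, data), len(data)) for data in client_data]
        w = fed_avg(updates)  # Stage 4: aggregate into a new global model
    return w


if __name__ == "__main__":
    # Three nodes holding different amounts of data drawn from y = 3x.
    clients = [
        [(1, 3), (2, 6)],
        [(3, 9)],
        [(1, 3), (2, 6), (3, 9), (4, 12)],
    ]
    print(round(run_rounds(clients), 3))
```

Only the locally trained weight and the example count cross the network in this sketch; the (x, y) pairs never leave the node that holds them.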

Heterogeneity is the Achilles' heel of federated learning. Nodes may hold non-IID data (data that is not independent and identically distributed): one hospital treats cancer patients while another handles trauma, leading to divergent gradients that slow convergence. Devices may have wildly different computational power and connectivity, causing stragglers to delay aggregation. Adaptive aggregation strategies have emerged: systems dynamically choose between FedAvg (fast but sensitive to outliers) and FedSGD (robust but slower) based on real-time data divergence. One study achieved a 20% reduction in execution time by pruning half the parameters of a convolutional neural network, halving client training time on CIFAR-10 with minimal accuracy loss.

Communication overhead is another bottleneck. Transmitting model updates requires bandwidth; for large models (ResNet, VGG16), this can be prohibitive. Techniques like gradient sparsification (sending only the top-k gradients), quantization (reducing precision from 32-bit floats to 8-bit integers), and model compression address this. In one deployment, federated learning reduced communication cost by 60% compared to centralized training, while achieving less than a 2% accuracy drop. For IoT devices with limited battery and intermittent connectivity, federated edge computing processes data locally, sending only lightweight summaries to the cloud.
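
Two of these techniques, top-k sparsification and 8-bit quantization, are simple enough to sketch directly. The helper names below are illustrative, and the symmetric linear quantization scheme is one common choice among several, not a description of any specific deployment.

```python
def top_k_sparsify(grad, k):
    """Keep the k largest-magnitude entries, returned as {index: value}."""
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    return {i: grad[i] for i in sorted(order[:k])}


def quantize_int8(values):
    """Map floats to integers in [-127, 127] plus one shared scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(v / scale) for v in values], scale


def dequantize(ints, scale):
    """Recover approximate floats on the receiving side."""
    return [q * scale for q in ints]


if __name__ == "__main__":
    gradient = [0.02, -1.5, 0.3, 0.9, -0.05]
    sparse = top_k_sparsify(gradient, k=2)        # only 2 of 5 entries are sent
    ints, scale = quantize_int8(list(sparse.values()))
    print(sparse, ints, dequantize(ints, scale))
```

The receiver needs the surviving indices, the small integers, and the single scale value, which together are far smaller than the full 32-bit gradient. The cost is a rounding error of at most half the scale per entry, plus whatever signal was in the dropped entries.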

Layered Privacy: Combining DP and Federated Learning

The most robust systems layer differential privacy atop federated learning. Each node adds noise to its model updates before transmission, providing a mathematical guarantee even if the server or network is compromised. This approach mitigates inference attacks—adversarial attempts to reconstruct training data from model parameters. Experiments show that differential privacy reduces re-identification risk, though it introduces a privacy-utility trade-off: higher noise (lower ε) improves privacy but degrades model accuracy. In healthcare, a federated learning system with DP achieved 96.3% accuracy on medical image classification—comparable to centralized training—while preserving patient confidentiality.
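
A minimal sketch of the node-side step might look like the following. Here each update is clipped to a maximum L2 norm and Gaussian noise is added before upload. The names `clip_update`, `privatize_update`, and `noise_multiplier` are assumptions made for this example. The sketch does not compute the resulting ε, which depends on the noise multiplier, the number of rounds, and the accounting method used.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]


def privatize_update(update, max_norm, noise_multiplier, rng=random):
    """Clip, then add Gaussian noise with std = noise_multiplier * max_norm."""
    sigma = noise_multiplier * max_norm
    return [u + rng.gauss(0.0, sigma) for u in clip_update(update, max_norm)]


def average(updates):
    """Server-side mean of the (already noised) updates."""
    return [sum(column) / len(updates) for column in zip(*updates)]


if __name__ == "__main__":
    raw_updates = [[3.0, 4.0], [0.1, -0.2], [-6.0, 8.0]]
    noisy = [privatize_update(u, max_norm=1.0, noise_multiplier=0.5) for u in raw_updates]
    print(average(noisy))
```

Clipping bounds how much any one node can move the global model, and that bound is what makes the added noise meaningful. Because noise is added before transmission, the protection does not rely on trusting the server.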

Blockchain and smart contracts add another layer. Decentralized federated learning replaces the central server with a peer-to-peer network, eliminating the single point of failure. Smart contracts enforce data-sharing agreements, automatically logging consent and usage in an immutable ledger. This architecture is particularly valuable in healthcare, where GDPR and HIPAA mandate audit trails and patient control over data.

Industries Transformed

Healthcare is ground zero. TriNetX's Dataworks-USA Network aggregates de-identified electronic health records from 65 U.S. healthcare organizations—over 110 million patient records—using federated analytics. Researchers query this network to identify clinical trial sites, analyze disease prevalence, and benchmark treatments, all without accessing raw records. BC Platforms partners with TripleBlind to enable federated AI model training on genetic databases, harmonized to the OMOP Common Data Model. The result: faster drug discovery, improved diagnostics, and compliance with data sovereignty laws across Europe, Asia, and North America.

Finance is equally fertile ground. Federated learning allows competing banks to collaboratively train fraud detection models without exposing proprietary transaction data or trading strategies. Zurich Insurance partnered with telecom giant Orange to improve AI predictions by 30%—Orange's customer data enhanced Zurich's models without ever leaving Orange's servers. This collaboration unlocked new revenue streams and competitive advantages. By 2025, financial institutions use federated learning for credit scoring, anti-money-laundering, and algorithmic trading, reducing compliance costs while maintaining data security.

Consumer tech companies—Apple, Google, Meta—have embedded differential privacy into their telemetry pipelines. Apple's local DP protects emoji suggestions, Safari analytics, and macOS usage patterns by adding noise on-device before data reaches Apple's servers. Google's RAPPOR collects aggregate statistics on Chrome features without learning which user visited which site. Microsoft applies differential privacy to Windows telemetry, LinkedIn advertiser queries, Office suggested replies, and Workplace Analytics manager dashboards. These deployments demonstrate that privacy-preserving analytics can scale to billions of users while delivering actionable insights.

Education and government are late adopters but catching up. The U.S. Census Bureau's 2020 differential privacy release was a trial by fire—praised by privacy advocates, criticized by local officials who needed granular counts for redistricting. NIST's 2025 publication, "Guidelines for Evaluating Differential Privacy Guarantees" (SP 800-226), codifies best practices, offering interactive tools, flow charts, and sample code to help organizations design DP systems. The guidelines introduce a differential privacy pyramid that categorizes factors—data sensitivity, query complexity, privacy budget allocation—into a systematic risk assessment framework. This standardization is accelerating adoption in public health surveillance, education analytics, and census statistics worldwide.

Job Market and Skills

The rise of privacy-preserving analytics is reshaping the data profession. Demand has surged for privacy engineers, the specialists who implement differential privacy, federated learning, and confidential computing. Organizations seek talent fluent in cryptographic protocols, distributed systems, and regulatory compliance. Data scientists must now master DP libraries (Google's Differential Privacy Project, OpenDP, TensorFlow Privacy) and federated frameworks (TensorFlow Federated, Flower, NVIDIA FLARE, OpenFL). Privacy officers, once confined to legal departments, now sit at the table during system design, translating GDPR and CCPA requirements into technical specifications.

New roles are emerging: privacy budget managers, who allocate epsilon across queries to maximize utility; federated orchestration engineers, who optimize aggregation strategies for heterogeneous nodes; and differential privacy auditors, who verify that deployed systems adhere to their privacy claims. Salaries for these roles command premiums—privacy engineers in Silicon Valley earn 20-30% more than generalist software engineers.

Cultural Shifts and Public Trust

Public attitudes toward data collection are hardening. A 2024 survey found that 94% of consumers expect companies to protect their data, and 46% distrust AI due to accuracy and bias concerns. Privacy-preserving analytics offers a narrative reset: companies can demonstrate compliance, transparency, and respect for user autonomy. Apple markets differential privacy as a competitive differentiator, contrasting its on-device intelligence with competitors' cloud-centric, data-hungry models. This messaging resonates; consumers increasingly choose products that prioritize privacy, even at the cost of convenience.

However, privacy-preserving analytics also introduces new power dynamics. Large tech companies—Google, Apple, Microsoft—possess the engineering resources, data volumes, and expertise to deploy differential privacy and federated learning at scale. Smaller firms struggle with integration complexity and high implementation costs. This risks entrenching incumbents, as PETs become a barrier to entry rather than an equalizer. Open-source initiatives (OpenDP, Prio, TensorFlow Privacy) aim to democratize access, but the gap between theory and production-ready systems remains wide.

Regulatory Compliance Made Feasible

GDPR mandates data minimization, purpose limitation, and the right to erasure. Federated analytics supports all three: raw data stays at its origin, processing can be restricted to specified purposes, and local deletion simplifies erasure requests. Differential privacy is often proposed as a route to "anonymization" under GDPR, meaning data that cannot be re-identified even with auxiliary information. Legal scholars debate whether DP's probabilistic guarantees meet the "irreversible" standard, and guidance from Data Protection Authorities is not uniform, though some opinions have been favorable. Where it is accepted, differential privacy is increasingly treated as a safe harbor for data releases, reducing legal risk.

CCPA and CPRA impose transparency and opt-out requirements. Privacy-preserving analytics enables organizations to comply without sacrificing insights. Google Consent Mode, for instance, lets Google Analytics keep operating without setting cookies when users deny consent, relying on cookieless pings and modeled measurement in place of cookie-based collection. This architecture reduces privacy violations while maintaining measurement fidelity.

HIPAA and similar health privacy laws prohibit sharing identifiable patient data without consent. Federated analytics solves this: hospitals train models locally, sharing only aggregated statistics or model updates. A COPD remote monitoring system demonstrated this by integrating smartphone and smartwatch data with edge computing, delivering real-time analytics without transmitting raw health data to the cloud. Federated Electronic Health Record networks, harmonized to OMOP, enable cross-institutional research while respecting data sovereignty. Over 65 U.S. healthcare organizations now participate in such networks, unlocking insights from 110 million patient records.

Competitive Advantages and Revenue Growth

Organizations that deploy privacy-preserving analytics gain first-mover advantages. They can offer data-driven services—personalized recommendations, predictive analytics, AI-powered tools—without the compliance headaches and breach risks of centralized data lakes. Federated learning enables data partnerships that were previously untenable: competitors collaborate on fraud detection, hospitals co-train diagnostic models, and manufacturers improve predictive maintenance algorithms using data from multiple supply chain partners. Zurich Insurance's 30% accuracy gain from federated learning with Orange exemplifies the value.

Differential privacy unlocks previously untouchable datasets. Governments can release granular statistics on income, health, and demographics without privacy concerns. Companies can share aggregate customer behavior with third parties for benchmarking or research. Differential privacy also enables synthetic data generation: noised real data seeds generative models that produce realistic datasets for testing, training, and sharing. These synthetic datasets carry minimal privacy risk and can be disseminated freely, accelerating innovation.

Operational Efficiency and Cost Reduction

Federated analytics reduces infrastructure costs. Organizations avoid building massive centralized data warehouses, eliminating storage, transfer, and processing expenses. Data stays at its source, reducing bandwidth consumption—federated learning transmits only model updates, which are orders of magnitude smaller than raw datasets. One study found that federated learning's communication cost was 60% lower than centralized training. For IoT deployments—smart cities, industrial sensors, connected vehicles—this efficiency is critical; devices have limited battery and intermittent connectivity.

Federated analytics also lowers carbon footprints. Centralized data centers consume vast energy for storage and computation. Federated systems distribute computation to the edge, leveraging underutilized resources on smartphones, laptops, and local servers. By avoiding large data transfers and central warehouses, federated analytics reduces the environmental impact of AI and big data analytics—a selling point for organizations committed to sustainability.

Accelerated AI Adoption

Data scarcity is a top barrier to AI: 42% of organizations report insufficient proprietary data to customize generative AI models. Federated learning and differential privacy alleviate this. Organizations can train models on external datasets—partners, customers, even competitors—without data leaving its origin. This unlocks collaborative AI: pharmaceutical companies co-develop drug discovery models using patient data from multiple countries; automotive manufacturers improve autonomous driving algorithms with data from competing fleets; retailers enhance recommendation engines with federated training on cross-platform user behavior.

Differential privacy enables safe data augmentation. Organizations can publish differentially private synthetic data or aggregated features, which others use to bootstrap models. This accelerates innovation, especially for startups and researchers who lack access to large datasets.

The Privacy-Utility Trade-off

Differential privacy's core challenge is balancing noise and accuracy. Too little noise (high ε) leaks information; too much renders data useless. For small subpopulations, the trade-off is acute. The U.S. Census Bureau's 2020 differential privacy release protected overall state-level counts but distorted block-level data, especially for rural and tribal areas. Local governments complained they couldn't validate counts or draw accurate district boundaries. Critics argued that the noise disproportionately affected minority communities, whose small populations were masked more aggressively than large urban centers. This is the differential privacy paradox: the technique protects individuals by obscuring details, but those details are often essential for equity and representation.
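
A back-of-the-envelope calculation shows why small populations bear the brunt. It assumes, purely for illustration, a plain Laplace mechanism with sensitivity Δf = 1 and ε = 0.1 applied independently to each count. The Census Bureau's actual algorithm was considerably more elaborate, so these numbers describe the general effect rather than the 2020 release itself. The expected absolute error of Laplace noise equals its scale b:

$$
\mathbb{E}\,|\text{noise}| = b = \frac{\Delta f}{\varepsilon} = \frac{1}{0.1} = 10
\qquad
\frac{10}{20} = 50\% \ \text{(block of 20 residents)}
\qquad
\frac{10}{1{,}000{,}000} = 0.001\% \ \text{(state of one million)}
$$

The same absolute noise that is invisible at state level can swamp a block-level count.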

Choosing epsilon is more art than science. NIST recommends ε between 1 and 10 for machine learning, but these values depend on sensitivity, dataset size, and acceptable risk. A binary search over epsilon, calibrated to posterior disclosure risk, can optimize the trade-off, but requires statistical expertise. Many practitioners default to ε = 1 without rigorous analysis, risking either over-protection (useless data) or under-protection (privacy violations). Usability studies reveal that even technically skilled users struggle to configure differential privacy tools correctly; intuitive interfaces and clear privacy budget visualizations are essential but rare.

Budget Exhaustion and Query Limits

The privacy budget is finite. Each query consumes part of the allowable epsilon, and repeated queries exhaust the budget, forcing analysts to choose between halting analysis or weakening privacy. Apple's daily budget reset is a pragmatic workaround but reintroduces cumulative risk for users who contribute data every day. Organizations must plan analytics workloads carefully, prioritizing high-value queries and batching where possible. Dynamic budget allocation—adjusting epsilon based on query sensitivity and remaining budget—can extend utility, but adds complexity.

In practice, budget exhaustion limits long-term analytics. A healthcare consortium running federated learning across hospitals found that after six months of continuous training, differential privacy budgets were nearly depleted, forcing a reset that reintroduced privacy risk. This tension between ongoing insights and finite budgets is unresolved.

Implementation Vulnerabilities

Differential privacy's mathematical guarantees assume correct implementation. Real-world deployments face pitfalls: floating-point arithmetic leakage allows attackers to distinguish adjacent datasets from a single Laplace sample with over 35% probability; timing side-channel attacks exploit differences in computation time to infer private information. Robust implementations require fixed-point or integer-based noise generation, constant-time operations, and rigorous auditing—requirements that exceed the capabilities of most engineering teams.
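
One way to avoid floating-point noise altogether is to sample integer noise from a discrete Laplace distribution using only exact rational arithmetic. The sketch below follows the general approach of published exact samplers. The function names are illustrative, and it uses Python's `secrets` and `fractions` modules. It addresses the floating-point issue only: the loops are not constant-time, so the timing side channel mentioned above remains open.

```python
import secrets
from fractions import Fraction


def bernoulli(p: Fraction) -> int:
    """Exact Bernoulli(p) for rational p in [0, 1], using integer randomness."""
    return 1 if secrets.randbelow(p.denominator) < p.numerator else 0


def bernoulli_exp(x: Fraction) -> int:
    """Exact Bernoulli(exp(-x)) for rational x >= 0, with no floats involved."""
    while x > 1:  # exp(-x) = exp(-1) * exp(-(x - 1))
        if not bernoulli_exp(Fraction(1)):
            return 0
        x -= 1
    k = 1
    while bernoulli(x / k):  # the parity of the stopping index has the right bias
        k += 1
    return k % 2


def discrete_laplace(scale: Fraction) -> int:
    """Integer noise with P(n) proportional to exp(-|n| / scale)."""
    while True:
        magnitude = 0
        while bernoulli_exp(1 / scale):
            magnitude += 1
        negative = secrets.randbelow(2)
        if negative and magnitude == 0:
            continue  # reject "-0" so zero is not counted twice
        return -magnitude if negative else magnitude


def private_count_int(true_count: int, epsilon: Fraction, sensitivity: int = 1) -> int:
    """Integer count plus discrete Laplace noise of scale sensitivity / epsilon."""
    return true_count + discrete_laplace(Fraction(sensitivity) / epsilon)


if __name__ == "__main__":
    print([private_count_int(1000, Fraction(1, 10)) for _ in range(5)])
```

Passing ε as a `Fraction` (for example `Fraction(1, 10)`) keeps every intermediate value exact, which is the point of the exercise.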

Similarly, federated learning's privacy depends on aggregation security. If an adversary compromises the central server or intercepts model updates, they can mount inference attacks—reconstructing training data from gradients. Secure aggregation (encrypting updates so the server sees only the sum, not individual contributions) mitigates this, but adds computational overhead. Homomorphic encryption, which allows computation on encrypted data, offers stronger protection but remains impractically slow for large-scale deployments.
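
The core idea behind secure aggregation can be shown with pairwise masks that cancel in the sum. This is a deliberately stripped-down sketch: updates are single integers (as if already quantized), the shared masks are generated in one place rather than through a key agreement between each pair of nodes, and client dropout is ignored. The names `MODULUS`, `pairwise_masks`, and `mask_updates` are invented for the example.

```python
import secrets

MODULUS = 2**32  # all arithmetic is done modulo this value


def pairwise_masks(num_clients):
    """For each pair (i, j), add a shared random value to i and subtract it from j."""
    masks = [0] * num_clients
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = secrets.randbelow(MODULUS)  # stands in for a secret agreed by i and j
            masks[i] = (masks[i] + shared) % MODULUS
            masks[j] = (masks[j] - shared) % MODULUS
    return masks


def mask_updates(updates):
    """What each client would upload: its update hidden behind its net mask."""
    masks = pairwise_masks(len(updates))
    return [(u + m) % MODULUS for u, m in zip(updates, masks)]


def server_sum(masked_updates):
    """The server adds the uploads; the masks cancel, leaving only the total."""
    return sum(masked_updates) % MODULUS


if __name__ == "__main__":
    true_updates = [5, 17, 42]
    uploads = mask_updates(true_updates)
    print(uploads)              # individually these look like random numbers
    print(server_sum(uploads))  # equals 5 + 17 + 42
```

Each upload on its own reveals nothing useful about the client's value, yet the sum is exact. The overhead the paragraph mentions comes from the parts omitted here: establishing the pairwise secrets and recovering when a client drops out mid-round.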

Non-IID Data and Model Drift

Federated learning's performance degrades when data is heterogeneous. If one hospital treats pediatric patients and another treats geriatrics, their gradients diverge, slowing convergence and reducing accuracy. Data preparation—normalizing, augmenting, balancing classes—can mitigate heterogeneity, but requires domain knowledge and coordination across nodes. Adaptive aggregation (switching between FedAvg and FedSGD based on divergence) helps, but isn't a panacea.

Model drift is another risk. Nodes may update at different rates; a smartphone trains once a week, a data center trains hourly. Stale updates poison the global model, causing accuracy to plateau or regress. Asynchronous federated learning addresses this by weighting updates based on staleness, but introduces new hyperparameters to tune.
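
Staleness weighting can be sketched as a mixing rate that shrinks as an update ages. The polynomial decay used here is one commonly described choice, and `base_rate` and `decay` are exactly the kind of extra hyperparameters the paragraph warns about. All names are illustrative.

```python
def staleness_weight(staleness, base_rate=0.5, decay=0.5):
    """Mixing rate that decays polynomially with the update's age in rounds."""
    return base_rate * (1 + staleness) ** (-decay)


def apply_async_update(global_weights, client_weights, staleness,
                       base_rate=0.5, decay=0.5):
    """Blend a client's model into the global model, trusting stale updates less."""
    alpha = staleness_weight(staleness, base_rate, decay)
    return [(1 - alpha) * g + alpha * c
            for g, c in zip(global_weights, client_weights)]


if __name__ == "__main__":
    global_model = [0.0, 0.0]
    fresh = apply_async_update(global_model, [1.0, 1.0], staleness=0)
    stale = apply_async_update(global_model, [1.0, 1.0], staleness=3)
    print(fresh, stale)
```

A fresh update (staleness 0) moves the global model by the full base rate, while one that is three rounds old moves it by half as much under these settings.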

Inequality and the Digital Divide

Privacy-preserving analytics risks exacerbating inequality. Differential privacy's noise disproportionately obscures small populations—rural areas, minority groups, rare diseases—making them invisible in aggregated statistics. The 2020 Census controversy exemplifies this: critics argued that differential privacy introduced political bias, tilting redistricting toward urban, majority populations. While the Census Bureau defended the approach as necessary for privacy, the incident highlights a structural tension: privacy protections can conflict with equity and representation.

Federated learning's decentralization assumes that nodes have sufficient data and computational resources. In practice, hospitals in wealthy countries have well-curated electronic health records and powerful servers; clinics in low-income regions have sparse, paper-based records and unreliable infrastructure. Federated systems risk amplifying this divide, as wealthy nodes contribute more to the global model, biasing it toward their populations.

Regulatory Fragmentation

Global privacy regulations are inconsistent. GDPR demands strict data minimization and purpose limitation; China's PIPL imposes data localization; the U.S. has a patchwork of state laws (CCPA, CPRA, Virginia CDPA). Federated analytics can navigate this complexity—data never crosses borders—but differential privacy's status varies by jurisdiction. European Data Protection Authorities have issued conflicting guidance on whether differential privacy satisfies GDPR's anonymization requirement. Organizations deploying globally must interpret multiple legal frameworks, increasing compliance costs and legal uncertainty.

Europe: Regulation-Driven Adoption

Europe leads in privacy regulation and enforcement. GDPR's 2018 rollout forced organizations worldwide to adopt data minimization, encryption, and pseudonymization. Differential privacy and federated analytics emerged as compliance strategies. However, European Data Protection Authorities have taken divergent stances. After the Schrems II decision invalidated the EU-U.S. Privacy Shield, authorities in Austria, France, Italy, and the Netherlands found Google Analytics non-compliant, citing insufficient safeguards against U.S. surveillance. The 2023 EU-U.S. Data Privacy Framework restored adequacy, but skepticism persists. Federated analytics offers a workaround: data stays within the EU, sidestepping cross-border transfer risks.

European research consortia are pioneering federated analytics. The COVID-19 Host Genetics Initiative used federated queries to analyze genetic data from over 20,000 ICU patients across multiple countries, respecting data sovereignty while accelerating research. The European Health Data Space (EHDS) aims to create a continent-wide federated health data infrastructure, enabling cross-border research without centralizing records. These initiatives demonstrate Europe's preference for collaborative, regulated, privacy-first data ecosystems.

United States: Industry Leadership, Regulatory Catch-Up

The U.S. lacks a federal privacy law, but state regulations (CCPA, CPRA, Virginia CDPA) are proliferating. The California Privacy Protection Agency's 2024 enforcement advisory on data minimization signals a shift toward European-style mandates. Meanwhile, U.S. tech giants—Apple, Google, Microsoft—have deployed differential privacy at scale, driven by competitive differentiation and user demand. Apple's marketing emphasizes privacy as a core product feature, contrasting its on-device intelligence with cloud-centric competitors.

The U.S. government is a mixed bag. The Census Bureau's 2020 differential privacy release was contentious but groundbreaking. NIST's 2025 guidelines provide practical tooling, signaling institutional support. However, the DOJ's 2024 proposed rules on bulk data transfers to adversarial nations highlight a national security lens: privacy-preserving analytics becomes a tool to protect American data from foreign adversaries, not just commercial misuse.

U.S. industry also leads in open-source PETs. Google's Differential Privacy Project, OpenDP (a Microsoft-Harvard collaboration), and TensorFlow Privacy lower barriers to entry, democratizing access. Prio, a privacy-preserving aggregation protocol that originated in academic research at Stanford, was piloted by Mozilla for Firefox telemetry and has been used for COVID-19 exposure notification analytics.

China: Data Localization and Surveillance

China's Personal Information Protection Law (PIPL) and Cybersecurity Law impose strict data localization: operators of critical infrastructure and handlers of large volumes of personal data must store it domestically, and cross-border transfers are subject to regulatory mechanisms such as government security assessments. Federated analytics aligns well with this mandate because raw data never leaves the country. Chinese tech companies (Alibaba, Tencent, Baidu) are investing heavily in federated learning for e-commerce, fintech, and smart cities.

However, China's approach diverges sharply from Western privacy norms. The government demands backdoor access to data for surveillance and social credit systems. Privacy-preserving analytics, in this context, protects data from commercial misuse but not state access. Differential privacy and federated learning become tools for regime compliance, not individual autonomy.

Africa, Latin America, and Asia: Leapfrogging and Localization

Developing regions face unique challenges: limited infrastructure, sparse data, and weak regulatory frameworks. Federated analytics offers a leapfrog opportunity—building privacy-first systems without the legacy burden of centralized data lakes. Mobile-first economies in Africa and Asia can deploy federated learning on smartphones, sidestepping the need for expensive data centers.

However, adoption lags due to cost and complexity. Homomorphic encryption and secure multi-party computation require computational resources that many organizations lack. Open-source tools and cloud-based Federation-as-a-Service (AWS, Google Cloud, Snowflake) are lowering barriers, but the digital divide persists.

Federated models deployed elsewhere offer templates. CanDIG (Canada) enables cross-provincial genomic analyses without moving data across jurisdictional boundaries, respecting provincial privacy laws. Australian Genomics uses federated analytics to accelerate rare disease diagnosis across clinical sites. These models demonstrate that federated systems can address regional regulatory and infrastructure constraints.

Skills to Develop

Data professionals must acquire new competencies. Mastery of differential privacy libraries (Google's DP Project, OpenDP, TensorFlow Privacy) is essential, including an understanding of epsilon selection, sensitivity calculation, and composition theorems. Federated learning frameworks (TensorFlow Federated, Flower, NVIDIA FLARE, OpenFL) require fluency in distributed systems, secure aggregation, and adaptive optimization. Privacy budgeting, the allocation of epsilon across queries to maximize utility, is emerging as a specialized skill, combining statistics, optimization, and domain expertise.
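
To make epsilon and sensitivity concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query, written with the standard library only. It is illustrative rather than production-ready: the `private_count` and `laplace_noise` helpers and the sample ages are hypothetical, and naive floating-point noise sampling has known weaknesses that vetted libraries such as those named above are designed to handle.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Epsilon-DP count via the Laplace mechanism.

    Adding or removing one record changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only so the sketch is reproducible
ages = [23, 35, 41, 52, 67, 70, 29, 58]  # hypothetical records
noisy_over_50 = private_count(ages, lambda a: a > 50, epsilon=0.5, rng=rng)
```

Halving epsilon doubles the noise scale, which is the privacy-utility trade-off in its simplest form.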

Regulatory literacy is non-negotiable. Engineers must understand GDPR's anonymization requirements, CCPA's opt-out mechanics, HIPAA's de-identification standards, and emerging frameworks (CPRA, PIPL, EHDS). Legal and technical teams must collaborate to translate regulatory mandates into system designs.

Soft skills matter too. Privacy-preserving analytics requires cross-functional coordination: data scientists, privacy officers, infrastructure engineers, and business stakeholders must align on risk tolerance, utility requirements, and compliance obligations. Communication skills—explaining epsilon, privacy budgets, and federated architectures to non-technical audiences—are critical for adoption.

Organizational Strategies

Organizations should start small. Deploy differential privacy on low-sensitivity queries (aggregate counts, summary statistics) to build expertise and tune epsilon values. Pilot federated learning on a single use case—fraud detection, recommendation systems, predictive maintenance—before scaling. Leverage open-source tools and cloud-based Federation-as-a-Service to minimize upfront costs.
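
A federated learning pilot can be prototyped before any framework is chosen. The sketch below simulates federated averaging on a one-parameter model in plain Python. The client datasets, learning rate, and function names are assumptions made for illustration, and real frameworks add secure aggregation, client sampling, and failure handling that are omitted here.

```python
def local_update(weight, data, lr=0.01, epochs=5):
    """One client's local training on y ~ weight * x with gradient descent."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by local sample counts."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Hypothetical clients; every (x, y) pair follows y = 3x
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

global_weight = 0.0
for _ in range(50):  # communication rounds
    updates = [local_update(global_weight, data) for data in clients]
    global_weight = federated_average(updates, [len(d) for d in clients])
```

Only the scalar weights cross the client boundary in each round; the `(x, y)` pairs stay where they are. In this toy setup the global weight approaches 3 because all clients share the same underlying relationship, which is exactly the assumption that non-IID data breaks in practice.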

Establish a privacy budget governance process. Designate a privacy budget manager who allocates epsilon across teams and queries, tracks consumption, and flags budget exhaustion risks. Integrate privacy budgeting into analytics workflows: analysts request epsilon allocations, justify queries, and report utility metrics. This process prevents ad hoc queries from depleting budgets and ensures high-value analyses receive priority.
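
The bookkeeping behind such a process can start very simply. The following sketch assumes basic sequential composition, where the epsilons of successive queries add up. The `PrivacyBudgetLedger` class, team names, and query labels are hypothetical, not part of any named tool.

```python
class PrivacyBudgetLedger:
    """Tracks epsilon spent against a fixed total, assuming basic composition."""

    def __init__(self, total_epsilon):
        if total_epsilon <= 0:
            raise ValueError("total_epsilon must be positive")
        self.total_epsilon = total_epsilon
        self.entries = []  # (team, query, epsilon) tuples

    @property
    def spent(self):
        return sum(eps for _, _, eps in self.entries)

    @property
    def remaining(self):
        return self.total_epsilon - self.spent

    def request(self, team, query, epsilon):
        """Approve and record the query if the budget allows; otherwise refuse."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self.remaining + 1e-12:  # small tolerance for float error
            return False
        self.entries.append((team, query, epsilon))
        return True

    def spent_by_team(self):
        totals = {}
        for team, _, eps in self.entries:
            totals[team] = totals.get(team, 0.0) + eps
        return totals


ledger = PrivacyBudgetLedger(total_epsilon=1.0)
approved_fraud = ledger.request("fraud", "weekly flagged-transaction count", 0.4)
approved_cohort = ledger.request("marketing", "cohort summary", 0.5)
approved_adhoc = ledger.request("marketing", "ad hoc breakdown", 0.3)  # refused
```

The refused request is the point of the exercise: an ad hoc query cannot silently consume budget that a higher-value analysis may need later.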

Build a federated operating model. Create a central analytics team that owns shared infrastructure (federated servers, aggregation algorithms, privacy tools) and governance (standards, training, compliance). Embed analytics teams within business units to deliver localized insights. Foster a governance community—regular forums where teams share learnings, coordinate on privacy budgets, and standardize practices. This federated structure balances autonomy and alignment, avoiding the fragmentation that plagues distributed analytics.

Invest in tooling and automation. Differential privacy and federated learning are operationally complex; manual configuration invites errors. Automate epsilon selection via binary search over posterior risk, implement dynamic privacy budget decay to extend utility, and deploy adaptive aggregation to handle non-IID data. Use Trusted Research Environments (TREs) with secure "airlock" processes to ensure that code entering the environment is safe and results exiting are non-disclosive.
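
One way to read "binary search over posterior risk" is the following sketch, which is an interpretation rather than a documented procedure. It relies on a standard consequence of epsilon-differential privacy: an adversary's posterior odds about one individual can exceed the prior odds by at most a factor of e^epsilon. The function names, the prior of 0.5, and the 0.75 risk ceiling are assumptions chosen for illustration.

```python
import math


def posterior_bound(epsilon, prior):
    """Upper bound on adversary posterior belief under epsilon-DP."""
    odds = math.exp(epsilon) * prior / (1.0 - prior)
    return odds / (1.0 + odds)


def largest_epsilon(max_posterior, prior=0.5, hi=20.0, tol=1e-9):
    """Binary search for the largest epsilon whose bound stays within max_posterior."""
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    if not prior < max_posterior < 1.0:
        raise ValueError("max_posterior must lie between prior and 1")
    lo = 0.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if posterior_bound(mid, prior) <= max_posterior:
            lo = mid  # still within the risk ceiling, try a larger epsilon
        else:
            hi = mid
    return lo


eps = largest_epsilon(max_posterior=0.75, prior=0.5)
```

This particular bound also has a closed form, so the search is not strictly necessary here; it becomes useful when the risk function is more complex and can only be evaluated numerically.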

How to Adapt as a Consumer

Individuals can demand privacy-preserving practices. Choose products that use differential privacy and federated learning—Apple's on-device intelligence, Google's federated Gboard, privacy-first analytics platforms. Read privacy policies; organizations increasingly disclose their use of PETs. Exercise opt-out rights under CCPA and GDPR; reduced data collection limits the value of centralized analytics, incentivizing companies to adopt federated approaches.

Stay informed. Privacy-preserving analytics is evolving rapidly; new techniques (synthetic data, confidential computing, zero-knowledge proofs) will emerge. Follow NIST guidelines, academic research, and industry blogs to understand how your data is protected—or not.

Advocate for transparency. Demand that organizations disclose their epsilon values, privacy budgets, and aggregation methods. Transparency enables accountability and helps users assess risk. Differential privacy's promise—that your presence or absence in a dataset makes no difference—only holds if implementations are correct and honestly reported.
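
Stated formally, under the standard definition, a randomized mechanism $M$ is $\varepsilon$-differentially private if, for every pair of datasets $D$ and $D'$ that differ in one individual's record and every set of possible outputs $S$:

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[M(D') \in S]
$$

"No difference" therefore means a bounded difference: the smaller the $\varepsilon$, the closer the two probabilities must be. That is why a disclosed epsilon value is the single most informative number a user can ask for.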

By 2035, privacy-preserving analytics will be infrastructure, not innovation. Differential privacy and federated learning will be embedded in databases, cloud platforms, and AI frameworks as default behaviors rather than opt-in features. The global PETs market's projected growth to $28.4 billion by 2034 reflects this trajectory. Regulatory mandates will accelerate adoption: GDPR's ongoing scrutiny, the European Health Data Space, and U.S. state laws are pushing organizations toward privacy by design.

Yet challenges remain. The privacy-utility trade-off will persist—differential privacy's noise will continue to obscure small populations, and federated learning's heterogeneity will slow convergence. Research is addressing these: advanced composition theorems tighten privacy budgets, adaptive aggregation mitigates non-IID data, and synthetic data generation offers high-utility, low-risk alternatives. But fundamental limits exist; perfect privacy and perfect utility are mathematically incompatible.
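
The effect of tighter composition can be seen with a short calculation. The sketch below compares basic composition, where k queries at epsilon each cost k times epsilon, with the standard advanced composition bound, which trades a small failure probability for a smaller total. The function names and the example values (100 queries at epsilon 0.1, failure probability 1e-6) are assumptions for illustration.

```python
import math


def basic_composition(epsilon, k):
    """Total epsilon for k epsilon-DP queries under basic composition."""
    return k * epsilon


def advanced_composition(epsilon, k, delta_prime):
    """Total epsilon under advanced composition.

    The combined guarantee is (epsilon_total, delta_prime)-DP for
    k pure epsilon-DP queries.
    """
    return (math.sqrt(2.0 * k * math.log(1.0 / delta_prime)) * epsilon
            + k * epsilon * (math.exp(epsilon) - 1.0))


basic_total = basic_composition(epsilon=0.1, k=100)
advanced_total = advanced_composition(epsilon=0.1, k=100, delta_prime=1e-6)
```

For many queries at a small per-query epsilon, the advanced bound is noticeably smaller than the basic one. The advantage shrinks or reverses for few queries or large per-query epsilon, which is one reason budget accounting is treated as a specialist task.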

The political economy of privacy will shape outcomes. Large tech companies control the expertise, resources, and data volumes to deploy PETs at scale. Open-source initiatives and regulatory mandates can democratize access, but the risk of entrenchment is real. If only giants can afford robust privacy-preserving analytics, the technology will consolidate power, not distribute it.

The societal bargain is also unresolved. Differential privacy protects individuals but can undermine collective goals—accurate census counts, equitable redistricting, representation of marginalized groups. Federated analytics enables collaboration but assumes participants have resources and data. Balancing individual privacy, collective utility, and equity demands not just technical solutions but political choices about whose privacy matters, what insights are essential, and how to distribute the costs and benefits.

The next decade will determine whether privacy-preserving analytics fulfills its promise or becomes another tool for incumbent advantage. The mathematics is sound, the tools are maturing, and the regulatory momentum is building. But technology alone won't decide the outcome. Organizations, regulators, and individuals must engage—demanding transparency, investing in skills, and designing systems that serve not just efficiency and compliance, but also fairness, representation, and human dignity. The revolution is here. The question is: who will it serve?

