
TL;DR: Onboard autonomy, increasingly driven by machine learning, lets spacecraft navigate around asteroids on their own, making split-second decisions that can't wait out round-trip communication delays of around 40 minutes. Recent missions show that spacecraft can conduct complex science with little real-time human oversight.

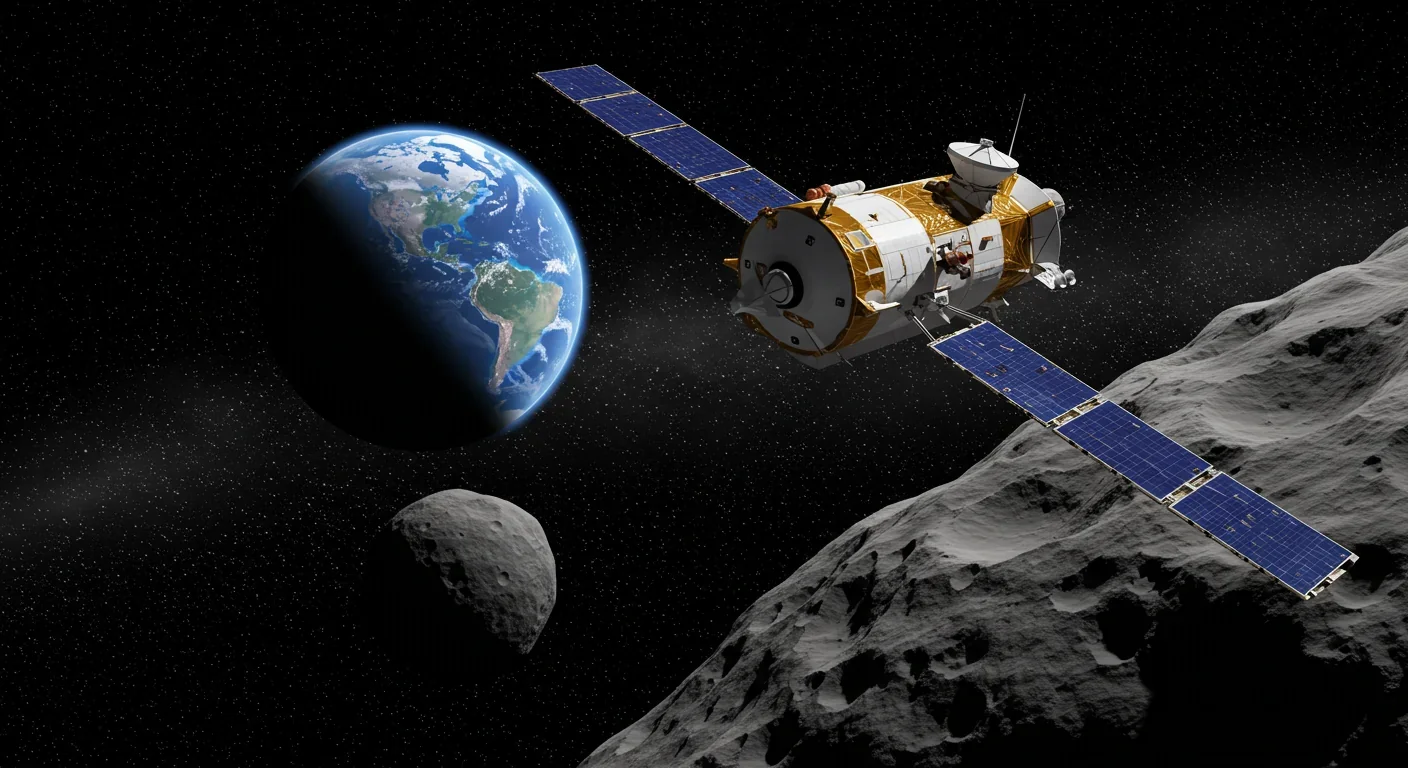

Picture a spacecraft the size of a car, alone in the asteroid belt, 200 million miles from Earth. A message from mission control takes about 18 minutes to arrive, and another 18 for a reply to come back. When you're closing in on a tumbling rock, a 36-minute round trip might as well be 36 years. The only option? Let the spacecraft think for itself.
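The delay itself is just distance divided by the speed of light. A minimal Python sketch (the helper name is ours, for illustration only) reproduces the figures for a spacecraft 200 million miles out:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the meter
METERS_PER_MILE = 1_609.344             # international mile


def one_way_delay_minutes(distance_miles: float) -> float:
    """Minutes a radio signal needs to cover distance_miles one way."""
    distance_m = distance_miles * METERS_PER_MILE
    return distance_m / SPEED_OF_LIGHT_M_PER_S / 60.0


if __name__ == "__main__":
    one_way = one_way_delay_minutes(200e6)
    print(f"one-way: {one_way:.1f} min, round trip: {2 * one_way:.1f} min")
    # one-way: 17.9 min, round trip: 35.8 min
```

Real delays also depend on where Earth and the spacecraft sit in their orbits, so the distance itself changes over a mission.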

This isn't science fiction anymore. In September 2023, the sample capsule from NASA's OSIRIS-REx landed in Utah carrying pristine asteroid material that ultimately weighed in at 121.6 grams, roughly double the mission's 60-gram target. Three years earlier, the spacecraft had navigated autonomously down to the surface of asteroid Bennu, steering toward its sample site by recognizing surface landmarks and touching down within about a meter of its target. No human hands on the controls. Just onboard navigation software making split-second decisions in the loneliest environment imaginable.

We're witnessing a fundamental shift in how humanity explores space. Autonomous spacecraft aren't just taking instructions anymore—they're making judgment calls that would stump veteran mission controllers. And as companies like Karman+ prepare to launch asteroid mining missions by 2027, the question isn't whether AI will run deep-space exploration. It's whether we're ready for what happens when machines become better at space travel than we are.

For decades, spacecraft were expensive remote-controlled cars. Voyager 1 and 2, launched in 1977, carried surprisingly simple computers with less processing power than a modern smart toaster. Every maneuver, every course correction, every photograph required human approval transmitted across millions of miles.

An early turning point came with Japan's Hayabusa mission, launched in 2003. When it reached asteroid Itokawa in 2005, its final descents relied on onboard guidance because signal delays ruled out piloting from Earth. Along the way, two of its three reaction wheels failed, a fuel leak after its touchdowns sent it tumbling, and contact was lost for weeks. Engineers coaxed it home on its ion engines, and in 2010 it delivered roughly 1,500 tiny grains of asteroid dust. The haul was small, but it proved something more valuable: an asteroid mission could survive failures that would have ended most spacecraft.

Fast-forward to 2018. NASA's twin MarCO CubeSats, each about the size of a briefcase, launched alongside the InSight lander, flew to Mars on their own, and relayed InSight's telemetry back to Earth during its descent. Their relay sequence was planned in advance, but once landing began it had to run without intervention: the whole descent was over in about seven minutes, faster than a signal could make the round trip.

The asteroid missions that followed weren't incremental improvements; they were leaps into uncharted territory. Communication delays were no longer just inconvenient; they could kill a mission. When you're 200 million miles from Earth, the round-trip signal delay stretches to about 36 minutes. If the spacecraft spots a boulder in its path, waiting for human instructions means collision, not correction.

So how does a spacecraft see an asteroid it's never visited? The answer lies in three interconnected AI systems working in concert: computer vision for perception, reinforcement learning for decision-making, and adaptive control for execution.

Perception comes first. Onboard software can analyze camera images in real time, classifying terrain as safe or hazardous far faster than a human analyst on the ground could respond. NASA's Natural Feature Tracking (NFT) system on OSIRIS-REx compared its descent images against a catalog of distinctive rocks and craters on Bennu's surface, mapped in advance, and used them as navigation landmarks. Think of it as the spacecraft turning asteroid boulders into its own GPS constellation.
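NFT's flight implementation isn't reproduced here, but the core idea, finding a known landmark in a fresh image, can be sketched with zero-mean normalized cross-correlation, a standard template-matching score that tolerates changes in overall brightness. The function name, the synthetic terrain, and the planted "boulder" below are hypothetical, and the brute-force search is chosen for clarity rather than speed.

```python
import numpy as np


def locate_feature(image: np.ndarray, template: np.ndarray) -> tuple[int, int]:
    """Return (row, col) of the top-left corner where template best matches image,
    scored by zero-mean normalized cross-correlation (brute force, for clarity)."""
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t * t).sum())
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            p = image[r:r + th, c:c + tw]
            p = p - p.mean()
            denom = np.sqrt((p * p).sum()) * t_norm
            if denom == 0:
                continue  # flat patch: correlation undefined, skip it
            score = (p * t).sum() / denom
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    terrain = rng.normal(0.5, 0.05, size=(64, 64))  # synthetic surface texture
    boulder = rng.normal(0.5, 0.3, size=(8, 8))     # distinctive landmark patch
    terrain[20:28, 35:43] = boulder                 # plant the landmark
    new_view = terrain + rng.normal(0.0, 0.02, terrain.shape)  # noisier re-image
    print(locate_feature(new_view, boulder))  # (20, 35), the planted location
```

Because the score subtracts each patch's mean and divides by its contrast, a uniformly brighter or dimmer view of the same boulder still scores near 1.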

But seeing isn't enough; you need to make decisions. That's where reinforcement learning comes in, the same family of algorithms that beat top human players at Go and StarCraft II. Instead of board games, researchers are training these systems to navigate gravitational fields, plan fuel-efficient trajectories, and react to unexpected tumbling.
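To make the idea concrete, here is a deliberately tiny tabular Q-learning example, far simpler than the deep reinforcement learning used in research. The 1-D approach problem, its rewards, and every name in it are hypothetical: by trial and error, the agent learns to close on a target and arrive gently while paying a penalty for every thrust.

```python
import random

POSITIONS = range(0, 10)   # grid cells from the target (0 = contact)
VELOCITIES = range(-2, 3)  # cells per step; negative = closing on the target
ACTIONS = (-1, 0, 1)       # change velocity by -1 (toward), 0 (coast), +1 (away)


def step(p, v, a):
    """Toy dynamics; returns (next_p, next_v, reward, done)."""
    v2 = max(-2, min(2, v + a))
    p2 = p + v2
    reward = -1.0 - 0.5 * abs(a)  # time cost plus a fuel cost for thrusting
    if p2 < 0 or (p2 == 0 and abs(v2) > 1):
        return p2, v2, reward - 20.0, True  # overshoot or hard impact
    if p2 == 0:
        return p2, v2, reward + 20.0, True  # gentle contact
    if p2 > 9:
        return p2, v2, reward - 20.0, True  # drifted out of the operating zone
    return p2, v2, reward, False


def train(episodes=10_000, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration from random starts."""
    rng = random.Random(seed)
    q = {(p, v, a): 0.0 for p in POSITIONS for v in VELOCITIES for a in ACTIONS}
    for _ in range(episodes):
        p, v = rng.randint(1, 9), rng.choice(VELOCITIES)
        for _ in range(50):  # cap episode length
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: q[(p, v, act)])
            p2, v2, r, done = step(p, v, a)
            # Target: reward plus the discounted best value of the next state.
            target = r if done else r + gamma * max(q[(p2, v2, b)] for b in ACTIONS)
            q[(p, v, a)] += alpha * (target - q[(p, v, a)])
            if done:
                break
            p, v = p2, v2
    return q


def greedy_rollout(q, p=9, v=0, max_steps=30):
    """Follow the learned policy greedily; True if it ends in gentle contact."""
    for _ in range(max_steps):
        a = max(ACTIONS, key=lambda act: q[(p, v, act)])
        p, v, _, done = step(p, v, a)
        if done:
            return p == 0 and abs(v) <= 1
    return False


if __name__ == "__main__":
    print(greedy_rollout(train()))  # should print True once training has converged
```

Nothing in the code says "brake before arrival"; that behavior emerges from the rewards, which is the appeal (and the risk) of learned policies.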

One recent study reported that transformer-based policies significantly outperformed older LSTM networks at autonomous spacecraft rendezvous under delayed observations, the exact scenario asteroid missions face. The goal is a policy that doesn't memorize one solution but learns general strategies: how to trade velocity for stability, when to abort an approach, how to recover from sensor failures.

Then there's adaptive control, the layer that translates decisions into thruster firings and reaction wheel adjustments. Horizon-based asteroid navigation algorithms, for instance, build edge detection and synthetic image generation into the control loop, letting a spacecraft predict what the asteroid should look like from a new vantage point before actually moving there.
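Horizon-based methods differ in their details; one simple version of the idea, sketched here under stated assumptions, fits a circle to the lit edge (limb) of the asteroid in the image and converts its apparent size into range. We assume a spherical body of known radius seen near the camera's optical axis and a pinhole camera with a known focal length in pixels. Edge detection is skipped, so the limb points are given directly; all names are illustrative.

```python
import numpy as np


def fit_circle(xs, ys):
    """Algebraic (Kasa) least-squares circle fit to edge points; returns (cx, cy, r)."""
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray(ys, dtype=float)
    # Circle: x^2 + y^2 + D*x + E*y + F = 0, which is linear in D, E, F.
    A = np.column_stack([xs, ys, np.ones_like(xs)])
    b = -(xs**2 + ys**2)
    (D, E, F), *_ = np.linalg.lstsq(A, b, rcond=None)
    cx, cy = -D / 2.0, -E / 2.0
    return cx, cy, float(np.sqrt(cx**2 + cy**2 - F))


def range_from_limb(radius_px, focal_px, body_radius_m):
    """Distance to the centre of a sphere whose limb appears radius_px in the image.
    Pinhole model: tan(half_angle) = radius_px / focal_px, and sin(half_angle) = R / d."""
    half_angle = np.arctan(radius_px / focal_px)
    return body_radius_m / np.sin(half_angle)


if __name__ == "__main__":
    # Synthetic check: only the sunlit half of the limb is visible.
    R, d_true, f = 245.0, 1000.0, 2000.0
    r_px = f * np.tan(np.arcsin(R / d_true))
    theta = np.linspace(-np.pi / 2, np.pi / 2, 50)
    xs, ys = 512 + r_px * np.cos(theta), 480 + r_px * np.sin(theta)
    _, _, r_fit = fit_circle(xs, ys)
    print(round(float(range_from_limb(r_fit, f, R)), 3))  # ~1000.0, the true range
```

Fitting works from a partial arc, which matters because only the sunlit part of the limb shows up as a sharp edge.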

These three systems don't operate in isolation; they form a closed loop. Vision feeds perception data to the learning algorithms, which make high-level decisions, which adaptive controllers refine into maneuvers, which in turn generate new images. The fastest inner loops, such as attitude control, can repeat many times per second, with no human input.
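Here is a deliberately simplified 1-D version of that loop, under loud assumptions: a noisy range sensor stands in for the vision system, a textbook alpha-beta filter stands in for a full navigation filter, and a fixed PD law toward a hold point stands in for the decision and control layers. The function name, gains, and limits are hand-picked for illustration, not flight values.

```python
import random


def simulate_hold(target=50.0, start=200.0, steps=600, dt=1.0, noise_m=0.5, seed=1):
    """Toy 1-D closed loop (sense -> estimate -> decide -> act), repeated every dt s.
    Returns the final true distance (m) from the asteroid."""
    rng = random.Random(seed)
    pos, vel = start, 0.0          # true state, hidden from the controller
    est_pos, est_vel = start, 0.0  # onboard estimate
    alpha, beta = 0.5, 0.05        # alpha-beta filter gains (hand-picked)
    kp, kd = 0.02, 0.3             # PD gains (hand-picked)
    max_accel = 1.0                # thruster authority, m/s^2
    accel = 0.0                    # last command sent to the thrusters
    for _ in range(steps):
        # Act + physics: the last command moves the true spacecraft.
        vel += accel * dt
        pos += vel * dt
        # Perceive: a noisy range measurement.
        z = pos + rng.gauss(0.0, noise_m)
        # Estimate: predict with the same model and known command, then correct.
        est_vel += accel * dt
        est_pos += est_vel * dt
        residual = z - est_pos
        est_pos += alpha * residual
        est_vel += beta * residual / dt
        # Decide: PD law toward the hold point, clipped to thruster limits.
        accel = -kp * (est_pos - target) - kd * est_vel
        accel = max(-max_accel, min(max_accel, accel))
    return pos


if __name__ == "__main__":
    print(round(simulate_hold(), 1))  # settles close to the 50 m hold point
```

Feeding the known thrust command into the filter's prediction step keeps the estimator and controller from fighting each other, which is why the loop settles smoothly despite the sensor noise.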

Perhaps most remarkably, much of this training happens on Earth before launch. Scientists generate synthetic asteroid images using known rotation periods (Bennu spins once every 4.3 hours, for instance) and realistic lighting conditions. A navigation system can rehearse enormous numbers of virtual approaches before ever leaving the ground, building an intuition for asteroid navigation that would take human pilots lifetimes to develop.
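A toy version of that synthetic-image generation is sketched below: a sphere shaded with Lambert's cosine law, spun about one axis using the 4.3-hour period mentioned above, with a single dark spot so the spin is visible. Real pipelines render detailed shape models with physically based lighting; the function name, the spot, and the randomization ranges here are hypothetical.

```python
import numpy as np

BENNU_PERIOD_H = 4.3  # rotation period quoted in the text, hours


def render_asteroid(t_hours, sun_dir=(1.0, 0.0, 1.0), size=64, spot_lon_deg=0.0):
    """Render a toy 'asteroid' (Lambertian sphere with one dark spot) at t_hours.

    The camera looks along -z; the body spins about the image's vertical (y) axis.
    Returns a (size, size) array of brightness values in [0, 1].
    """
    coords = np.linspace(-1.0, 1.0, size)
    x, y = np.meshgrid(coords, -coords)        # image plane, y pointing up
    r2 = x**2 + y**2
    on_disk = r2 <= 1.0
    z = np.sqrt(np.clip(1.0 - r2, 0.0, None))  # visible hemisphere faces the camera
    normals = np.stack([x, y, z], axis=-1)     # unit surface normals on the disk

    sun = np.asarray(sun_dir, dtype=float)
    sun = sun / np.linalg.norm(sun)
    shading = np.clip(normals @ sun, 0.0, None)  # Lambert's cosine law

    # Undo the spin to get each visible point's body-fixed longitude.
    phase = 2.0 * np.pi * t_hours / BENNU_PERIOD_H
    lon = np.arctan2(x, z) - phase
    dlon = np.angle(np.exp(1j * (lon - np.radians(spot_lon_deg))))  # wrap to (-pi, pi]
    spot = (np.abs(dlon) < 0.3) & (np.abs(y) < 0.3)
    albedo = np.where(spot, 0.3, 1.0)

    return np.where(on_disk, albedo * shading, 0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical randomized training batch: random spin phase and sun direction.
    batch = [
        render_asteroid(rng.uniform(0, BENNU_PERIOD_H),
                        sun_dir=(rng.uniform(-1, 1), rng.uniform(-0.3, 0.3), 1.0))
        for _ in range(8)
    ]
    print(len(batch), batch[0].shape)  # 8 (64, 64)
```

Randomizing spin phase and lighting is what lets a network trained on such images cope with views it has never seen exactly.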

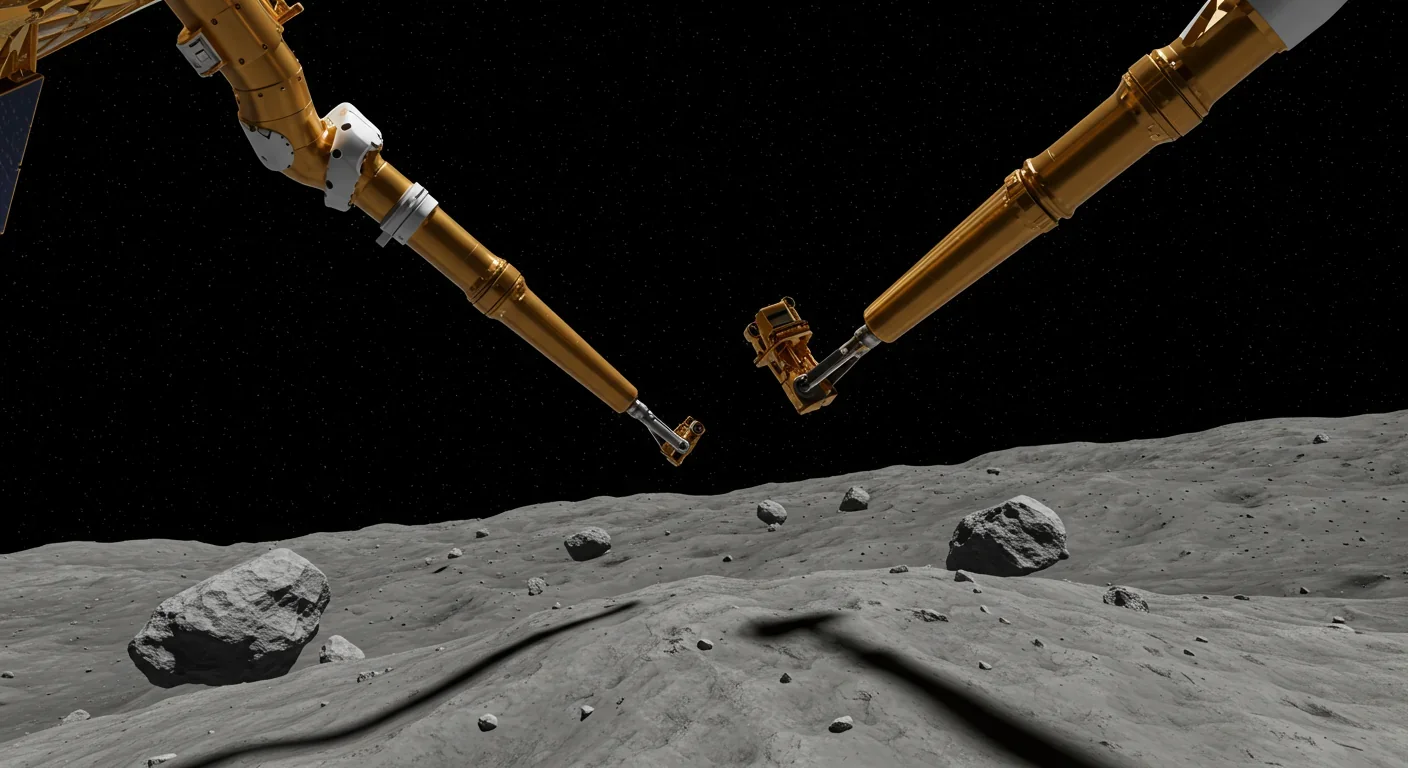

October 20, 2020. More than four years after launch and nearly two years after arriving at asteroid Bennu, NASA's OSIRIS-REx spacecraft descended toward the surface for a Touch-and-Go maneuver that would keep it in contact with the asteroid for only a few seconds. The spacecraft would extend its 11-foot robotic arm with three articulated joints, touch the surface, fire a burst of nitrogen gas to stir up dust and pebbles, and capture them in a collection chamber, all while matching the motion of the spinning asteroid's surface.

The landing site, called Nightingale, was the size of a few parking spaces in a crater surrounded by building-sized boulders. If OSIRIS-REx drifted more than a meter or two off course, it would crash into rock walls or miss the safe zone entirely. Mission control couldn't help because by the time radio signals reached Earth and instructions returned, the maneuver would be ancient history.

So the spacecraft did it alone. Using Natural Feature Tracking, OSIRIS-REx identified 1,500 distinct surface features (rocks, craters, color variations) and used them to calculate its position and velocity. It touched down within about a meter of its target point, inside a site only a few parking spaces wide.
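NFT's actual estimator isn't detailed here, but the geometry behind "landmarks give position" can be sketched as least-squares triangulation: each recognized feature with a known location constrains the spacecraft to lie along a measured line of sight. This sketch assumes the line-of-sight directions are already expressed in the asteroid's frame (that is, attitude is known); the names and numbers are illustrative, and flight systems typically fold such measurements into a navigation filter rather than solving each image independently.

```python
import numpy as np


def position_from_bearings(landmarks, bearings):
    """Least-squares position from line-of-sight directions to known landmarks.

    landmarks: (N, 3) landmark positions in the asteroid-fixed frame.
    bearings:  (N, 3) directions from the spacecraft toward each landmark,
               already rotated into the same frame (attitude assumed known).
    Minimizing the summed squared perpendicular distance between each landmark and
    its measured ray gives (sum_i P_i) x = sum_i P_i p_i, with P_i = I - u_i u_i^T.
    """
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for p, u in zip(np.asarray(landmarks, float), np.asarray(bearings, float)):
        u = u / np.linalg.norm(u)
        P = np.eye(3) - np.outer(u, u)  # projects out the along-ray component
        A += P
        b += P @ p
    return np.linalg.solve(A, b)  # needs at least two non-parallel bearings


if __name__ == "__main__":
    features = np.array([[0.0, 0.0, 0.0], [100.0, 0.0, 0.0],
                         [0.0, 100.0, 0.0], [50.0, 50.0, -20.0]])
    truth = np.array([30.0, -40.0, 500.0])
    rng = np.random.default_rng(0)
    dirs = features - truth + rng.normal(0.0, 0.05, features.shape)  # slightly noisy
    print(np.round(position_from_bearings(features, dirs), 1))  # close to truth
```

Repeating the fix on successive images and comparing positions gives a rough velocity, which is the other half of what the text says NFT computed.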

The precision was absurd. Bennu's gravity is so weak that a careless touch could bounce the spacecraft back off the surface, so the contact had to be tightly controlled. Too slow and the collection mechanism wouldn't capture enough material. Too fast and the spacecraft would crash. The guidance system threaded that needle, and the collector gathered so much material that rocks wedged open the flap meant to seal it and some of the sample leaked out: about as good a problem as a sample-return mission can have.
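A quick calculation shows just how weak that gravity is. Using approximate published values for Bennu's gravitational parameter (about 4.89 m^3/s^2) and mean radius (about 245 m), and ignoring its spin and irregular shape, the escape velocity from the surface comes out near 20 centimeters per second, far slower than walking pace:

```python
import math

# Approximate published values for Bennu; treat them as illustrative.
BENNU_GM = 4.89       # gravitational parameter G*M, m^3/s^2
BENNU_RADIUS = 245.0  # mean radius, m


def escape_velocity(gm: float, radius: float) -> float:
    """Speed needed to escape from a body's surface: v = sqrt(2GM/r), in m/s."""
    return math.sqrt(2.0 * gm / radius)


if __name__ == "__main__":
    v = escape_velocity(BENNU_GM, BENNU_RADIUS)
    print(f"Bennu escape velocity ~ {v * 100:.0f} cm/s")  # Bennu escape velocity ~ 20 cm/s
```

For comparison, Earth's escape velocity is about 11.2 km/s, more than fifty thousand times higher.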

But the real breakthrough wasn't the sample collection; it was what happened during the two years OSIRIS-REx spent studying Bennu before the touchdown. The spacecraft mapped the asteroid's entire surface, analyzed its composition, measured its rotation, detected particles being ejected from the surface, and adjusted its orbit dozens of times. Most of that work ran as command sequences prepared on the ground, but the spacecraft carried them out over long stretches without real-time supervision, relying on onboard fault protection to keep itself safe.

When OSIRIS-REx's sample capsule parachuted into the Utah desert in September 2023, it validated something bigger than asteroid science. It showed that a spacecraft could carry out complex scientific fieldwork with little real-time human supervision across interplanetary distances.

If OSIRIS-REx was the proof of concept, ESA's Hera mission is the production model. Launched in October 2024 aboard a Falcon 9, Hera is heading to the Didymos asteroid system to study the aftermath of NASA's DART mission, which deliberately crashed into the small moon Dimorphos in 2022 and shortened its orbital period by 32 minutes, the first demonstration of a planetary-defense technique.

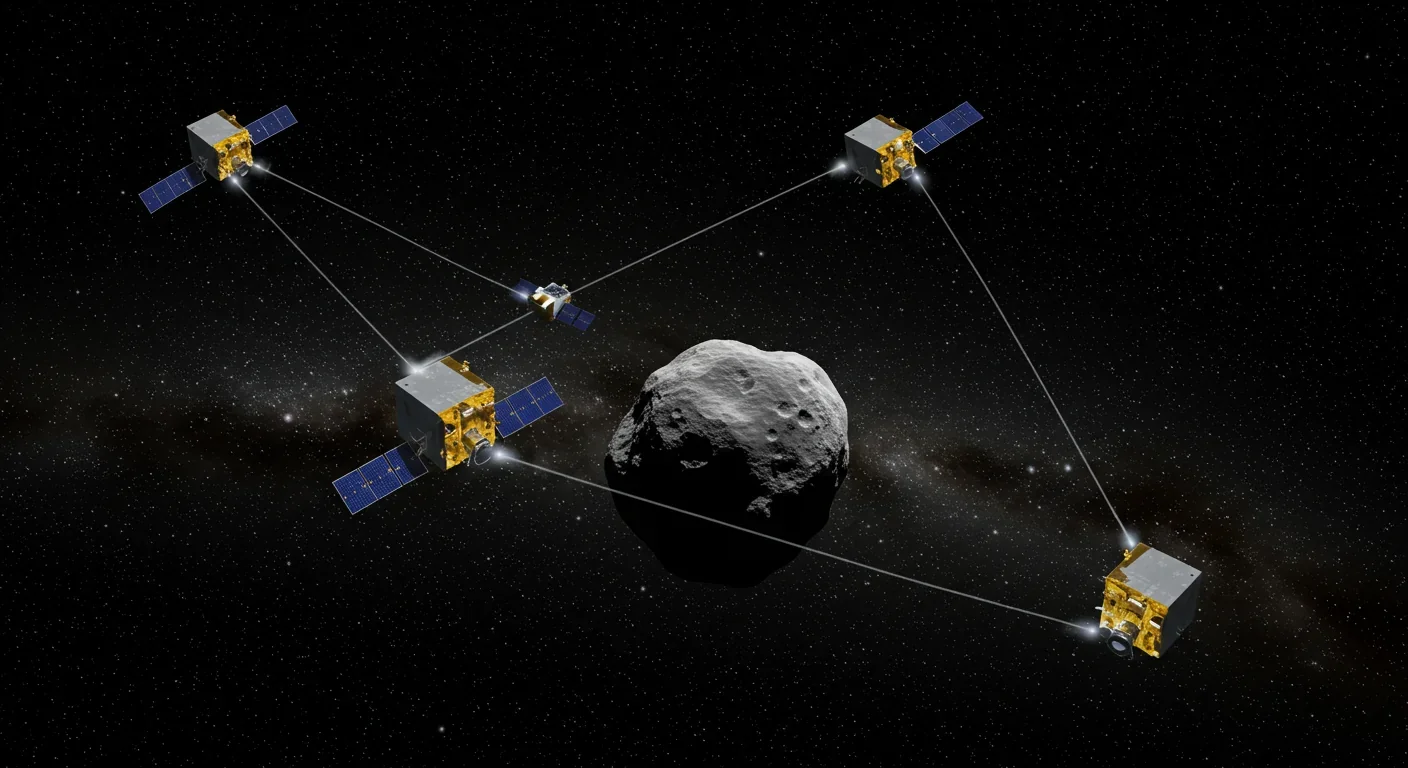

But Hera isn't traveling alone. It carries two CubeSat companions, Milani and Juventas, each about the size of a shoebox. These aren't passive passengers. They're autonomous science platforms linked to Hera by inter-satellite communications, so the three spacecraft can share data and coordinate operations without constant oversight from Earth.

Think about what that means. Three independent spacecraft, each with its own AI navigation system, working together around a rubble-pile asteroid barely 160 meters across, where the gravity is so weak you could jump off the surface into space. Juventas will deploy a subsurface radar to probe Dimorphos's internal structure, something never attempted at an asteroid before. Milani will study the dust and debris cloud created by DART's impact.

The AI advancement here is distributed autonomy. Hera's three spacecraft don't just navigate independently; they negotiate tasks, share observations, and back each other up if one system fails. In a separate effort, NASA's Distributed Spacecraft Autonomy program has identified five technical areas for this kind of coordination: distributed resource management, reactive operations, system modeling, human-swarm interaction, and ad hoc network communications.
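Hera's actual coordination logic isn't described here, but a common textbook way for robots to "negotiate tasks" is a market-based auction: each spacecraft bids its cost for each open task, and the cheapest bid wins. This sketch runs the auction in one place for clarity, whereas a distributed version would exchange bids over the inter-satellite links. The task names, cost numbers, and load penalty are hypothetical; the split loosely mirrors the roles in the text (Juventas radar, Milani dust).

```python
def auction_tasks(tasks, agents, cost):
    """Greedy sequential auction. In each round every agent bids its cost for every
    open task; the single lowest bid wins. Ties break alphabetically by agent, then task.

    cost(agent, task, load) -> float, where load = tasks the agent has already won.
    Returns {task: agent}.
    """
    assignment = {}
    load = {agent: 0 for agent in agents}
    remaining = list(tasks)
    while remaining:
        _, agent, task = min(
            (cost(a, t, load[a]), a, t) for a in agents for t in remaining
        )
        assignment[task] = agent
        load[agent] += 1
        remaining.remove(task)
    return assignment


if __name__ == "__main__":
    # Hypothetical costs (e.g., hours of instrument time); lower is better.
    base = {
        ("hera", "map_surface"): 1.0, ("milani", "map_surface"): 2.0,
        ("juventas", "map_surface"): 4.0, ("hera", "dust_survey"): 3.0,
        ("milani", "dust_survey"): 1.0, ("juventas", "dust_survey"): 4.0,
        ("hera", "radar_sounding"): 9.0, ("milani", "radar_sounding"): 9.0,
        ("juventas", "radar_sounding"): 1.0,
    }

    def cost(agent, task, load):
        # Each task already won adds 1.5 to an agent's bids, spreading the workload.
        return base[(agent, task)] + 1.5 * load

    plan = auction_tasks(["map_surface", "dust_survey", "radar_sounding"],
                         ["hera", "milani", "juventas"], cost)
    print(plan)
    # {'map_surface': 'hera', 'radar_sounding': 'juventas', 'dust_survey': 'milani'}
```

The load term is what keeps one capable spacecraft from hoarding every task; if one craft fails, rerunning the auction without it reassigns its work automatically.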

What's particularly clever is the digital twin approach. While Hera operates in deep space, a detailed digital replica runs on Earth, simulating what the real spacecraft might encounter. Scientists can test what-if scenarios without risking the actual mission. When they find solutions, those updates get beamed to the spacecraft to enhance its autonomous capabilities.

Hera represents a subtle but profound shift: from autonomous spacecraft to autonomous science teams. The mission isn't just collecting data automatically; it's deciding which data matters most, adapting experiments based on initial findings, and optimizing resource usage across multiple platforms.

Underneath the missions, there's a quiet revolution in space hardware. For decades, spacecraft computers were slow, radiation-hardened dinosaurs, reliable but painfully limited. Modern asteroid probes can carry processors powerful enough to run neural networks in real time while surviving radiation that can quickly corrupt or destroy ordinary consumer electronics.

Star trackers provide a perfect example of this evolution. These camera systems compare images of the surrounding star field to onboard star catalogs, giving spacecraft absolute three-axis attitude knowledge accurate to arc-seconds. Integrated guidance, navigation, and control units for small satellites now combine star trackers, inertial measurement units, reaction wheels, and magnetic torquers (which only work within a planet's magnetic field) in a single module about the size of a loaf of bread.
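One standard way to turn matched stars into an attitude is to solve Wahba's problem, finding the rotation that best maps catalog directions onto measured ones, here with the SVD method. This sketch assumes star identification (matching image spots to catalog entries) has already been done; the function name and test rotation are illustrative, not taken from any particular star tracker.

```python
import numpy as np


def attitude_from_stars(body_vecs, catalog_vecs, weights=None):
    """Solve Wahba's problem with the SVD method.

    Finds the rotation R that best satisfies body_i ~= R @ catalog_i for unit star
    vectors measured in the camera/body frame and the same stars' catalog
    (inertial) directions. Returns a 3x3 rotation matrix.
    """
    b = np.asarray(body_vecs, float)
    r = np.asarray(catalog_vecs, float)
    w = np.ones(len(b)) if weights is None else np.asarray(weights, float)
    B = (w[:, None] * b).T @ r                # attitude profile matrix: sum w_i b_i r_i^T
    U, _, Vt = np.linalg.svd(B)
    d = np.linalg.det(U) * np.linalg.det(Vt)  # force a proper rotation (det = +1)
    return U @ np.diag([1.0, 1.0, d]) @ Vt


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    stars = rng.normal(size=(5, 3))
    stars /= np.linalg.norm(stars, axis=1, keepdims=True)
    # Hypothetical true attitude: 0.7 rad about the unit axis (1, 2, 2) / 3.
    k = np.array([1.0, 2.0, 2.0]) / 3.0
    K = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    R_true = np.eye(3) + np.sin(0.7) * K + (1 - np.cos(0.7)) * K @ K
    measured = stars @ R_true.T  # each row is R_true @ star
    print(np.allclose(attitude_from_stars(measured, stars), R_true))  # True
```

The weights let a tracker trust bright, well-centroided stars more than faint ones; with noisy measurements the same code returns the best-fit rotation rather than an exact one.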

Timing matters more than most people realize. NASA's Deep Space Atomic Clock drifted by less than four nanoseconds over more than 20 days of operation. Since light travels about 30 centimeters (roughly one foot) per nanosecond, four nanoseconds of error corresponds to about 1.2 meters of distance. When you're rendezvousing with a tumbling asteroid, that kind of precision can decide whether your navigation solution holds up or drifts into guesswork.
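The conversion from clock error to distance is simply multiplication by the speed of light. A small sketch (the helper name is ours) shows the numbers behind a few nanoseconds of drift; the two_way flag reflects that in round-trip ranging the signal covers the path twice, so a given timing error produces half the range error:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
METERS_PER_FOOT = 0.3048


def timing_error_to_distance_m(error_s: float, two_way: bool = False) -> float:
    """Distance light travels during a clock error of error_s seconds.

    With two-way ranging the signal covers the path twice, so the range error halves.
    """
    distance = SPEED_OF_LIGHT_M_PER_S * error_s
    return distance / 2.0 if two_way else distance


if __name__ == "__main__":
    print(f"{timing_error_to_distance_m(4e-9):.2f} m")                     # 1.20 m
    print(f"{timing_error_to_distance_m(1e-9) / METERS_PER_FOOT:.2f} ft")  # 0.98 ft
```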

The AI software has gotten dramatically better too. Recent research on asteroid shape reconstruction uses deep neural networks to build 3D models from limited observations. One study reported that Hunyuan3D, a general-purpose image-to-3D model, achieved better geometric reconstruction accuracy on asteroid datasets than competing approaches, a capability that matters for safe landing site selection.

Reinforcement learning has also moved beyond the lab. The Naval Research Laboratory reported what it described as the first reinforcement-learning control of a robot in space, aboard the International Space Station, showing that a policy trained on the ground can operate real hardware in orbit.

Perhaps most impressive is how these systems handle uncertainty. Asteroid environments are inherently unpredictable: boulders that weren't visible from orbit, unexpected dust plumes, gravitational anomalies from uneven mass distribution. Modern autonomous systems incorporate explicit uncertainty quantification, making decisions that account for what they don't know.
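Here is a minimal sketch of one way to fold uncertainty into a decision, assuming the predicted touchdown error is a zero-mean, isotropic 2-D Gaussian: compute the probability of landing inside the safe zone and commit only if it clears a threshold. The 4-meter safe radius, the 99% threshold, and the function names are hypothetical; real systems work with full covariance matrices and hazard maps.

```python
import math


def prob_inside(sigma_m: float, radius_m: float) -> float:
    """Chance that a 2-D, zero-mean, isotropic Gaussian touchdown error with
    per-axis standard deviation sigma_m lands within radius_m (Rayleigh CDF)."""
    return 1.0 - math.exp(-radius_m**2 / (2.0 * sigma_m**2))


def go_no_go(sigma_m: float, safe_radius_m: float, required: float = 0.99) -> str:
    """Commit only if the estimated probability of staying in the safe zone is high enough."""
    return "GO" if prob_inside(sigma_m, safe_radius_m) >= required else "WAVE OFF"


if __name__ == "__main__":
    # Same aim point and safe radius; only the uncertainty differs.
    for sigma in (1.0, 2.0):
        print(sigma, round(prob_inside(sigma, 4.0), 4), go_no_go(sigma, 4.0))
    # 1.0 0.9997 GO
    # 2.0 0.8647 WAVE OFF
```

The aim point is identical in both cases; only the spacecraft's confidence in its own estimate changes the answer, which is what "accounting for what it doesn't know" means in practice.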

The safety architecture deserves special attention. These aren't cowboy AIs making reckless decisions. Autonomous spacecraft designs typically include supervisory safety controllers that can override learned policies if they detect fault conditions. Think of it as AI with a conscience, or at least a kill switch.
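One common pattern for this, often called a Simplex or runtime-assurance architecture, is sketched below. We assume the spacecraft can predict its closing speed after a proposed burn; the limits, the back-away fallback, and the function name are all hypothetical.

```python
def supervise(learned_thrust_n, predicted_speed_mps, fault_detected,
              max_thrust_n=5.0, max_speed_mps=0.1, backoff_thrust_n=-1.0):
    """Simplex-style runtime assurance (all limits are hypothetical).

    The learned policy's command passes through only if no fault is flagged and the
    command stays inside a pre-verified envelope; otherwise a simple, pre-verified
    fallback (a gentle back-away burn) takes over. Returns (thrust, source).
    """
    if fault_detected:
        return backoff_thrust_n, "fallback: fault"
    if abs(learned_thrust_n) > max_thrust_n or predicted_speed_mps > max_speed_mps:
        return backoff_thrust_n, "fallback: envelope"
    return learned_thrust_n, "learned"


if __name__ == "__main__":
    print(supervise(2.0, 0.05, fault_detected=False))  # (2.0, 'learned')
    print(supervise(8.0, 0.05, fault_detected=False))  # (-1.0, 'fallback: envelope')
    print(supervise(2.0, 0.05, fault_detected=True))   # (-1.0, 'fallback: fault')
```

The design choice is that only the small supervisor and its fallback need exhaustive verification; the learned policy can be as complex as it likes, because it can never act outside the envelope.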

The economic argument for autonomous spacecraft is simple math with revolutionary implications. Human-in-the-loop operations require large ground teams working around the clock. NASA's Curiosity rover on Mars, operating with one-way communication delays of roughly 3 to 22 minutes, relies on hundreds of people to plan its activities. The more routine decisions a spacecraft makes on its own, as OSIRIS-REx did during its descent to Bennu, the smaller the team needed to run it.

But the real promise isn't saving money on Earth; it's going places we couldn't reach otherwise. The outer solar system presents communication delays measured in hours. Uranus is roughly 1.6 to 2 billion miles from Earth, and radio signals need about 2.4 to 2.9 hours to cover that distance one way. Operating a spacecraft there with human oversight would be like playing chess by mail. Autonomy isn't a convenience at those distances; it's a requirement.

Commercial ventures are betting heavily on this capability. Karman+ raised $20 million in seed funding to develop autonomous asteroid mining technology, targeting a 2027 demonstration mission to extract water from near-Earth asteroids. The business model only works if spacecraft can prospect, navigate, and mine with minimal human intervention; otherwise, operations costs would erase any profit.

The scientific payoff could be extraordinary. Asteroid mining aside, autonomous spacecraft enable entirely new mission architectures. Instead of one expensive flagship mission to a single target, space agencies could deploy swarms of smaller, autonomous probes that explore dozens of asteroids simultaneously, sharing data and resources through inter-satellite links.

The timeline is accelerating. ESA's RAMSES mission is planned for launch in spring 2028 to rendezvous with asteroid Apophis before its close approach to Earth in April 2029 and study it through the encounter, a mission that requires precise autonomous navigation around an asteroid during a rare gravitational interaction with our planet.

There's a multiplier effect here. Every successful autonomous mission generates data that can train better algorithms for the next generation. OSIRIS-REx's navigation logs can help improve AI systems for future asteroid sample-return missions, and Hera's distributed autonomy experiments could inform designs for multi-spacecraft missions to the moons of Jupiter and Saturn.

But let's be clear about what we're trusting to algorithms. Space missions cost hundreds of millions or billions of dollars. They carry irreplaceable scientific instruments. Once launched, there's no calling them back to the shop for repairs. When an AI makes a bad decision, the consequences are permanent.

OSIRIS-REx almost learned this the hard way. When the spacecraft arrived at Bennu, its images revealed a surface far rougher than expected, so boulder-strewn that no site came close to the large, hazard-free zone the mission had been designed around. The team settled on Nightingale, a much smaller and more challenging site, and gave the spacecraft an onboard hazard map so it could decide for itself to wave off the touchdown if it was heading for dangerous terrain. It worked, but it was a reminder that autonomous systems sometimes face conditions their designers never imagined.

Then there's the transparency problem. Modern deep learning networks are notoriously opaque. When a neural network decides to abort a landing approach, can we trust that decision? More importantly, can we understand why it made that choice? In safety-critical applications, explainability isn't a nice-to-have feature; it's essential for validating the system's reasoning.

The failure modes are genuinely scary. A reinforcement learning algorithm trained in simulation might encounter edge cases in real asteroid environments that break its learned policies. Computer vision systems could be fooled by unexpected lighting conditions or surface properties. Distributed autonomous systems might develop emergent behaviors: swarm dynamics that weren't predicted in testing.

There's also the question of what we lose when we stop sending humans into the decision loop. Mission controllers develop intuitions about spacecraft behavior that algorithms can't replicate. When Hayabusa's systems failed, human operators improvised recovery procedures that no autonomous system would have attempted: risky, creative solutions born of desperation and ingenuity.

The broader societal question is thornier: if AI can run spacecraft, what else can it run? The same autonomous navigation algorithms being developed for asteroids have obvious applications in military drones, autonomous weapons, and surveillance systems. The technology is fundamentally dual-use.

Look ahead ten years. The asteroid belt between Mars and Jupiter contains somewhere between 1.1 and 1.9 million asteroids larger than a kilometer across, and millions more smaller bodies. If current trends continue, we could have dozens of autonomous spacecraft operating simultaneously in this region: some on scientific missions studying pristine material from the solar system's formation, others commercial ventures prospecting for water and rare minerals.

These spacecraft won't phone home for instructions. They'll form autonomous networks, sharing observations and coordinating operations without human oversight. When one probe discovers an unusual asteroid composition, it might autonomously redirect nearby spacecraft to investigate, optimizing the collective scientific return of the entire fleet.

The AI will get better fast. Current autonomous systems are impressive but narrow: they excel at specific tasks like navigation or hazard detection but lack general intelligence. The next generation will integrate multiple AI capabilities into unified decision-making frameworks that can handle unprecedented situations with minimal pre-programming.

We might see spacecraft that redesign their own mission plans mid-flight based on early discoveries. Imagine an asteroid mission that autonomously decides to extend its stay because preliminary samples revealed unexpected organic compounds, or that chooses to visit a second target because it detected an anomaly during a flyby. These aren't far-fetched scenarios; they're logical extensions of existing autonomous capabilities.

The timeline for deep-space exploration will collapse. Instead of decade-long gaps between flagship missions, we could see continuous waves of smaller autonomous probes launching whenever orbital mechanics align, each building on data from its predecessors. The iterative cycle of design-launch-operate-learn could accelerate from decades to years.

Commercial operations will almost certainly lead this transformation. Private companies have stronger incentives to minimize operations costs, and they're less constrained by the conservative risk posture that governs government space agencies. When asteroid mining becomes economically viable (and that increasingly looks possible within the next two decades), it will be because AI made operations cheap enough to justify the upfront investment.

But the most profound shift might be psychological. For more than sixty years, space exploration has meant humans controlling machines at a distance. The next era will be about humans setting goals and machines figuring out how to achieve them. We're transitioning from commanders to stakeholders, from operators to observers.

The asteroid belt isn't a destination; it's a proving ground. Everything we learn about autonomous navigation in these environments scales to harder problems: autonomous submarines exploring oceans beneath the ice of Europa, autonomous aerial vehicles flying through the toxic atmosphere of Venus, autonomous rovers descending into Martian lava tubes where direct communication with Earth is impossible.

We're developing the technology stack that will define space exploration for the next century. Machine learning algorithms that navigate by starlight. Computer vision systems that see in wavelengths human eyes can't detect. Distributed AI architectures that coordinate robotic teams across millions of miles without central control.

The question isn't whether AI will run space missions—it already does. The question is how far we're willing to let it go, and whether we're comfortable with the answers it might find without us.

When Voyager 1 crossed into interstellar space in 2012, it was still receiving commands from Earth, still tethered to human decision-making despite being more than 11 billion miles away. The spacecraft we launch today won't need that tether. They'll navigate on their own judgment, make their own choices, and send back discoveries we never thought to look for.

Perhaps that's the real breakthrough. We're not just building smarter spacecraft—we're building scientific partners that can explore places we'll never visit, answer questions we haven't asked yet, and expand human knowledge in ways we can't predict. The asteroid belt is calling, and this time we're sending machines smart enough to answer.

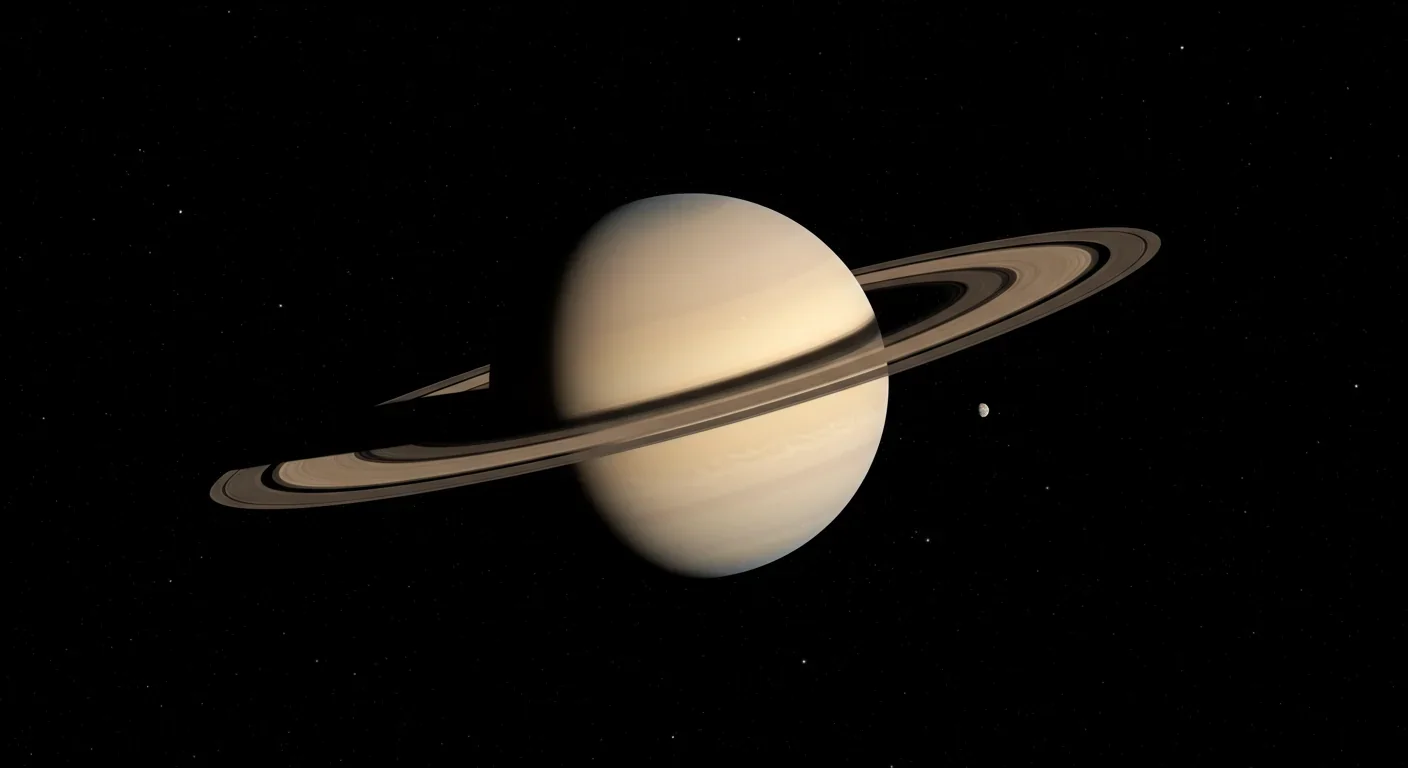

Saturn's iconic rings are temporary, likely formed within the past 100 million years and will vanish in 100-300 million years. NASA's Cassini mission revealed their hidden complexity, ongoing dynamics, and the mysteries that still puzzle scientists.

Scientists are revolutionizing gut health by identifying 'keystone' bacteria—crucial microbes that hold entire microbial ecosystems together. By engineering and reintroducing these missing bacterial linchpins, researchers can transform dysfunctional microbiomes into healthy ones, opening new treatments for diseases from IBS to depression.

Marine permaculture—cultivating kelp forests using wave-powered pumps and floating platforms—could sequester carbon 20 times faster than terrestrial forests while creating millions of jobs, feeding coastal communities, and restoring ocean ecosystems. Despite kelp's $500 billion in annual ecosystem services, fewer than 2% of global kelp forests have high-level protection, and over half have vanished in 50 years. Real-world projects in Japan, Chile, the U.S., and Europe demonstrate economic via...

Our attraction to impractical partners stems from evolutionary signals, attachment patterns formed in childhood, and modern status pressures. Understanding these forces helps us make conscious choices aligned with long-term happiness rather than hardwired instincts.

Crows and other corvids bring gifts to humans who feed them, revealing sophisticated social intelligence comparable to primates. This reciprocal exchange behavior demonstrates theory of mind, facial recognition, and long-term memory.

Cryptocurrency has become a revolutionary tool empowering dissidents in authoritarian states to bypass financial surveillance and asset freezes, while simultaneously enabling sanctioned regimes to evade international pressure through parallel financial systems.

Blockchain-based social networks like Bluesky, Mastodon, and Lens Protocol are growing rapidly, offering user data ownership and censorship resistance. While they won't immediately replace Facebook or Twitter, their 51% annual growth rate and new economic models could force Big Tech to fundamentally change how social media works.